What is GGML?

ggml (ggml.ai, "AI at the edge") is a tensor library for machine learning that makes large models and high performance practical on everyday hardware. It supports 16-bit floats, integer quantization, and automatic differentiation, and it is optimized for Apple Silicon. For on-device inference, it makes zero memory allocations during runtime and supports guided language output.
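To make the 16-bit float and integer quantization claims concrete, here is a minimal Python sketch of how reduced-precision storage shrinks a weight array. It is an illustration of the general technique only, not ggml's actual formats or API: the block size of 32, the one-scale-per-block layout, and all function names are assumptions chosen for the example.

```python
import struct

BLOCK_SIZE = 32  # hypothetical block size, chosen only for this illustration


def to_fp16_bytes(values):
    """Pack floats as IEEE 754 half precision (2 bytes per value)."""
    return struct.pack(f"<{len(values)}e", *values)


def from_fp16_bytes(data):
    """Unpack half-precision bytes back into Python floats."""
    return list(struct.unpack(f"<{len(data) // 2}e", data))


def quantize_block_int8(block):
    """Symmetric 8-bit quantization: one float scale plus one small integer per value."""
    max_abs = max(abs(v) for v in block)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quants = [max(-127, min(127, round(v / scale))) for v in block]
    return scale, quants


def dequantize_block_int8(scale, quants):
    """Recover approximate floats from the scale and the integers."""
    return [q * scale for q in quants]


# Deterministic stand-in for model weights, all within [-1, 1].
weights = [((i * 37) % 101 - 50) / 50.0 for i in range(64)]

# Storage cost at each precision.
fp32_bytes = len(weights) * 4
fp16_packed = to_fp16_bytes(weights)
fp16_bytes = len(fp16_packed)

blocks = [weights[i:i + BLOCK_SIZE] for i in range(0, len(weights), BLOCK_SIZE)]
packed = [quantize_block_int8(b) for b in blocks]
# One byte per quantized value plus a 4-byte scale per block.
int8_bytes = sum(len(q) + 4 for _, q in packed)

# Round-trip error introduced by each format.
fp16_err = max(abs(a - b) for a, b in zip(weights, from_fp16_bytes(fp16_packed)))
restored = [v for s, q in packed for v in dequantize_block_int8(s, q)]
max_err = max(abs(a - b) for a, b in zip(weights, restored))

print(fp32_bytes, fp16_bytes, int8_bytes)  # 256 128 72
print(fp16_err, max_err)
```

The trade-off is the usual one: fewer bytes per weight in exchange for a bounded rounding error, which here is at most half of each block's scale for the 8-bit case.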

Key Features:

🧠 Tensor Library for Machine Learning: ggml is written in C and runs large models on commodity hardware, so it can be embedded directly in other projects.

🛠️ Optimized Performance: 16-bit float support and integer quantization reduce memory use and speed up computation. ggml also provides automatic differentiation, with built-in ADAM and L-BFGS optimizers.

🌐 Versatile Compatibility: ggml is optimized for Apple Silicon, supports WebAssembly, and has no third-party dependencies, which keeps it straightforward to build across architectures.

🚀 High Efficiency: ggml makes zero memory allocations during runtime, which keeps memory use predictable, and it supports guided language output.
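The "zero memory allocations during runtime" feature is easiest to picture as an arena: one buffer is reserved up front and every tensor is carved out of it. The sketch below shows that general pattern in Python. The `Arena` class, its method names, and the 16-byte alignment are hypothetical and do not reproduce ggml's own memory management. Python still creates small view objects on each call, so this only models where the tensor storage comes from.

```python
class Arena:
    """Fixed-size buffer handed out in slices; it never grows after creation."""

    def __init__(self, size_bytes):
        self._buf = bytearray(size_bytes)  # the single up-front allocation
        self._view = memoryview(self._buf)
        self._offset = 0

    def alloc(self, n_bytes, align=16):
        """Return a writable view of n_bytes taken from the arena."""
        start = (self._offset + align - 1) // align * align  # round up to alignment
        end = start + n_bytes
        if end > len(self._buf):
            raise MemoryError("arena exhausted; size it for the whole computation")
        self._offset = end
        return self._view[start:end]

    def used(self):
        """Bytes consumed so far, including alignment padding."""
        return self._offset

    def reset(self):
        """Make the whole buffer available again without freeing it."""
        self._offset = 0


arena = Arena(1024)

# Two 8-element float32 "tensors" backed by the same buffer.
x = arena.alloc(4 * 8).cast("f")
y = arena.alloc(4 * 8).cast("f")

for i in range(8):
    x[i] = float(i)
    y[i] = x[i] * 2.0  # the result is written into preallocated storage

print(arena.used())  # 64
print(list(y))
```

Because the buffer size is fixed at startup, running out of space surfaces as an immediate error instead of unpredictable growth, and `reset()` lets the same memory be reused for the next computation.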

Use Cases:

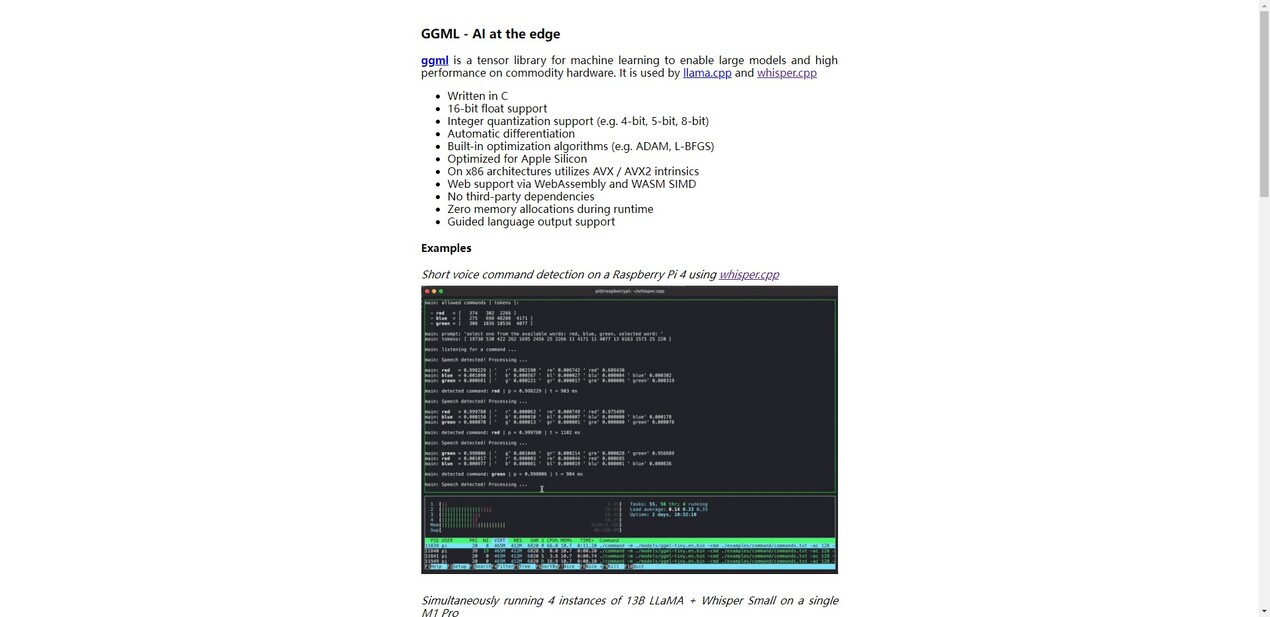

Edge-Based Voice Command Detection: Detect short voice commands on small devices such as the Raspberry Pi 4.

Multi-Instance Inference: Run multiple instances of large language models such as LLaMA at the same time on an Apple M1 Pro.

Real-time Language Processing: Generate tokens rapidly with large language models on recent hardware such as the M2 Max.

Conclusion:

ggml.ai brings on-device inference to everyday hardware, letting developers run machine learning models locally instead of relying on remote servers. Its efficiency and flexibility make it a practical foundation for on-device AI development.

GGML Alternatives

- Explore Local AI Playground, a free app for offline AI experimentation. Features include CPU inferencing, model management, and more.

- Gemma 3n brings powerful multimodal AI to the edge. Run image, audio, video, & text AI on devices with limited memory.

- GLM-4.5V: Empower your AI with advanced vision. Generate web code from screenshots, automate GUIs, & analyze documents & video with deep reasoning.

- Gemma 3 270M: Compact, hyper-efficient AI for specialized tasks. Fine-tune for precise instruction following & low-cost, on-device deployment.

- Gemma 2 offers best-in-class performance, runs at incredible speed across different hardware and easily integrates with other AI tools, with significant safety advancements built in.