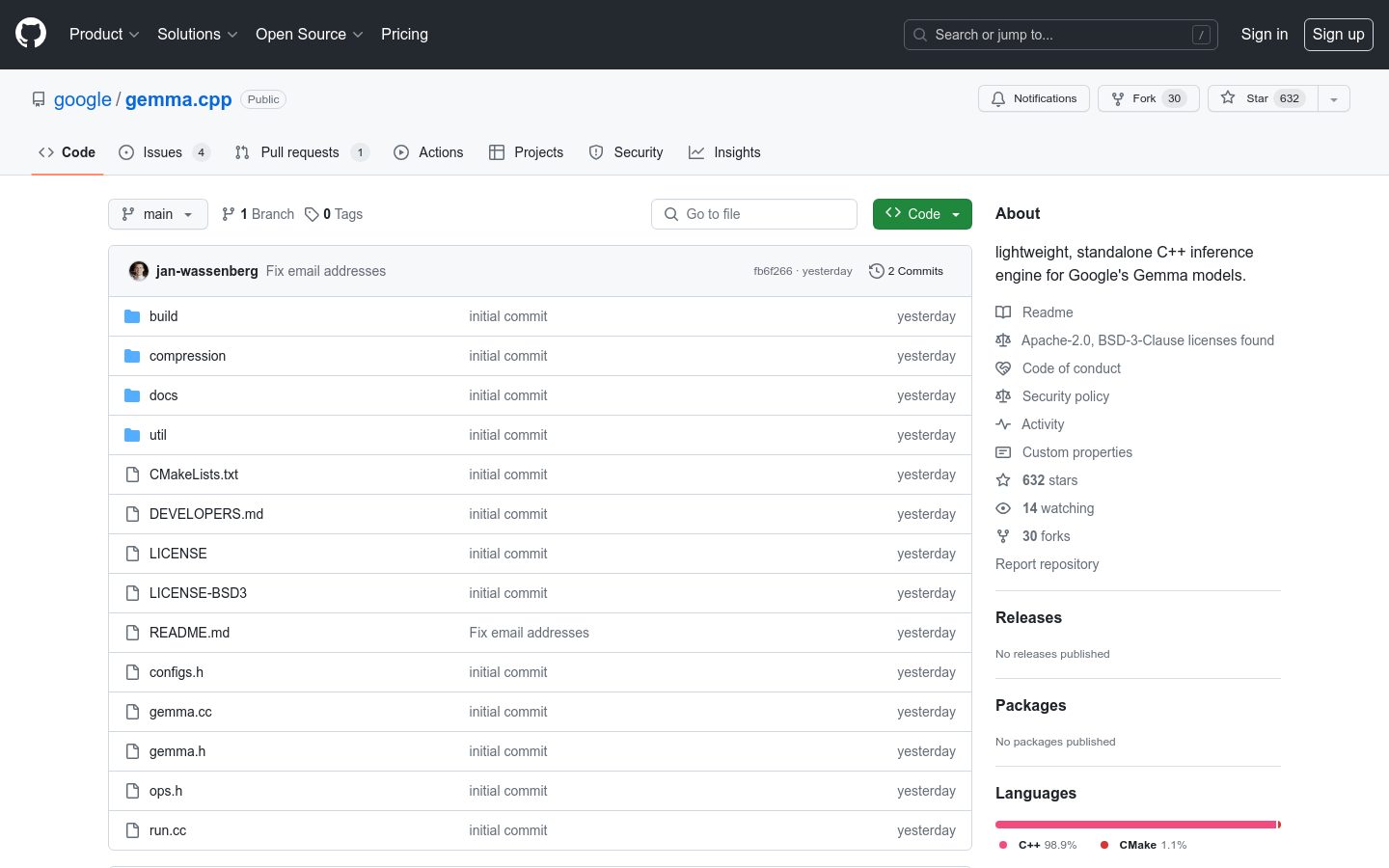

What is gemma.cpp?

gemma.cpp is a lightweight, standalone C++ inference engine for the Gemma foundation models from Google. It implements the Gemma 2B and 7B models with an emphasis on simplicity and directness. Because it has minimal dependencies and is portable, researchers and developers can experiment with Gemma models and embed them in their own projects with little setup. It targets experimentation and research use cases; for production-oriented edge deployments, Python frameworks like JAX, Keras, PyTorch, and Transformers are the recommended path.

Key Features:

🔧 Minimalist Implementation: gemma.cpp implements the Gemma 2B and 7B models directly, favoring simplicity and directness over full generality.

🧪 Experimentation and Research: It is designed for experimentation and research use cases, allowing users to easily embed it in other projects with minimal dependencies.

🚀 Portable SIMD: gemma.cpp uses the Google Highway library for portable SIMD, so the same CPU inference code can use the vector instructions available on each target.
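To give a feel for what a portable SIMD kernel looks like, here is a minimal sketch of a dot product written in the usual "vector body plus scalar tail" shape. It is plain standard C++ and does not use Highway or any gemma.cpp code; the names `kLanes` and `DotChunked` are hypothetical, and the fixed lane count stands in for the vector width that a portable SIMD library would select per target CPU.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a SIMD vector width. A portable SIMD library
// would choose this per target CPU instead of hard-coding it.
constexpr std::size_t kLanes = 8;

// Dot product in "vector body + scalar tail" form.
// Illustration only: this is not gemma.cpp or Highway code.
float DotChunked(const std::vector<float>& a, const std::vector<float>& b) {
  assert(a.size() == b.size());
  const std::size_t n = a.size();

  // One independent accumulator per lane, zero-initialized.
  std::array<float, kLanes> acc{};

  std::size_t i = 0;
  // Main loop: kLanes independent multiply-adds per iteration. A SIMD
  // library would express this inner loop as a single vector operation.
  for (; i + kLanes <= n; i += kLanes) {
    for (std::size_t lane = 0; lane < kLanes; ++lane) {
      acc[lane] += a[i + lane] * b[i + lane];
    }
  }

  // Horizontal reduction: add the per-lane partial sums together.
  float sum = 0.0f;
  for (float v : acc) sum += v;

  // Scalar tail: handle the elements that did not fill a whole chunk.
  for (; i < n; ++i) sum += a[i] * b[i];
  return sum;
}
```

Calling `DotChunked(x, y)` on two equal-length vectors returns their dot product. The point of the structure is that only the lane count and the inner loop depend on the hardware, which is the part a portable SIMD library abstracts away.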

Use Cases:

Research and Experimentation: gemma.cpp is ideal for researchers and developers who want to experiment with Gemma models and explore new algorithms. It provides a simple and straightforward platform for testing and modifying Gemma models with minimal dependencies.

Model Embedding: gemma.cpp can be embedded in other projects, so developers can add Gemma models to their own applications and systems without extensive modifications or additional dependencies.

Portable Inference: gemma.cpp runs inference on the CPU using portable SIMD, so the same code can run efficiently across different systems and architectures. This makes it a convenient way to try Gemma models on resource-constrained machines, although production-oriented edge deployments are better served by the Python frameworks.

Conclusion:

gemma.cpp is a lightweight, standalone C++ inference engine for the Gemma foundation models from Google. Its minimalist implementation makes it a good fit for researchers and developers who want to experiment with Gemma models or embed them in their own projects, with minimal dependencies and portable SIMD for efficient CPU inference. Because gemma.cpp targets experimentation and research, Python frameworks remain the recommended choice for production-oriented edge deployments. Visit ai.google.dev/gemma for more information.