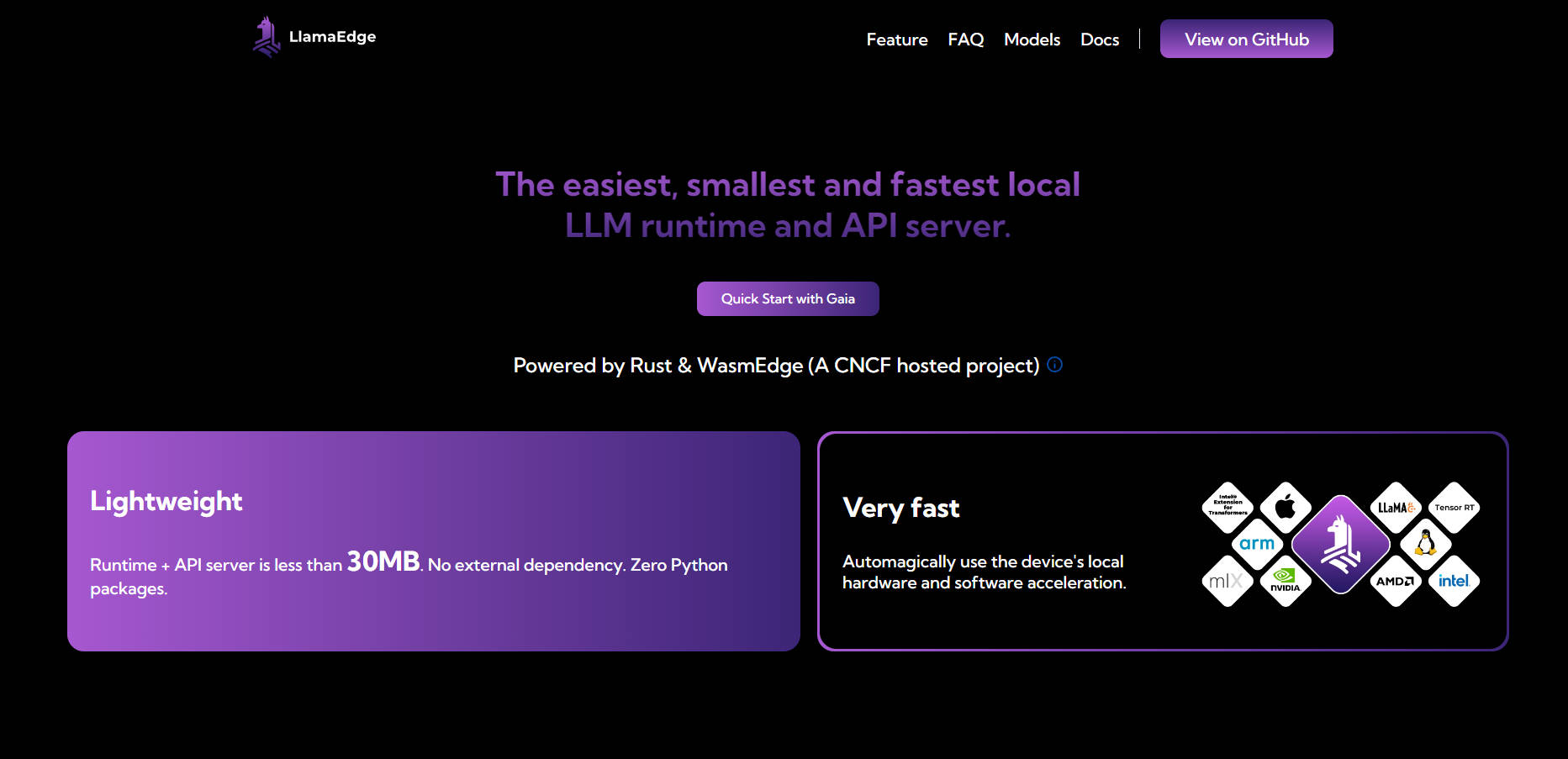

What is LlamaEdge?

Imagine having the power to run and fine-tune large language models (LLMs) directly on your device—without the cloud, without the complexity, and without compromising on performance. That’s exactly what LlamaEdge delivers. Whether you’re a developer building AI-powered applications or a business looking to deploy private, customized LLMs, LlamaEdge is the lightweight, fast, and portable solution you’ve been waiting for.

With a runtime that’s less than 30MB and zero dependencies, LlamaEdge is designed to simplify the process of running LLMs locally or on the edge. It’s built to take full advantage of your device’s hardware, ensuring native speeds and seamless cross-platform deployment.

Key Features

💡 Run LLMs Locally or on the Edge

Deploy and fine-tune LLMs directly on your device, ensuring data privacy and eliminating reliance on costly cloud services.

🌐 Cross-Platform Compatibility

Write your code once and deploy it anywhere—whether it’s on a MacBook, an NVIDIA GPU, or an edge device. No need to rebuild or retest for different platforms.

⚡ Lightweight and Fast

With a runtime under 30MB and no external dependencies, LlamaEdge is incredibly lightweight. It automatically leverages your device’s hardware acceleration for optimal performance.

🛠️ Modular Design for Customization

Assemble your LLM agents and applications like Lego blocks using Rust or JavaScript. Create compact, self-contained binaries that run seamlessly across devices.

🔒 Enhanced Privacy and Security

Keep your data local and secure. LlamaEdge runs in a sandboxed environment, requires no root permissions, and ensures your interactions remain private.

Use Cases

Building Private AI Assistants

Create AI-powered chatbots or virtual assistants that run entirely on your device, safeguarding sensitive data while delivering fast, responsive interactions.

Developing Customized LLM Apps

Fine-tune LLMs for specific industries or use cases, such as legal document analysis, customer support, or healthcare diagnostics, without the need for cloud-based solutions.

Deploying AI on Edge Devices

Bring AI capabilities to edge devices like IoT sensors or mobile apps, enabling real-time decision-making without network round-trip latency or dependence on connectivity.

Why Choose LlamaEdge?

Cost-Effective: Avoid the high costs of hosted LLM APIs and the complexity of managing cloud infrastructure.

Customizable: Tailor LLMs to your specific needs without the limitations of general-purpose models.

Portable: Deploy your applications across different platforms and devices with a single binary file.

Future-Proof: Stay ahead with support for multimodal models, alternative runtimes, and emerging AI technologies.

FAQ

Q: How does LlamaEdge compare to Python-based solutions?

A: Python-based solutions like PyTorch come with large dependencies and are slower for production-level inference. LlamaEdge, on the other hand, is lightweight (under 30MB), faster, and free from dependency conflicts.

Q: Is LlamaEdge compatible with GPUs and hardware accelerators?

A: Absolutely. LlamaEdge automatically leverages your device’s hardware acceleration, ensuring native speeds on CPUs, GPUs, and NPUs.

Q: Can I use LlamaEdge with existing open-source models?

A: Yes. LlamaEdge supports a wide range of AI models and LLMs, including the entire Llama 2 series, and allows you to fine-tune them for your specific needs.

Q: What makes LlamaEdge more secure than other solutions?

A: LlamaEdge runs in a sandboxed environment, requires no root permissions, and ensures your data never leaves your device, making it a more secure choice for sensitive applications.

Ready to Get Started?

With LlamaEdge, running and fine-tuning LLMs locally has never been easier. Whether you’re building AI-powered applications or deploying models on edge devices, LlamaEdge empowers you to do more—with less. Install it today and experience the future of local LLM deployment.
