What is Cortex?

Cortex is an AI engine that gives developers an OpenAI-compatible interface for building applications on top of Large Language Models (LLMs). Its Docker-like CLI and TypeScript libraries streamline local AI development by abstracting away hardware and engine details. Because it supports the Llama.cpp, ONNX Runtime, and TensorRT-LLM engines, Cortex can run models efficiently on a wide range of devices, from IoT hardware to servers. Cortex can also pull models from various registries and supports multiple databases for data management.

Key Features:

OpenAI-Equivalent API: Cortex provides an API that mirrors OpenAI's, so applications can move to self-hosted, open-source alternatives without extensive code rewrites.

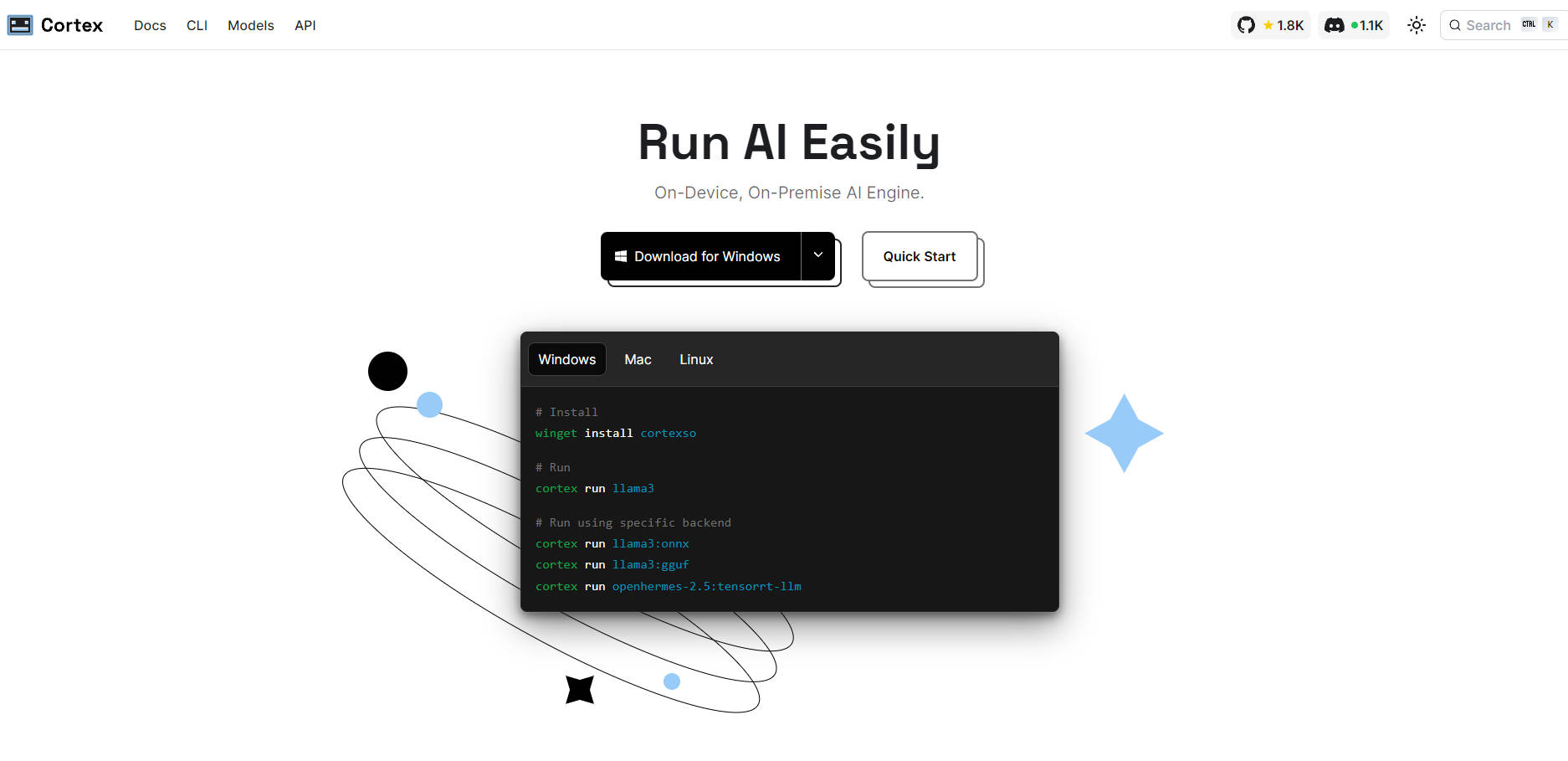
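Because the API mirrors OpenAI's, a client written for OpenAI's chat completions endpoint should, in principle, only need a different base URL to talk to Cortex. The minimal sketch below builds (but does not send) such a request. The local address `http://localhost:39281`, the model name `llama3.1`, and the helper `buildChatRequest` are assumptions for illustration, not confirmed Cortex defaults.

```typescript
// Minimal sketch: build an OpenAI-style chat completion request.
// Assumption: Cortex exposes an OpenAI-compatible /v1/chat/completions route;
// the local base URL and model name used below are placeholders.

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

interface ChatRequest {
  url: string;
  init: {
    method: "POST";
    headers: Record<string, string>;
    body: string;
  };
}

// Hypothetical helper: only the base URL (and optional key) differ between providers.
function buildChatRequest(
  baseUrl: string,
  model: string,
  messages: ChatMessage[],
  apiKey?: string,
): ChatRequest {
  const headers: Record<string, string> = { "Content-Type": "application/json" };
  // Hosted OpenAI needs a bearer token; a local server may not.
  if (apiKey) {
    headers["Authorization"] = `Bearer ${apiKey}`;
  }
  // Strip trailing slashes so the path joins cleanly.
  const root = baseUrl.replace(/\/+$/, "");
  return {
    url: `${root}/v1/chat/completions`,
    init: {
      method: "POST",
      headers,
      body: JSON.stringify({ model, messages }),
    },
  };
}

const messages: ChatMessage[] = [{ role: "user", content: "Hello!" }];
const openai = buildChatRequest("https://api.openai.com", "gpt-4o-mini", messages, "sk-...");
const local = buildChatRequest("http://localhost:39281/", "llama3.1", messages);

console.log(openai.url); // https://api.openai.com/v1/chat/completions
console.log(local.url);  // http://localhost:39281/v1/chat/completions
```

To actually send a request, pass the two pieces to the built-in `fetch` (Node.js 18+ or a browser), for example `const res = await fetch(local.url, local.init);`. Keeping request construction separate from sending makes the switch between providers a one-argument change and keeps the logic easy to test.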

Multi-Engine Support: Developers can run models on the Llama.cpp, ONNX Runtime, or TensorRT-LLM engine, choosing whichever is best optimized for their hardware.
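As a rough illustration of matching an engine to hardware, the sketch below encodes one plausible heuristic. It is hypothetical: the engine identifiers, the `HardwareInfo` shape, and the 8 GB VRAM threshold are assumptions chosen for the example, not Cortex's actual selection logic.

```typescript
// Hypothetical engine-selection heuristic; not Cortex's real logic.
// Engine identifiers and the VRAM threshold are illustrative assumptions.

type Engine = "llama-cpp" | "onnxruntime" | "tensorrt-llm";

interface HardwareInfo {
  os: "linux" | "windows" | "macos";
  gpuVendor?: "nvidia" | "amd" | "intel" | "apple";
  vramGb?: number;
}

function chooseEngine(hw: HardwareInfo): Engine {
  // TensorRT-LLM targets NVIDIA GPUs; require some (arbitrary) VRAM headroom.
  if (hw.gpuVendor === "nvidia" && (hw.vramGb ?? 0) >= 8 && hw.os !== "macos") {
    return "tensorrt-llm";
  }
  // ONNX Runtime (e.g. via its DirectML provider) suits non-NVIDIA GPUs on Windows.
  if (hw.os === "windows" && hw.gpuVendor !== undefined && hw.gpuVendor !== "nvidia") {
    return "onnxruntime";
  }
  // llama.cpp runs on CPUs and many GPUs, so it serves as the fallback.
  return "llama-cpp";
}

console.log(chooseEngine({ os: "linux", gpuVendor: "nvidia", vramGb: 24 })); // tensorrt-llm
console.log(chooseEngine({ os: "windows", gpuVendor: "amd" }));             // onnxruntime
console.log(chooseEngine({ os: "macos", gpuVendor: "apple" }));             // llama-cpp
```

Falling back to the most portable engine keeps the function total: every hardware description yields some engine, and more specialized engines are chosen only when their preconditions clearly hold.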

Docker-Inspired CLI & Libraries: The CLI and TypeScript libraries make it easy to deploy models and build applications, simplifying work with complex AI systems.

Flexible Model Management: Cortex can pull models from any registry, which broadens compatibility and makes it straightforward to integrate pre-trained models.
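Pulling from "any registry" implies some way to name a model together with its source and version. The sketch below parses a Docker-style reference of the form `registry/repository:tag`. The format, the default registry `huggingface.co`, the default tag `latest`, and the `parseModelRef` helper are all assumptions for illustration; Cortex's own reference syntax may differ.

```typescript
// Hypothetical parser for Docker-style model references.
// The reference format and defaults are assumptions, not Cortex's documented syntax.

interface ModelRef {
  registry: string;
  repository: string;
  tag: string;
}

const DEFAULT_REGISTRY = "huggingface.co"; // assumed default
const DEFAULT_TAG = "latest";              // assumed default

function parseModelRef(ref: string): ModelRef {
  const trimmed = ref.trim();
  if (trimmed === "") throw new Error("empty model reference");

  // Only a ':' after the last '/' marks a tag, so "host:port/..." keeps its port.
  const lastSlash = trimmed.lastIndexOf("/");
  const colon = trimmed.indexOf(":", lastSlash + 1);
  const name = colon === -1 ? trimmed : trimmed.slice(0, colon);
  const tag = colon === -1 ? DEFAULT_TAG : trimmed.slice(colon + 1);

  // As in Docker, the first segment is a registry host only if it looks like one.
  const parts = name.split("/");
  const first = parts[0];
  const looksLikeHost =
    parts.length > 1 && (first.includes(".") || first.includes(":") || first === "localhost");
  const registry = looksLikeHost ? first : DEFAULT_REGISTRY;
  const repository = looksLikeHost ? parts.slice(1).join("/") : name;

  if (repository === "" || tag === "") throw new Error(`invalid model reference: ${ref}`);
  return { registry, repository, tag };
}

const ref = parseModelRef("localhost:5000/team/model:q4");
console.log(ref.registry, ref.repository, ref.tag); // localhost:5000 team/model q4
console.log(parseModelRef("bartowski/Llama-3.2-1B-Instruct-GGUF").tag); // latest
```

Treating the first path segment as a host only when it contains a dot, a colon, or is `localhost` lets short names such as `org/model` fall back to the default registry while fully qualified references still work.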

Scalable Data Management: With support for MySQL and SQLite, Cortex can serve both large-scale deployments and simpler applications, handling data storage efficiently in each case.

Use Cases:

IoT Device Integration: Cortex's lightweight design brings AI capabilities to IoT devices, enabling on-device processing without depending on the cloud.

Custom Model Deployment on Servers: Enterprises can host their models locally for privacy and speed, using Cortex's multi-engine support to optimize performance.

Edge Computing Solutions: Deploying Cortex on edge devices brings AI capabilities closer to the data source, reducing latency and improving response times in real-world applications.

Conclusion:

Cortex offers a comprehensive, user-friendly solution for building, deploying, and running AI applications. Its features simplify AI integration and let developers use cutting-edge models across diverse platforms. Explore Cortex today and harness the full potential of AI in your projects!

FAQs:

What is Cortex's main advantage over OpenAI?

Cortex provides a self-hosted, open-source alternative with equivalent API functionality, giving you greater control over your data, room to optimize performance, and lower costs.

Can I use Cortex for developing applications on edge devices?

Absolutely! Cortex's support for IoT devices and single-board computers (SBCs), combined with its multi-engine compatibility, makes it an ideal choice for edge AI applications.

How does Cortex handle model management?

Cortex simplifies model management by letting you pull models from any registry, which makes it flexible and easy to integrate pre-trained models.