What is DeepSeek-R1?

The DeepSeek-R1 series is a set of reasoning models designed to advance AI research and development. These models, including DeepSeek-R1-Zero and DeepSeek-R1, excel in math, code, and reasoning tasks, achieving performance comparable to, and in some cases exceeding, that of leading models like OpenAI-o1.

Key Features:

DeepSeek-R1-Zero and DeepSeek-R1 differ mainly in how they were trained, and each training approach contributes its own advance in AI reasoning.

Leverage Reinforcement Learning (RL): DeepSeek-R1-Zero was trained exclusively with RL, bypassing the traditional supervised fine-tuning (SFT) step. It shows how RL alone can foster sophisticated reasoning behaviors, with emergent capabilities such as self-verification, reflection, and the generation of long chains of thought (CoT).

Incorporate Cold-Start Data: Unlike DeepSeek-R1-Zero, DeepSeek-R1 incorporates cold-start data before RL training. This avoids issues seen in DeepSeek-R1-Zero, such as endless repetition and poor readability, and also improves overall reasoning performance.

Employ Distillation for Smaller, Efficient Models: DeepSeek-R1's reasoning capabilities are distilled into smaller, more accessible models. These distilled models outperform models of the same small scale trained directly with RL, so you can use them in a range of applications without sacrificing performance.

Utilize Open-Source Models: The release includes open-source versions of DeepSeek-R1-Zero, DeepSeek-R1, and six distilled models based on Llama and Qwen. You can integrate these models into your projects to add advanced reasoning capabilities and contribute to the research community. Notably, DeepSeek-R1-Distill-Qwen-32B outperforms OpenAI-o1-mini across multiple benchmarks, setting a new standard for dense models.

Use Cases:

Researchers and developers can use DeepSeek-R1 models in several ways:

Academic Research: If you're a researcher, DeepSeek-R1-Zero provides a unique opportunity to study the impact of pure RL on model training. You can explore how complex reasoning behaviors emerge without SFT, potentially uncovering new training methodologies. DeepSeek-R1, in turn, lets you study the impact of cold-start data on model training.

Model Development: As a developer, you can integrate DeepSeek-R1 or its distilled versions into your applications. For instance, using DeepSeek-R1-Distill-Qwen-32B, you can build advanced code generation tools that offer superior performance compared to existing solutions. DeepSeek-R1 is also available as an API service that outputs the thought chain; select it by setting model='deepseek-reasoner'.

Benchmarking and Evaluation: Use the comprehensive evaluation results provided to benchmark your models against DeepSeek-R1. For example, if you're working on improving mathematical reasoning, you can compare your model's performance on the AIME 2024 benchmark, where DeepSeek-R1 achieved a 79.8% pass rate.
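To make the benchmarking comparison concrete, here is a minimal sketch of one common way to estimate a pass rate: sample several answers per problem, average the fraction that are correct, and compare against the 79.8% figure quoted above. The function name `pass_at_1`, the problem ids, and the grading results are hypothetical placeholders; this is not necessarily the exact evaluation protocol DeepSeek used.

```python
from typing import Dict, List


def pass_at_1(results: Dict[str, List[bool]]) -> float:
    """Average per-problem accuracy over sampled answers, as a percentage."""
    if not results:
        raise ValueError("results must contain at least one problem")
    per_problem = []
    for problem_id, samples in results.items():
        if not samples:
            raise ValueError(f"no samples recorded for {problem_id}")
        # Fraction of sampled answers that were graded correct.
        per_problem.append(sum(samples) / len(samples))
    return 100.0 * sum(per_problem) / len(per_problem)


# Hypothetical grading results: problem id -> correctness of each sampled answer.
my_model_results = {
    "problem-1": [True, True, False, True],
    "problem-2": [False, False, False, False],
    "problem-3": [True, True, True, True],
}

DEEPSEEK_R1_AIME_2024 = 79.8  # pass rate quoted in this article

score = pass_at_1(my_model_results)
gap = score - DEEPSEEK_R1_AIME_2024
print(f"pass@1 = {score:.1f}%, gap to DeepSeek-R1 = {gap:+.1f} points")
```

For a fair comparison, the sampling settings and answer-grading rules should match those used for the reference number as closely as possible.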

Conclusion:

DeepSeek-R1 Series models represent a significant step forward in AI reasoning. By leveraging advanced training techniques and making models open-source, DeepSeek empowers researchers and developers to explore new frontiers in AI. Whether you're conducting academic research, developing AI-powered applications, or seeking to benchmark your models, DeepSeek-R1 provides the tools and performance you need to succeed.

FAQ:

1. What makes DeepSeek-R1 models unique?

DeepSeek-R1 models are unique due to their training methodologies. DeepSeek-R1-Zero was trained solely through reinforcement learning, demonstrating that complex reasoning can emerge without supervised fine-tuning. DeepSeek-R1 leverages cold-start data to enhance performance and address issues like poor readability. Additionally, the ability to distill these advanced reasoning capabilities into smaller models makes them more accessible for various applications.

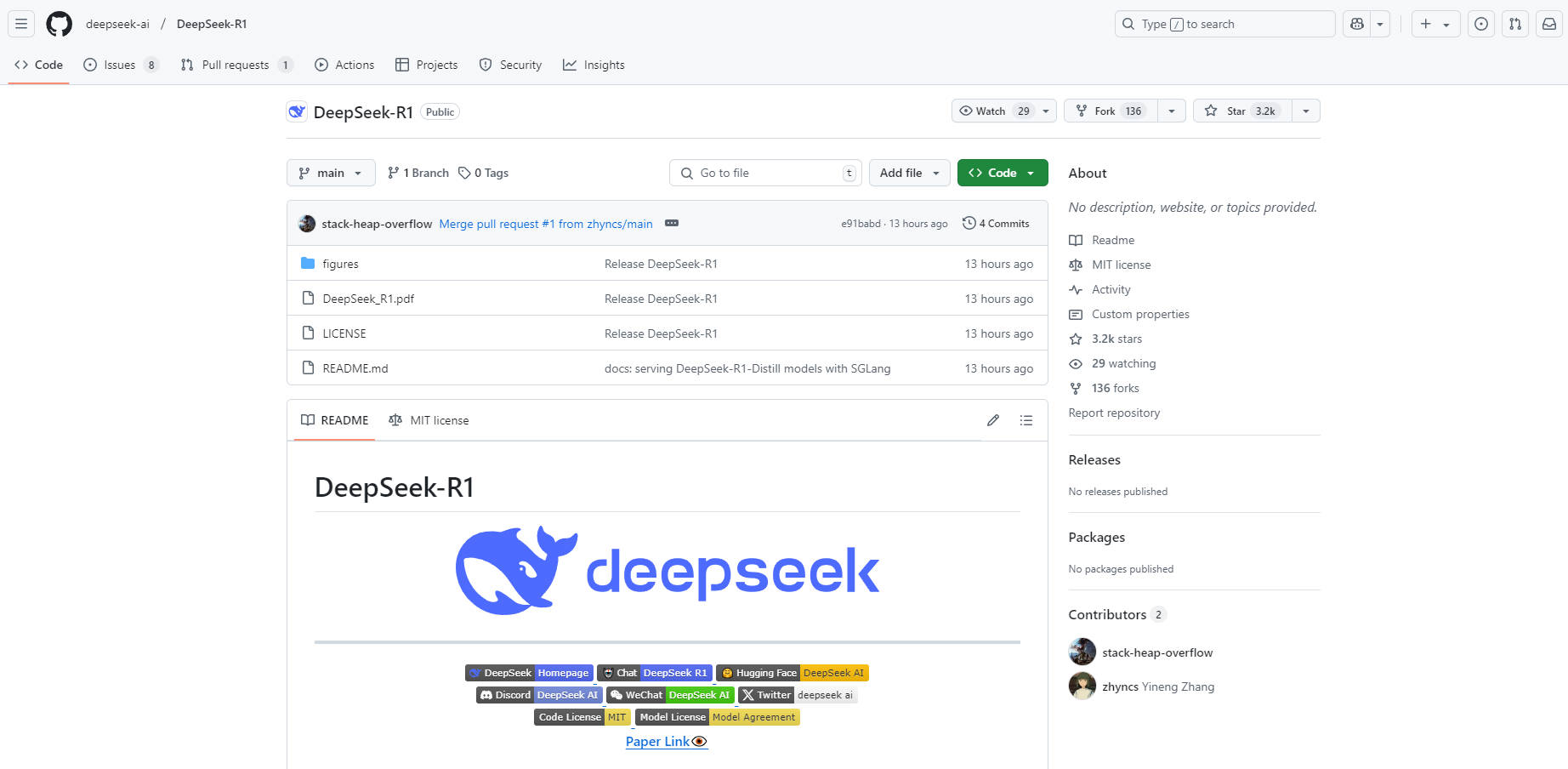

2. How can I access and use DeepSeek-R1 models?

You can access DeepSeek-R1 models through the official DeepSeek website and API platform. The models, including DeepSeek-R1-Zero, DeepSeek-R1, and the distilled versions, are also available on Hugging Face. You can download and integrate them into your projects using standard procedures for Qwen or Llama models.
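As a rough illustration of the API route, the sketch below builds a chat-style request that selects `deepseek-reasoner` and separates the thought chain from the final answer in a decoded response. Only the model name comes from this article. The endpoint URL, the chat-completions payload shape, and the `reasoning_content` field name are assumptions that should be checked against the current DeepSeek API documentation, and the sample response is hand-written so that the example runs without a network call or API key.

```python
import json
from typing import Any, Dict, Tuple

# Assumed endpoint; verify against the DeepSeek API documentation.
API_URL = "https://api.deepseek.com/chat/completions"


def build_reasoner_request(prompt: str) -> Dict[str, Any]:
    """Build a chat-completion payload that selects the reasoning model."""
    return {
        "model": "deepseek-reasoner",  # model name given in this article
        "messages": [{"role": "user", "content": prompt}],
    }


def split_reasoning(response: Dict[str, Any]) -> Tuple[str, str]:
    """Return (thought chain, final answer) from a decoded response body."""
    message = response["choices"][0]["message"]
    # "reasoning_content" is an assumed field name for the thought chain.
    return message.get("reasoning_content", ""), message.get("content", "")


payload = build_reasoner_request("How many primes are there below 20?")
print(json.dumps(payload, indent=2))

# Hypothetical response, hand-written here so the sketch runs offline.
sample_response = {
    "choices": [
        {
            "message": {
                "role": "assistant",
                "reasoning_content": "List them: 2, 3, 5, 7, 11, 13, 17, 19.",
                "content": "There are 8 primes below 20.",
            }
        }
    ]
}
thoughts, answer = split_reasoning(sample_response)
print("Thought chain:", thoughts)
print("Answer:", answer)
```

In a real integration, the payload would be sent to the endpoint as JSON with an authorization header carrying your API key, and the parsed reply passed to `split_reasoning`.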

3. What are the benefits of using the distilled models?

The distilled models, such as DeepSeek-R1-Distill-Qwen-32B, offer several benefits. They retain the advanced reasoning capabilities of the larger DeepSeek-R1 model but are smaller and more efficient. This means you can deploy them in resource-constrained environments without sacrificing performance. They also outperform models of similar size trained directly with reinforcement learning, providing superior results for tasks like code generation and mathematical reasoning.

4. How do DeepSeek-R1 models compare to other leading models?

DeepSeek-R1 models perform exceptionally well in benchmarks, often matching or surpassing leading models like OpenAI-o1. For example, DeepSeek-R1 achieved a 79.8% pass rate on the AIME 2024 benchmark and a 97.3% pass rate on MATH-500. In coding tasks, it achieved a Codeforces rating of 2029, outperforming 96.3% of human participants. The distilled models also show strong performance, with DeepSeek-R1-Distill-Qwen-32B outperforming OpenAI-o1-mini across various benchmarks.

DeepSeek-R1 Alternatives

DeepCoder: 64K context code AI. Open-source 14B model beats expectations! Long context, RL training, top performance.

DeepSeek-VL2, a vision-language model by DeepSeek-AI, processes high-res images, offers fast responses with MLA, and excels in diverse visual tasks like VQA and OCR. Ideal for researchers, developers, and BI analysts.

DeepSeek-V2: 236 billion MoE model. Leading performance. Ultra-affordable. Unparalleled experience. Chat and API upgraded to the latest model.

DeepSearcher: AI knowledge management for private enterprise data. Get secure, accurate answers & insights from your internal documents with flexible LLMs.

DeepSeek LLM: an advanced language model with 67 billion parameters, trained from scratch on a vast dataset of 2 trillion tokens in both English and Chinese.