What is RememberAPI?

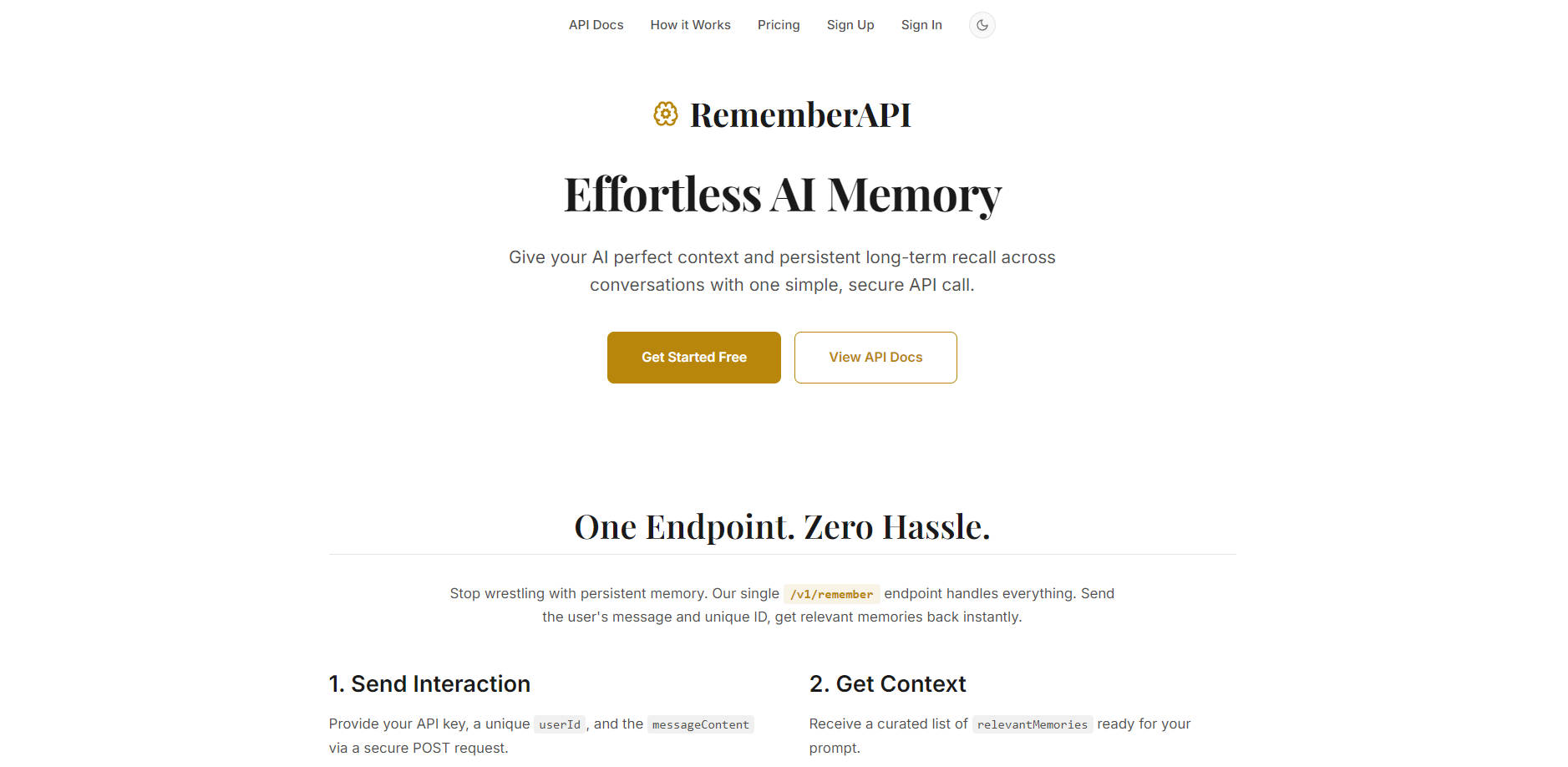

RememberAPI is the dedicated memory layer for your conversational AI, agents, and personalized applications. It solves the critical challenge of stateless LLMs by providing persistent, long-term recall across all user interactions. By integrating one simple, secure API endpoint, developers can ensure their AI always retrieves the exact historical context needed to deliver highly personalized, accurate, and continuous experiences, overcoming the limitations of short context windows.

Key Features

RememberAPI is designed for immediate integration and maximum performance, handling complex memory retrieval and storage with a single, streamlined endpoint.

🧠 Automatic Relevance Scoring & Intelligent Recall

Our proprietary AI automatically scores and surfaces the most important memories for the current interaction, eliminating the need for manual tagging or complex vector database queries. Memories are returned with human-readable relative timestamps (e.g., "[yesterday]"), allowing your LLM to effortlessly weigh both recent context and long-term facts for superior decision-making.
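The sketch below shows what relative timestamps like "[yesterday]" look like in the context an LLM receives. This is not RememberAPI's implementation, because the service returns these labels already formatted. The function names `relative_label` and `format_memories` and the day/week/month cutoffs are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone

def relative_label(created_at: datetime, now: datetime) -> str:
    """Hypothetical helper: render a memory's age as a bracketed tag like "[yesterday]"."""
    days = (now.date() - created_at.date()).days
    if days <= 0:
        return "[today]"
    if days == 1:
        return "[yesterday]"
    if days < 7:
        return f"[{days} days ago]"
    if days < 30:
        weeks = days // 7
        return f"[{weeks} week{'s' if weeks > 1 else ''} ago]"
    months = days // 30  # assumption: a "month" is approximated as 30 days
    return f"[{months} month{'s' if months > 1 else ''} ago]"

def format_memories(memories: list[tuple[datetime, str]], now: datetime) -> str:
    """Hypothetical helper: prefix each (timestamp, text) memory with its label, one per line."""
    return "\n".join(f"{relative_label(ts, now)} {text}" for ts, text in memories)
```

With tags like these, a model can tell that a fact from "[yesterday]" is probably more current than one from "[2 months ago]" without doing any date arithmetic itself.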

⚡ Ultra-Low Latency Context Enrichment

The core /v1/remember call is optimized for speed and adds roughly 333 ms of round-trip latency for memory retrieval. Storage of the user’s latest message happens asynchronously in the background. As a result, retrieved context can enrich your prompt without noticeably delaying the user experience.
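Because retrieval sits in the request path, some clients may want a hard latency budget around it. The following is a minimal client-side sketch of that pattern. The `fetch` callable stands in for whatever function performs the /v1/remember call. The 0.5 s budget and the "answer without memories" fallback are assumptions of this sketch and are not RememberAPI behavior.

```python
import concurrent.futures
import time
from typing import Callable

# Shared worker pool so the caller can stop waiting once the budget is spent.
_POOL = concurrent.futures.ThreadPoolExecutor(max_workers=4)

def fetch_context_with_budget(fetch: Callable[[], str], budget_s: float = 0.5) -> str:
    """Run a memory lookup; return "" (no context) on timeout or any error.

    Assumption: a missing context block is better than a stalled reply.
    """
    future = _POOL.submit(fetch)
    try:
        return future.result(timeout=budget_s)
    except Exception:  # includes concurrent.futures.TimeoutError
        return ""
```

A thread that has already started cannot be cancelled, so a late lookup keeps running in the pool. The caller simply stops waiting for it and sends the prompt without memories.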

🔒 Secure, Isolated, and Privacy-First Design

Security is paramount. All data is strictly isolated per account, and communication is secured via HTTPS (TLS). You decide how users are identified. By sending internal IDs or hashes instead of personal details, you can stay as anonymous as your architecture demands. Data is encrypted at rest and in transit on SOC 2 compliant infrastructure.
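The following is one way to produce the "hashes" mentioned above: a keyed HMAC-SHA256 of your internal user ID. The function name `pseudonymous_user_id` is hypothetical. Using HMAC rather than a plain hash is a choice made in this sketch. An unkeyed SHA-256 of an email address can be reversed by hashing a list of candidate addresses, but that attack fails without the server-side secret.

```python
import hashlib
import hmac

def pseudonymous_user_id(internal_id: str, secret_key: bytes) -> str:
    """Derive a stable, non-reversible key to send in place of a raw user ID.

    The same (id, key) pair always yields the same 64-char hex string,
    so memories stay linked to the user across sessions.
    """
    digest = hmac.new(secret_key, internal_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()
```

Keep `secret_key` on your own servers. Rotating it effectively creates new users from the memory layer's point of view.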

🛠️ Single Endpoint Simplicity for Developers

Stop wrestling with complex memory pipelines. The single /v1/remember endpoint handles both context retrieval and new memory storage simultaneously. Integrating powerful, persistent memory into your application requires just a secure POST request and can be accomplished in minutes.
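The sketch below shows the shape of such a POST request using only Python's standard library. Only the /v1/remember path comes from this page. The following details are placeholders that should be replaced with the values in RememberAPI's documentation:

- the host `https://api.example.com`
- the JSON field names `userId` and `content`
- the Bearer `Authorization` header
- the JSON response shape

```python
import json
import urllib.request

# Placeholder host (assumption): substitute the base URL from RememberAPI's docs.
BASE_URL = "https://api.example.com"

def build_remember_request(api_key: str, user_id: str, message: str) -> urllib.request.Request:
    """Build, but do not send, a POST to /v1/remember.

    Field names and the Bearer auth scheme are hypothetical.
    """
    body = json.dumps({"userId": user_id, "content": message}).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/v1/remember",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )

def remember(api_key: str, user_id: str, message: str, timeout: float = 5.0) -> dict:
    """Send the request and decode the JSON reply (response shape also assumed)."""
    req = build_remember_request(api_key, user_id, message)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Building the request separately from sending it makes the payload easy to inspect or unit-test without network access.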

Use Cases

RememberAPI enables development teams to build truly personalized and consistent AI experiences across various domains.

1. Building Personalized Recommendation Engines

When a user asks for a product recommendation (e.g., "What shoes should I buy?"), RememberAPI instantly retrieves the relevant historical context, such as the user's job ("mail carrier"), their daily activity ("walk 8+ hours"), and specific physical needs ("plantar fasciitis"). This context lets the recommendation engine move beyond general suggestions and give hyper-specific, actionable advice.

2. Enhancing Customer Support and Agent Tools

Implement RememberAPI to give your support chatbot or internal agent tools a persistent memory of past support tickets, preferences, and previous troubleshooting steps. Instead of asking the user to repeat their history, the agent can immediately recall facts like, "The user noted their printer model P450 had a driver issue two weeks ago," dramatically reducing resolution time and improving customer satisfaction.

3. Maintaining Long-Running Conversational Context

For complex, multi-session projects—such as writing assistants, financial planning tools, or educational tutors—RememberAPI ensures continuity. If a user returns after a month, the AI can recall the key goals, project parameters, or learning challenges previously discussed, allowing the user to pick up exactly where they left off without needing to re-state their objectives.

Why Choose RememberAPI?

We focus on delivering measurable functional value and effortless integration, ensuring your AI systems are not just smarter, but faster and more reliable.

- Five-Line Integration: The core functionality can be added to your application workflow in as few as five lines of code, minimizing development overhead and accelerating time-to-market.

- Optimal Performance Pattern: We recommend and support a pre-LLM context enrichment pattern, guaranteeing that your prompt is fully contextualized before calling the LLM, maximizing output quality without introducing significant performance drag.

- Granular Control: Beyond the core retrieval endpoint, RememberAPI provides optional API controls for viewing or deleting specific memories and for erasing all of a user's data on request. This supports compliance and user sovereignty.
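The pre-LLM enrichment pattern can be sketched as a two-step flow: fetch memories first, then make a single model call with them in the system prompt. The sketch makes the following assumptions:

- `get_memories` and `call_llm` are hypothetical stand-ins for your RememberAPI client and your LLM client.
- The chat-style `role`/`content` message format is used.
- The system-prompt wording is illustrative.

```python
from typing import Callable

def enrich_then_answer(
    user_message: str,
    get_memories: Callable[[str], str],
    call_llm: Callable[[list[dict]], str],
) -> str:
    """Pre-LLM enrichment: retrieve memories first, then call the model once."""
    memories = get_memories(user_message)  # e.g. "[yesterday] Walks 8+ hours a day."
    system = "You are a helpful assistant."
    if memories:  # only add the block when something relevant came back
        system += "\n\nRelevant memories about this user:\n" + memories
    messages = [
        {"role": "system", "content": system},
        {"role": "user", "content": user_message},
    ]
    return call_llm(messages)
```

Because the memories are in place before the model runs, the model never has to call a tool or take a second round trip to recall them.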

Conclusion

RememberAPI provides the essential infrastructure for building next-generation conversational AI that feels truly intelligent and recognizes the user over time. By handling the complexity of long-term memory retrieval and storage seamlessly, you can focus on refining your core AI logic.

Make your AI smarter today. Explore how RememberAPI can enhance your application.

RememberAPI Alternatives

Supermemory gives your LLMs long-term memory. Instead of stateless text generation, they retrieve relevant information from your files, chats, and tools, keeping responses coherent, contextual, and personalized.

Agents drive human-like reasoning and represent a significant step forward in building AGI and in understanding our own nature. Memory is a fundamental part of how humans approach tasks and deserves the same consideration when developing AI agents. memary emulates human memory to advance the development of these agents.