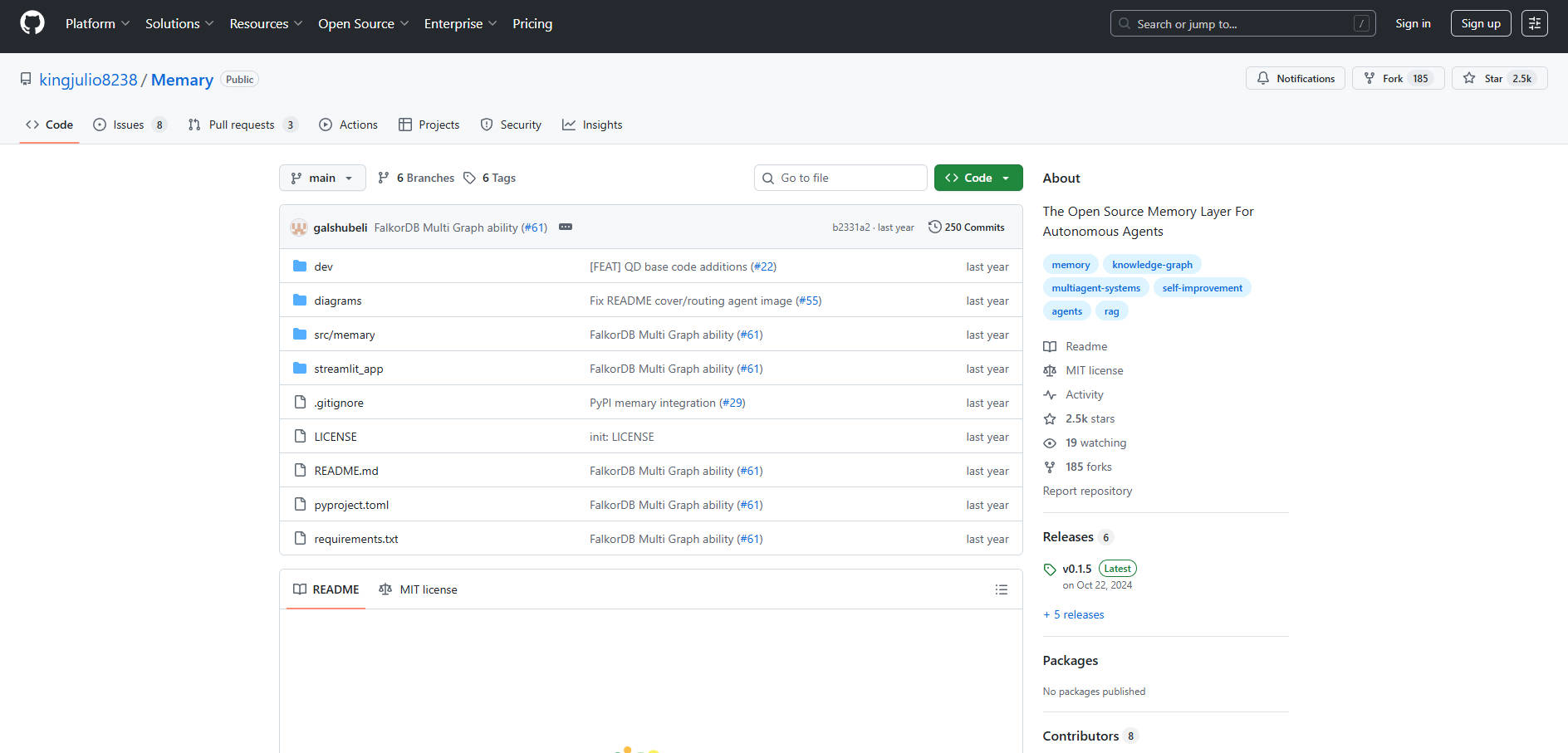

What is memary?

memary is a Python library that gives AI agents persistent, human-like memory structures so they can reason and keep context over time. It addresses the short context window of LLMs by combining knowledge graph management with memory analysis modules. It is aimed at developers and engineers building personalized, context-aware agents that learn from their interactions.

Key Features

memary plugs into your existing agent architecture, with models served through Ollama or OpenAI, and adds analytical memory capabilities on top.

🧠 Integrated Knowledge Graph (KG) Management

memary stores knowledge in a dedicated graph database (e.g., FalkorDB), so agents can move beyond simple sequential memory. Retrieval is recursive: memary identifies the key entities in a query, builds a localized subgraph around them, and uses that subgraph as context for the response. Because only the subgraph is searched, latency is lower than when searching the entire knowledge graph.
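The retrieval idea above can be sketched in plain Python. This is a minimal illustration, not memary's API: the toy graph, the substring-based entity matching, and the names `find_key_entities` and `local_subgraph` are all assumptions made for the example.

```python
from collections import deque

# Toy knowledge graph as an adjacency list of (relation, neighbor) pairs.
# All data and names here are illustrative, not part of memary.
GRAPH = {
    "Alice": [("works_at", "Acme"), ("likes", "Hiking")],
    "Acme": [("located_in", "Berlin")],
    "Berlin": [("country", "Germany")],
    "Hiking": [],
    "Germany": [],
    "Bob": [("likes", "Chess")],
    "Chess": [],
}


def find_key_entities(query, graph):
    """Return graph nodes mentioned in the query (naive substring match)."""
    lowered = query.lower()
    return [node for node in graph if node.lower() in lowered]


def local_subgraph(graph, seeds, max_hops=2):
    """Collect triples reachable from the seed entities within max_hops."""
    triples = []
    seen = set(seeds)
    queue = deque((seed, 0) for seed in seeds)
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand past the hop limit
        for relation, neighbor in graph.get(node, []):
            triples.append((node, relation, neighbor))
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, depth + 1))
    return triples


entities = find_key_entities("Where does Alice work?", GRAPH)
context = local_subgraph(GRAPH, entities, max_hops=2)
for triple in context:
    print(triple)
```

Only the neighborhood around "Alice" is visited; the unrelated "Bob" and "Chess" nodes are never touched, which is the source of the latency saving. A real system would replace the substring match with an LLM or NER step and the dictionary with graph database queries.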

📈 Dual Memory Modules: Stream and Store

The system divides memory into two complementary components, mimicking human cognitive functions:

Memory Stream: Captures all entities and their timestamps, providing a broad timeline of exposure and interaction. This allows for Timeline Analysis to track the evolution of user interests.

Entity Knowledge Store: Tracks the frequency and recency of entity references. By ranking entities based on these factors, the store reflects the user’s depth of knowledge and familiarity with specific concepts, enabling highly tailored responses.
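The relationship between the two components can be sketched as follows. This is a simplified illustration under stated assumptions: the in-memory list, the `record` and `entity_knowledge_store` helpers, and the ranking rule (frequency first, recency as tie-breaker) are hypothetical and not taken from memary's code.

```python
from collections import Counter
from datetime import datetime, timedelta

# Memory stream: every entity mention with its timestamp, in arrival order.
memory_stream = []


def record(entity, when):
    """Append one entity mention to the stream."""
    memory_stream.append((entity, when))


def entity_knowledge_store(stream):
    """Aggregate the stream into (entity, count, last_seen) rows.

    Rows are ranked by frequency first and recency second; this
    ranking rule is an assumption made for the example.
    """
    counts = Counter(entity for entity, _ in stream)
    last_seen = {}
    for entity, when in stream:
        if entity not in last_seen or when > last_seen[entity]:
            last_seen[entity] = when
    rows = [(entity, counts[entity], last_seen[entity]) for entity in counts]
    rows.sort(key=lambda row: (row[1], row[2]), reverse=True)
    return rows


start = datetime(2024, 1, 1, 9, 0)
record("Python", start)
record("FalkorDB", start + timedelta(minutes=5))
record("Python", start + timedelta(minutes=10))
record("Ollama", start + timedelta(minutes=15))

ranked = entity_knowledge_store(memory_stream)
for entity, count, seen_at in ranked:
    print(entity, count, seen_at.isoformat())
```

The stream keeps the full timeline, which is what timeline analysis would read, while the store is a derived view that can be rebuilt from the stream at any time.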

⚙️ Automatic Memory Generation and System Improvement

Once initialized, memary updates the agent’s memory automatically as it interacts, capturing relevant memories without further developer intervention. This continuous feedback loop mimics how human memory evolves over time and gives a clear path for ongoing agent improvement.

🧩 Flexible Model and Tool Support

Developers keep full control over the underlying models. memary supports local models running via Ollama (Llama 3 and LLaVA are suggested) and remote OpenAI models (GPT-3.5-turbo, GPT-4-vision-preview). You can also add or remove custom external tools (e.g., search, vision, stocks) to expand what the agent can do.

👥 Multi-Agent and Multi-Graph Context Switching

When utilizing a graph database like FalkorDB, memary enables the creation and management of multiple distinct agents, each corresponding to a unique knowledge graph ID. This functionality is crucial for developers needing to manage personalized contexts, allowing seamless transitions between different user personas or projects without memory bleed.
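The isolation this provides can be illustrated with a small sketch. The `AgentMemoryRegistry` class and its methods are hypothetical and keep everything in memory; in practice each graph ID would map to a separate graph in the database rather than a Python list.

```python
class AgentMemoryRegistry:
    """Hypothetical helper: one isolated memory per knowledge graph ID."""

    def __init__(self):
        self._graphs = {}
        self.active_id = None

    def switch_to(self, graph_id):
        # Create the graph on first use, then make it the active one.
        self._graphs.setdefault(graph_id, [])
        self.active_id = graph_id

    def remember(self, subject, relation, obj):
        """Store a triple in the active graph only."""
        if self.active_id is None:
            raise RuntimeError("call switch_to() before remember()")
        self._graphs[self.active_id].append((subject, relation, obj))

    def recall(self):
        """Return a copy of the active graph's triples."""
        if self.active_id is None:
            raise RuntimeError("call switch_to() before recall()")
        return list(self._graphs[self.active_id])


registry = AgentMemoryRegistry()

registry.switch_to("user_a")
registry.remember("user_a", "prefers", "Python")

registry.switch_to("user_b")
registry.remember("user_b", "prefers", "Rust")
user_b_memory = registry.recall()

registry.switch_to("user_a")
user_a_memory = registry.recall()
```

Switching back to `user_a` returns only that user's triples, since every read and write is scoped to the active graph ID.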

Use Cases

memary is designed for scenarios where contextual depth, personalization, and long-term memory are critical to the user experience.

Developing Personalized Research Assistants: Build an agent that remembers not only the facts gathered but also the user's specific research methodologies, preferred sources, and prior conclusions. The Entity Knowledge Store ensures the agent prioritizes recently or frequently referenced topics, providing highly relevant context for ongoing projects.

Scaling and Managing Distinct User Personas: For a platform supporting multiple distinct users, utilize the Multi-Graph capability to assign a unique memory structure to each user ID. This guarantees that User A’s personal preferences, chat history, and knowledge base remain entirely separate from User B’s, enabling true personalization at scale.

Enhancing Conversational Clarity and Efficiency: Apply memary’s memory compression techniques to manage the LLM context window effectively. By summarizing past conversations and injecting only the most relevant entities alongside the agent’s response, you ensure the agent maintains long-term context without incurring excessive token usage or losing focus.
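The compression idea in the last use case can be sketched roughly as below. This is an assumption-heavy illustration: `build_context` is a hypothetical helper, the summary is supplied by the caller rather than generated, and a whitespace word count stands in for a real token count.

```python
def build_context(summary, ranked_entities, latest_message, top_k=3, max_words=30):
    """Assemble a compact context: summary, top entities, latest message.

    Word count is a rough stand-in for tokens (an assumption). If the
    context exceeds the budget, the lowest-ranked entity is dropped and
    the context is rebuilt until it fits or no entities remain.
    """
    entities = list(ranked_entities[:top_k])
    while True:
        lines = ["Summary: " + summary]
        if entities:
            lines.append("Key entities: " + ", ".join(entities))
        lines.append("User: " + latest_message)
        context = "\n".join(lines)
        if len(context.split()) <= max_words or not entities:
            return context
        entities.pop()  # drop the lowest-ranked entity and retry


summary = "The user is comparing graph databases for an agent project."
ranked = ["FalkorDB", "Ollama", "Python", "Chess"]  # most relevant first
message = "Which one should I start with?"

roomy = build_context(summary, ranked, message, top_k=3, max_words=30)
tight = build_context(summary, ranked, message, top_k=3, max_words=22)
print(roomy)
print("---")
print(tight)
```

With the larger budget the three highest-ranked entities are injected; with the tighter one the lowest-ranked of them is dropped, so the prompt shrinks without losing the summary or the latest message.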

Why Choose memary?

memary's graph-based architecture differs from standard vector database retrieval systems in several ways.

Accelerated Retrieval via Multi-Hop Reasoning: When complex queries involve multiple key entities, memary uses multi-hop reasoning to join several relevant subgraphs. This intelligent traversal ensures the agent can answer complex, interconnected questions quickly and accurately, a capability that standard single-pass retrieval often struggles to match.

Deep Contextual Insight: Unlike simple chat history storage, memary’s Memory Module performs analytical functions (timeline, frequency, recency analysis) to infer user knowledge and preferences. This allows agents to tailor responses more precisely to the user's expertise level and current interests.

Designed for Integration: memary is built on the principle of minimal implementation effort. It integrates with existing agent frameworks, and while it currently provides a simple ReAct agent implementation for demonstration, future versions aim to support any type of agent from any provider.
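The multi-hop retrieval described in the first point above, where subgraphs around several key entities are joined, can be sketched as follows. The edge list and the `neighborhood` and `connecting_path` helpers are illustrative assumptions, not memary's implementation.

```python
from collections import deque

# Toy edge list of (subject, relation, object) triples; illustrative only.
EDGES = [
    ("Alice", "works_at", "Acme"),
    ("Acme", "located_in", "Berlin"),
    ("Bob", "lives_in", "Berlin"),
    ("Bob", "likes", "Chess"),
    ("Carol", "likes", "Tennis"),
]


def neighborhood(edges, entity, hops):
    """Triples within `hops` undirected steps of `entity`."""
    frontier, seen, found = {entity}, {entity}, set()
    for _ in range(hops):
        next_frontier = set()
        for s, r, o in edges:
            if s in frontier or o in frontier:
                found.add((s, r, o))
                for node in (s, o):
                    if node not in seen:
                        seen.add(node)
                        next_frontier.add(node)
        frontier = next_frontier
    return found


def connecting_path(triples, start, goal):
    """Breadth-first search for a chain of triples linking two entities."""
    adjacency = {}
    for s, r, o in triples:
        adjacency.setdefault(s, []).append((s, r, o, o))
        adjacency.setdefault(o, []).append((s, r, o, s))
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for s, r, o, nxt in sorted(adjacency.get(node, [])):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, path + [(s, r, o)]))
    return None


# Query mentions two entities: build one subgraph per entity, then join them.
alice_sub = neighborhood(EDGES, "Alice", hops=2)
bob_sub = neighborhood(EDGES, "Bob", hops=1)
joined = alice_sub | bob_sub

path = connecting_path(joined, "Alice", "Bob")
print(path)
```

Neither subgraph alone links the two entities; the connection through "Berlin" only appears once the subgraphs are joined, and the unrelated "Carol" triple is never loaded.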

Conclusion

By providing a structured, analytical, and persistent memory framework, memary empowers developers to transcend the limitations of stateless AI agents. It ensures your applications can maintain deep context, learn from interactions, and deliver personalized, insightful experiences.

Explore how memary can transform your AI agents into truly intelligent, reliable partners today.

Memary Alternatives

Stop AI forgetfulness! MemMachine gives your AI agents long-term, adaptive memory. Open-source & model-agnostic for personalized, context-aware AI.

Supermemory gives your LLMs long-term memory. Instead of stateless text generation, they recall the right facts from your files, chats, and tools, so responses stay consistent, contextual, and personal.

MemoryGraph gives your AI assistants persistent memory. Store solutions, track patterns, recall context across sessions. Zero config, local-first, privacy by default.