What is FireRedASR?

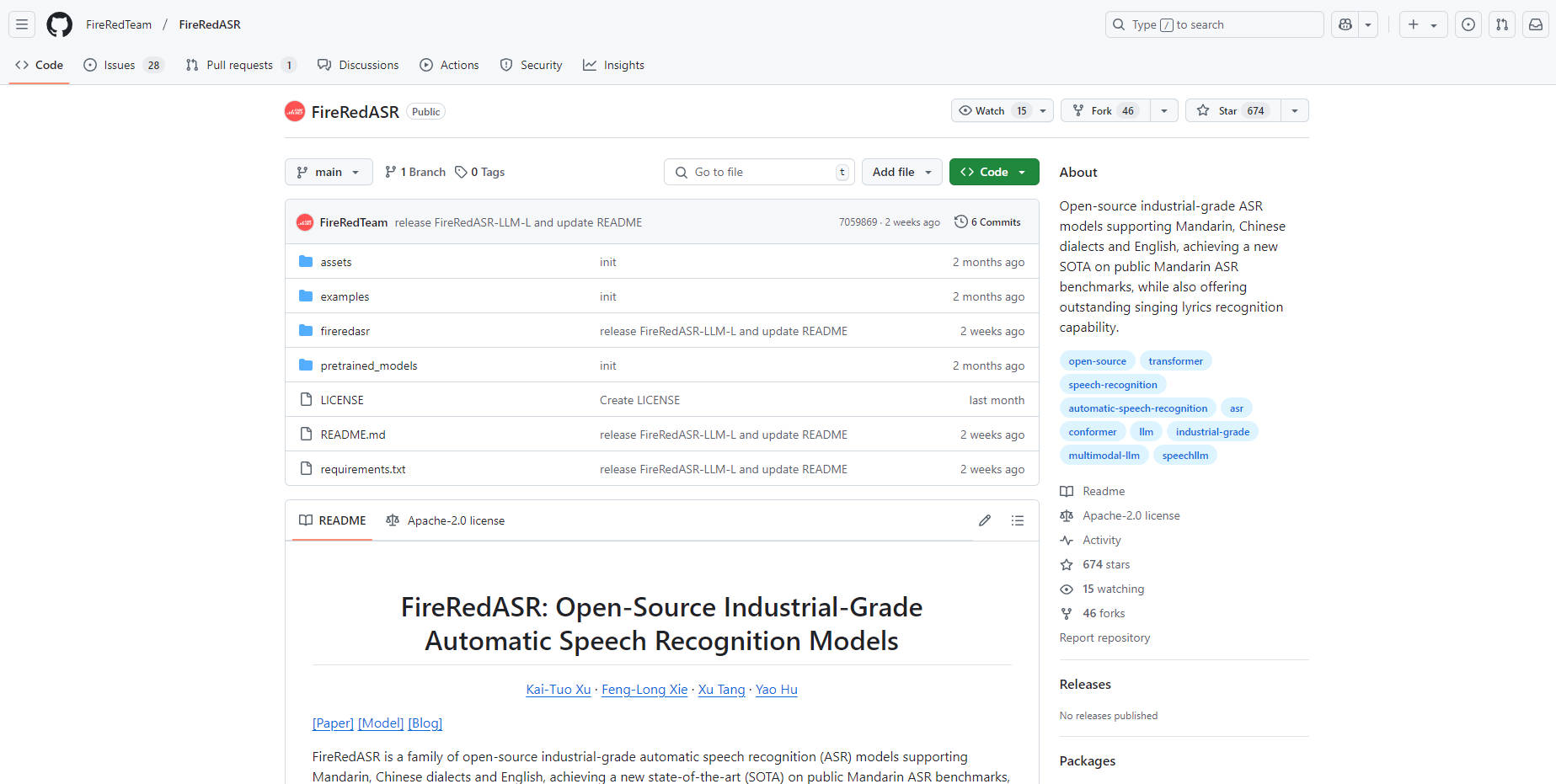

FireRedASR is a family of open-source automatic speech recognition (ASR) models designed for real-world applications. If you need accurate and efficient speech-to-text capabilities in Mandarin, Chinese dialects, or English, FireRedASR offers a powerful solution. It addresses the critical need for robust ASR that performs exceptionally well in diverse acoustic conditions, even extending to specialized tasks like singing lyrics recognition.

Key Features:

🗣️ Achieve State-of-the-Art Accuracy: FireRedASR delivers top-tier performance, achieving a new state-of-the-art (SOTA) on public Mandarin ASR benchmarks. This means fewer errors and more reliable transcriptions for your applications.

⚙️ Choose Your Architecture: Select from two model variants to suit your specific needs:

FireRedASR-LLM: Employs an Encoder-Adapter-LLM framework, leveraging the power of large language models (LLMs) for superior performance and seamless end-to-end speech interaction.

FireRedASR-AED: Utilizes an Attention-based Encoder-Decoder (AED) architecture, balancing high performance with computational efficiency. It is also well suited to serve as a speech representation module in LLM-based speech models.

🌐 Support Multiple Languages and Dialects: Transcribe audio in Mandarin, various Chinese dialects, and English with high accuracy. This broad linguistic coverage opens up a wider range of application possibilities.

🎤 Recognize Singing Lyrics: FireRedASR excels in the challenging domain of singing lyrics recognition, offering unique capabilities for music-related applications.

💻 Easy to Use: Create a Python environment, download the model files and put them in place, and install the dependencies with a few simple commands.

Technical Details:

Model Variants: FireRedASR-LLM (8.3B parameters) and FireRedASR-AED (1.1B parameters).

Evaluation Metrics: Character Error Rate (CER%) for Chinese and Word Error Rate (WER%) for English.

Benchmarks: Rigorously tested on aishell1, aishell2, WenetSpeech (ws_net, ws_meeting), KeSpeech, and LibriSpeech (test-clean, test-other).

Architecture:

FireRedASR-LLM: Encoder-Adapter-LLM framework.

FireRedASR-AED: Attention-based Encoder-Decoder (AED) architecture.

Dependencies: Python 3.10 and the packages listed in requirements.txt.
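The CER and WER metrics listed above are both edit-distance ratios: the number of substitutions, insertions, and deletions needed to turn the hypothesis into the reference, divided by the reference length. The following is a minimal sketch of that calculation, not the project's scoring script. The function names are hypothetical, and the text normalization (stripping spaces for CER, lowercasing for WER) is an assumption that may differ from the official evaluation.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(
                prev[j] + 1,         # deletion (reference token missing from hypothesis)
                curr[j - 1] + 1,     # insertion (extra token in hypothesis)
                prev[j - 1] + cost,  # substitution or exact match
            ))
        prev = curr
    return prev[-1]


def error_rate(ref_tokens, hyp_tokens):
    """Error rate in percent, relative to the reference length."""
    if not ref_tokens:
        raise ValueError("reference must not be empty")
    return 100.0 * edit_distance(ref_tokens, hyp_tokens) / len(ref_tokens)


def cer(reference, hypothesis):
    """Character Error Rate (%): compares character by character.

    Assumption: spaces are ignored before scoring.
    """
    return error_rate(list(reference.replace(" ", "")),
                      list(hypothesis.replace(" ", "")))


def wer(reference, hypothesis):
    """Word Error Rate (%): compares whitespace-separated words.

    Assumption: case is ignored before scoring.
    """
    return error_rate(reference.lower().split(), hypothesis.lower().split())


if __name__ == "__main__":
    print(f"CER: {cer('今天天气很好', '今天天汽很好'):.2f}%")
    print(f"WER: {wer('the cat sat on the mat', 'the cat sit on mat'):.2f}%")
```

Because the denominator is the reference length, an error rate can exceed 100% when the hypothesis contains many inserted tokens.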

Use Cases:

Voice Assistant Integration: Integrate FireRedASR into voice assistants to enable accurate command recognition and natural language understanding, even in noisy environments or with diverse accents. The low error rates ensure reliable user interaction.

Real-time Transcription Service: Develop a real-time transcription service for meetings, lectures, or interviews. The AED model's efficiency allows for low-latency processing, while the LLM model provides the highest accuracy for critical applications.

Multimedia Content Analysis: Use FireRedASR to automatically generate subtitles for videos, index audio archives, or analyze the content of podcasts. The singing lyrics recognition capability enables unique features for music platforms.

Conclusion:

FireRedASR provides a powerful and versatile solution for developers and researchers seeking industrial-grade speech recognition. Its state-of-the-art accuracy, flexible architecture options, and multi-language support make it a compelling choice for a wide range of applications. The open-source nature of the project encourages community contributions and further advancements in the field.

FAQ:

Q: What are the input length limitations for each model?

A: FireRedASR-AED supports audio input up to 60 seconds. Input longer than 60 seconds may cause hallucination issues. Input exceeding 200 seconds will trigger positional encoding errors. FireRedASR-LLM supports audio input up to 30 seconds.
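As a rough illustration, the limits in this answer can be checked before a file is sent to either model. The sketch below uses only Python's standard `wave` module. The function names, the `"aed"`/`"llm"` labels, and the returned status strings are hypothetical and are not part of FireRedASR.

```python
import wave

# Limits taken from the FAQ answer above.
AED_RECOMMENDED_MAX_SECONDS = 60.0   # longer input may cause hallucination
AED_HARD_MAX_SECONDS = 200.0         # longer input triggers positional encoding errors
LLM_MAX_SECONDS = 30.0               # supported limit for FireRedASR-LLM


def wav_duration_seconds(path):
    """Return the duration of a PCM WAV file in seconds."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def check_duration(seconds, model):
    """Classify a duration against the documented limits.

    model: "aed" or "llm" (hypothetical labels used only in this sketch).
    """
    if model == "llm":
        return "ok" if seconds <= LLM_MAX_SECONDS else "unsupported"
    if model == "aed":
        if seconds <= AED_RECOMMENDED_MAX_SECONDS:
            return "ok"
        if seconds <= AED_HARD_MAX_SECONDS:
            return "hallucination_risk"
        return "positional_encoding_error"
    raise ValueError(f"unknown model: {model!r}")
```

Files that fall outside the `"ok"` range would need to be split into shorter segments before transcription; how to segment them is left open here.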

Q: How do I handle potential repetition issues with FireRedASR-LLM during batch beam search?

A: When using batch beam search with FireRedASR-LLM, ensure that the input utterances have similar lengths. Significant differences in length can lead to repetition in shorter utterances. You can sort your dataset by length or set the batch size to 1 to mitigate this issue.
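One way to apply the sorting advice is to order utterances by duration before grouping them, so each batch holds inputs of similar length. This is a minimal sketch: the `(utterance_id, duration_seconds)` tuple layout and the function name are assumptions made for illustration, not a FireRedASR interface.

```python
def length_sorted_batches(utterances, batch_size):
    """Group utterances into batches of similar duration.

    utterances: list of (utterance_id, duration_seconds) tuples.
    Returns a list of batches, shortest utterances first.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    ordered = sorted(utterances, key=lambda item: item[1])
    return [ordered[i:i + batch_size]
            for i in range(0, len(ordered), batch_size)]


if __name__ == "__main__":
    utts = [("a", 12.0), ("b", 2.5), ("c", 11.0), ("d", 3.0)]
    for batch in length_sorted_batches(utts, batch_size=2):
        print(batch)
```

Setting `batch_size=1` gives the other mitigation mentioned above, at the cost of throughput.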

Q: What are the key differences between the FireRedASR-LLM and FireRedASR-AED models?

A: FireRedASR-LLM is designed for maximum accuracy and end-to-end speech interaction, leveraging an LLM. FireRedASR-AED prioritizes computational efficiency while maintaining high performance, making it suitable as a speech representation module.

Q: How can I convert my audio to the required format?

A: Use the provided FFmpeg command:

ffmpeg -i input_audio -ar 16000 -ac 1 -acodec pcm_s16le -f wav output.wav

This converts the audio to 16 kHz, 16-bit PCM format.

Q: Where can I download the model files?

A: Model files can be downloaded from Hugging Face. Links are available in the project documentation. You also need to download Qwen2-7B-Instruct for FireRedASR-LLM-L.

Q: What Python version is required?

A: Python 3.10.
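The FFmpeg conversion from the FAQ can also be scripted when many files need preparing. The sketch below wraps the same command with `subprocess` and then checks the result with the standard `wave` module. It assumes `ffmpeg` is installed and on the PATH, and the function names are hypothetical.

```python
import subprocess
import wave


def build_ffmpeg_command(src, dst):
    """Build the FAQ's FFmpeg command as an argument list."""
    return ["ffmpeg", "-i", src,
            "-ar", "16000",          # resample to 16 kHz
            "-ac", "1",              # downmix to one channel
            "-acodec", "pcm_s16le",  # 16-bit little-endian PCM
            "-f", "wav", dst]


def convert_audio(src, dst):
    """Run the conversion; raises CalledProcessError if FFmpeg fails."""
    subprocess.run(build_ffmpeg_command(src, dst), check=True)


def is_expected_format(path):
    """Return True if a WAV file is 16 kHz, mono, 16-bit PCM."""
    with wave.open(path, "rb") as wav:
        return (wav.getframerate() == 16000
                and wav.getnchannels() == 1
                and wav.getsampwidth() == 2)
```

Passing the arguments as a list avoids shell quoting problems with file names that contain spaces.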

FireRedASR Alternatives

Omnilingual ASR is an open-source speech recognition system supporting over 1,600 languages, including hundreds never previously covered by any ASR technology.

Aero-1-Audio: Efficient 1.5B model for 15-min continuous audio processing. Accurate ASR & understanding without segmentation. Open source!

Transform your podcasts & chatbots with FireRedTTS-2: natural, multi-speaker long-form speech. Enjoy ultra-low latency & multilingual voice cloning.

Discover Step-Audio, the first production-ready open-source framework for intelligent speech interaction. Harmonize comprehension and generation, and support multilingual, emotional, and dialect-rich conversations.