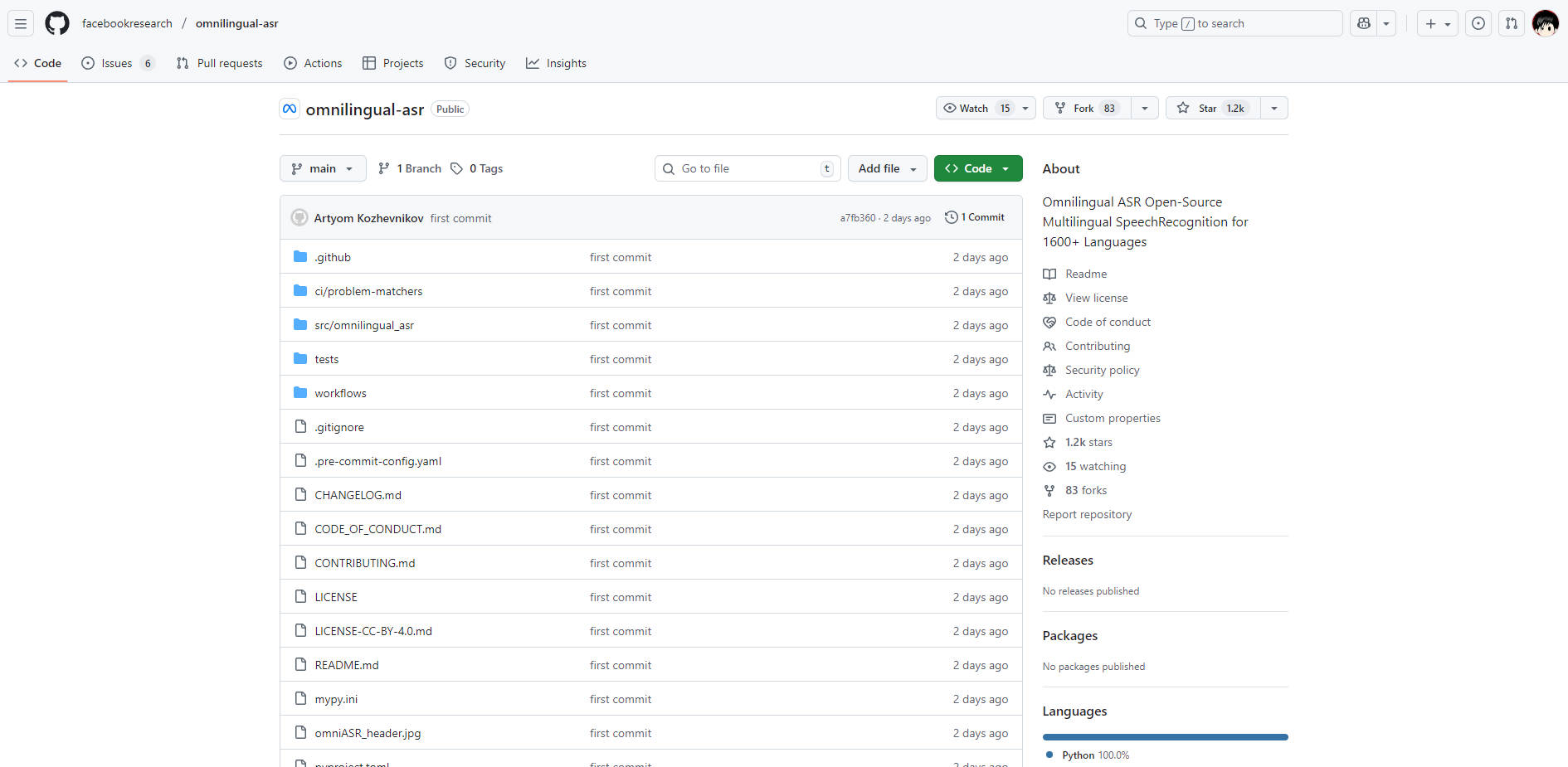

What is Omnilingual ASR?

Omnilingual ASR is a state-of-the-art, open-source automatic speech recognition system developed by Meta’s Fundamental AI Research (FAIR) team. This comprehensive suite of models addresses the critical challenge of global language inclusivity by supporting over 1,600 languages—including hundreds never before covered by any ASR technology. Designed for researchers, developers, and diverse language communities worldwide, Omnilingual ASR delivers high-quality, adaptable voice-to-text transcription at an unprecedented scale.

Key Features

Omnilingual ASR pairs a scaled-up wav2vec 2.0 speech encoder with LLM-style decoders, which lets one architecture serve both broad language coverage and in-context adaptation.

🌍 Massive Language Inclusivity

This system supports over 1,600 global languages, significantly expanding the scope of speech technology. Crucially, it includes support for over 500 low-resource languages that have historically lacked ASR coverage, opening up vital transcription capabilities for underserved communities and linguistic research.

🚀 Effortless Language Extension via Zero-Shot Learning

Unlike traditional ASR systems that demand massive, costly datasets for each new language, Omnilingual ASR uses zero-shot, in-context learning borrowed from LLMs. You can extend the system to an entirely new language or dialect with just a few paired audio-text examples, which sharply lowers the barrier to entry in both specialized expertise and high-end compute.

✨ State-of-the-Art Performance at Scale

The 7B-LLM-ASR model achieves top-tier accuracy across its language portfolio. For 78% of the 1,600+ supported languages, it keeps the Character Error Rate (CER) below 10%, a marked improvement, especially for long-tail and low-resource languages.
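To make the CER figure concrete: CER is commonly computed as the character-level edit distance between a reference transcript and the system output, divided by the reference length. The sketch below assumes plain Levenshtein distance with no text normalization. The function names are illustrative and are not part of Omnilingual ASR.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, substitutions."""
    prev = list(range(len(hyp) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]  # turning i reference characters into an empty hypothesis
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # delete a reference character
                            curr[j - 1] + 1,      # insert a hypothesis character
                            prev[j - 1] + cost))  # substitute, or match for free
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate as a percentage of reference characters."""
    if not ref:
        raise ValueError("reference transcript must be non-empty")
    return 100.0 * edit_distance(ref, hyp) / len(ref)


if __name__ == "__main__":
    # One wrong character out of eleven in the reference.
    print(round(cer("hello world", "hallo world"), 2))
```

Under this definition, a CER below 10% means fewer than one character edit per ten reference characters on average. Real evaluations usually normalize casing and punctuation first, so published numbers depend on those choices.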

⚙️ Versatile and Scalable Model Family

Omnilingual ASR provides a flexible suite of models tailored for diverse deployment needs. You can choose from lightweight 300M versions designed for efficient use on low-power devices, up to the powerful 7B models which deliver maximum accuracy for demanding, high-stakes use cases.
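As a rough guide for matching a model to hardware, the memory taken by the weights alone is about the parameter count times the bytes per parameter. The sketch below assumes the size labels are exact parameter counts and uses a hypothetical `headroom` fraction to leave space for activations and buffers. None of these names come from the Omnilingual ASR codebase.

```python
from typing import Optional

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2}

# Assumption: the size labels from the text are treated as exact counts.
MODEL_PARAMS = {"300M": 300_000_000, "7B": 7_000_000_000}


def weight_memory_gb(num_params: int, dtype: str = "fp16") -> float:
    """Memory for the weights only, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def largest_model_that_fits(budget_gb: float, dtype: str = "fp16",
                            headroom: float = 0.5) -> Optional[str]:
    """Return the largest model whose weights fit in `headroom` of the budget.

    `headroom` is a hypothetical safety factor, not a measured value.
    """
    usable = budget_gb * headroom
    fitting = [name for name, n in MODEL_PARAMS.items()
               if weight_memory_gb(n, dtype) <= usable]
    return max(fitting, key=MODEL_PARAMS.get) if fitting else None


if __name__ == "__main__":
    for budget in (1, 4, 40):
        print(budget, "GB ->", largest_model_that_fits(budget))
```

At 16-bit precision this works out to about 0.6 GB of weights for a 300M model and 14 GB for a 7B model, before counting activations. Actual requirements should be measured on the target device.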

Use Cases

Omnilingual ASR empowers researchers, developers, and language advocates to build more inclusive and functional voice-enabled applications.

1. Archiving and Analyzing Low-Resource Language Data: Local communities and linguistic researchers can use Omnilingual ASR to transcribe historical or newly recorded speech from low-resource languages that lack existing AI coverage. This capability facilitates the creation of searchable, shareable text corpora, aiding in language preservation and advanced scholarly analysis.

2. Developing Cross-Platform, Multilingual Applications: Developers can integrate the suite of models to deploy ASR solutions tailored to specific hardware constraints. For instance, the lightweight 300M models enable accurate, on-device transcription for mobile or embedded systems, while the 7B models can power high-accuracy, server-side transcription services supporting hundreds of languages simultaneously.

3. Accelerating Speech Technology Research: Researchers can build on the accompanying Omnilingual ASR Corpus (the largest ultra-low-resource spontaneous ASR dataset ever released), along with the comprehensive training recipes and the foundational Omnilingual wav2vec 2.0 model. This enables rapid experimentation, fine-tuning, and advancement of speech-related tasks beyond standard ASR.

Unique Advantages

Omnilingual ASR stands apart by fundamentally redefining the accessibility and scalability of automatic speech recognition technology.

- Unprecedented Long-Tail Coverage: Omnilingual ASR is the first large-scale ASR system to successfully transcribe over 500 languages never before covered by AI, making speech technology truly global and inclusive.

- Ease of Extension: The framework is designed to be extended to entirely new languages with only a few paired audio-text examples and little specialized expertise. By harnessing LLM-style in-context learning, you bypass the usual requirement for massive training sets and high-end computing resources.

- Open-Source Foundation: Released by Meta’s FAIR team under the permissive Apache 2.0 license, the entire system is designed for community adoption. This open architecture, built on the PyTorch ecosystem and fairseq2, ensures maximum transparency, collaboration, and integration flexibility for developers worldwide.

Conclusion

Omnilingual ASR delivers the performance and adaptability required to bring accurate speech recognition to every language community globally. By combining state-of-the-art accuracy with unparalleled linguistic scale and an open-source framework, it offers a powerful foundation for the next generation of inclusive voice technology.

Explore how Omnilingual ASR can help you expand your research or deploy voice solutions for languages previously left behind.

FAQ

Q: What is the primary difference between Omnilingual ASR and previous large-scale ASR systems?

A: The primary difference is the breadth of coverage and the method of extension. While previous systems focused heavily on high-resource languages, Omnilingual ASR covers over 1,600 languages, critically including hundreds of low-resource languages. Furthermore, it introduces in-context learning capabilities, allowing developers to add support for a new language with just a few paired examples, eliminating the need for large-scale data collection and expensive re-training.

Q: What is the licensing structure for Omnilingual ASR?

A: Omnilingual ASR is fully open-source. The model assets are released under a permissive Apache 2.0 license, and the associated data (such as the Omnilingual ASR Corpus) is provided under the CC-BY license. This open licensing encourages broad adoption and community contributions.

Q: Are there any current limitations regarding audio input?

A: Currently, the inference pipeline is optimized for shorter segments and accepts audio files shorter than 40 seconds. While this covers many standard use cases, the team is actively developing support for transcribing unlimited-length audio files in future updates to accommodate long-form recordings.
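Until long-form support arrives, one possible workaround for the 40-second limit is to split a long recording into shorter segments and transcribe each one separately. The sketch below only computes segment boundaries. The function name and the 39-second default (chosen to stay strictly under the limit) are assumptions for illustration, not part of the Omnilingual ASR pipeline.

```python
import math
from typing import List, Tuple


def split_into_segments(total_seconds: float,
                        max_seconds: float = 39.0) -> List[Tuple[float, float]]:
    """Return (start, end) times of equal-length segments, each <= max_seconds."""
    if max_seconds <= 0:
        raise ValueError("max_seconds must be positive")
    if total_seconds <= 0:
        return []
    # Use the fewest segments that respect the limit, then even out their length.
    count = math.ceil(total_seconds / max_seconds)
    step = total_seconds / count
    return [(i * step, min((i + 1) * step, total_seconds)) for i in range(count)]


if __name__ == "__main__":
    # A 100-second recording becomes three segments of about 33.3 seconds each.
    for start, end in split_into_segments(100.0):
        print(f"{start:.1f}s to {end:.1f}s")
```

Fixed boundaries like these can cut through a word. In practice you would probably move each cut to a nearby pause, for example with a voice activity detector, before transcribing the segments and joining the results.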

Omnilingual ASR Alternatives

- FireRedASR: Open-source speech recognition. Industrial-grade accuracy for Mandarin, English, dialects, & lyrics.

- Aero-1-Audio: Efficient 1.5B model for 15-min continuous audio processing. Accurate ASR & understanding without segmentation. Open source!

- AssemblyAI: Enhance your applications with powerful AI models for accurate transcription and understanding of human speech.