What is Hatchet.run?

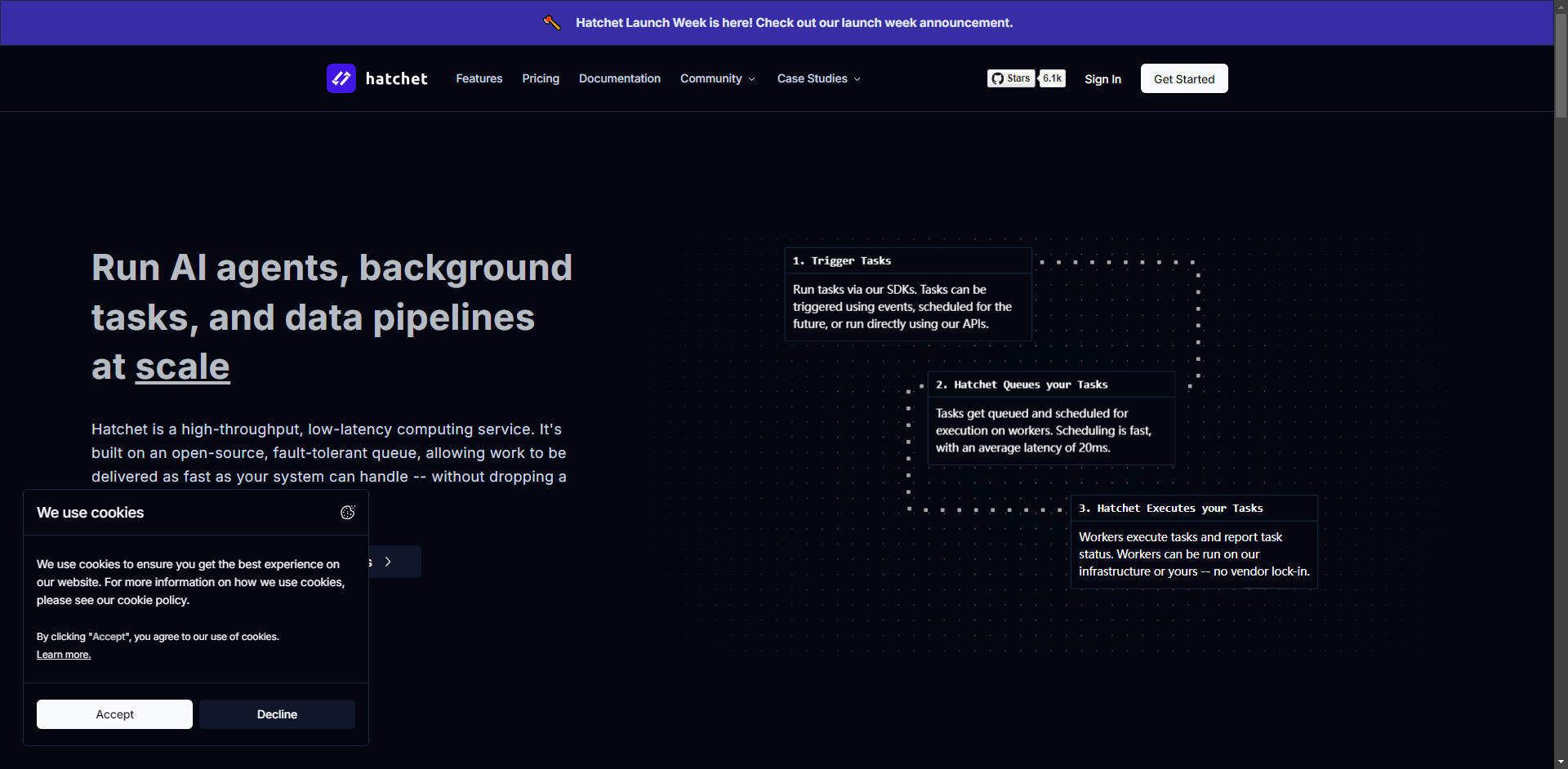

Hatchet is a high-throughput, low-latency orchestration platform for building resilient distributed web applications and agentic AI pipelines. It serves as a durable execution layer, so teams do not have to build and operate their own task queues or fragile pub/sub systems in order to scale. Engineering teams use Hatchet to distribute functions across workers, manage flow control, and ensure reliable task completion, which lets them focus on business logic rather than infrastructure durability.

Key Features

Hatchet provides the essential controls and durability required to run background tasks and data pipelines reliably at massive scale.

1. Durable and Fault-Tolerant Execution

Hatchet guarantees that work gets completed, even if your application crashes mid-task. Every task invocation is durably logged to PostgreSQL, enabling the system to track progress and automatically resume workflows exactly where they left off. This durable execution model eliminates lost work, prevents duplicate calls (crucial for LLM interactions), and ensures that user requests are never dropped, even during system failures.
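The idea of journaling each step and resuming after a crash can be illustrated with a small sketch. This is not Hatchet's SDK or its internal schema: the `run_durably` helper, the `journal` table, and the step names are hypothetical, and SQLite stands in for PostgreSQL so the example runs with the standard library alone.

```python
import json
import sqlite3


def run_durably(conn, run_id, steps):
    """Run (name, fn) steps in order, skipping any step already journaled."""
    # Hypothetical journal table; not Hatchet's real schema.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS journal ("
        "run_id TEXT, step TEXT, result TEXT, PRIMARY KEY (run_id, step))"
    )
    results = {}
    for name, fn in steps:
        row = conn.execute(
            "SELECT result FROM journal WHERE run_id = ? AND step = ?",
            (run_id, name),
        ).fetchone()
        if row is not None:
            # Step finished in an earlier attempt: reuse its recorded result.
            results[name] = json.loads(row[0])
            continue
        value = fn(results)
        # Persist the result before moving on, so a later crash
        # does not cause this step to run again.
        conn.execute(
            "INSERT INTO journal (run_id, step, result) VALUES (?, ?, ?)",
            (run_id, name, json.dumps(value)),
        )
        conn.commit()
        results[name] = value
    return results


calls = {"fetch": 0, "summarize": 0}


def fetch(_results):
    calls["fetch"] += 1
    return "raw text"


def summarize(results):
    calls["summarize"] += 1
    if calls["summarize"] == 1:
        raise RuntimeError("simulated crash mid-task")
    return results["fetch"].upper()


conn = sqlite3.connect(":memory:")
steps = [("fetch", fetch), ("summarize", summarize)]
try:
    run_durably(conn, "run-1", steps)
except RuntimeError:
    pass  # the first attempt died; the journal still holds the fetch result

# The second attempt resumes: fetch is not called again.
final = run_durably(conn, "run-1", steps)
```

In this sketch the second attempt reuses the journaled `fetch` result instead of calling the function again, which is the property that matters when a step is an expensive or non-repeatable call such as an LLM request.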

2. ⚡️ High-Throughput, Low-Latency Task Dispatch

The Hatchet Engine is optimized for speed, offering sub-25ms task dispatch latency for hot workers handling thousands of concurrent tasks. It uses assignment rules that manage concurrency, fairness, and priority without complex manual configuration, so your workers receive tasks at the rate they can handle.
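To make the notion of fairness concrete, here is a minimal round-robin sketch. It is only an assumption about what fair assignment can look like in general, not a description of Hatchet's scheduler; the `FairQueue` class and the tenant keys are hypothetical, and priority is left out for brevity.

```python
from collections import OrderedDict, deque


class FairQueue:
    """Round-robin across groups so one busy group cannot starve the rest."""

    def __init__(self):
        # group key -> deque of pending tasks, kept in rotation order
        self._groups = OrderedDict()

    def push(self, group, task):
        self._groups.setdefault(group, deque()).append(task)

    def pop(self):
        if not self._groups:
            return None
        # Take the next task from the group at the front of the rotation.
        group, tasks = next(iter(self._groups.items()))
        task = tasks.popleft()
        del self._groups[group]
        if tasks:
            # The group still has work: move it to the back of the rotation.
            self._groups[group] = tasks
        return task


queue = FairQueue()
for task in ("a1", "a2", "a3"):
    queue.push("tenant-a", task)
queue.push("tenant-b", "b1")

order = [queue.pop() for _ in range(4)]
```

Even though `tenant-a` enqueued three tasks first, `tenant-b` is served after a single `tenant-a` task rather than waiting behind all of them.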

3. ⚙️ Code-First Workflow Orchestration

Define complex logic as code that is easy to version and test. You can compose simple functions, called tasks, into parent/child relationships or Directed Acyclic Graphs (DAGs). This lets developers break large, complex operations into smaller, reusable steps, streamlining development and simplifying deployments across Python, TypeScript, and Go environments.
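As a rough illustration of composing tasks into a DAG, the sketch below uses plain Python and the standard library's `graphlib`. It deliberately does not use the Hatchet SDK; the `run_dag` helper and the task names are hypothetical, and a real orchestrator would run independent tasks on separate workers rather than in one loop.

```python
from graphlib import TopologicalSorter


def run_dag(tasks, deps):
    """Run tasks in dependency order.

    tasks: name -> function taking a dict of parent outputs
    deps:  name -> set of parent task names
    """
    outputs = {}
    order = []
    # static_order() yields every task after all of its parents.
    for name in TopologicalSorter(deps).static_order():
        parent_outputs = {parent: outputs[parent] for parent in deps.get(name, ())}
        outputs[name] = tasks[name](parent_outputs)
        order.append(name)
    return outputs, order


tasks = {
    "extract": lambda _: [1, 2, 3],
    "double": lambda inp: [x * 2 for x in inp["extract"]],
    "total": lambda inp: sum(inp["extract"]),
    "report": lambda inp: {"doubled": inp["double"], "total": inp["total"]},
}
deps = {
    "extract": set(),
    "double": {"extract"},
    "total": {"extract"},
    "report": {"double", "total"},
}

outputs, order = run_dag(tasks, deps)
```

Each task is an ordinary function, so it can be unit tested in isolation, and the dependency map lives in version control alongside the code.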

4. 📊 Built-in Observability and Alerting

Hatchet comes bundled with real-time monitoring tools to provide immediate visibility into your distributed system. You gain access to live dashboards, metrics, and comprehensive logging that correlates task failures directly with system logs. Customizable Slack and email-based alerting ensures you are immediately notified when tasks or workflows fail, minimizing detection and response time.

Use Cases

Hatchet excels in scenarios demanding high reliability, complex scheduling, and massive throughput.

1. Real-Time Data Ingestion and ETL Pipelines

For applications requiring up-to-date context, such as vector databases feeding Large Language Models (LLMs), Hatchet provides fast, reliable ETL (Extract, Transform, Load) functionality. You can define workflows that ingest, process, and update data sources at high throughput, using Hatchet's flow control to manage concurrency and prevent bottlenecks during large data spikes.

2. Scaling Agentic AI Systems

Hatchet is specifically designed to support the complexity of modern AI agents. Features like event-based triggering, child workflow spawning, and dynamic routing enable you to build multi-step, sophisticated agents that react to external events, manage long-running conversations, and coordinate across multiple external services reliably.

3. Flattening Application Load Spikes

If your application experiences unpredictable surges in traffic (e.g., flash sales, sudden viral growth), Hatchet's durable queue mechanism ingests all incoming requests instantly. It then flattens the execution curve, ensuring tasks are delivered to your workers at a controlled, sustainable rate, preventing worker overload and guaranteeing that critical user requests are processed without being dropped.
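The load-flattening pattern described above can be sketched with an in-memory queue and a fixed pool of workers. This is an illustration under stated assumptions, not Hatchet's implementation: the `drain` function and `max_concurrency` parameter are hypothetical, the queue here is not durable, and the short sleep stands in for real work.

```python
import asyncio


async def drain(requests, max_concurrency):
    """Accept every request up front, then process a bounded number at once."""
    queue = asyncio.Queue()
    for request in requests:
        queue.put_nowait(request)  # ingestion is immediate; nothing is rejected

    in_flight = 0
    peak = 0
    done = []

    async def worker():
        nonlocal in_flight, peak
        while True:
            try:
                request = queue.get_nowait()
            except asyncio.QueueEmpty:
                return  # queue is empty, this worker is finished
            in_flight += 1
            peak = max(peak, in_flight)
            await asyncio.sleep(0.001)  # stand-in for real work
            done.append(request)
            in_flight -= 1

    # The number of workers caps how many requests run concurrently.
    await asyncio.gather(*(worker() for _ in range(max_concurrency)))
    return done, peak


# A burst of 50 requests arrives at once; only 5 are ever in flight.
done, peak = asyncio.run(drain(range(50), max_concurrency=5))
```

All 50 requests complete, but the observed peak concurrency never exceeds the configured limit, which is the sense in which the spike is flattened rather than passed straight through to the workers.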

Conclusion

Hatchet empowers engineering teams to deploy resilient, scalable distributed applications without compromising on speed or reliability. By providing a unified platform for task orchestration, flow control, and fault tolerance, it significantly reduces operational overhead and development complexity.

Ready to build durable, scalable workflows? Explore the comprehensive documentation or get started quickly with Hatchet Cloud today.