What is Inferable?

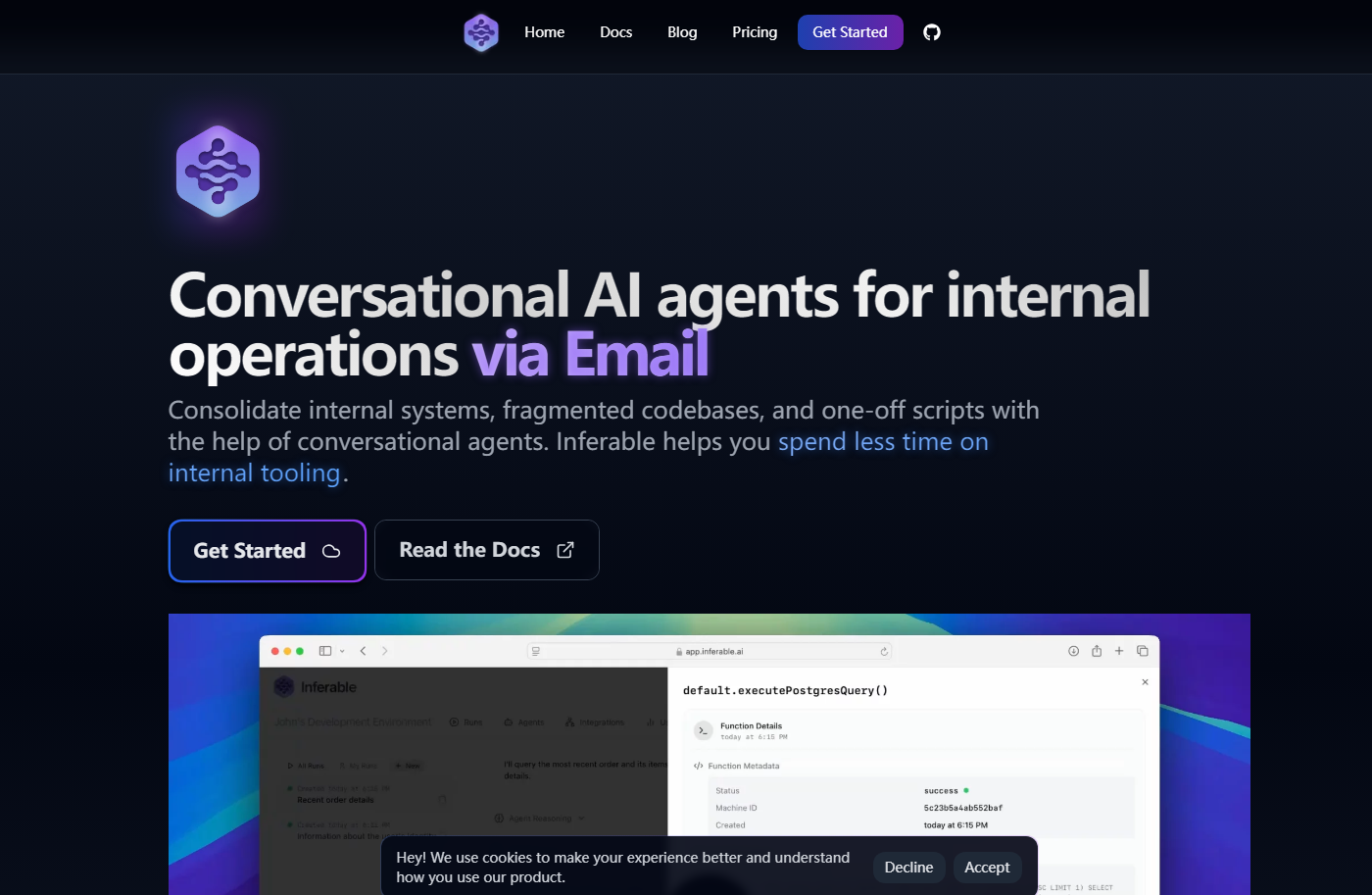

Inferable is an open-source platform designed to help developers and fast-moving engineering teams create conversational AI agents quickly and efficiently. Whether you're consolidating internal systems, automating workflows, or building AI-powered tools, Inferable simplifies the process by integrating seamlessly with your existing codebases, APIs, and data.

Key Features

🌟 Reuse Your Codebase

Decorate your existing functions and APIs without learning new frameworks. Inferable works with what you already have, saving you time and effort.
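
As a rough illustration of what "decorating" an existing function could look like, the sketch below wraps an unchanged business function in a small descriptor and registers it. `ToolDefinition`, `ToolRegistry`, and `getOrderStatus` are hypothetical names made up for this example; this is not the Inferable SDK's actual API.

```typescript
// Existing business logic, left exactly as it was.
function getOrderStatus(input: { orderId: string }): { status: string } {
  const known: Record<string, string> = { "A-100": "shipped" };
  return { status: known[input.orderId] ?? "unknown" };
}

// Hypothetical descriptor (an assumption, not an Inferable type):
// metadata an agent would need to decide when to call the function.
interface ToolDefinition<I, O> {
  name: string;
  description: string;
  func: (input: I) => O;
}

// Hypothetical in-memory registry standing in for an agent runtime.
class ToolRegistry {
  private tools = new Map<string, ToolDefinition<any, any>>();

  register<I, O>(tool: ToolDefinition<I, O>): void {
    this.tools.set(tool.name, tool);
  }

  call(toolName: string, input: unknown): unknown {
    const tool = this.tools.get(toolName);
    if (!tool) throw new Error(`unknown tool: ${toolName}`);
    return tool.func(input);
  }
}

const registry = new ToolRegistry();
registry.register({
  name: "getOrderStatus",
  description: "Look up the shipping status of an order by ID",
  func: getOrderStatus,
});

const lookup = registry.call("getOrderStatus", { orderId: "A-100" });
```

The point of the design is that `getOrderStatus` itself is never modified; only a thin description is added around it.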

🚀 Distributed Function Orchestration

Built on a distributed message queue with at-least-once delivery guarantees, Inferable ensures your AI automations are scalable and reliable, no matter the complexity.
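
At-least-once delivery means a function may occasionally receive the same message more than once, so handlers with side effects are usually written to be idempotent. The sketch below shows one common way to do that, deduplicating by message ID. The `Message` shape and `handleCharge` are assumptions for illustration, not part of Inferable.

```typescript
// Hypothetical message shape: the ID stays the same across redeliveries.
interface Message {
  id: string;
  payload: { account: string; amount: number };
}

const balances = new Map<string, number>();
// IDs already handled. A real deployment would keep this in durable storage.
const processedIds = new Set<string>();

function handleCharge(msg: Message): "applied" | "duplicate" {
  // A redelivered message is acknowledged without repeating the side effect.
  if (processedIds.has(msg.id)) return "duplicate";
  const current = balances.get(msg.payload.account) ?? 0;
  balances.set(msg.payload.account, current + msg.payload.amount);
  processedIds.add(msg.id);
  return "applied";
}

const chargeMsg: Message = { id: "job-1", payload: { account: "acme", amount: 25 } };
const firstDelivery = handleCharge(chargeMsg);
const secondDelivery = handleCharge(chargeMsg); // simulated redelivery
```

After both deliveries the `acme` balance has been incremented once, not twice.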

🤖 Managed Agent Runtime

Inferable includes a built-in ReAct agent that solves complex problems by reasoning step-by-step and calling your functions to handle sub-tasks.
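
The general shape of a ReAct loop (reason, act by calling a function, observe the result, repeat) can be sketched as follows. This is a toy illustration, not Inferable's agent: `scriptedModel` is a hard-coded stand-in for a language model, and the `Step` type and the `sum` and `max` tools are invented for the example.

```typescript
// A step the model can emit: call a tool, or finish with an answer.
type Step =
  | { kind: "act"; thought: string; tool: string; input: number[] }
  | { kind: "finish"; thought: string; answer: string };

type Tool = (input: number[]) => number;

const tools: Record<string, Tool> = {
  sum: (xs) => xs.reduce((a, b) => a + b, 0),
  max: (xs) => Math.max(...xs),
};

// Stand-in for an LLM: picks the next step from the observations so far.
function scriptedModel(observations: number[]): Step {
  if (observations.length === 0) {
    return { kind: "act", thought: "I need the total first.", tool: "sum", input: [2, 3, 5] };
  }
  if (observations.length === 1) {
    return { kind: "act", thought: "Now the largest value.", tool: "max", input: [2, 3, 5] };
  }
  return {
    kind: "finish",
    thought: "I have both numbers.",
    answer: `total=${observations[0]}, largest=${observations[1]}`,
  };
}

function runAgent(model: (obs: number[]) => Step, maxSteps = 5): string {
  const observations: number[] = [];
  for (let i = 0; i < maxSteps; i++) {
    const step = model(observations); // reason
    if (step.kind === "finish") return step.answer;
    const tool = tools[step.tool];
    if (!tool) throw new Error(`unknown tool: ${step.tool}`);
    observations.push(tool(step.input)); // act, then record the observation
  }
  throw new Error("step limit reached without an answer");
}

const agentAnswer = runAgent(scriptedModel);
// agentAnswer is "total=10, largest=5"
```

The step limit is a simple guard against a model that never decides to finish.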

🔒 On-Premise Execution

Your functions run on your own infrastructure, with no need for inbound connections or open ports. This significantly reduces your security attack surface.
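
One way to run functions without inbound connections is for the worker to initiate every exchange: it asks for work, runs it locally, and sends back the result. The sketch below models that pattern with an in-memory stand-in for a control plane. It assumes a pull-based design for illustration; `FakeControlPlane`, `Job`, and `runWorkerOnce` are hypothetical names and do not describe Inferable's actual protocol.

```typescript
interface Job { id: string; fn: string; input: string }
interface JobResult { id: string; output: string }

// In-memory stand-in for a remote control plane (an assumption for this sketch).
class FakeControlPlane {
  private pending: Job[] = [];
  readonly results: JobResult[] = [];

  enqueue(job: Job): void { this.pending.push(job); }
  // Called BY the worker: "give me the next job, if any".
  poll(): Job | undefined { return this.pending.shift(); }
  // Called BY the worker: "here is the result".
  submit(result: JobResult): void { this.results.push(result); }
}

// Functions that live on the worker's side of the network boundary.
const localFunctions: Record<string, (input: string) => string> = {
  shout: (s) => s.toUpperCase(),
};

// Drains the queue. Every interaction is a call made by the worker,
// so the worker never has to accept a connection.
function runWorkerOnce(cp: FakeControlPlane): number {
  let handled = 0;
  for (let job = cp.poll(); job !== undefined; job = cp.poll()) {
    const fn = localFunctions[job.fn];
    const output = fn ? fn(job.input) : `error: unknown function ${job.fn}`;
    cp.submit({ id: job.id, output });
    handled++;
  }
  return handled;
}

const controlPlane = new FakeControlPlane();
controlPlane.enqueue({ id: "1", fn: "shout", input: "hello" });
const handledCount = runWorkerOnce(controlPlane);
```

In a networked version, `poll` and `submit` would be outbound HTTPS requests, which is why no open port is needed on the worker side.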

👀 End-to-End Observability

Gain full visibility into your AI workflows and function calls without any additional configuration.

🛠️ Human-in-the-Loop

Pause and resume function executions through a simple API, so a human can step in when needed, whether that is minutes or months later.
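
A pause-and-resume flow can be sketched as a function that returns a "paused" state and a second entry point that continues from it once a person has decided. The names here (`RunState`, `startRefund`, `resumeRefund`) and the approval threshold of 100 are assumptions for illustration, not Inferable's API.

```typescript
type RunState =
  | { kind: "paused"; approvalId: string; refund: number }
  | { kind: "done"; message: string };

// Paused runs keyed by approval ID. A real system would persist these
// durably so a run could wait for a long time.
const pausedRuns = new Map<string, { refund: number }>();
let nextApproval = 1;

function startRefund(refund: number): RunState {
  // Small refunds complete immediately; larger ones wait for a human.
  if (refund <= 100) return { kind: "done", message: `refunded ${refund}` };
  const approvalId = `approval-${nextApproval++}`;
  pausedRuns.set(approvalId, { refund });
  return { kind: "paused", approvalId, refund };
}

function resumeRefund(approvalId: string, approved: boolean): RunState {
  const run = pausedRuns.get(approvalId);
  if (!run) throw new Error(`no paused run for ${approvalId}`);
  pausedRuns.delete(approvalId);
  return {
    kind: "done",
    message: approved ? `refunded ${run.refund}` : `refund of ${run.refund} rejected`,
  };
}

const smallRun = startRefund(40);
const largeRun = startRefund(500);
const resumedRun =
  largeRun.kind === "paused" ? resumeRefund(largeRun.approvalId, true) : largeRun;
```

Because the paused state is plain data, the time between `startRefund` and `resumeRefund` does not matter to the logic.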

Use Cases

Internal Operations Automation

Consolidate fragmented codebases and one-off scripts into conversational AI agents that handle repetitive tasks, freeing up your team to focus on higher-value work.

Complex Workflow Orchestration

Use Inferable’s ReAct agent to break down complex workflows into manageable steps, ensuring reliable execution even for tasks that take weeks to complete.

Secure, On-Premise AI Solutions

Build and deploy AI agents on your own infrastructure, maintaining full control over your data and compute resources.

Why Choose Inferable?

Open-Source & Self-Hostable

Inferable is completely open-source and can be self-hosted on your infrastructure, giving you full control over your data and compute.

Enterprise-Ready

Designed to adapt to your existing architecture, Inferable supports BYO models and offers managed cloud options with auto-scaling and high availability.

Developer-Friendly

With SDKs for Node.js, Golang, and .NET (and more on the way), Inferable provides a delightful developer experience, enabling you to go from zero to production in hours.

FAQ

Q: Where does my data reside?

A: All data processing occurs within your environment. Inferable’s SDK only sends function outputs to the control plane, ensuring sensitive data like environment variables and secrets remain secure.

Q: Can I use my own models?

A: Yes! Inferable is agnostic to the models you use in your functions. Enterprise customers can also bring their own models for fully integrated use within the managed runtime.

Q: How does Inferable handle data privacy?

A: Data is never stored by AI models beyond the context of your specific run. The control plane does not see the data your functions process internally, only the outputs they return, and all model providers guarantee zero data retention.