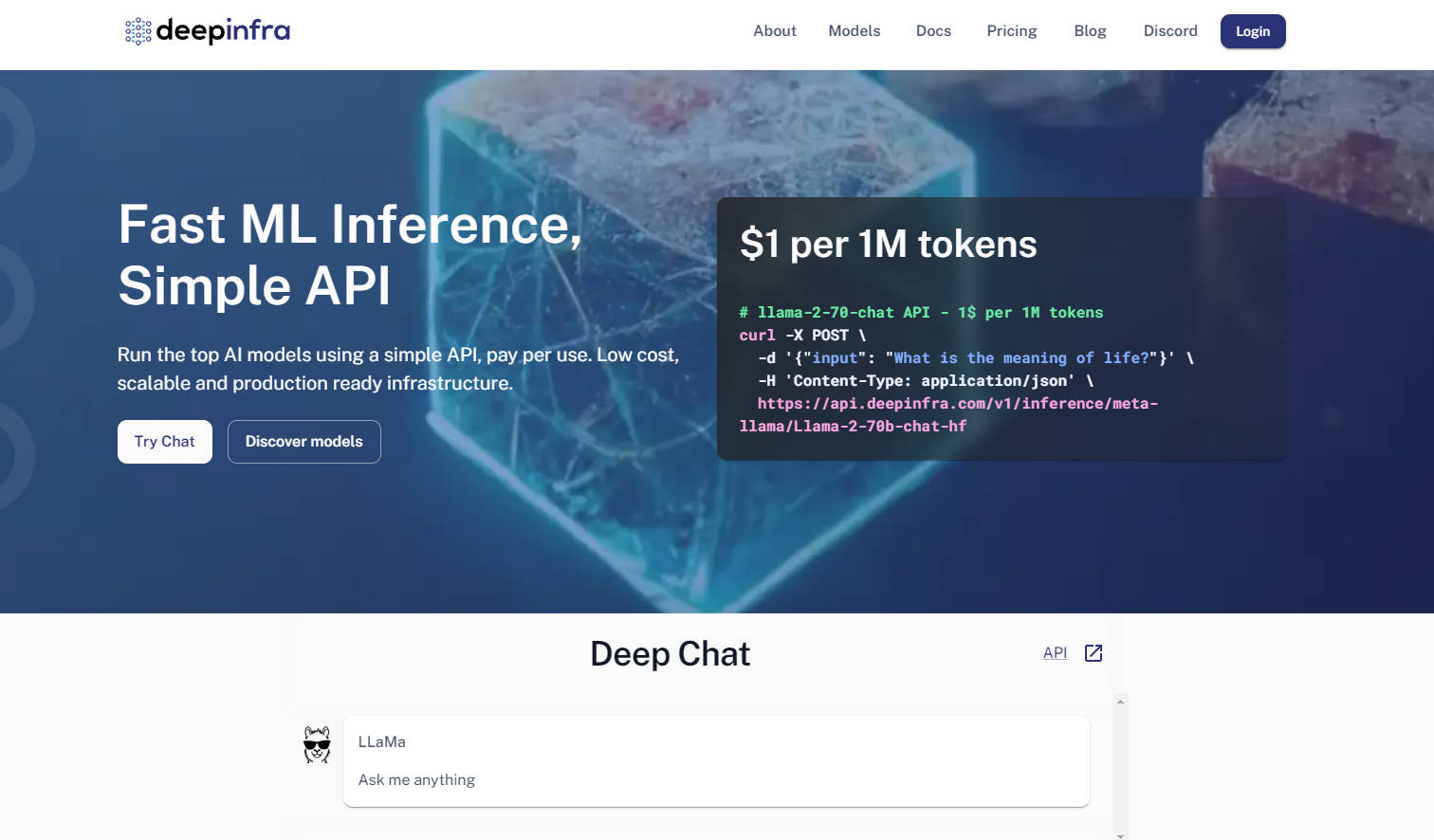

What is DeepInfra?

Developers building with large language models often face two challenges: deploying models efficiently in production and staying flexible as the open-source ecosystem changes quickly. DeepInfra is a dedicated inference cloud built to address both, giving you a production-ready platform for deploying open-source AI models.

Key Features

OpenAI API Compatibility & Multimodal API: Use familiar OpenAI-compatible APIs (REST, plus Python and JS SDKs) for text, image, embedding, and speech tasks. If you already use OpenAI's ecosystem, migration and integration require only minimal code changes.

Extensive & Customizable Model Catalog: Access a catalog of popular open-source models such as Qwen, Mistral, Llama, and DeepSeek, updated regularly with new releases. You can also upload your own custom models or LoRA fine-tuned versions, giving you more control over what you deploy.

Cost-Optimized & Auto-Scalable Infrastructure: Inference costs are significantly lower than many alternatives, especially for embedding services and high-throughput scenarios. Built-in auto-scaling and serverless GPU instances mean you pay only for the compute you use, with no idle capacity to pay for.

Dedicated GPU Instances for Advanced Workloads: Gain exclusive access to dedicated GPU instances within containers, suitable for both high-performance inference and small-scale training. This provides greater control and power for complex research and development needs beyond standard API calls.
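The OpenAI-compatible API mentioned under Key Features can be illustrated with a minimal sketch. It builds a chat completion request using only the Python standard library. The base URL, the `build_chat_request` and `send` helpers, and the model name are assumptions made for illustration, so check DeepInfra's documentation for the current endpoint and model identifiers.

```python
import json
import urllib.request

# Assumption: base URL of the OpenAI-compatible endpoint; confirm in the provider docs.
BASE_URL = "https://api.deepinfra.com/v1/openai"


def build_chat_request(api_key, model, user_message, base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and decode the JSON response (requires network access)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# "example-org/example-model" is a placeholder, not a real catalog entry.
request = build_chat_request("YOUR_API_KEY", "example-org/example-model", "Hello")
print(request.full_url)
```

Because the request shape follows the OpenAI chat completions format, an existing OpenAI client would typically need only a different base URL and API key. Calling `send(request)` performs the actual network call.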

Use Cases

Powering Advanced AI Agents: Deploy cutting-edge open-source models for your AI agents or Retrieval-Augmented Generation (RAG) systems, leveraging high-throughput embedding services and low-cost inference to process vast amounts of data efficiently.

Custom Model Deployment for Specialized Tasks: Easily host your proprietary fine-tuned models (e.g., LoRA adaptations) on a secure, scalable platform. This enables businesses to deploy domain-specific AI solutions without the overhead of managing complex GPU infrastructure.

Rapid Prototyping and Scalable AI Apps: Quickly test and scale new AI applications using a broad selection of popular open-source models. DeepInfra's flexible API and auto-scaling capabilities accelerate your development cycle from concept to production.
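For the RAG use case above, the retrieval step usually comes down to ranking documents by the similarity of their embeddings to the query embedding. The sketch below shows that ranking with cosine similarity in plain Python. The three-dimensional vectors and document names are toy values made up for illustration. In a real system the vectors would come from an embedding model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, doc_vecs, k=2):
    """Return the ids of the k documents most similar to the query, best first."""
    scored = [(cosine_similarity(query_vec, vec), doc_id) for doc_id, vec in doc_vecs.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Toy embeddings (hypothetical); real embeddings have hundreds or thousands of dimensions.
DOCS = {
    "pricing": [0.9, 0.1, 0.0],
    "gpu-setup": [0.1, 0.9, 0.2],
    "privacy": [0.0, 0.2, 0.9],
}
query = [0.8, 0.3, 0.0]
print(top_k(query, DOCS, k=2))
```

The retrieved documents would then be placed in the prompt sent to the language model. At larger scale, the linear scan is normally replaced by a vector index.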

Why Choose DeepInfra?

DeepInfra distinguishes itself by focusing on the critical needs of the open-source AI community and production environments:

Cost Efficiency: DeepInfra prices aggressively, with significantly lower inference costs, particularly for embedding tasks and large-scale deployments. The savings can be substantial, making advanced AI accessible to more developers and businesses.

Unmatched Model Flexibility: Unlike many cloud providers, DeepInfra prioritizes the open-source ecosystem, providing rapid access to recent models such as DeepSeek-V3.1 and Qwen 2.5. You can also deploy private endpoints with custom weights or LoRA fine-tuned versions.

Production-Oriented Optimization: Built by a team with deep experience in low-latency, large-scale systems, DeepInfra's inference optimization stack (TensorRT-LLM, Triton, FP8/INT8 quantization) ensures your models run faster and more efficiently in production. This focus on kernel-level optimization means higher throughput and lower operational costs for you.

Data Privacy and Enterprise Compliance: DeepInfra emphasizes data privacy by not storing user request data, a critical aspect for enterprise clients requiring strict compliance and security standards.
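The INT8 quantization mentioned under Production-Oriented Optimization can be illustrated with a small sketch. This is generic symmetric per-tensor quantization written in plain Python. It is not DeepInfra's or TensorRT-LLM's implementation, and the function names and sample weights are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: returns (integer codes, scale)."""
    max_abs = max(abs(v) for v in values)
    # Map the largest magnitude to 127; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes, scale):
    """Recover approximate floating-point values from the integer codes."""
    return [c * scale for c in codes]


weights = [0.50, -1.27, 0.03, 1.00]  # hypothetical weights for illustration
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Each weight is stored in one byte instead of two or four, at the cost of a rounding error of at most half the scale per value. That trade is what allows higher throughput and lower memory use in production serving.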

Conclusion

DeepInfra empowers developers to harness the full potential of open-source AI models without the typical deployment complexities or prohibitive costs. It provides the robust, flexible, and cost-effective infrastructure needed to bring your AI innovations to production, driving the next wave of intelligent applications. Explore DeepInfra today and transform your open-source AI deployment strategy.

DeepInfra Alternatives

Lowest cold-starts to deploy any machine learning model in production stress-free. Scale from a single user to billions and pay only when they use it.

Sight AI: Unified, OpenAI-compatible API for decentralized AI inference. Smart routing optimizes cost, speed & reliability across 20+ models.

Stop struggling with AI infra. Novita AI simplifies AI model deployment & scaling with 200+ models, custom options, & serverless GPU cloud. Save time & money.

Accelerate your AI development with Lambda AI Cloud. Get high-performance GPU compute, pre-configured environments, and transparent pricing.

Create high-quality media through a fast, affordable API. From sub-second image generation to advanced video inference, all powered by custom hardware and renewable energy. No infrastructure or ML expertise needed.