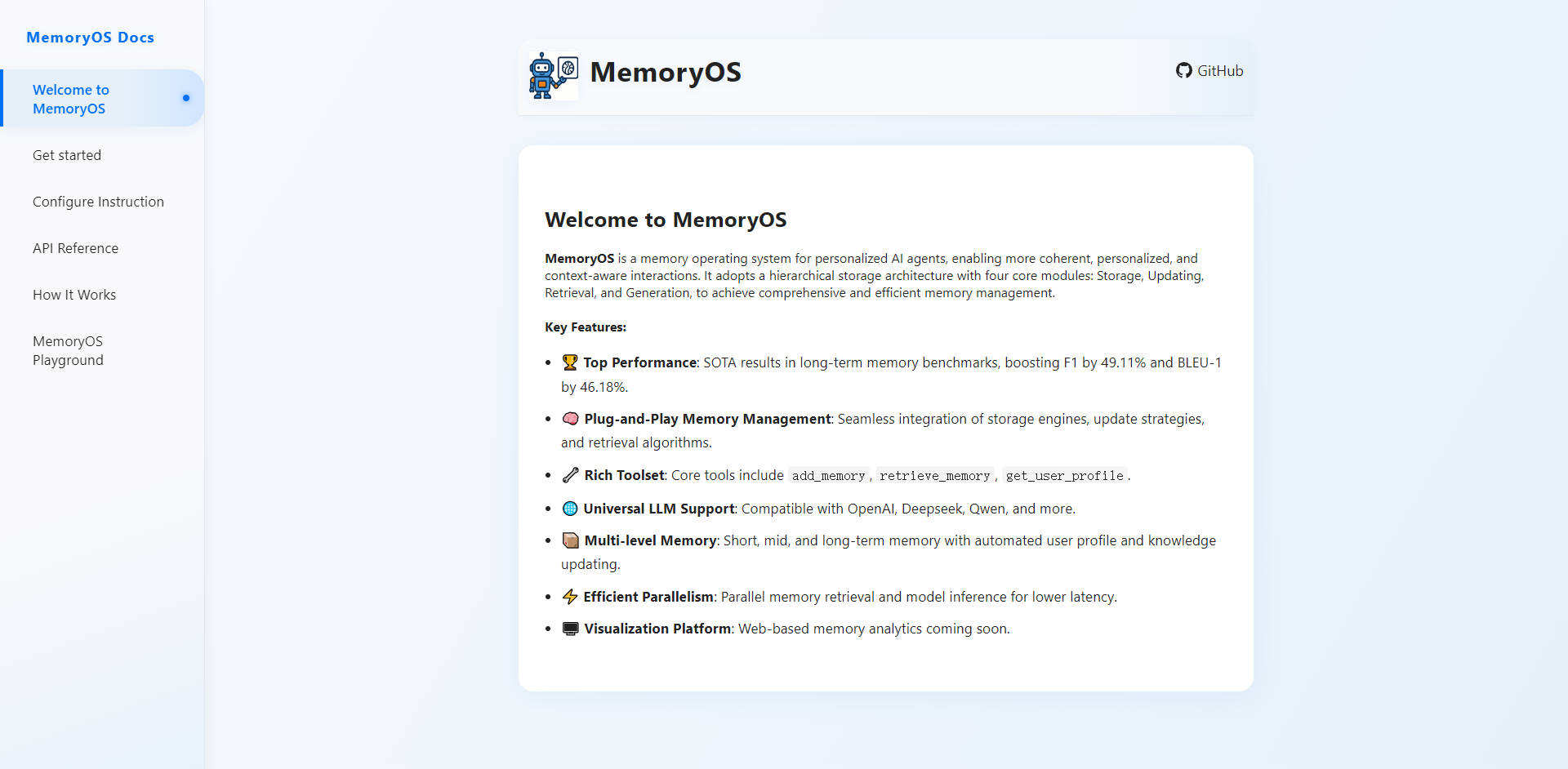

What is MemoryOS?

MemoryOS is a sophisticated memory operating system specifically engineered to provide AI agents with deep, consistent, and personalized context management. It solves the critical limitation of standard LLMs—the inability to maintain coherence and recall relevant details across thousands of interactions—by adopting a robust, hierarchical storage architecture. For developers and enterprises, MemoryOS ensures your AI agents deliver truly context-aware, human-like interactions rooted in a comprehensive understanding of the user's history and preferences.

Key Features

MemoryOS achieves stateful AI interactions through its modular, multi-level design, focusing on efficiency and depth of recall.

🧠 Multi-Level Hierarchical Memory: Emulating human cognition, MemoryOS segments context into Short-Term (current dialogue), Mid-Term (segmented, themed archives), and Long-Term Memory (persistent user profiles and general knowledge). This architecture ensures rapid access to immediate context while continuously refining deeply personalized insights.
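As a rough illustration of the three tiers, the sketch below models them as plain Python containers. The class, field, and method names (HierarchicalMemory, short_term_capacity, add_turn, remember_fact) are hypothetical and are not the MemoryOS API. The overflow rule, where the oldest turn moves to mid-term once short-term is full, is an assumption made for the example.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class HierarchicalMemory:
    """Toy three-tier store. All names are illustrative, not the MemoryOS API."""
    short_term_capacity: int = 3
    short_term: deque = field(default_factory=deque)  # current dialogue turns
    mid_term: list = field(default_factory=list)      # archived turns awaiting analysis
    long_term: dict = field(default_factory=dict)     # persistent user profile facts

    def add_turn(self, user: str, agent: str) -> None:
        """Append a dialogue turn; overflow moves the oldest turn to mid-term."""
        self.short_term.append((user, agent))
        while len(self.short_term) > self.short_term_capacity:
            self.mid_term.append(self.short_term.popleft())

    def remember_fact(self, key: str, value: str) -> None:
        """Record a durable fact about the user in the long-term profile."""
        self.long_term[key] = value


memory = HierarchicalMemory(short_term_capacity=2)
memory.add_turn("Hi, I'm Ana.", "Nice to meet you, Ana.")
memory.add_turn("I prefer Python.", "Noted.")
memory.add_turn("What's a decorator?", "A function that wraps another function.")
memory.remember_fact("preferred_language", "Python")

print(len(memory.short_term), len(memory.mid_term), memory.long_term)
```

With a capacity of two, the third turn pushes the first one into the mid-term list, while the profile fact sits in the long-term store independently of the dialogue flow.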

🔄 Dynamic, Heat-Based Profile Updating: The system utilizes dynamic update strategies and heat-based algorithms. When mid-term segments are frequently accessed or contain high-value interactions, the system automatically analyzes and extracts critical user facts (e.g., specific preferences or domain knowledge) to update the Long-Term User Profile, ensuring the AI agent is always learning and adapting.
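To make the idea of "heat" concrete, here is a minimal sketch of one way such a score could be computed. The formula (a weighted sum of visit count, segment length, and a recency term), the weights, the threshold, and all names are assumptions for illustration; the text above does not specify the actual algorithm.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    topic: str
    visits: int                # how often retrieval has touched this segment
    turns: int                 # number of dialogue turns stored in it
    hours_since_access: float  # time since the segment was last used


def heat(seg: Segment, alpha: float = 1.0, beta: float = 0.5,
         gamma: float = 2.0, half_life_hours: float = 24.0) -> float:
    """Hypothetical heat score: weighted visits + length + recency."""
    # Recency halves every `half_life_hours` without access.
    recency = 0.5 ** (seg.hours_since_access / half_life_hours)
    return alpha * seg.visits + beta * seg.turns + gamma * recency


def segments_to_promote(segments: list, threshold: float = 5.0) -> list:
    """Return the segments hot enough to trigger a profile update."""
    return [s for s in segments if heat(s) >= threshold]


hot = Segment("coffee preferences", visits=4, turns=6, hours_since_access=0)
cold = Segment("weather small talk", visits=0, turns=2, hours_since_access=48)

promoted = segments_to_promote([hot, cold])
print([s.topic for s in promoted])
```

Only the frequently accessed segment crosses the threshold, so only its contents would be passed on for fact extraction into the long-term user profile.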

🚀 Validated Performance Gains: MemoryOS has demonstrated state-of-the-art (SOTA) results on long-term memory benchmarks, with a reported 49.11% improvement in F1 score and 46.18% in BLEU-1. These measurable gains help your agents remain contextually accurate, even after thousands of turns.

🛠️ Integrated Toolset & Plug-and-Play Management: Developers access core memory functions (e.g., add_memory, retrieve_memory, get_user_profile) through a standardized interface. The core engine is designed for seamless integration, allowing developers to plug in different storage engines, update strategies, and retrieval algorithms without complex rework.

⚡ Efficient Parallel Retrieval: To maintain low latency, MemoryOS runs memory retrieval and model inference in parallel. This design lets even complex queries that need context from all memory levels (short-term history, mid-term segments, and long-term profiles) be answered quickly, so the user experience does not stall.
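The retrieval half of this design can be sketched with Python's standard thread pool. The three fetch functions below are hypothetical stand-ins for the real tier lookups, and the sleep calls only simulate I/O latency; none of this is taken from the MemoryOS codebase.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch_short_term(query: str) -> list:
    time.sleep(0.05)  # stand-in for lookup latency
    return [f"recent turn about {query}"]


def fetch_mid_term(query: str) -> list:
    time.sleep(0.05)
    return [f"archived segment about {query}"]


def fetch_profile(query: str) -> list:
    time.sleep(0.05)
    return ["profile: prefers concise answers"]


def retrieve_context(query: str) -> dict:
    """Query all three tiers concurrently and collect results per tier."""
    fetchers = {
        "short_term": fetch_short_term,
        "mid_term": fetch_mid_term,
        "long_term": fetch_profile,
    }
    with ThreadPoolExecutor(max_workers=len(fetchers)) as pool:
        # Submit every lookup first so they overlap, then gather results.
        futures = {tier: pool.submit(fn, query) for tier, fn in fetchers.items()}
        return {tier: future.result() for tier, future in futures.items()}


context = retrieve_context("billing")
print(context)
```

Because all lookups are submitted before any result is awaited, total latency approaches that of the slowest tier rather than the sum of all three.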

Use Cases

Integrating MemoryOS transforms generic AI agents into specialized partners capable of long-term relationship management and complex task execution.

Enterprise Customer Success Agents

Implement an AI agent that remembers a customer's entire support history, product configuration, and specific pain points from previous quarters. Rather than starting over with every chat session, the agent instantly accesses the comprehensive User Profile, providing tailored solutions and proactive advice, significantly boosting resolution rates and customer satisfaction.

Personalized Educational Tutors

Deploy AI tutors that track student progress, learning preferences, and knowledge gaps over an entire academic year. The long-term knowledge base stores successful teaching strategies and areas requiring reinforcement. When the student asks a question, the agent retrieves context not just from the current session but from months of accumulated learning data, delivering hyper-personalized instruction.

Developer and Code Agents

For agents assisting software developers (like those integrated into IDEs), MemoryOS maintains project state across weeks of development. The agent remembers the specific architectural constraints, preferred coding styles, chosen libraries, and historical bugs related to a repository, enabling it to generate coherent, contextually appropriate code suggestions and documentation updates without needing constant re-instruction.

Unique Advantages

MemoryOS is built on architectural insights that move beyond simple vector databases, offering a sophisticated cognitive layer that ensures performance and ease of use.

Zero-Loss Performance Over Time: The memory engine uses techniques such as topic clustering and temporal decay algorithms to keep retrieval fast and accurate, returning results within seconds even across thousands of dialogue turns. This counters the "short-term memory loss" common in standard conversational AI.
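Temporal decay can be pictured as a relevance score that shrinks with age. The sketch below is an assumption-laden illustration: word overlap (Jaccard similarity) stands in for whatever similarity measure the engine actually uses, the 30-day half-life is arbitrary, and topic clustering is not covered.

```python
def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity between two strings, in [0, 1]."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def decayed_score(query: str, text: str, age_days: float,
                  half_life_days: float = 30.0) -> float:
    """Similarity scaled by an exponential decay on the memory's age."""
    decay = 0.5 ** (age_days / half_life_days)
    return jaccard(query, text) * decay


def rank(query: str, memories: list, top_k: int = 2) -> list:
    """memories is a list of (text, age_days); return the top_k texts."""
    ordered = sorted(
        memories,
        key=lambda m: decayed_score(query, m[0], m[1]),
        reverse=True,
    )
    return [text for text, _ in ordered[:top_k]]


memories = [
    ("user ordered dark roast coffee", 90),   # close match, but stale
    ("user asked about coffee grinders", 0),  # weaker match, but fresh
    ("user booked a flight", 1),              # unrelated
]
top = rank("dark roast coffee", memories)
print(top)
```

Here the fresh but weaker match outranks the stale but closer one, which is the trade-off a decay term introduces; the half-life controls how quickly older memories lose ground.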

Simplified Integration via MCP Server: The MemoryOS MCP (Model Context Protocol) Server gives developers a standardized, low-barrier way to integrate advanced memory management capabilities. This out-of-the-box approach supports seamless, one-click integration with mainstream agent clients such as Cline and Cursor.

Automated Personalization (Zero Development Overhead): Unlike systems that require manual prompt engineering for personality, MemoryOS automatically distills a detailed user profile from historical dialogue alone. This delivers highly personalized, relationship-based responses without requiring developers to write complex, case-specific personalization logic.

Verifiable Performance Metrics: With validated SOTA results, including a 49.11% improvement in F1 score and a 46.18% improvement in BLEU-1 on long-term memory benchmarks, MemoryOS offers measurable evidence of improved context retention and response quality.

Conclusion

MemoryOS provides the essential operating system layer for building the next generation of truly intelligent, persistent, and personalized AI agents. By offering verified performance, multi-level context management, and effortless integration, it ensures your AI interactions are always coherent, deeply informed, and highly relevant.

Explore how MemoryOS can transform your AI agent capabilities and deliver unparalleled user experiences.

FAQ

Q: Which Large Language Models (LLMs) are compatible with MemoryOS?
A: MemoryOS is designed for broad compatibility. It supports integration with major LLM providers, including OpenAI, DeepSeek, Qwen, and others, so you can use your preferred foundation models while leveraging the MemoryOS memory architecture.

Q: How does MemoryOS prevent "memory overload" or latency issues with massive dialogue histories?
A: The system uses a dynamic load balancing mechanism that automatically adjusts memory strategies based on dialogue complexity and volume. Furthermore, the parallel retrieval engine ensures that even when retrieving context from massive long-term storage, the latency remains low, guaranteeing responsive, high-speed interactions.

Q: What is the purpose of the Mid-Term Memory layer?
A: Mid-Term Memory acts as a crucial processing hub between raw Short-Term dialogue and refined Long-Term knowledge. It uses segmentation and thematic archiving (similar to "paging" in an operating system) to consolidate raw interactions into meaningful, high-level topics. This segmentation process is essential for extracting accurate user profile updates and maintaining topical coherence across longer dialogue gaps.
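The thematic archiving step can be sketched as filing each archived turn under the best-matching topic segment, or opening a new segment when nothing matches. Everything below is hypothetical: keyword overlap stands in for the real similarity measure, and the names (TopicSegment, archive_turn, min_overlap) are invented for this example.

```python
from dataclasses import dataclass, field

STOPWORDS = {"a", "the", "i", "my", "is", "to", "for", "and", "of", "in"}


@dataclass
class TopicSegment:
    keywords: set = field(default_factory=set)
    turns: list = field(default_factory=list)


def keywords_of(text: str) -> set:
    """Lowercased words with punctuation and common stopwords removed."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return {w for w in words if w and w not in STOPWORDS}


def archive_turn(segments: list, turn: str, min_overlap: int = 1) -> TopicSegment:
    """File a turn under the best-matching segment, or open a new one."""
    kws = keywords_of(turn)
    best = max(segments, key=lambda s: len(s.keywords & kws), default=None)
    if best is None or len(best.keywords & kws) < min_overlap:
        best = TopicSegment()
        segments.append(best)
    best.keywords |= kws  # the segment's topic vocabulary grows over time
    best.turns.append(turn)
    return best


segments = []
for turn in [
    "My flight to Lisbon is delayed.",
    "Can you rebook the flight?",
    "Recommend a pasta recipe.",
    "Is the pasta recipe vegetarian?",
]:
    archive_turn(segments, turn)

print([len(s.turns) for s in segments])
```

The four turns end up in two segments, one per theme, which is the kind of grouping that makes later profile extraction operate on coherent topics rather than on an undifferentiated transcript.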