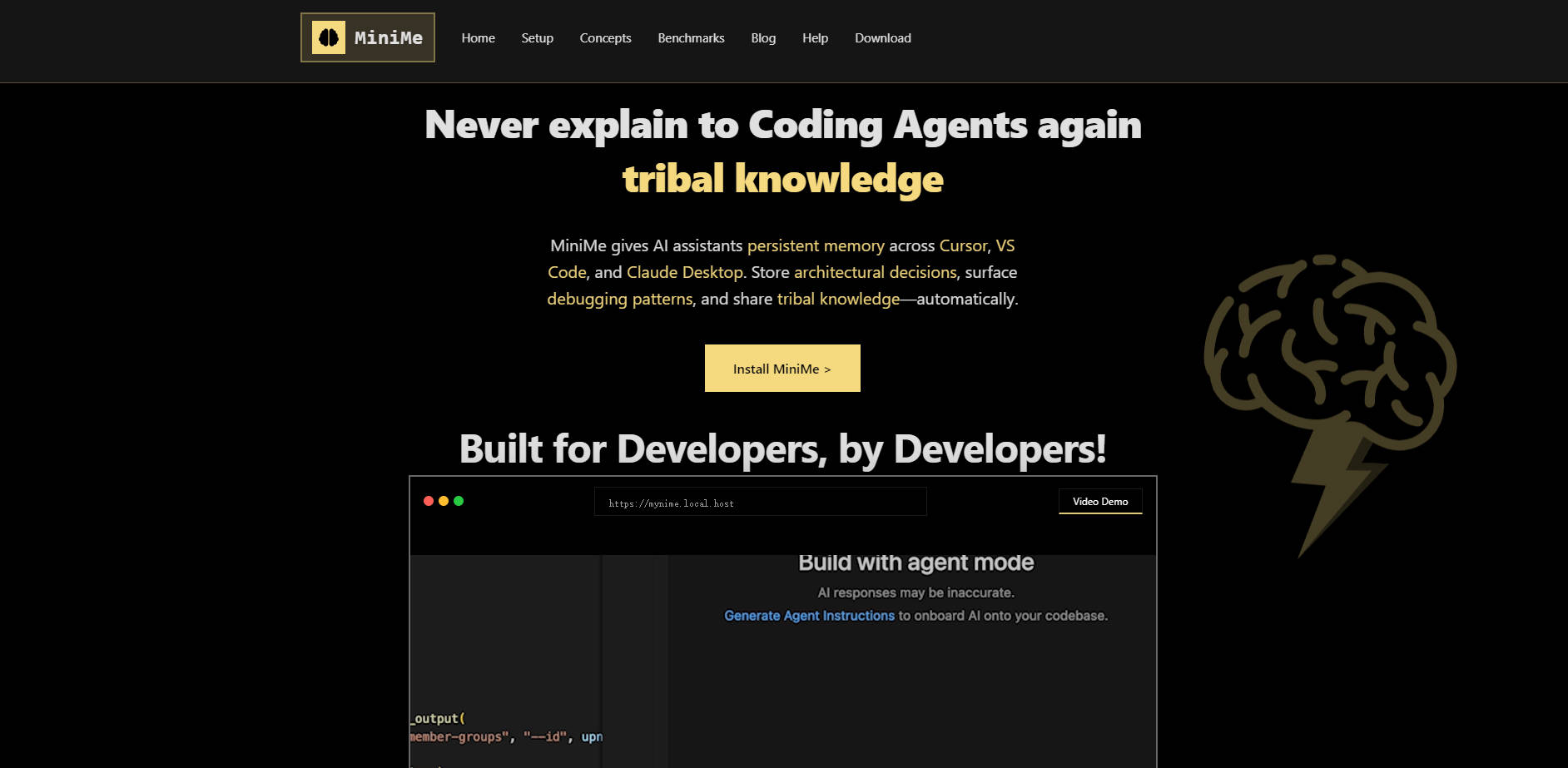

What is MiniMe?

MiniMe is an intelligent memory layer designed specifically for AI coding assistants. It addresses "context amnesia," where an assistant forgets everything it learned once a session ends. Whether you use Claude, Cursor, or VS Code, MiniMe turns your AI agents from session-limited helpers into persistent learning partners that understand your entire body of work. For developers and engineering teams, it ensures that architectural decisions, debugging patterns, and hard-won tribal knowledge never reset, so your context compounds instead of being lost.

Key Features

MiniMe functions as an AI Memory Operating System, going beyond simple data storage to provide deep analytical insights into your development history.

🧠 Multi-Strategy Hybrid Search for Precision Recall

MiniMe improves context retrieval by combining semantic, lexical (keyword), and categorical search strategies. With this hybrid approach, your AI assistant matches both the meaning of your query and the exact technical terms it contains. MiniMe achieves 85% precision@5 (on average, 85% of the top five results for a query are relevant), so you find the memories you need without the noise that plagues pure vector database solutions.
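MiniMe's own ranking method is not described here. As a minimal sketch of how hybrid retrieval can merge several strategies, the Python below fuses three ranked lists (semantic, lexical, categorical) with reciprocal rank fusion (RRF), a common fusion technique swapped in for illustration, and then computes precision@5 against a set of known-relevant results. The function names, memory IDs, and the conventional RRF constant `k = 60` are assumptions, not MiniMe's API.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of (hypothetical) memory IDs into one ranking.

    Each ID earns 1 / (k + rank) from every list it appears in (rank is 1-based),
    so items that several strategies rank highly rise to the top.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, memory_id in enumerate(ranking, start=1):
            scores[memory_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID so the order is deterministic.
    return sorted(scores, key=lambda mid: (-scores[mid], mid))


def precision_at_k(ranked: list[str], relevant: set[str], k: int = 5) -> float:
    """Fraction of the top-k results that are relevant (divides by k by convention)."""
    return sum(1 for mid in ranked[:k] if mid in relevant) / k


# Hypothetical per-strategy rankings for a single query.
semantic = ["m3", "m1", "m7", "m2", "m9"]
lexical = ["m1", "m4", "m3", "m8", "m2"]
categorical = ["m1", "m3", "m5", "m2", "m6"]

fused = reciprocal_rank_fusion([semantic, lexical, categorical])
print(fused[:5])  # → ['m1', 'm3', 'm2', 'm4', 'm5']
print(precision_at_k(fused, relevant={"m1", "m2", "m3", "m4"}))  # → 0.8
```

RRF is used here because it needs only rank positions, not comparable raw scores, which makes it easy to combine retrievers whose scoring scales differ. A production system might instead weight each strategy or learn the combination.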

💡 Persistent Pattern Discovery and Insight Generation

Unlike systems that store memories in flat, isolated buckets, MiniMe builds an evolving knowledge ecosystem. It automatically clusters related memories and discovers hidden connections across your entire project portfolio. This capability surfaces crucial insights you didn't know existed, such as detecting recurring bugs, tracking the effectiveness of previous solutions ("This fix worked 3/4 times, but failed in distributed systems"), and revealing shared architectural patterns across disparate projects.
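How MiniMe clusters memories and derives insights is not specified here. The sketch below shows only the bookkeeping behind a solution-effectiveness insight like the one quoted above, assuming each stored memory records which fix was applied, the context it was applied in, and whether it worked. The `FixOutcome` record and `summarize_fixes` function are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FixOutcome:
    """One recorded application of a fix (hypothetical memory record)."""
    fix_id: str
    context: str  # where the fix was applied, e.g. a project or system type
    succeeded: bool


def summarize_fixes(outcomes: list[FixOutcome]) -> dict[str, str]:
    """Build a one-line effectiveness summary for each fix."""
    by_fix: dict[str, list[FixOutcome]] = defaultdict(list)
    for outcome in outcomes:
        by_fix[outcome.fix_id].append(outcome)

    summaries: dict[str, str] = {}
    for fix_id, records in by_fix.items():
        wins = sum(r.succeeded for r in records)  # True counts as 1
        failed_in = sorted({r.context for r in records if not r.succeeded})
        summary = f"worked {wins}/{len(records)} times"
        if failed_in:
            summary += f", but failed in {', '.join(failed_in)}"
        summaries[fix_id] = summary
    return summaries


history = [
    FixOutcome("retry-with-backoff", "monolith", True),
    FixOutcome("retry-with-backoff", "monolith", True),
    FixOutcome("retry-with-backoff", "batch job", True),
    FixOutcome("retry-with-backoff", "distributed systems", False),
]
print(summarize_fixes(history)["retry-with-backoff"])
# → worked 3/4 times, but failed in distributed systems
```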

🔒 Zero-Trust Privacy Architecture

Designed for proprietary, government, and other sensitive development environments, MiniMe runs 100% locally via Docker, supporting both AMD64 and ARM64 architectures. Your code and proprietary context never leave your machine, and the deployment has zero cloud dependencies. This self-hosted approach maximizes data security and supports compliance while still providing powerful AI assistance.

🤝 Universal MCP-Native Tool Integration

MiniMe is built on the Model Context Protocol (MCP) standard, providing a single, unified "brain" that integrates natively with your favorite AI tools and IDEs, including Claude Desktop, Cursor, VS Code, Windsurf, and JetBrains. Because this memory persists across sessions, you can switch between projects, IDEs, or AI agents without re-explaining your codebase or past decisions.

Use Cases

MiniMe integrates seamlessly into the daily developer workflow, delivering measurable improvements in efficiency and knowledge retention.

Debug Faster with Historical Context

When you encounter a familiar issue, your AI assistant can instantly query MiniMe’s cross-project memory to surface the exact debugging patterns, root causes, and successful solutions used previously. Instead of starting the troubleshooting process from scratch, you rapidly identify systemic problems and track solution effectiveness, significantly reducing time spent on recurring bugs.

Instant Onboarding and Tribal Knowledge Transfer

New engineering team members can immediately query the team's shared MiniMe memory to understand established architectural decisions, coding conventions, and the rationale behind complex technical choices. This instant access to collective intelligence replaces lengthy documentation reviews and manual knowledge transfer meetings, accelerating time-to-productivity for every new hire.

Enforce Consistency with AI Guardrails

Store your team's engineering standards, architectural principles, and code review guidelines within MiniMe. These memories act as intelligent guardrails for your AI coding agents, ensuring that every code suggestion, implementation, or refactoring aligns consistently with established organizational best practices and conventions across all projects.

Unique Advantages

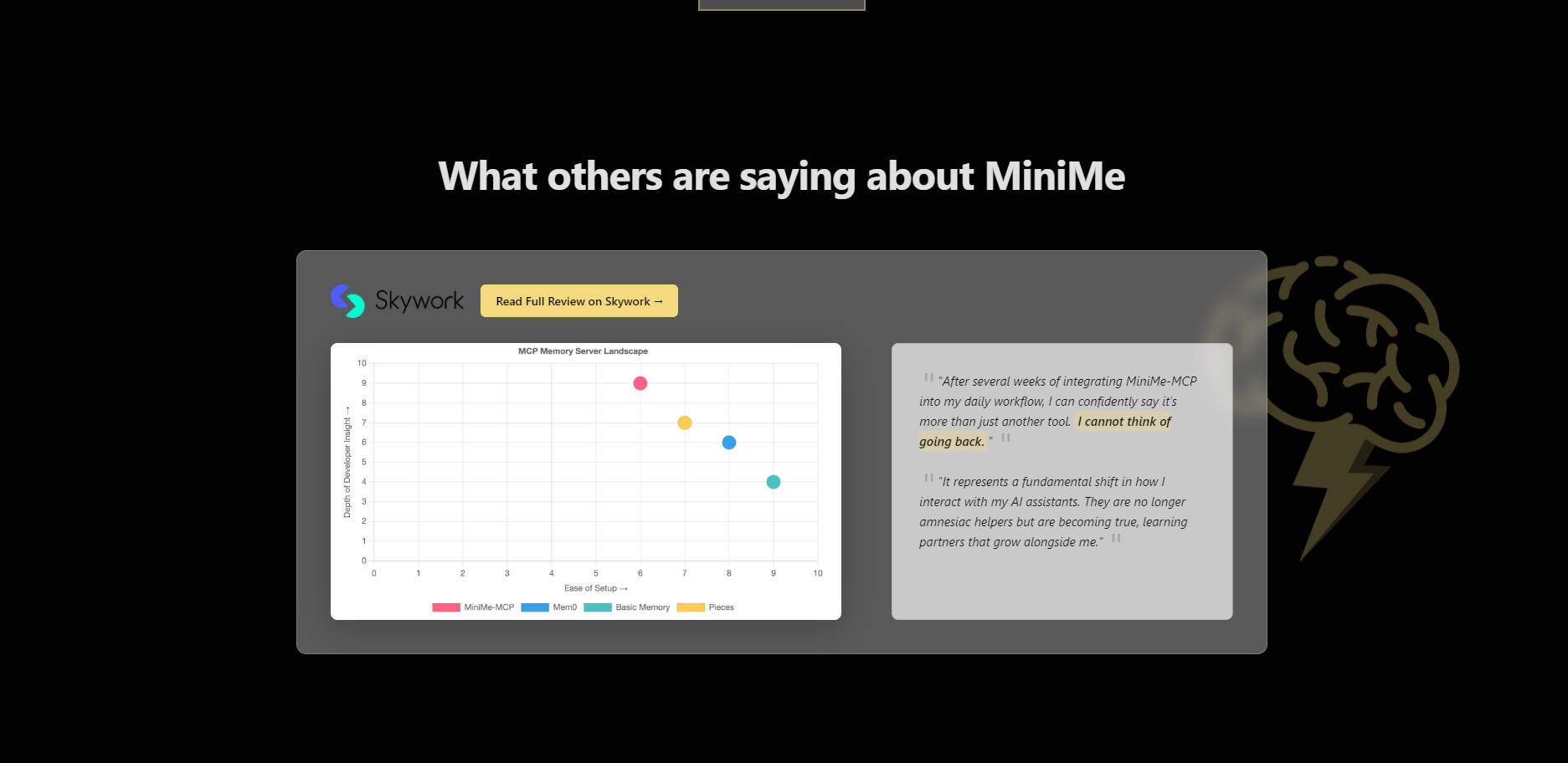

MiniMe is engineered to overcome the limitations of traditional AI memory solutions, which often rely on simple vector storage or keyword matching. Our distinct architecture delivers verifiable performance and superior value.

- Superior Search Accuracy: Many AI memory solutions are little more than vector databases that rely on semantic similarity alone, which often yields low precision. MiniMe's Hybrid Search (semantic + lexical + categorical) delivers 85% precision@5, so the context it retrieves is highly relevant and actionable.

- True Pattern Discovery: MiniMe goes beyond simple retrieval by automatically clustering memories and analyzing relationships, revealing complex, hidden connections (e.g., 'this fix worked 3/4 times but failed in distributed systems'). This deep analytical capability is provided automatically, requiring zero manual tagging or data preparation from the user.

- Freedom from Vendor Lock-in: By natively adopting the Model Context Protocol (MCP), MiniMe provides a single context layer that works with every major AI tool supporting MCP. Your accumulated development knowledge stays portable, so you can switch models or tools without losing valuable context.

- Uncompromising Privacy: The 100% local, self-hosted deployment via Docker ensures that your proprietary code and intellectual property never touch a third-party cloud service, meeting the stringent security requirements of modern development and enterprise environments.

Conclusion

MiniMe moves beyond simple AI assistance to provide a true knowledge operating system for developers. By providing persistent context, deep analytical intelligence, and robust privacy controls, it ensures your AI coding assistant understands not just what you are doing, but why you are doing it, and what works. Stop wasting time re-explaining context and start compounding your development knowledge.

MiniMe Alternatives

OpenMemory: The self-hosted AI memory engine. Overcome LLM context limits with persistent, structured, private, and explainable long-term recall.

Give your AI a reliable memory. MemoryPlugin ensures your AI recalls crucial context across 17+ platforms, ending repetition & saving you time and tokens.

Stop AI forgetfulness! MemMachine gives your AI agents long-term, adaptive memory. Open-source & model-agnostic for personalized, context-aware AI.

Supermemory gives your LLMs long-term memory. Instead of stateless text generation, they recall the right facts from your files, chats, and tools, so responses stay consistent, contextual, and personal.