What is OpenMemory?

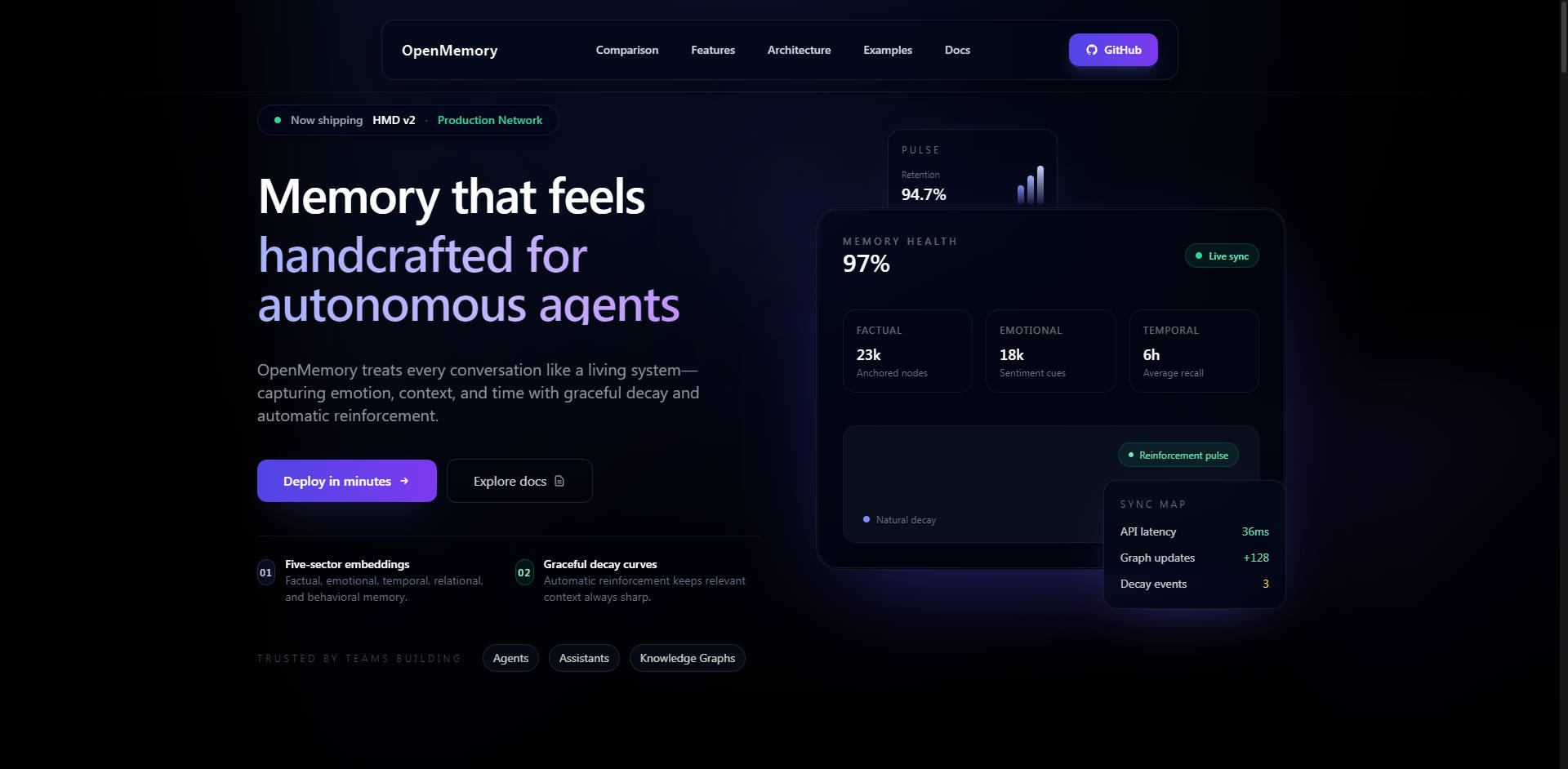

OpenMemory is a modular, self-hosted AI memory engine engineered to solve the critical problem of limited LLM context windows. It provides persistent, structured, and semantic long-term memory, enabling AI agents, assistants, and enterprise copilots to securely remember complex user data, preferences, and prior interactions across sessions and tasks. Designed for developers and organizations that prioritize control, performance, and transparency, OpenMemory ensures your AI applications retain context efficiently and privately.

Key Features

OpenMemory delivers true long-term memory through a specialized cognitive model, moving beyond simple vector storage to offer nuanced, explainable context retrieval.

🧠 Hierarchical Memory Decomposition (HMD) Architecture

HMD is the core innovation that distinguishes OpenMemory from traditional vector databases and simple memory layers. Instead of storing all context uniformly, HMD decomposes each memory into multi-sector embeddings (episodic, semantic, procedural, reflective, emotional). This biologically inspired architecture uses a sparse, single-waypoint linking graph to keep one canonical node per memory, which reduces data duplication and improves retrieval precision. The same structure underpins the lower latency and the explainable reasoning paths.
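As a rough illustration of the idea above, the sketch below models one canonical node per memory, per-sector embeddings, and at most one outgoing waypoint per node. It is not OpenMemory's actual implementation: the class names (`MemoryNode`, `MemoryGraph`), the content-based deduplication, and the ID scheme are all assumptions made for this example. Only the five sector names come from the description.

```python
from dataclasses import dataclass, field
from typing import Optional

# Sector names come from the text; everything else is a hypothetical illustration.
SECTORS = ("episodic", "semantic", "procedural", "reflective", "emotional")


@dataclass
class MemoryNode:
    """One canonical node per memory, holding one embedding per sector."""
    node_id: str
    content: str
    sector_vectors: dict[str, list[float]] = field(default_factory=dict)
    waypoint: Optional[str] = None  # at most one outgoing link keeps the graph sparse


class MemoryGraph:
    def __init__(self) -> None:
        self.nodes: dict[str, MemoryNode] = {}
        self._by_content: dict[str, str] = {}  # content -> canonical node id

    def add(self, content: str, sector_vectors: dict[str, list[float]]) -> MemoryNode:
        unknown = set(sector_vectors) - set(SECTORS)
        if unknown:
            raise ValueError(f"unknown sectors: {sorted(unknown)}")
        # Assumed dedup rule: identical content reuses the canonical node.
        existing_id = self._by_content.get(content)
        if existing_id is not None:
            node = self.nodes[existing_id]
            node.sector_vectors.update(sector_vectors)
            return node
        node = MemoryNode(f"m{len(self.nodes) + 1}", content, dict(sector_vectors))
        self.nodes[node.node_id] = node
        self._by_content[content] = node.node_id
        return node

    def link(self, source_id: str, target_id: str) -> None:
        if target_id not in self.nodes:
            raise KeyError(target_id)
        self.nodes[source_id].waypoint = target_id  # replaces any earlier link

    def path(self, start_id: str) -> list[str]:
        """Follow waypoints from start_id; the visited ids form a recall path."""
        seen: list[str] = []
        current: Optional[str] = start_id
        while current is not None and current not in seen:
            seen.append(current)
            current = self.nodes[current].waypoint
        return seen
```

Storing the same content twice with different sector vectors yields a single node carrying both embeddings, and `path()` returns the chain of node ids that a recall would traverse.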

🔒 Self-Hosted Security and Data Ownership

OpenMemory is self-hosted by design: you can deploy it locally, via Docker, or in your private cloud environment. This architecture guarantees 100% data ownership and privacy, eliminating the vendor data exposure inherent in hosted memory APIs. Security features include Bearer authentication for write APIs, PII scrubbing and anonymization hooks, optional AES-GCM content encryption, and tenant isolation for multi-user deployments.
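To make two of these features concrete, here is a minimal standard-library sketch of a Bearer check on a write path followed by a PII scrubbing hook. The function names (`scrub_pii`, `is_authorized`, `handle_write`), the regular expressions, and the response shape are hypothetical and do not describe OpenMemory's real API. AES-GCM encryption is left out because it would need a third-party cryptography library.

```python
import hmac
import re
from typing import Optional

# Hypothetical patterns; a real deployment would tune these to its own data.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def scrub_pii(text: str) -> str:
    """Replace e-mail addresses and phone-like digit runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def is_authorized(authorization_header: Optional[str], expected_token: str) -> bool:
    """Check an 'Authorization: Bearer <token>' header using a constant-time compare."""
    if not authorization_header:
        return False
    scheme, _, token = authorization_header.partition(" ")
    if scheme.lower() != "bearer" or not token:
        return False
    return hmac.compare_digest(token.encode(), expected_token.encode())


def handle_write(headers: dict[str, str], body: str, expected_token: str) -> dict:
    """Reject unauthenticated writes, then scrub content before it is stored."""
    if not is_authorized(headers.get("Authorization"), expected_token):
        return {"status": 401, "stored": None}
    return {"status": 200, "stored": scrub_pii(body)}
```

Running the scrubber before storage means the raw identifiers never reach the memory store, which is the main reason to apply it as a write-path hook instead of at read time.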

💻 VS Code Extension for Copilot Persistence

The dedicated VS Code Extension gives AI assistants persistent memory across complex coding sessions. It offers zero-config integration and automatically tracks every file edit, save, and open. Its Smart Compression feature reduces token usage by 30–70%, which lowers the cost of context delivery, and caching keeps query responses under 80 ms.

🔗 Deep Integration with LangGraph and MCP

OpenMemory is designed for modern AI orchestration. It features a dedicated LangGraph Integration Mode (LGM), which provides specialized REST endpoints to automatically map node outputs (e.g., observe, plan, reflect) to the appropriate multi-sector HMD storage. Furthermore, the built-in Model Context Protocol (MCP) HTTP Server allows MCP-aware agents (like Claude Desktop and custom SDKs) to connect immediately without requiring an SDK installation, enhancing framework-agnostic interoperability.
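The node-to-sector routing described above could look roughly like the following. The node names (observe, plan, reflect) come from the text, but the specific sector each one maps to, the fallback sector, and the payload fields are assumptions for illustration. The sketch only builds a JSON request body and does not call any real OpenMemory endpoint.

```python
import json

# Assumed mapping: the text names these nodes but not their target sectors.
NODE_TO_SECTOR = {
    "observe": "episodic",
    "plan": "semantic",
    "reflect": "reflective",
}
DEFAULT_SECTOR = "semantic"  # hypothetical fallback for unmapped nodes


def build_store_request(node: str, output: str, namespace: str = "default") -> str:
    """Return a JSON body that tags a graph node's output with a memory sector."""
    sector = NODE_TO_SECTOR.get(node, DEFAULT_SECTOR)
    payload = {
        "namespace": namespace,  # hypothetical field names throughout
        "node": node,
        "sector": sector,
        "content": output,
    }
    return json.dumps(payload, sort_keys=True)
```

Keeping the mapping in a plain dictionary makes the routing easy to audit and to extend when a graph introduces additional node types.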

Use Cases

OpenMemory is built for scenarios demanding high context fidelity, security, and long-term retention.

1. Enterprise Copilot Development

Deploy OpenMemory within your secured corporate network to power enterprise-grade copilots. This ensures all proprietary data, operational procedures, and employee preferences are remembered securely and privately. The PII scrubbing and tenant isolation capabilities allow for compliant, multi-user deployment where data integrity and control are non-negotiable.

2. Long-Term Agent Memory and Personalization

Build AI agents that truly evolve and learn over time. By utilizing the HMD architecture, agents can retrieve memories based not just on semantic similarity, but also procedural history or reflective insights. This allows the agent to recall specific past actions, understand complex user habits, and provide highly personalized, contextually relevant assistance over weeks or months.
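One way to picture sector-aware retrieval is to rank memories by cosine similarity within a single chosen sector, so the same query can be answered from procedural history or from semantic knowledge. The sketch below is an illustration under that assumption: the memory layout (`id`, `vectors`) and the `recall` function are hypothetical, and OpenMemory's real scoring may combine sectors differently.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def recall(query_vec: list[float], memories: list[dict], sector: str, top_k: int = 3):
    """Rank memories that have an embedding in `sector`; return (id, sector, score)."""
    scored = [
        (m["id"], sector, cosine(query_vec, m["vectors"][sector]))
        for m in memories
        if sector in m["vectors"]  # memories without this sector are skipped
    ]
    return sorted(scored, key=lambda item: item[2], reverse=True)[:top_k]
```

Because each result carries the sector it was matched in, the caller can report why a memory was retrieved, for example "matched on procedural history", alongside the score.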

3. Enhanced Developer Workflow Persistence

The OpenMemory VS Code Extension allows development teams using AI assistants (like GitHub Copilot or Cursor) to maintain persistent memory across reboots, code branches, and long projects. The assistant doesn't just remember the current file; it remembers the context of the entire project history, architectural decisions made last week, and specific debug steps taken yesterday, significantly reducing redundant context repetition and improving efficiency.

Why Choose OpenMemory? Unique Advantages

OpenMemory is engineered to outperform traditional memory solutions on key metrics: speed, cost, and functional transparency.

| Advantage | Benefit | Performance Differentiator |
|---|---|---|
| Superior Performance & Cost | Achieve better contextual accuracy and faster retrieval while drastically cutting operational costs. | 2–3× faster contextual recall and 6–10× lower cost compared to hosted “memory APIs” (e.g., 2.5× faster and 10–15× cheaper than Zep at the same scale). |
| Explainable Recall | Understand precisely why a piece of context was retrieved, ensuring transparency and trust in AI reasoning. | Multi-sector cognitive model enables explainable recall paths based on specific memory types (episodic, semantic, etc.). |
| True Openness and Flexibility | Utilize the best embedding models for your specific use case, minimizing vendor lock-in. | Framework-agnostic design supporting hybrid embeddings (OpenAI, Gemini) and local models (Ollama, E5, BGE) at zero cost. |
| Data Control | Complete governance over your data lifecycle, security, and storage location. | 100% data ownership and full erasure capabilities via robust API endpoints. |

Conclusion

OpenMemory provides the infrastructure required for the next generation of intelligent, context-aware LLM applications. Its self-hosted, performance-optimized memory engine gives you the control, speed, and depth necessary to build persistent and trustworthy AI experiences.

OpenMemory Alternatives

- MemOS: The industrial memory OS for LLMs. Give your AI persistent, adaptive long-term memory & unlock continuous learning. Open-source.
- Supermemory gives your LLMs long-term memory. Instead of stateless text generation, they recall the right facts from your files, chats, and tools, so responses stay consistent, contextual, and personal.
- Give your AI agents perfect long-term memory. MemoryOS provides deep, personalized context for truly human-like interactions.
- Stop AI agents from forgetting! Memori is the open-source memory engine for developers, providing persistent context for smarter, efficient AI apps.
- EverMemOS: Open-source memory system for AI agents. Go beyond retrieval to proactive, deep contextual perception for truly coherent interactions.