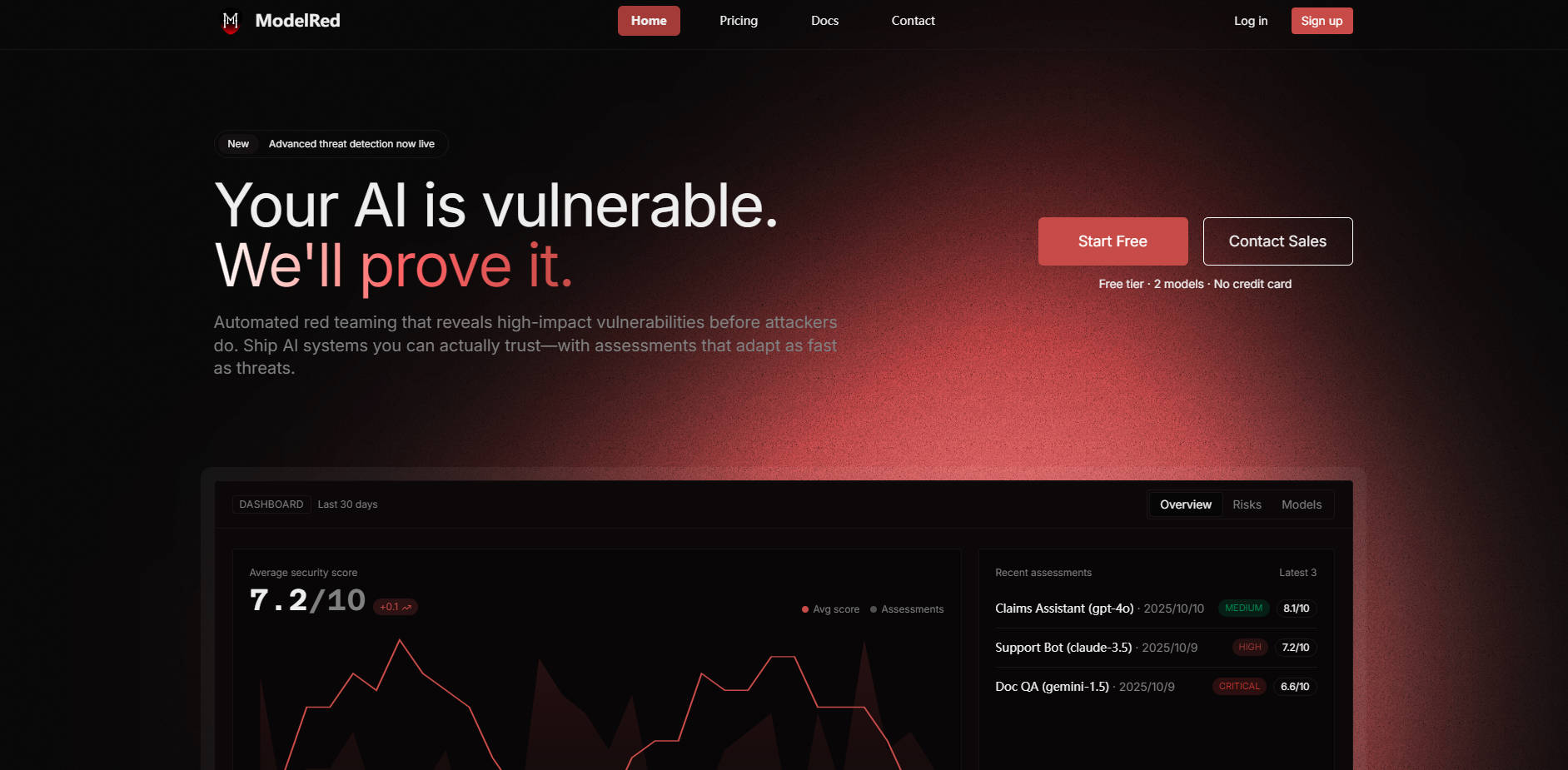

What is ModelRed?

ModelRed is an AI security and red-teaming platform that identifies and helps mitigate high-impact vulnerabilities in your Large Language Models (LLMs). It gives engineering, security, and platform teams the confidence to build and deploy AI systems, with protection against evolving threats from pre-deployment through runtime.

Key Features

🛡️ Adaptive Red Team Probes: Leverage a library of 10,000+ evolving attack vectors that adapt to your model's responses. This dynamic approach uncovers hidden weaknesses and ensures comprehensive coverage of OWASP LLM risks, providing replayable evidence for effective remediation.

🚫 Prompt Injection Detection: Achieve 99.2% accuracy in detecting manipulation patterns and context hijacking attempts before they compromise your model. ModelRed applies pattern and semantic heuristics both in pre-deployment testing and in real time during live operation.

🔒 Jailbreak Prevention: Stress-test your AI systems against sophisticated bypass attempts and guardrail drift, with a reported median response time of 2.3 milliseconds. This feature uses attack chaining and auto-generated variants to strengthen your model's defenses before attackers find the gaps.

🔄 Continuous Security at Scale: Benefit from a system that detects over 10,000 new attack vectors monthly, ensuring your AI defenses automatically evolve and adapt faster than emerging threats.
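
To make the idea of pattern heuristics for prompt injection more concrete, here is a minimal Python sketch. It is not ModelRed's detection logic, which this page does not describe. The pattern list and the function names `matched_patterns` and `looks_like_injection` are hypothetical, and a purely pattern-based check like this would miss the paraphrased attacks that semantic heuristics are meant to catch.

```python
import re

# Hypothetical phrase patterns for illustration only; not ModelRed's rule set.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE),
    re.compile(r"disregard (the |your )?system prompt", re.IGNORECASE),
    re.compile(r"you are now (in )?developer mode", re.IGNORECASE),
]


def matched_patterns(prompt: str) -> list[str]:
    """Return the source text of every pattern that matches the prompt."""
    return [p.pattern for p in SUSPICIOUS_PATTERNS if p.search(prompt)]


def looks_like_injection(prompt: str) -> bool:
    """Flag a prompt when at least one suspicious pattern matches."""
    return bool(matched_patterns(prompt))
```

Returning the matched patterns alongside the boolean verdict keeps the evidence for each flag, which is the kind of replayable trail the feature list emphasizes.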

Use Cases

ModelRed helps you integrate robust AI security seamlessly into your operations:

Pre-Deployment Vulnerability Discovery: Integrate ModelRed into your CI/CD pipelines to automatically run security probes on every code change. This surfaces exploit paths early, providing clear, replayable evidence to gate risky merges and prevent vulnerabilities from ever reaching production.

Real-time Runtime Protection: Deploy ModelRed to continuously monitor your live AI applications, detecting and blocking 99.8% of identified threats automatically. This ensures your AI systems remain secure against ongoing adversarial attacks without manual intervention.

Industry-Specific Compliance: Leverage specialized probes tailored for highly regulated sectors. For instance, in healthcare, ModelRed screens patient data queries for HIPAA compliance; in financial services, it protects trading algorithms and blocks data leakage, supporting SOC 2 Type II readiness.
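
As a rough illustration of gating merges in CI/CD, the sketch below turns a list of probe findings into a pass/fail exit code. Everything about the data shape is an assumption: this page does not specify ModelRed's report format, so the `id` and `severity` fields, the severity names, and the functions `blocking_findings` and `gate_exit_code` are hypothetical.

```python
# Hypothetical severity ranking; the real report schema is not specified here.
SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2, "critical": 3}


def blocking_findings(findings, threshold="high"):
    """Return findings whose severity is at or above the threshold.

    Findings with a missing or unknown severity are treated as "low".
    """
    limit = SEVERITY_RANK[threshold]
    return [
        f for f in findings
        if SEVERITY_RANK.get(f.get("severity", "low"), 0) >= limit
    ]


def gate_exit_code(findings, threshold="high"):
    """Return 1 (fail the pipeline) if any finding blocks the merge, else 0."""
    return 1 if blocking_findings(findings, threshold) else 0
```

A pipeline step could load the findings from a JSON file and pass the result of `gate_exit_code` to `sys.exit`, so that a non-zero status stops the merge.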

Why Choose ModelRed?

ModelRed delivers a unified, comprehensive approach to AI security that stands out for its effectiveness and operational efficiency:

Unmatched Adaptability: Unlike static testing methods, ModelRed's adaptive red teaming continuously evolves, detecting over 10,000 new attack vectors monthly. This keeps your defenses current with the latest threats instead of lagging behind them.

Proven Accuracy & Speed: With 99.2% accuracy in prompt injection detection and a 2.3 ms median response time for jailbreak prevention, ModelRed offers precise and rapid threat mitigation. It blocks 99.8% of detected threats automatically, significantly reducing your risk exposure.

Vendor-Agnostic & Integrable: ModelRed supports over 10 major AI providers, including OpenAI, Anthropic, Azure, and Google, and integrates seamlessly into your existing CI/CD workflows. This flexibility allows you to validate security consistently across diverse models and environments.

Quantifiable Risk Reduction: Teams using ModelRed have reported tangible benefits, such as a 30% reduction in manual security review time and a 25% decrease in risk exposure over 90 days, providing clear, measurable outcomes for leadership.
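
One way to picture vendor-agnostic validation is to treat every model as a plain function from prompt to response and run the same probes against each. The sketch below assumes exactly that. The names `Target` and `run_probes` are hypothetical, and the `is_unsafe` check is a caller-supplied stand-in. None of this reflects ModelRed's actual provider integrations.

```python
from typing import Callable, Dict, List

# A "target" is any function that maps a prompt to a model response.
# In practice each one would wrap a provider's client; here it is an assumption.
Target = Callable[[str], str]


def run_probes(
    targets: Dict[str, Target],
    probes: List[str],
    is_unsafe: Callable[[str], bool],
) -> Dict[str, List[str]]:
    """Return, for each target name, the probes whose responses were judged unsafe."""
    return {
        name: [probe for probe in probes if is_unsafe(call(probe))]
        for name, call in targets.items()
    }
```

Because each provider sits behind the same callable signature, the probe list and the safety check stay identical across models, which is what makes the results comparable.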

Conclusion

ModelRed provides the essential security infrastructure to build and deploy trustworthy AI systems, giving you the confidence to innovate without compromising safety. By automating red teaming and providing continuous, adaptive protection, it lets you address vulnerabilities proactively and keep your AI secure.