What is PolyLM?

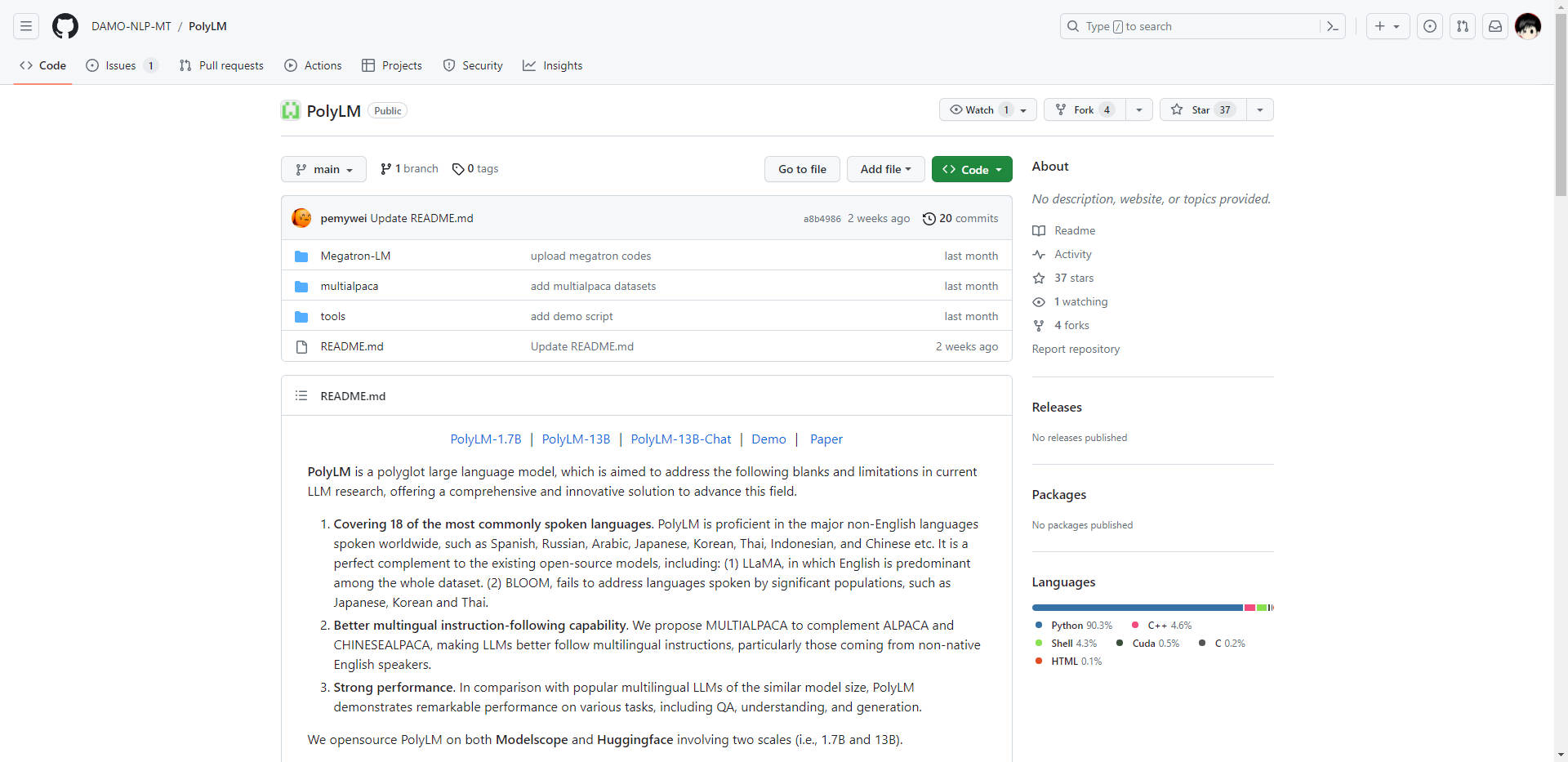

PolyLM is an open-source polyglot large language model built to narrow the gaps in multilingual AI research. It supports 18 widely spoken languages, including Spanish, Arabic, Japanese, and Chinese, and is designed to understand, generate, and follow instructions across all of them. It performs strongly on tasks such as question answering and text generation, and it complements existing models like LLaMA and BLOOM by addressing their weaker support for non-English languages. PolyLM comes in two sizes (1.7B and 13B parameters) and is open-sourced on ModelScope and Hugging Face, making it accessible to developers and researchers worldwide.

Key Features:

🌍 Multilingual Mastery

PolyLM supports 18 major languages, ensuring robust performance for global users, particularly in non-English contexts like Japanese, Korean, and Arabic.

📜 Enhanced Instruction-Following

Fine-tuned on MULTIALPACA, a multilingual instruction dataset, PolyLM is better at understanding and carrying out instructions written in languages other than English, which makes it well suited to users who are not native English speakers.

💪 Strong Task Performance

PolyLM outperforms similar-sized multilingual models on tasks like question answering, comprehension, and text generation.

🔓 Open-Source Accessibility

Available at the 1.7B and 13B scales on ModelScope and Hugging Face, PolyLM gives developers and researchers direct access to the model weights so they can build on them.
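For readers who want to try one of the two scales, here is a minimal sketch of loading a checkpoint with the Hugging Face `transformers` library. The repository ids are assumptions based on common naming and should be checked against the hub before use. `pick_model_id` and `load_generator` are hypothetical helper names, not part of any PolyLM API, and some checkpoints may need extra loading arguments listed on their model cards.

```python
from typing import Callable

# ASSUMPTION: these repository ids are illustrative; confirm them on the
# Hugging Face hub (or use the ModelScope equivalents) before relying on them.
ASSUMED_MODEL_IDS = {
    "1.7B": "DAMO-NLP-MT/polylm-1.7b",
    "13B": "DAMO-NLP-MT/polylm-13b",
}


def pick_model_id(scale: str) -> str:
    """Map a scale label ("1.7B" or "13B") to an assumed repository id."""
    key = scale.strip().upper()
    if key not in ASSUMED_MODEL_IDS:
        raise ValueError(f"unknown scale {scale!r}; expected one of {sorted(ASSUMED_MODEL_IDS)}")
    return ASSUMED_MODEL_IDS[key]


def load_generator(scale: str = "1.7B") -> Callable[..., str]:
    """Download a checkpoint and return a simple text-generation function."""
    # Imported lazily so pick_model_id works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_id = pick_model_id(scale)
    tokenizer = AutoTokenizer.from_pretrained(model_id, use_fast=False)
    model = AutoModelForCausalLM.from_pretrained(model_id)
    model.eval()

    def generate(prompt: str, max_new_tokens: int = 64) -> str:
        # Tokenize the prompt, generate a continuation, and decode it to text.
        inputs = tokenizer(prompt, return_tensors="pt")
        output_ids = model.generate(
            inputs.input_ids,
            attention_mask=inputs.attention_mask,
            max_new_tokens=max_new_tokens,
        )
        return tokenizer.decode(output_ids[0], skip_special_tokens=True)

    return generate
```

Calling `load_generator("1.7B")` downloads the smaller checkpoint and returns a function that can be invoked with a prompt in any supported language. The 13B scale follows the same path but needs considerably more memory.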

Use Cases:

Global Customer Support

Businesses can deploy PolyLM to provide multilingual customer service, ensuring seamless communication with clients in their native languages, such as Spanish, Arabic, or Chinese.

Multilingual Content Creation

Content creators can use PolyLM to generate high-quality, culturally relevant text in multiple languages, from blog posts to marketing materials, saving time and resources.

Language Learning Tools

Educators and edtech platforms can leverage PolyLM to develop interactive language-learning applications, offering real-time translations, explanations, and practice exercises in 18 languages.

Conclusion:

PolyLM is a strong option for multilingual AI, combining support for 18 languages, robust task performance, and open-source accessibility. Whether you're a developer, researcher, or business, PolyLM helps you break language barriers and deliver solutions that resonate globally. Its ability to handle question answering, comprehension, and text generation across languages makes it a valuable tool for anyone working in a multilingual environment.