What is Real-Time Voice Cloning?

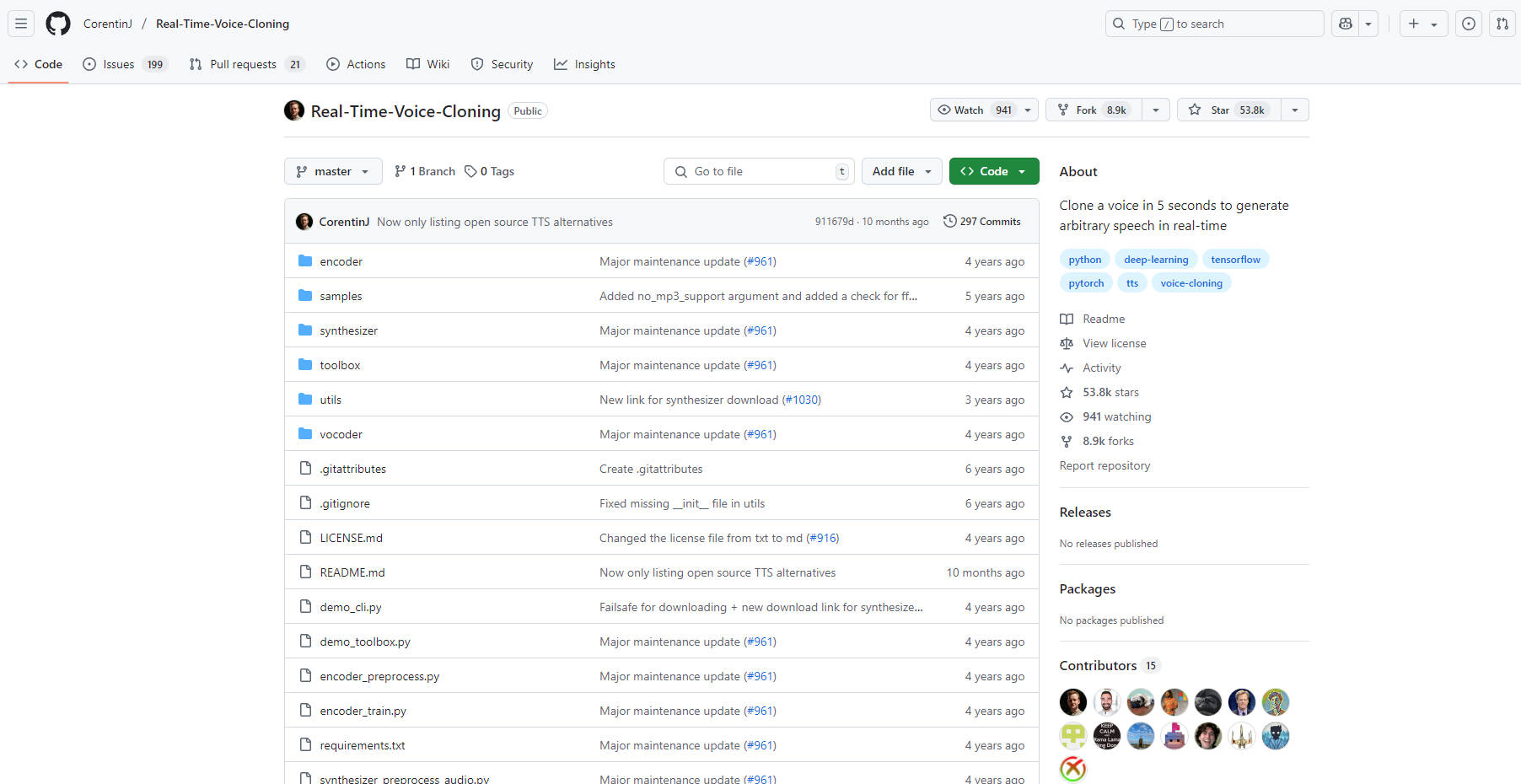

This repository provides a real-time implementation of Transfer Learning from Speaker Verification to Multispeaker Text-To-Speech Synthesis (SV2TTS), a deep learning framework for voice cloning. Based on the original SV2TTS paper (1806.04558), the project lets you create a digital representation of a voice (a speaker embedding) from just a few seconds of audio, and then use that representation to generate speech from arbitrary text. It is a practical, working implementation of the technology, aimed at researchers and developers.

Key Features:

Implement SV2TTS: Provides a complete, functional implementation of the three-stage SV2TTS pipeline, comprising a speaker encoder, a synthesizer, and a vocoder.

Utilize a Real-Time Vocoder: Uses a WaveRNN-based vocoder (1802.08435) for efficient, real-time audio synthesis.

Use Pre-Trained Models: Pre-trained models are downloaded automatically for immediate use, or you can train your own.

Integrate with Multiple Datasets: Supports several datasets, including LibriSpeech, for training and experimentation.

Verify Your Setup: Includes a command-line demo script (demo_cli.py) that runs a series of checks to verify your configuration and confirm that everything works.

Employ Generalized End-to-End (GE2E) Loss: Implements the GE2E loss function (1710.10467) to train the speaker encoder for better speaker verification performance.
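To make the GE2E objective more concrete, here is a minimal pure-Python sketch of its softmax variant as described in the GE2E paper (1710.10467). It is not code taken from this repository. The sketch assumes a batch of speakers with at least two utterance embeddings each. Every embedding is scored against every speaker centroid with a scaled cosine similarity w * cos + b, where w = 10 and b = -5 are the paper's initial values (in training both are learned). The utterance's own speaker centroid is computed without that utterance, and the loss is softmax cross-entropy toward the true speaker. The function name `ge2e_softmax_loss` and the toy 2-D embeddings are hypothetical. The loss is averaged rather than summed over utterances, which only rescales it.

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def mean_vector(vectors: List[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]


def ge2e_softmax_loss(batch: List[List[Vector]], w: float = 10.0, b: float = -5.0) -> float:
    """Hypothetical helper: GE2E softmax loss, averaged over all utterances.

    batch[j][i] is the embedding of utterance i spoken by speaker j;
    every speaker needs at least two utterances.
    """
    centroids = [mean_vector(utterances) for utterances in batch]
    losses = []
    for j, utterances in enumerate(batch):
        for i, e_ji in enumerate(utterances):
            logits = []
            for k, c_k in enumerate(centroids):
                # The true speaker's centroid is recomputed without e_ji itself.
                centroid = mean_vector(utterances[:i] + utterances[i + 1:]) if k == j else c_k
                # Scaled cosine similarity S_ji,k = w * cos(e_ji, c_k) + b.
                logits.append(w * cosine(e_ji, centroid) + b)
            # Cross-entropy toward speaker j: log(sum_k exp(S_ji,k)) - S_ji,j,
            # using max subtraction for numerical stability.
            peak = max(logits)
            log_sum_exp = peak + math.log(sum(math.exp(s - peak) for s in logits))
            losses.append(log_sum_exp - logits[j])
    return sum(losses) / len(losses)


# Toy 2-D embeddings for two speakers with two utterances each.
separated = [[[1.0, 0.0], [0.9, 0.1]], [[0.0, 1.0], [0.1, 0.9]]]
mixed = [[[1.0, 0.0], [0.0, 1.0]], [[0.9, 0.1], [0.1, 0.9]]]
print(f"separated: {ge2e_softmax_loss(separated):.4f}")
print(f"mixed:     {ge2e_softmax_loss(mixed):.4f}")
```

Embeddings that cluster by speaker give a loss near zero. Embeddings that sit closer to another speaker's centroid are penalized heavily. A real implementation would vectorize this over tensors and backpropagate through w, b, and the encoder.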

Technical Details:

The system is built on a three-stage deep learning pipeline:

Speaker Encoder: Extracts a fixed-dimensional embedding vector (d-vector) from a short audio sample of a target speaker. This embedding captures the characteristics that distinguish the speaker's voice. The encoder is trained with the GE2E loss function.

Synthesizer: Based on the Tacotron architecture (1703.10135), this stage takes the speaker embedding and an input text sequence and generates a mel spectrogram, a time-frequency representation of the audio signal.

Vocoder: This component, built on WaveRNN (1802.08435), converts the mel spectrogram into a raw waveform, producing the final synthesized speech.
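The speaker encoder stage can be illustrated with a short sketch of how one fixed-size d-vector is formed at inference time. The sketch follows the sliding-window procedure in the GE2E paper. The encoder first embeds overlapping windows of the utterance. Each window embedding is then L2-normalized, and the results are averaged. This sketch also rescales the average to unit length, so that a dot product equals cosine similarity. The helper names (`utterance_embedding`, `similarity`) and the toy 3-dimensional vectors are hypothetical and are not the repository's API. The real encoder produces much larger vectors computed from spectrogram windows.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def utterance_embedding(window_embeddings: List[Vector]) -> Vector:
    """Hypothetical helper: merge per-window encoder outputs into one d-vector."""
    normalized = [l2_normalize(w) for w in window_embeddings]
    count = len(normalized)
    # Element-wise average of the normalized window embeddings.
    averaged = [sum(column) / count for column in zip(*normalized)]
    # Rescale to unit length so a dot product equals cosine similarity.
    return l2_normalize(averaged)


def similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity of two unit-length d-vectors (their dot product)."""
    return sum(x * y for x, y in zip(a, b))


# Toy window embeddings (made-up numbers, 3 dimensions for readability).
reference = utterance_embedding([[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]])
same_speaker = utterance_embedding([[0.85, 0.15, 0.05]])
other_speaker = utterance_embedding([[0.1, 0.2, 0.9], [0.0, 0.3, 0.8]])

print(f"same speaker:  {similarity(reference, same_speaker):.3f}")
print(f"other speaker: {similarity(reference, other_speaker):.3f}")
```

The d-vector keeps the same size regardless of how long the input clip is. That fixed size is what lets a few seconds of audio condition the synthesizer. Utterances from the same speaker should score higher than utterances from different speakers.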

Use Cases:

Custom Voice Assistant Development: Create unique, personalized voices for voice assistants and other interactive applications. Instead of relying on generic system voices, you can tailor the voice to match a specific brand or persona.

Speech Synthesis Research: Serve as a foundation for further research in voice cloning, text-to-speech, and speaker verification. The modular design allows for experimentation with individual components.

Audio Content Creation: Generate realistic voiceovers for videos, podcasts, or audiobooks using cloned voices. This provides flexibility and control over the vocal characteristics of the content.

Conclusion:

This Real-Time Voice Cloning repository offers an accessible platform for experimenting with and developing voice cloning technology. Newer SaaS solutions, which are often paid, may offer higher audio quality. Even so, this open-source project remains a valuable tool for research, development, and customization. It is a solid starting point for anyone interested in exploring the capabilities of SV2TTS and real-time voice synthesis.

Real-Time Voice Cloning Alternatives

Discover OpenVoice V2, a recent AI voice cloning model! Enjoy superior audio fidelity, multilingual support, and versatile voice control, with free commercial use.

All Voice Lab is an AI voice platform for ultra-realistic TTS and voice cloning, powered by the SOTA MaskGCT 2.0 model. It offers multilingual, expressive audio for creators and developers.

Clone voices & generate lifelike speech in 50+ languages with Open-VoiceCanvas. Open-source, customizable TTS platform.

VoxCPM: Realistic, tokenizer-free AI Text-to-Speech. Get context-aware speech generation & true-to-life voice cloning for natural audio.

MegaTTS3: AI TTS for bilingual voice generation (EN/CN). Lightweight, with voice cloning and accent control. Open-source!