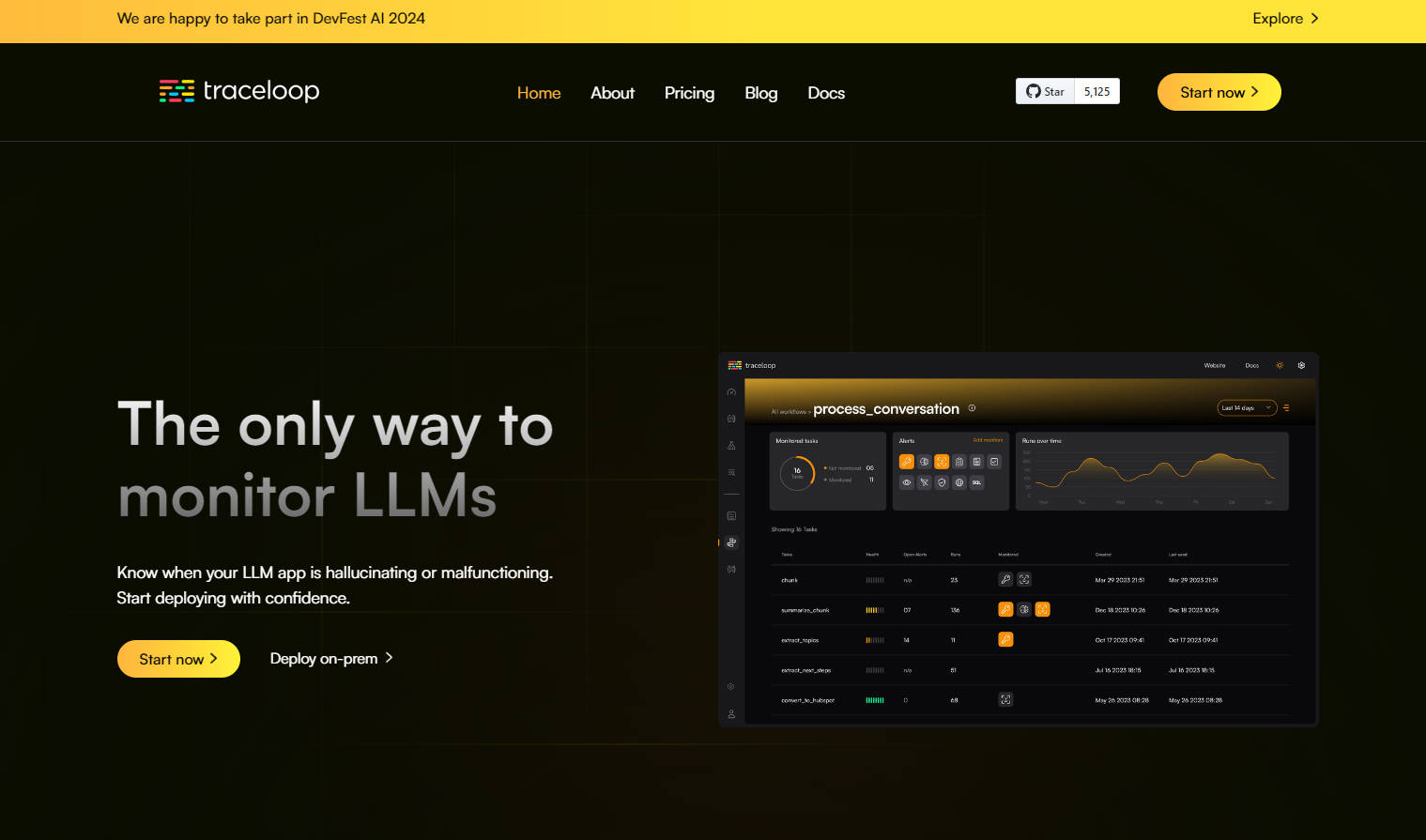

What is Traceloop?

Traceloop is an observability tool designed specifically for applications that use Large Language Models (LLMs). It reduces the need for manual testing, helps detect inconsistencies, and allows real-time monitoring of LLM outputs. With support for multiple LLM providers and a straightforward integration process, Traceloop helps you deploy LLM applications with confidence, whether on-prem or in the cloud.

Key Features:

🔍 Real-time Monitoring: Track LLM outputs and detect anomalies like hallucinations instantly.

🛠 Backtesting & Debugging: Test changes to models and prompts, and debug issues before they hit production.

⏰ Instant Alerts: Get notified about unexpected changes in output quality so you can respond to issues quickly.

🌍 Multi-platform Support: Works with 22 LLM providers and integrates with 25+ observability platforms.
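
To make the alerting idea above concrete, here is a minimal Python sketch of how a drop in output quality could be flagged with a rolling average. It is an illustration only and not Traceloop's API or implementation: the `QualityAlert` class, the `first_alert_index` helper, the window size, the threshold, and the notion of a numeric quality score per LLM response are all assumptions made for this example.

```python
from collections import deque
from statistics import mean


class QualityAlert:
    """Hypothetical rolling-average alert; not part of Traceloop's API."""

    def __init__(self, window: int = 5, threshold: float = 0.7):
        # Keep only the most recent `window` scores.
        self.scores = deque(maxlen=window)
        self.threshold = threshold

    def record(self, score: float) -> bool:
        """Store a score; return True once the window is full and its
        average has fallen below the threshold."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        return window_full and mean(self.scores) < self.threshold


def first_alert_index(scores, window=5, threshold=0.7):
    """Return the index of the first score that triggers an alert, or None."""
    monitor = QualityAlert(window=window, threshold=threshold)
    for index, score in enumerate(scores):
        if monitor.record(score):
            return index
    return None


if __name__ == "__main__":
    # Assumed quality scores in [0, 1], e.g. produced by some evaluator.
    history = [0.9, 0.9, 0.9, 0.2, 0.2, 0.2]
    print(first_alert_index(history, window=3, threshold=0.5))
```

Averaging over a window rather than alerting on a single low score is one way to avoid firing on a one-off bad response; a real monitoring setup would choose the scoring method and thresholds to fit the application.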

Use Cases:

AI Startups: A startup developing a customer service chatbot uses Traceloop to monitor its LLM-powered responses. The team catches hallucinations early and ensures consistent, reliable outputs, avoiding embarrassing mistakes in customer interactions.

Enterprise Deployment: A financial institution deploys an LLM-based fraud detection system. With Traceloop, the team backtests model changes and monitors output quality to support compliance and accuracy, while the on-prem deployment option helps it maintain SOC 2 standards.

Open-source Contributors: A developer contributing to OpenLLMetry uses Traceloop to debug and validate changes to the model. By integrating Traceloop, they can check that their contributions improve the system without introducing new issues.

Conclusion:

Traceloop is built for developers and enterprises that need their LLM applications to be reliable and consistent. Features like real-time monitoring, backtesting, and instant alerts help teams deploy with confidence, and its open-source nature and support for multiple platforms make it a versatile and accessible tool.