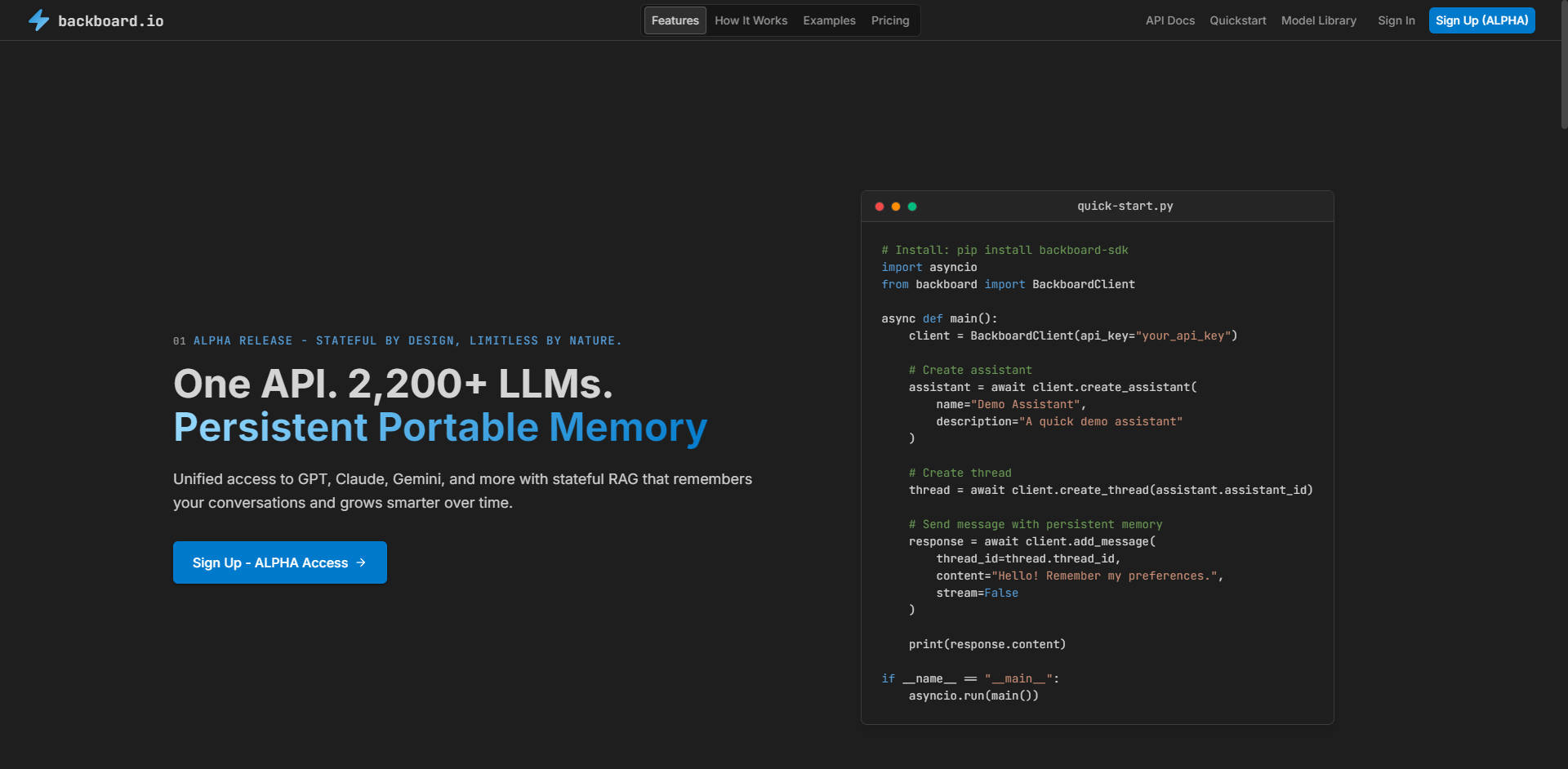

What is Backboard.io?

backboard.io provides developers with a single, unified API endpoint to access over 2,200 major language models, including GPT, Claude, and Gemini. Designed for stateful applications, backboard.io solves the fundamental problem of context loss by building persistent memory and retrieval-augmented generation (RAG) directly into its conversation threads, so your AI applications grow smarter with every interaction. If you're building intelligent applications that require reliable context and seamless model switching, backboard.io removes the complexity of API fragmentation and memory management.

Key Features

backboard.io is engineered to simplify the development lifecycle for complex, context-aware AI applications, offering immediate relief from managing disparate LLM providers.

🔌 Unified Access to 2,200+ Models

Stop juggling dozens of API keys and maintaining fragmented codebases. backboard.io provides one consistent endpoint and request format to access a continuously growing library of major LLMs. You can switch providers (e.g., from GPT-4o-mini to Claude 3) seamlessly within the same application thread, optimizing cost and performance without rewriting core logic.
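
To make the idea of one request format concrete, here is a minimal Python sketch of the routing pattern a unified endpoint implies. It is not backboard.io's API: `UnifiedClient`, `Message`, the `provider/model` identifier scheme, and the stub adapters are all hypothetical, and the stubs echo text instead of calling any real provider.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Message:
    """One chat message in a provider-neutral format (hypothetical schema)."""
    role: str     # "system", "user", or "assistant"
    content: str


# An adapter receives the bare model name and the shared message list and
# returns the reply text. Real adapters would translate to each vendor's
# wire format and call its API; these stubs only echo the last message.
Adapter = Callable[[str, List[Message]], str]


def stub_openai(model: str, messages: List[Message]) -> str:
    return f"[openai:{model}] {messages[-1].content}"


def stub_anthropic(model: str, messages: List[Message]) -> str:
    return f"[anthropic:{model}] {messages[-1].content}"


class UnifiedClient:
    """Routes 'provider/model' identifiers to registered adapters."""

    def __init__(self) -> None:
        self._adapters: Dict[str, Adapter] = {}

    def register(self, provider: str, adapter: Adapter) -> None:
        self._adapters[provider] = adapter

    def chat(self, model_id: str, messages: List[Message]) -> str:
        provider, _, model = model_id.partition("/")
        if provider not in self._adapters:
            raise KeyError(f"no adapter registered for provider {provider!r}")
        return self._adapters[provider](model, messages)


client = UnifiedClient()
client.register("openai", stub_openai)
client.register("anthropic", stub_anthropic)

msgs = [Message("user", "Summarize my order status.")]
print(client.chat("openai/gpt-4o-mini", msgs))        # same request shape...
print(client.chat("anthropic/claude-3-haiku", msgs))  # ...different provider
```

Because callers only build `Message` lists and pick a model string, moving from one provider to another is a one-argument change rather than a rewrite of core logic.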

🧠 Persistent, Stateful Threads

Unlike standard stateless API calls, backboard.io is stateful by design. When you start a conversation thread, its context persists across messages, sessions, models, and even applications. This lets your AI assistants remember user preferences and past interactions, improving relevance and reducing token consumption for context history.
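
The Python sketch below illustrates what a stateful thread means in practice, under the assumption that a thread is simply a stored message history handed to whichever model answers next. `Thread`, `send`, and `stub_model` are hypothetical names, not backboard.io's API, and the stub models only report how much history they received.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A "model" here is any function from the full message history to a reply.
Model = Callable[[List[Dict[str, str]]], str]


def stub_model(name: str) -> Model:
    """Hypothetical stand-in for an LLM: reports how much history it received."""
    def reply(history: List[Dict[str, str]]) -> str:
        return f"{name} saw {len(history)} message(s); last: {history[-1]['content']!r}"
    return reply


@dataclass
class Thread:
    """Stores the conversation so callers only pass the new message."""
    messages: List[Dict[str, str]] = field(default_factory=list)

    def send(self, text: str, model: Model) -> str:
        self.messages.append({"role": "user", "content": text})
        answer = model(self.messages)  # the model sees the whole thread
        self.messages.append({"role": "assistant", "content": answer})
        return answer


thread = Thread()
thread.send("I prefer metric units.", stub_model("model-a"))
# Switch models mid-thread: the new model still receives the earlier turns.
print(thread.send("How tall is Everest?", stub_model("model-b")))
```

The second model answers with the first exchange plus the new question in view, even though the caller passed only the question.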

📂 Integrated RAG and Knowledge Indexing

Easily augment your LLMs with proprietary data. Simply upload documents (PDFs, Office files), connect databases, or link external APIs. backboard.io automatically handles the indexing, chunking, and vectorization of your knowledge base, ensuring your assistants can retrieve accurate, up-to-date, and domain-specific information on demand.
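
Chunking and retrieval are standard RAG building blocks. The Python sketch below shows the general technique, with overlapping word windows for chunking and bag-of-words cosine similarity standing in for learned embeddings. backboard.io's actual chunk sizes, embedding models, and index are not described here, so `chunk_words`, `vectorize`, and `retrieve` are illustrative assumptions.

```python
import math
import re
from collections import Counter
from typing import List


def chunk_words(text: str, size: int = 40, overlap: int = 10) -> List[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Stop once a window would only repeat words already covered by the previous one.
    starts = range(0, max(len(words) - overlap, 1), step)
    return [" ".join(words[i:i + size]) for i in starts]


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts: a crude stand-in for an embedding vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], k: int = 1) -> List[str]:
    """Return the k chunks most similar to the query."""
    q = vectorize(query)
    return sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)[:k]


doc = ("Refunds are issued within 14 days of purchase. "
       "Shipping to Canada takes five business days. "
       "Warranty claims require the original receipt.")
chunks = chunk_words(doc, size=8, overlap=2)
print(retrieve("how long does shipping to canada take", chunks))
```

A production pipeline typically swaps `vectorize` for an embedding model and the linear scan for a vector index, but the flow (split, vectorize, rank by similarity) keeps the same shape.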

💼 Portable Memory and Context Management

The context generated within a conversation thread isn't locked down. backboard.io allows you to export, share, and migrate conversation memory between teams and environments. This portable memory ensures complex, learned context can be leveraged immediately across different development stages or user groups without requiring retraining or manual data transfer.
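
One way to picture portable memory is as a versioned, serializable snapshot of a thread. The Python sketch below assumes a simple JSON layout; the field names and the `export_memory`/`import_memory` helpers are hypothetical and do not describe backboard.io's real export format.

```python
import json
from typing import Any, Dict, List

FORMAT_VERSION = 1  # bump when the snapshot layout changes


def export_memory(thread_id: str, messages: List[Dict[str, str]], notes: List[str]) -> str:
    """Serialize a thread's messages and distilled memory notes to a JSON string."""
    snapshot = {
        "format_version": FORMAT_VERSION,
        "thread_id": thread_id,
        "messages": messages,
        "memory": notes,
    }
    return json.dumps(snapshot, ensure_ascii=False, indent=2)


def import_memory(blob: str) -> Dict[str, Any]:
    """Load a snapshot, rejecting layouts this code does not understand."""
    snapshot = json.loads(blob)
    if snapshot.get("format_version") != FORMAT_VERSION:
        raise ValueError(f"unsupported format_version: {snapshot.get('format_version')!r}")
    return snapshot


# Export from one environment (say, staging) and load it in another.
blob = export_memory(
    "support-42",
    [{"role": "user", "content": "My router is model X200."}],
    ["Customer owns an X200 router."],
)
restored = import_memory(blob)
print(restored["memory"])
```

The explicit version field lets the receiving environment refuse a snapshot it cannot interpret instead of silently loading partial context.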

Use Cases

Leverage backboard.io's stateful architecture to build reliable, high-performing AI solutions across various domains.

1. Context-Aware Customer Support Bots

Scenario: A user asks your support bot about a specific product configuration, returns three days later, and asks a follow-up question about that earlier inquiry.

Outcome: Because the conversation uses a persistent thread, the bot recalls the initial configuration details, avoids repetitive prompting, and provides immediate, relevant support. This improves the user experience and shortens resolution time.

2. Internal Knowledge Management & Research

Scenario: Your development team is building an assistant that summarizes complex internal API documentation and legal contracts.

Outcome: By connecting your document library through the RAG integration, the assistant can use the unified API to query multiple LLMs against the indexed data. Because the thread is stateful, team members can build context collaboratively over weeks, for example by marking specific passages or adding preferences, and that context benefits all subsequent queries.

3. Seamless Model Migration and A/B Testing

Scenario: You need to test a new, lower-cost LLM against your current high-performance model without disrupting the user’s experience or losing their conversation history.

Outcome: Using the unified API, you can switch the underlying LLM provider mid-thread while keeping the full context of the conversation. This enables rapid, real-time A/B testing of model performance and cost efficiency without requiring users to restart their sessions.
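
For an A/B test like this, a common approach is to assign each user deterministically to a model variant so that their thread keeps talking to the same model across sessions. The Python sketch below hashes the user ID with SHA-256; `assign_model` and the model name strings are illustrative assumptions, and backboard.io may offer its own routing mechanism.

```python
import hashlib


def assign_model(user_id: str, control: str, candidate: str, candidate_share: float = 0.1) -> str:
    """Deterministically route a fixed share of users to the candidate model."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Map the first 6 bytes (48 bits) of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return candidate if bucket < candidate_share else control


# The same user always lands on the same variant, session after session.
for uid in ["user-1", "user-2", "user-3"]:
    print(uid, assign_model(uid, control="current-model", candidate="cheaper-model"))
```

Because the assignment depends only on the user ID, it is stable across sessions, and the thread's history stays intact regardless of which variant answers.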

Why Choose backboard.io?

backboard.io is built specifically to address the complexity and fragmentation inherent in the modern LLM ecosystem, delivering functional advantages that accelerate development and enhance end-user intelligence.

Zero API Juggling: Eliminate the operational overhead of managing multiple vendor SDKs, API keys, and differing authentication methods. You consolidate your entire LLM infrastructure into one robust, reliable endpoint.

Intelligence That Persists: Unlike basic API wrappers, backboard.io’s core value is its stateful design. You are not just calling a model; you are building an assistant that continuously learns from every interaction, making your applications fundamentally smarter over time.

Enterprise-Grade Security: Your data is protected by end-to-end encryption. Crucially, backboard.io maintains a strict privacy policy: your proprietary data and conversation history are never used to train external models.

Developer-Focused Feature Suite: Enhance your application logic with advanced thread management features like multi-user threads, thread branching, user tagging, and organizational folders, enabling sophisticated collaboration and management of AI context.

Conclusion

backboard.io provides the essential infrastructure for developers ready to move beyond basic, stateless LLM calls. By unifying access to thousands of models and embedding persistent memory and RAG capabilities, you can focus on building truly intelligent, context-aware applications that deliver tangible value.

Explore how backboard.io can help you integrate advanced AI capabilities faster and more reliably.


Backboard.io Alternatives


TaskingAI brings Firebase's simplicity to AI-native app development. Start your project by selecting an LLM model, build a responsive assistant supported by stateful APIs, and enhance its capabilities with managed memory, tool integrations, and a retrieval-augmented generation system.


Memory Box: Your universal AI memory. Unify enterprise knowledge across all tools, secure data, & deploy smart AI agents that truly remember.


Llongterm: The plug-and-play memory layer for AI agents. Eliminate context loss & build intelligent, persistent AI that never asks users to repeat themselves.


Supermemory gives your LLMs long-term memory. Instead of stateless text generation, they recall the right facts from your files, chats, and tools, so responses stay consistent, contextual, and personal.


Agents promote human-like reasoning and are a significant step toward building AGI and understanding ourselves as humans. Memory is a key component of how humans approach tasks and should be given equal weight when building AI agents. memary emulates human memory to advance these agents.