Ktransformers

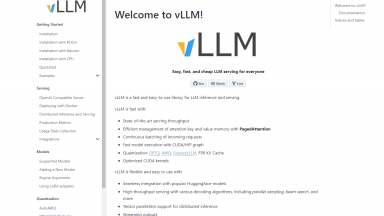

VLLM

Ktransformers

| Field | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Developer Tools, Software Development, Data Science |

VLLM

| Field | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Software Development, Data Science |

Ktransformers Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Month Visit | |

VLLM Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Month Visit | |

Estimated traffic data from Similarweb

What are some alternatives?

Transformer Lab - An open-source platform for building, tuning, and running LLMs locally without coding. Download hundreds of models, fine-tune across hardware, chat, evaluate, and more.

Megatron-LM - Ongoing research training transformer models at scale

OLMo 2 32B - An open-source LLM described as rivaling GPT-3.5, with free code, data, and weights for research, customization, and building AI applications.

Monster API - MonsterGPT: Fine-tune and deploy custom AI models via chat. Simplifies complex LLM and AI tasks and provides access to 60+ open-source models.

Kolosal AI - Kolosal AI is an open-source platform that enables users to run large language models (LLMs) locally on devices like laptops, desktops, and even Raspberry Pi, prioritizing speed, efficiency, privacy, and eco-friendliness.