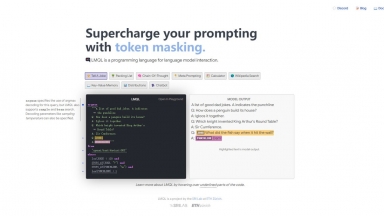

LMQL

LMQL

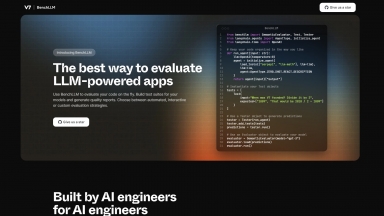

BenchLLM by V7

LMQL

| Attribute | Value |
| --- | --- |
| Launched | 2022-11 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | Cloudflare Analytics, Fastly, Google Fonts, GitHub Pages, Highlight.js, jQuery, Varnish |
| Tag | Text Analysis |

BenchLLM by V7

| Attribute | Value |
| --- | --- |
| Launched | 2023-07 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | Framer, Google Fonts, HSTS |
| Tag | Test Automation, LLM Benchmark Leaderboard |

LMQL Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 2509184 |
| Country | United States |
| Monthly Visits | 8348 |

BenchLLM by V7 Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | 12812835 |
| Country | United States |
| Monthly Visits | 961 |

Estimated traffic data from Similarweb

What are some alternatives?

LM Studio - An easy-to-use, cross-platform desktop app for experimenting with local and open-source large language models (LLMs). It lets you download and run any ggml-compatible model from Hugging Face, provides a simple yet powerful UI for model configuration and inference, and uses your GPU when possible.

LLMLingua - Compresses the prompt and KV cache to speed up LLM inference and improve the model's perception of key information, achieving up to 20x compression with minimal performance loss.

LazyLLM - A low-code tool for building multi-agent LLM apps. Build, iterate on, and deploy complex AI solutions quickly, from prototype to production, so you can focus on algorithms rather than engineering.

vLLM - A high-throughput and memory-efficient inference and serving engine for LLMs

LLM-X - Integrates large language models into your workflow through a secure API, aiming to boost productivity on LLM-based projects.