What is LMQL?

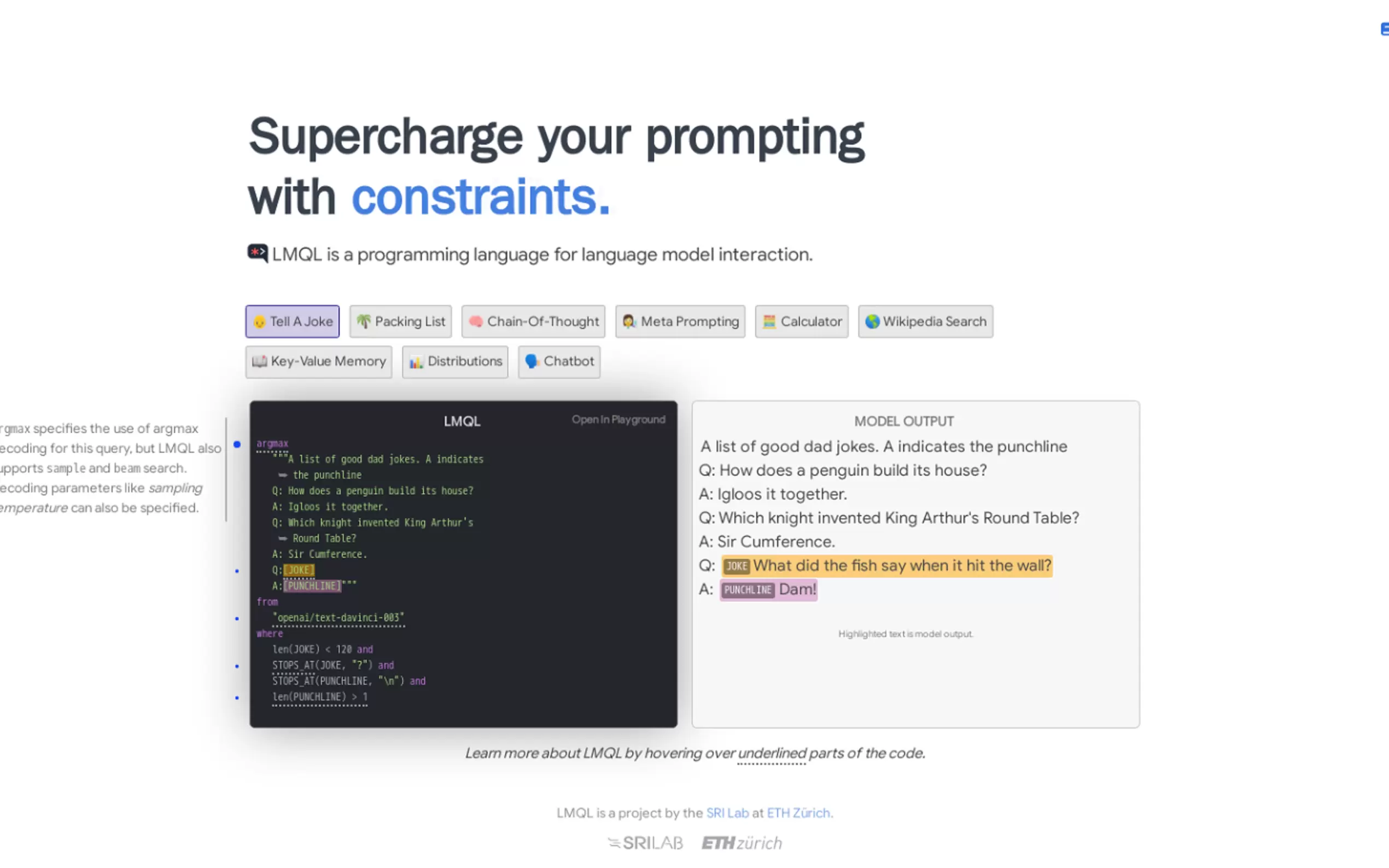

LMQL is a Python-based programming language designed for interacting with large language models (LLMs). It enables robust and modular LLM prompting through declarative templates, constraints, and an optimizing runtime. With LMQL, users can create structured programs that guide LLMs to produce reliable, well-formatted outputs, making it easier to integrate LLMs into automated workflows.

Key Features:

📝 Declarative Templates

Define LLM prompts using simple templates and variables, allowing for clear and structured outputs.

🔒 Constraints for Reliable Outputs

Constrain LLM responses to specific formats or values, ensuring consistency and preventing unexpected results.

📊 Distribution Clause for Confidence Scoring

Obtain probability distributions over possible outputs, giving insight into the model’s confidence in its classifications.

🔄 Dynamic Control Flow

Use conditional logic to react to model outputs, enabling more sophisticated and interactive prompt designs.
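To make the template and constraint ideas concrete, here is a minimal plain-Python sketch. It is not LMQL code and does not use the LMQL runtime: `run_template`, `fake_model`, and the canned candidates are hypothetical names invented for this illustration. It also swaps in a simpler technique, filtering whole candidate strings after the fact, whereas a constraint-aware runtime would restrict the output during generation.

```python
import re

# Placeholders look like [NAME]; the bracket syntax here is only illustrative.
HOLE = re.compile(r"\[([A-Z_]+)\]")


def run_template(template, propose, constraints):
    """Fill [HOLE] placeholders left to right with the first allowed candidate."""
    values = {}
    prompt = ""
    pos = 0
    for match in HOLE.finditer(template):
        prompt += template[pos:match.start()]
        name = match.group(1)
        allowed = constraints.get(name, lambda text: True)
        # propose() stands in for the model: it returns candidate completions.
        choice = next((c for c in propose(prompt, name) if allowed(c)), None)
        if choice is None:
            raise ValueError(f"no candidate for {name} satisfies its constraint")
        values[name] = choice
        prompt += choice
        pos = match.end()
    prompt += template[pos:]
    return prompt, values


def fake_model(prompt, name):
    """Hypothetical stand-in for an LLM: canned candidates per placeholder."""
    canned = {"SENTIMENT": ["I think it is great overall!", "positive"]}
    return canned[name]


template = "Review: Loved the food.\nSentiment: [SENTIMENT]"
constraints = {
    "SENTIMENT": lambda text: text in {"positive", "neutral", "negative"},
}
prompt, values = run_template(template, fake_model, constraints)
print(values)
```

The free-form first candidate is rejected and the constrained value is kept, which is the kind of guarantee the constraints feature is meant to give downstream code.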

Use Cases:

Sentiment Analysis Automation

LMQL can automate sentiment analysis on customer reviews, providing both classification and confidence scores.

Customized Joke Generation

Users can create programs that generate dad jokes with specific punchlines, using constraints to ensure proper formatting.

Detailed Review Breakdowns

Based on the sentiment of a review, LMQL can dynamically prompt the model to provide further details about what the reviewer liked or disliked.
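The review-breakdown use case amounts to branching on an earlier model output before building the next prompt. A minimal plain-Python sketch of that branching follows; the function name `follow_up_prompt`, the question wording, and the sample review are assumptions made for illustration, not part of LMQL.

```python
def follow_up_prompt(review, sentiment):
    """Pick the follow-up question based on a previously obtained sentiment."""
    if sentiment == "positive":
        question = "What did the reviewer like?"
    elif sentiment == "negative":
        question = "What did the reviewer dislike?"
    else:
        question = "What, if anything, stood out to the reviewer?"
    # The returned text would be sent to the model as the next prompt.
    return f"Review: {review}\nSentiment: {sentiment}\n{question}\nAnswer:"


next_prompt = follow_up_prompt("The pasta was cold.", "negative")
print(next_prompt)
```

Keeping the branch in ordinary code means the second question depends on the first answer without any manual copy-and-paste between prompts.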

Conclusion:

LMQL simplifies the process of programming LLMs by combining declarative elements, constraints, and dynamic control flow. Its ability to ensure reliable and structured outputs makes it an ideal tool for developers looking to integrate LLMs into their applications with precision and confidence.

FAQs:

What makes LMQL different from standard Python with LLMs?

LMQL offers declarative templates, constraints, and an optimizing runtime specifically designed for interacting with LLMs, making it easier to manage and structure LLM outputs.

Can LMQL work with models other than OpenAI?

Yes, LMQL supports a range of models including those from HuggingFace Transformers, llama.cpp, and Azure OpenAI.

How does the distribution clause benefit users?

The distribution clause provides probability distributions over possible outputs, giving users insight into the model's confidence in its responses, which is particularly useful for decision-making processes.
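One way to picture a distribution over a fixed set of outputs is as per-label scores normalized so that they sum to one. The plain-Python sketch below illustrates that idea only; it is not LMQL's internal implementation, `label_distribution` is a hypothetical helper, and the log-probability values are made up.

```python
import math


def label_distribution(logprobs):
    """Softmax-normalize per-label log-probabilities into a distribution."""
    peak = max(logprobs.values())
    # Subtracting the maximum keeps exp() numerically stable.
    weights = {label: math.exp(lp - peak) for label, lp in logprobs.items()}
    total = sum(weights.values())
    return {label: w / total for label, w in weights.items()}


# Made-up scores for three allowed labels.
scores = {"positive": -0.2, "neutral": -2.5, "negative": -3.1}
dist = label_distribution(scores)
best = max(dist, key=dist.get)
print(best, round(dist[best], 3))
```

A decision rule can then use the top probability as a confidence score, for example routing low-confidence reviews to a human.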

LMQL Alternatives


LM Studio is an easy-to-use desktop app for experimenting with local and open-source Large Language Models (LLMs). The cross-platform app lets you download and run any ggml-compatible model from Hugging Face, and provides a simple yet powerful UI for model configuration and inference. It uses your GPU when possible.
