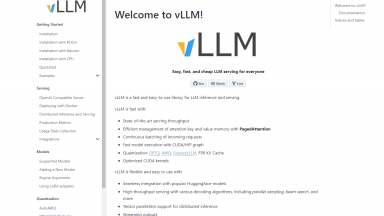

VLLM

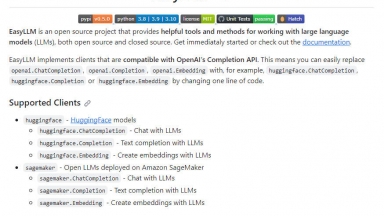

EasyLLM

VLLM

| Field | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Software Development, Data Science |

EasyLLM

| Field | Value |
| --- | --- |
| Launched | 2024 |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Developer Tools, Chatbot Builder, Coding Assistants |

VLLM Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Monthly Visits | |

EasyLLM Rank/Visit

| Metric | Value |
| --- | --- |
| Global Rank | |
| Country | |
| Monthly Visits | |

Estimated traffic data from Similarweb

What are some alternatives?

LLMLingua - Compresses the prompt and KV cache to speed up LLM inference and improve the model's perception of key information, achieving up to 20x compression with minimal performance loss.

StreamingLLM - An efficient framework for deploying LLMs in streaming apps. It handles infinite sequence lengths without sacrificing performance and delivers speedups of up to 22.2x. Ideal for multi-round dialogues and daily assistants.

LazyLLM - A low-code tool for multi-agent LLM apps. Build, iterate on, and deploy complex AI solutions fast, from prototype to production. Focus on algorithms, not engineering.

OneLLM - An end-to-end no-code platform to build and deploy LLMs.