What is EasyLLM?

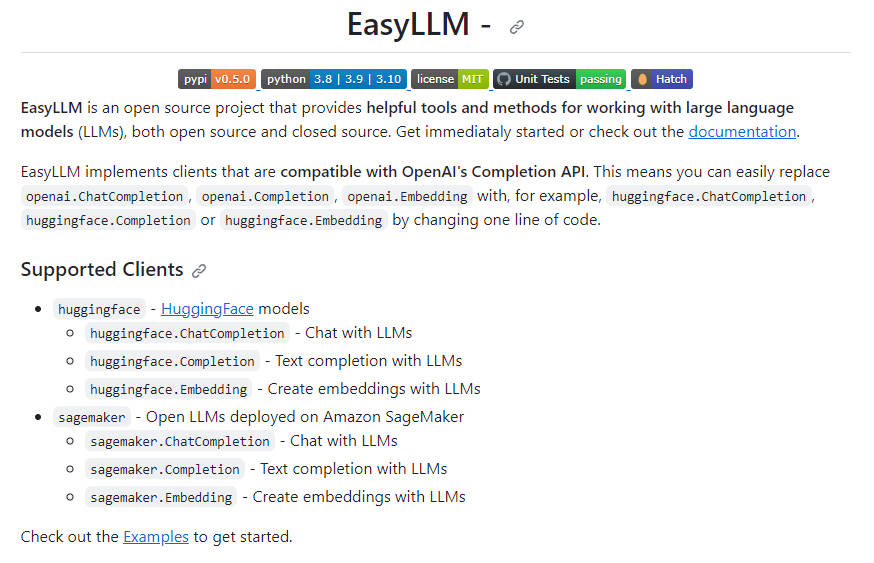

EasyLLM is an open-source project that offers useful tools and methods for working with large language models (LLMs). It provides clients that follow OpenAI's API format (ChatCompletion, Completion, and Embedding), so code written against that interface can target other models. With EasyLLM, users can switch between backends, such as Hugging Face and Amazon SageMaker, by changing a single line of code. The project aims to simplify working with LLMs and includes examples and documentation to help users get started.
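Because every client accepts and returns the same OpenAI-style structures, calling code depends only on that shape. In the project's examples the switch amounts to changing which client module is imported (for instance `from easyllm.clients import huggingface` versus `sagemaker`); check the documentation for the exact model or endpoint arguments each backend expects. The sketch below runs offline: `make_stub_backend` and `ask` are hypothetical helpers, not part of EasyLLM, and the stub simply echoes the last user message inside an OpenAI-style chat completion response.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, str]
ChatFn = Callable[..., Dict[str, Any]]


def make_stub_backend(provider: str) -> ChatFn:
    """Hypothetical offline backend that answers in OpenAI's chat completion format."""

    def create(model: str, messages: List[Message], **params: Any) -> Dict[str, Any]:
        # A real client would call a hosted model here; the stub ignores
        # sampling params and echoes the last message instead.
        reply = f"{provider}/{model}: {messages[-1]['content']}"
        return {
            "object": "chat.completion",
            "model": model,
            "choices": [
                {
                    "index": 0,
                    "message": {"role": "assistant", "content": reply},
                    "finish_reason": "stop",
                }
            ],
        }

    return create


def ask(create: ChatFn, model: str, question: str) -> str:
    """Calling code that relies only on the OpenAI-style request/response shape."""
    messages = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": question},
    ]
    response = create(model=model, messages=messages, temperature=0.7, max_tokens=64)
    return response["choices"][0]["message"]["content"]


# Switching backends is a one-line change: only this binding differs.
chat = make_stub_backend("huggingface")
# chat = make_stub_backend("sagemaker")

print(ask(chat, "meta-llama/Llama-2-7b-chat-hf", "What is the sun made of?"))
# prints "huggingface/meta-llama/Llama-2-7b-chat-hf: What is the sun made of?"
```

Swapping the commented line changes the backend without touching `ask`, which is the property the compatible clients are designed around.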

Key Features:

1. Compatible Clients: EasyLLM implements clients that follow OpenAI's API format: huggingface.ChatCompletion, huggingface.Completion, huggingface.Embedding, sagemaker.ChatCompletion, sagemaker.Completion, and sagemaker.Embedding. With these clients, users can perform chat completion, text completion, and embedding creation.

2. Easy Integration: By changing a single line of code, users can switch between backends such as Hugging Face and Amazon SageMaker. This makes it straightforward to pick the model or hosting option that best fits a given task.

3. Streaming Support: EasyLLM supports streaming completions, returning generated text incrementally as it is produced instead of waiting for the full response. This reduces perceived latency and is particularly useful for interactive, real-time applications such as chat interfaces.

4. Helper Modules: EasyLLM provides additional helper modules, such as evol_instruct and prompt_utils. evol_instruct uses evolutionary methods to create instructions for LLMs, and prompt_utils converts prompts between formats, for example from OpenAI-style messages to the prompt format expected by open-source models like Llama 2.
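The kind of conversion prompt_utils handles can be sketched as follows. `to_llama2_prompt` is a hypothetical helper written for illustration, not EasyLLM's own implementation (see `easyllm.prompt_utils` for the project's builders). It follows Meta's Llama 2 chat template, in which an optional system prompt is wrapped in `<<SYS>>` tags inside the first `[INST]` block and each completed exchange ends with `</s>`.

```python
from typing import Dict, List

Message = Dict[str, str]


def to_llama2_prompt(messages: List[Message]) -> str:
    """Hypothetical converter from OpenAI-style messages to a Llama 2 chat prompt."""
    system = ""
    # An optional leading system message becomes a <<SYS>> block.
    if messages and messages[0]["role"] == "system":
        system = f"<<SYS>>\n{messages[0]['content'].strip()}\n<</SYS>>\n\n"
        messages = messages[1:]

    parts: List[str] = []
    for msg in messages:
        if msg["role"] == "user":
            # Each user turn opens a new [INST] block; the system block is
            # folded into the first one only.
            parts.append(f"<s>[INST] {system}{msg['content'].strip()} [/INST]")
            system = ""
        elif msg["role"] == "assistant":
            # A completed model answer closes its exchange with </s>.
            parts.append(f" {msg['content'].strip()} </s>")
        else:
            raise ValueError(f"unsupported role: {msg['role']!r}")
    return "".join(parts)


conversation = [
    {"role": "system", "content": "Be brief."},
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello!"},
    {"role": "user", "content": "Who are you?"},
]
print(to_llama2_prompt(conversation))
```

The prompt ends with an open `[/INST]`, leaving the model to generate the next assistant turn.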

Use Cases:

1. Chat Completion: EasyLLM's huggingface.ChatCompletion and sagemaker.ChatCompletion clients can be used to build chatbot applications. These clients take a list of messages (system, user, and assistant turns) and return the model's next reply, which makes them suitable for customer support, virtual assistants, or any scenario that requires real-time chat responses.

2. Text Completion: With huggingface.Completion and sagemaker.Completion, EasyLLM lets users generate a continuation of a plain-text prompt. This can be useful for tasks like content generation, writing assistance, or generating suggestions based on user input.

3. Embedding Creation: EasyLLM's huggingface.Embedding and sagemaker.Embedding clients turn text into embeddings, numeric vectors that capture meaning, using embedding models. Embeddings are useful for tasks such as semantic search, clustering, and text classification (for example, sentiment analysis).
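Most of these embedding tasks reduce to comparing vectors. The sketch below ranks documents by cosine similarity to a query, the core of a simple semantic search. The three-dimensional vectors are made-up toy values standing in for what an embedding client would return (real embeddings have hundreds or thousands of dimensions), and `rank_by_similarity` is a hypothetical helper, not part of EasyLLM.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


def rank_by_similarity(query: Sequence[float], docs: Dict[str, Sequence[float]]) -> List[str]:
    """Return document ids ordered from most to least similar to the query."""
    return sorted(docs, key=lambda doc_id: cosine_similarity(query, docs[doc_id]), reverse=True)


# Toy vectors standing in for real embeddings (made-up values).
docs = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.2],
    "gift-cards": [0.3, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]  # e.g. an embedding of "How do I get my money back?"

print(rank_by_similarity(query, docs))
# ['refund-policy', 'gift-cards', 'shipping-times']
```

Cosine similarity ignores vector length and compares only direction, which is why it is a common default for comparing embeddings.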

EasyLLM is a versatile open-source project that simplifies working with large language models. Its OpenAI-compatible clients, one-line switching between backends, streaming support, and helper modules make it useful for a range of applications, whether you need chat completion, text completion, or embedding creation. The project's examples and documentation are a good place to start integrating it into your own language model workflows.