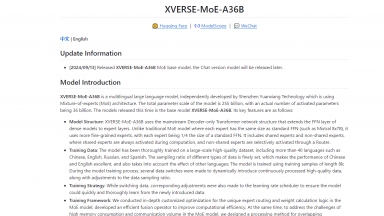

XVERSE-MoE-A36B

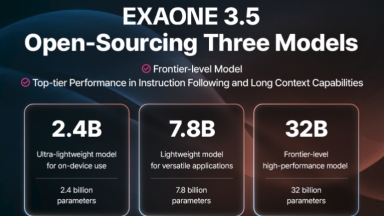

EXAONE 3.5

XVERSE-MoE-A36B

| Field | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Content Creation, Story Writing, Text Generators |

EXAONE 3.5

| Field | Value |
| --- | --- |
| Launched | |
| Pricing Model | Free |
| Starting Price | |
| Tech used | |
| Tag | Text Generators, Question Answering, Answer Generators |

What are some alternatives?

Yuan2.0-M32 - Yuan2.0-M32 is a Mixture-of-Experts (MoE) language model with 32 experts, of which 2 are active.

DeepSeek Chat - DeepSeek-V2 is a 236-billion-parameter MoE model billed as offering leading performance at a very low price. Chat and API have been upgraded to the latest model.

JetMoE-8B - JetMoE-8B was trained for less than $0.1 million yet outperforms LLaMA2-7B from Meta AI, which has multi-billion-dollar training resources. LLM training can be much cheaper than generally thought.

Yi-VL-34B - Yi Visual Language (Yi-VL) model is the open-source, multimodal version of the Yi Large Language Model (LLM) series, enabling content comprehension, recognition, and multi-round conversations about images.