Best XVERSE-MoE-A36B Alternatives in 2025

-

Yuan2.0-M32 is a Mixture-of-Experts (MoE) language model with 32 experts, of which 2 are active.

-

DeepSeek-V2: a 236-billion-parameter MoE model. Leading performance. Ultra-affordable. Unparalleled experience. Chat and API upgraded to the latest model.

-

JetMoE-8B was trained for less than $0.1 million yet outperforms LLaMA2-7B from Meta AI, which has multi-billion-dollar training resources. LLM training can be much cheaper than generally thought.

-

Discover EXAONE 3.5 by LG AI Research. A suite of bilingual (English & Korean) instruction-tuned generative models from 2.4B to 32B parameters. Supports long contexts of up to 32K tokens, with top-notch performance in real-world scenarios.

-

Yi Visual Language (Yi-VL) model is the open-source, multimodal version of the Yi Large Language Model (LLM) series, enabling content comprehension, recognition, and multi-round conversations about images.

-

Qwen2.5 series language models offer enhanced capabilities with larger datasets, more knowledge, better coding and math skills, and closer alignment to human preferences. Open-source and available via API.

-

Qwen2 is the large language model series developed by the Qwen team, Alibaba Cloud.

-

MOSS: an open-source language model supporting Chinese & English with 16B parameters. Run it on a single GPU for seamless conversations & plugin support.

-

Seed-X: Open-source, high-performance multilingual translation for 28 languages. Gain control, transparent AI & unparalleled accuracy.

-

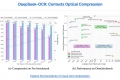

Boost LLM efficiency with DeepSeek-OCR. Compress visual documents 10x with 97% accuracy. Process vast data for AI training & enterprise digitization.

-

Hunyuan-MT-7B: Open-source AI machine translation. Master 33+ languages with unrivaled contextual & cultural accuracy. WMT2025 winner, lightweight & efficient.

-

Qwen2-VL is the multimodal large language model series developed by the Qwen team, Alibaba Cloud.

-

GLM-130B: An Open Bilingual Pre-Trained Model (ICLR 2023)

-

Eagle 7B: Soaring past Transformers with 1 Trillion Tokens Across 100+ Languages (RWKV-v5)

-

OLMo 2 32B: Open-source LLM rivals GPT-3.5! Free code, data & weights. Research, customize, & build smarter AI.

-

Unlock powerful AI for agentic tasks with LongCat-Flash. Open-source MoE LLM offers unmatched performance & cost-effective, ultra-fast inference.

-

Enhance your NLP capabilities with Baichuan-7B - a groundbreaking model that excels in language processing and text generation. Discover its bilingual capabilities, versatile applications, and impressive performance. Shape the future of human-computer communication with Baichuan-7B.

-

DeepSeek LLM is an advanced language model comprising 67 billion parameters. It has been trained from scratch on a vast dataset of 2 trillion tokens in both English and Chinese.

-

GLM-4-9B is the open-source version of the latest generation of pre-trained models in the GLM-4 series launched by Zhipu AI.

-

GPT-NeoX-20B is a 20 billion parameter autoregressive language model trained on the Pile using the GPT-NeoX library.

-

CogVideoX models are based on advanced large-scale model technology to meet the needs of commercial-grade applications.

-

C4AI Aya Vision 8B: Open-source multilingual vision AI for image understanding. OCR, captioning, reasoning in 23 languages.

-

A novel Multimodal Large Language Model (MLLM) architecture, designed to structurally align visual and textual embeddings.

-

Unlock powerful multilingual text understanding with Qwen3 Embedding. #1 MTEB, 100+ languages, flexible models for search, retrieval & AI.

-

A Trailblazing Language Model Family for Advanced AI Applications. Explore efficient, open-source models with layer-wise scaling for enhanced accuracy.

-

Molmo AI is an open-source multimodal artificial intelligence model developed by AI2. It can process and generate various types of data, including text and images.

-

ChatGLM-6B is an open bilingual (Chinese and English) model with 6.2B parameters, currently optimized for Chinese QA and dialogue.

-

MiniCPM is an End-Side LLM developed by ModelBest Inc. and TsinghuaNLP, with only 2.4B parameters excluding embeddings (2.7B in total).

-

BAGEL: Open-source multimodal AI from ByteDance-Seed. Understands, generates, edits images & text. Powerful, flexible, comparable to GPT-4o. Build advanced AI apps.

-

Meta's Llama 4: open-weight AI models with MoE. Process text, images, video. Huge context window. Build smarter, faster!