What is Linggen?

Linggen is a free, local-first application designed to close the "context gap" in AI development tools. It indexes your codebase and tribal knowledge, giving AI assistants a persistent, deep understanding of your architecture, cross-project dependencies, and long-term design decisions. For developers juggling complex or multiple repositories, Linggen keeps your AI accurately informed. This turns it from a general chat tool into a knowledgeable team member.

Key Features

Linggen elevates your AI experience by providing persistent memory and deep architectural context, all while maintaining absolute control over your data.

🔒 Privacy-First, Local Architecture

Your code stays strictly on your machine. Linggen is built on a fast Rust backend and a local vector database (LanceDB), and it has no telemetry, no cloud dependency, and no data egress. You can use advanced AI assistance without exposing proprietary code or creating external accounts.

🧠 Persistent Cross-Project Context

Stop re-explaining project architecture or copy-pasting files by hand. Linggen maintains a separate, dedicated context for every codebase you index. This enables automatic context switching. Your AI assistant can retrieve design patterns or authentication logic from Project B while you work in Project A, so you avoid lost sessions and context fatigue.
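
The idea of one dedicated context per codebase, readable from any other project, can be pictured as a store keyed by project name. Below is a minimal Python sketch under that assumption. `ProjectMemory`, `add`, and `lookup` are hypothetical names, not Linggen's API, and Linggen's real storage (a Rust backend with LanceDB) is not modeled here.

```python
from collections import defaultdict


class ProjectMemory:
    """Hypothetical per-project memory: each indexed project keeps its own notes."""

    def __init__(self) -> None:
        # project name -> list of (topic, note) pairs, kept separate per project
        self._notes: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add(self, project: str, topic: str, note: str) -> None:
        self._notes[project].append((topic, note))

    def lookup(self, project: str, topic: str) -> list[str]:
        # Any project's store can be read, regardless of which one is "active".
        return [note for t, note in self._notes.get(project, []) if t == topic]


memory = ProjectMemory()
memory.add("project-b", "auth", "Tokens are validated in middleware before routing.")
memory.add("project-a", "messaging", "Events are published to a queue.")

# While working in project-a, pull the auth pattern recorded for project-b.
print(memory.lookup("project-b", "auth"))
```

Keeping the stores separate means a lookup never mixes notes from unrelated projects unless you name the project explicitly.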

🔌 Unified MCP Integration

Through the Model Context Protocol (MCP), Linggen becomes an authoritative knowledge source for your favorite AI tools. Its built-in MCP server exposes the indexed knowledge to compatible IDE assistants such as Cursor, Zed, and VS Code extensions. With this single endpoint, your AI can query the entire stored architectural memory, not just the current files. The result is more accurate, context-aware responses.
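
MCP messages are JSON-RPC 2.0, and an assistant invokes a server-side tool with a `tools/call` request. The sketch below shows what such a request could look like when an IDE assistant queries Linggen. The tool name `search_memory` and its `project`/`query` arguments are hypothetical, because Linggen's actual tool names are not given here. The transport (stdio or HTTP) is also left out.

```python
import json


def build_tool_call(request_id: int, tool: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return json.dumps(message)


# "search_memory" and its arguments are hypothetical, not a documented Linggen tool.
payload = build_tool_call(
    1, "search_memory", {"project": "project-a", "query": "how are messages sent?"}
)
print(payload)
```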

🗺️ Graph View System Map

Accelerate onboarding and clarify refactoring risks with an interactive, visual system map. The dependency graph shows file and module relationships, giving you an immediate visual understanding of large codebases. This view is invaluable for "blast-radius" analysis before a refactor, because it helps ensure changes don't unintentionally break critical dependencies.
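
Blast-radius analysis on a dependency graph amounts to finding everything that directly or transitively depends on the module you plan to change. You do this by reversing the edges and walking them breadth-first. The Python sketch below assumes a hypothetical module graph. It illustrates the general technique and is not Linggen's implementation.

```python
from collections import deque


def blast_radius(depends_on: dict[str, set[str]], changed: str) -> set[str]:
    """Return every module that directly or transitively depends on `changed`."""
    # Invert the edges: for each module, record which modules depend on it.
    dependents: dict[str, set[str]] = {}
    for module, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(module)

    # Breadth-first walk over the reversed edges, visiting each module once.
    affected: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, set()):
            if parent not in affected:
                affected.add(parent)
                queue.append(parent)
    return affected


# Hypothetical graph: module -> modules it imports.
graph = {
    "api/handlers": {"core/utils", "db/models"},
    "db/models": {"core/utils"},
    "cli/main": {"api/handlers"},
    "core/utils": set(),
}
print(sorted(blast_radius(graph, "core/utils")))  # ['api/handlers', 'cli/main', 'db/models']
```

`cli/main` never imports `core/utils` directly, yet it still appears in the result. Transitive dependents like this are easy to miss without a graph.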

📝 Doc-First Knowledge Capture

Linggen includes a built-in markdown editor that supports a doc-first workflow. Capture design decisions, architecture notes, and critical "gotchas" directly within your project's context. The AI can then utilize this tribal knowledge via semantic search, ensuring that institutional memory—which often resides outside the code—is always available and actionable during implementation.
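
Retrieving notes by meaning comes down to turning the notes and the query into vectors, scoring them by cosine similarity, and keeping the top matches. The Python sketch below shows that ranking loop under a loud simplification. The `embed` function is a word-count stand-in for a real embedding model, so it only matches shared words and not true semantics. The note titles and contents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for a real embedding model: lowercase word counts.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(notes: dict[str, str], query: str, k: int = 2) -> list[str]:
    """Return the titles of the k notes most similar to the query."""
    q = embed(query)
    ranked = sorted(notes, key=lambda title: cosine(q, embed(notes[title])), reverse=True)
    return ranked[:k]


# Hypothetical design notes captured alongside a project.
notes = {
    "ADR-007 retries": "Payment calls retry three times with exponential backoff.",
    "Gotcha: timezones": "Store all timestamps in UTC; convert only in the UI.",
    "Auth design": "Session tokens are rotated on every login.",
}
print(search(notes, "how do payment retries work?", k=1))  # ['ADR-007 retries']
```

A vector database such as LanceDB handles the storage and nearest-neighbor step at scale. The scoring idea is the same.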

Use Cases

Linggen lets developers use AI for complex, architectural tasks that the "context gap" previously made impractical.

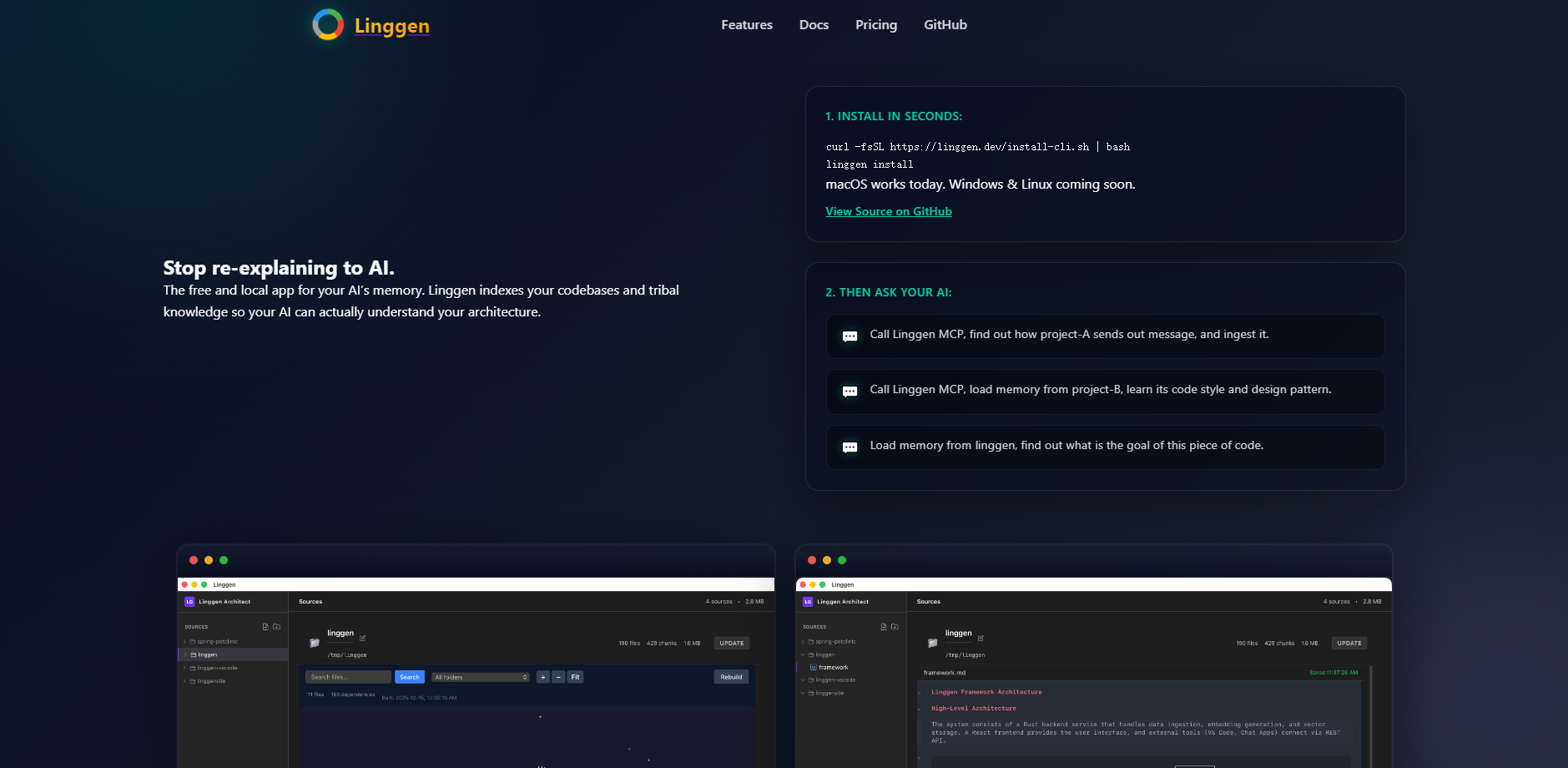

- Instant Architectural Deep Dive: A new developer needs to understand how a legacy microservice handles inter-service communication. Instead of spending hours hunting through files, they simply instruct their AI, "Call Linggen MCP, find out how project-A sends out messages, and ingest it." The AI instantly provides a clear summary of the mechanism, referencing relevant code and the associated architectural markdown documentation stored in Linggen's memory.

- Maintaining Cross-Project Consistency: You are developing a new service but need to ensure it adheres to the specific security patterns and code style established in another repository. You instruct your AI to "Load memory from project-B, learn its code style and design pattern." Your AI assistant now generates new code that is consistent with organizational standards, eliminating the need for manual cross-referencing and lengthy code reviews focused solely on style.

- Safe and Informed Refactoring: Before modifying a core utility function, you check the Graph View. You instantly visualize that the function is used by six modules across three different repositories. This immediate insight informs a safer, more targeted refactoring strategy and allows you to proactively test all affected systems, dramatically reducing the risk of production errors.

Conclusion

Linggen transforms AI assistants from limited conversational tools into deeply knowledgeable, persistent team members. By bridging the context gap and prioritizing a local-first, privacy-focused architecture, Linggen delivers the accurate, architectural-level insights modern development demands.


Linggen Alternatives


Claude-Mem seamlessly preserves context across sessions by automatically capturing tool usage observations, generating semantic summaries, and making them available to future sessions. This enables Claude to maintain continuity of knowledge about projects even after sessions end or reconnect.


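A general-purpose AI memory that uncovers patterns you didn't know existed. Hybrid search (semantic + lexical + categorical) achieves 85% precision@5, compared with 45% for a pure vector database. Continuous clustering surfaces findings such as "authentication bugs across 4 projects share a root cause" and "this fix succeeded 3 out of 4 times but failed in distributed systems." MCP-native: one brain for Claude, Cursor, Windsurf, and VS Code. 100% local via Docker, so your code never leaves your machine. Deploys in 60 seconds. Stop losing context and start accumulating knowledge.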
