What is Linggen?

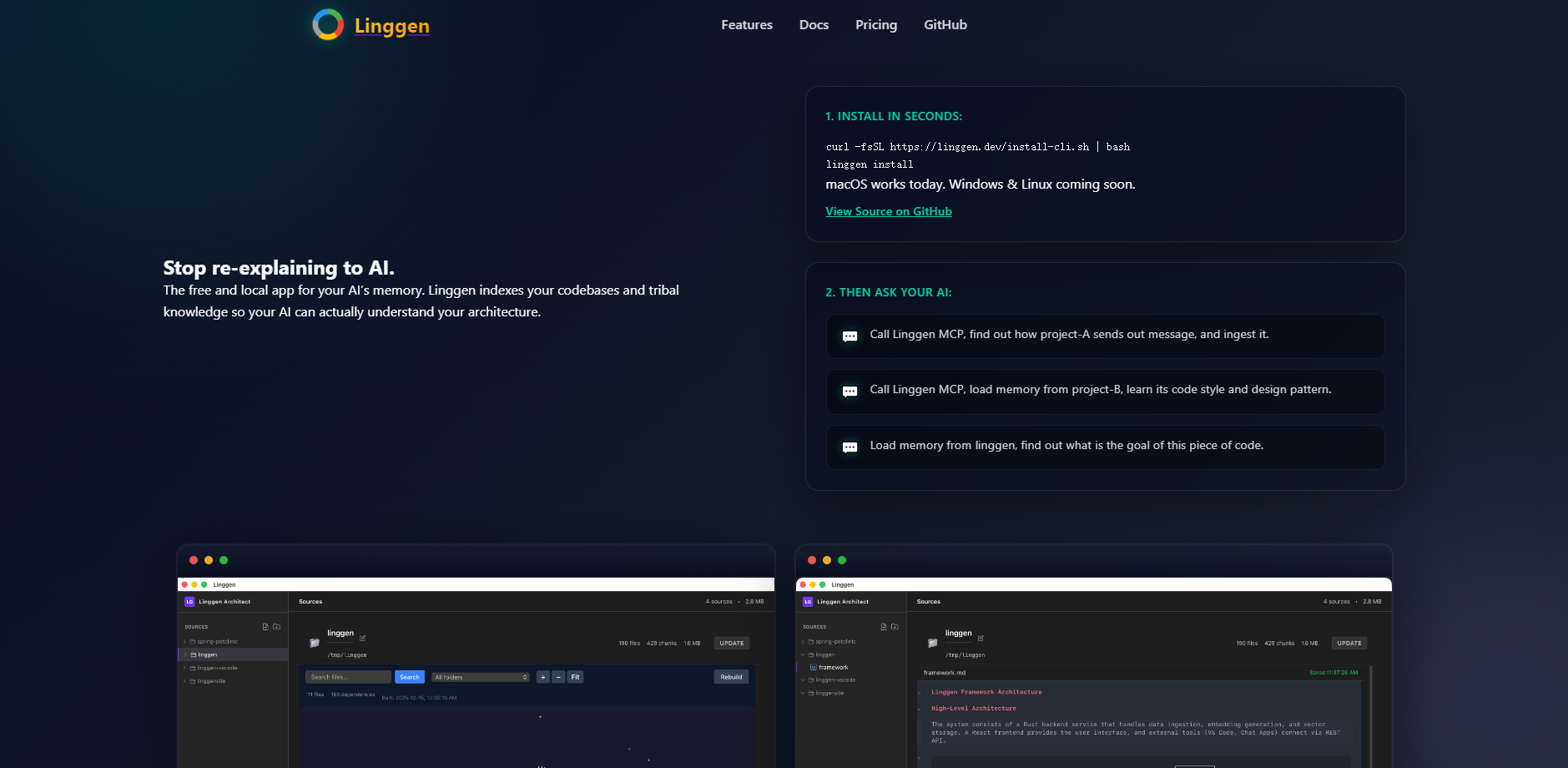

Linggen is a free, local-first application designed to close the "context gap" in AI development tools. It indexes your entire codebase and tribal knowledge, giving AI assistants a persistent, deep understanding of your architecture, cross-project dependencies, and long-term design decisions. For developers juggling complex or multiple repositories, Linggen keeps your AI accurately informed, turning it from a general chat tool into a knowledgeable team member.

Key Features

Linggen elevates your AI experience by providing persistent memory and deep architectural context, all while maintaining absolute control over your data.

🔒 Privacy-First, Local Architecture

Your code stays strictly on your machine. Built on a fast Rust backend and a local vector database (LanceDB), Linggen runs with no telemetry, no cloud dependency, and no data egress. This architecture lets you use advanced AI assistance without exposing proprietary information or creating external accounts.

🧠 Persistent Cross-Project Context

Eliminate the need to re-explain project architecture or copy-paste files by hand. Linggen maintains separate, dedicated context for every codebase you index. This enables automatic context switching and lets your AI assistant retrieve design patterns or authentication logic from Project B while you actively work in Project A, overcoming session loss and context fatigue.

🔌 Unified MCP Integration

Linggen acts as an authoritative knowledge source for your favorite AI tools via the Model Context Protocol (MCP) standard. By running a built-in MCP server, Linggen exposes its indexed knowledge to compatible IDE assistants (like Cursor, Zed, and VS Code extensions). This single endpoint allows your AI to query not just the current files, but the entire stored architectural memory, leading to more accurate and contextual responses.
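To make the MCP exchange more concrete, here is a minimal sketch of the kind of message an MCP client sends when it invokes a server tool. MCP messages are JSON-RPC 2.0, and `tools/call` is the protocol's method for invoking a tool. The tool name `search_project_memory` and its arguments are hypothetical, chosen only for illustration; they are not taken from Linggen's documentation.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only.
request = build_tool_call(
    1,
    "search_project_memory",
    {"project": "project-A", "query": "how are messages sent between services?"},
)
print(request)
```

In practice the IDE assistant builds and sends these messages itself; the sketch only shows what travels over the single endpoint.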

🗺️ Graph View System Map

Accelerate onboarding and clarify refactoring risks with an interactive, visual system map. The dependency graph clearly shows file and module relationships, providing an immediate, visual understanding of large codebases. This view is invaluable for performing accurate "blast-radius" analysis before beginning a refactor, ensuring changes don't unintentionally break critical dependencies.
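The idea behind "blast-radius" analysis can be sketched in a few lines: invert the import graph, then walk outward from the file you plan to change to collect everything that depends on it, directly or transitively. This is an illustrative sketch only, not Linggen's implementation; the `imports` mapping and file names are made up for the example.

```python
from collections import deque


def blast_radius(imports: dict[str, list[str]], target: str) -> set[str]:
    """Return every module that depends on `target`, directly or transitively."""
    # Invert the "A imports B" edges so we can walk from a module to its dependents.
    dependents: dict[str, set[str]] = {}
    for module, deps in imports.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(module)

    # Breadth-first walk over the inverted edges.
    affected: set[str] = set()
    queue = deque([target])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, ()):
            if parent not in affected:
                affected.add(parent)
                queue.append(parent)
    return affected


# Hypothetical import graph: each key imports the files in its list.
imports = {
    "repo1/api.py": ["repo1/auth.py", "shared/utils.py"],
    "repo1/auth.py": ["shared/utils.py"],
    "repo2/worker.py": ["shared/utils.py"],
    "repo2/cli.py": ["repo2/worker.py"],
    "shared/utils.py": [],
}
print(sorted(blast_radius(imports, "shared/utils.py")))
```

Here a change to `shared/utils.py` reaches `repo2/cli.py` even though that file never imports it directly, which is the kind of indirect dependency a visual map helps surface.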

📝 Doc-First Knowledge Capture

Linggen includes a built-in markdown editor that supports a doc-first workflow. Capture design decisions, architecture notes, and critical "gotchas" directly within your project's context. The AI can then use this tribal knowledge via semantic search, so that institutional memory, which often resides outside the code, is always available and actionable during implementation.
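As a rough illustration of how notes can be ranked against a question, the sketch below scores each note by cosine similarity to the query. It uses a toy bag-of-words vector as a stand-in: a real semantic search would use learned embeddings stored in a vector database such as LanceDB, so matching here is purely lexical. The note names and contents are invented for the example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector (stand-in for a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(notes: dict[str, str], query: str, top_k: int = 1) -> list[str]:
    """Return the names of the `top_k` notes most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        notes,
        key=lambda name: cosine(embed(notes[name]), query_vec),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical project notes, for illustration only.
notes = {
    "auth.md": "Tokens are rotated every hour; never cache the refresh token.",
    "messaging.md": "Services publish events to the queue; consumers must be idempotent.",
}
print(search(notes, "why must queue consumers be idempotent"))
```

Swapping `embed` for a real embedding model would let the same ranking loop match notes by meaning rather than by shared words.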

Use Cases

Linggen lets developers use AI for complex architectural tasks that were previously impractical because of the "context gap."

- Instant Architectural Deep Dive: A new developer needs to understand how a legacy microservice handles inter-service communication. Instead of spending hours hunting through files, they simply instruct their AI, "Call Linggen MCP, find out how project-A sends out messages, and ingest it." The AI instantly provides a clear summary of the mechanism, referencing relevant code and the associated architectural markdown documentation stored in Linggen's memory.

- Maintaining Cross-Project Consistency: You are developing a new service but need to ensure it adheres to the specific security patterns and code style established in another repository. You instruct your AI to "Load memory from project-B, learn its code style and design pattern." Your AI assistant now generates new code that is consistent with organizational standards, eliminating the need for manual cross-referencing and lengthy code reviews focused solely on style.

- Safe and Informed Refactoring: Before modifying a core utility function, you check the Graph View. You instantly visualize that the function is used by six modules across three different repositories. This immediate insight informs a safer, more targeted refactoring strategy and allows you to proactively test all affected systems, dramatically reducing the risk of production errors.

Conclusion

Linggen transforms AI assistants from limited conversational tools into deeply knowledgeable, persistent team members. By bridging the context gap and prioritizing a local-first, privacy-focused architecture, Linggen delivers the accurate, architectural-level insights modern development demands.

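Linggen Alternatives

- Claude-Mem preserves context across sessions by automatically capturing tool usage observations, generating semantic summaries, and making them available to future sessions. This enables Claude to maintain continuity of knowledge about projects even after sessions end or reconnect.

- A general-purpose AI memory that surfaces latent patterns you had not noticed. Hybrid search (combining semantic, lexical, and categorical matching) reaches 85% precision@5, compared with 45% for a pure vector database. Its continuous cluster analysis surfaces findings such as "authentication errors in four projects share the same root cause" and "a fix succeeded three times out of four but failed in distributed systems." MCP-native design: a unified intelligence core for Claude, Cursor, Windsurf, and VS Code. It runs 100% locally via Docker, so your code never leaves your machine, and deploys in 60 seconds. Stop losing context and start compounding knowledge.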
