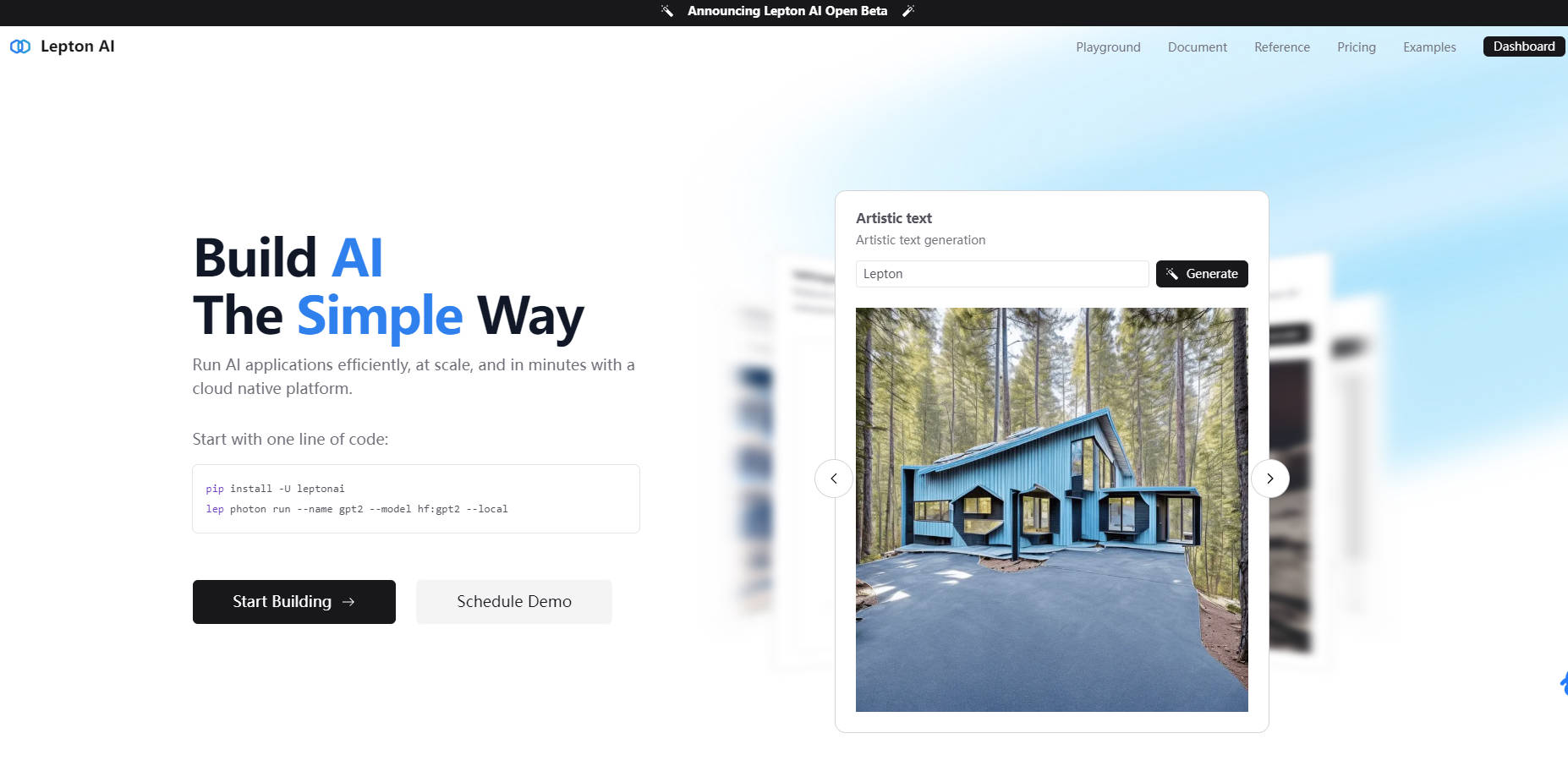

What is Lepton AI?

Lepton AI is an AI cloud platform designed to simplify the development, training, and deployment of AI models. Whether you’re building cutting-edge machine learning solutions or scaling your AI infrastructure, Lepton combines production-grade performance, cost efficiency, and comprehensive ML tooling. With flexible GPU options and enterprise-grade SLAs, Lepton lets you focus on your models while it handles the complexities of AI infrastructure.

Key Features

🚀 Cloud Platform: A fully managed AI cloud platform that streamlines model development, training, and deployment.

🛠️ Serverless Endpoints: Use OpenAI-compatible APIs out of the box for seamless integration and deployment.

🔧 Dedicated Endpoints: Fully customizable endpoints for deploying your own models and configurations.

💻 Dev Pods: Run interactive development sessions with managed GPUs—perfect for SSH, Jupyter notebooks, and VS Code.

📊 Batch Jobs: Execute distributed training or batch processing jobs with high-performance interconnects and accelerated storage.

🛠️ Platform Features: Access serverless file systems, databases, and network tools to build your applications with ease.

🔒 Enterprise Support: Dedicated node groups and SOC2/HIPAA compliance for enterprise users.
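Because the serverless endpoints are described as OpenAI-compatible, a client should be able to send the standard OpenAI chat-completions request shape. The sketch below builds such a request with only the Python standard library. The base URL, model name, and token are hypothetical placeholders, not values from Lepton's documentation; substitute the ones shown for your own deployment.

```python
import json
import urllib.request

# Hypothetical placeholders: none of these values come from Lepton's docs.
BASE_URL = "https://example-endpoint.invalid/api/v1"
MODEL = "example-model"
API_TOKEN = "YOUR_API_TOKEN"


def build_chat_request(prompt: str, base_url: str = BASE_URL, model: str = MODEL,
                       token: str = API_TOKEN) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # OpenAI-style bearer auth
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("Summarize what a dev pod is in one sentence.")
    print(req.get_method(), req.full_url)
    # Sending it to a live endpoint would look like this:
    # with urllib.request.urlopen(req) as resp:
    #     body = json.load(resp)
    #     print(body["choices"][0]["message"]["content"])
```

Keeping request construction separate from sending makes it easy to inspect the payload before pointing it at a real endpoint; an existing OpenAI client library configured with a custom base URL should work the same way.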

Why Choose Lepton AI?

⚡ Performance: Process over 20 billion tokens daily with 100% uptime and achieve speeds of 600+ tokens per second with Tuna, Lepton’s fast LLM engine.

🖼️ Scalability: Generate over 1 million images daily, with more than 10,000 models and LoRAs supported concurrently.

📈 Efficiency: 6x faster high-resolution image generation with DistriFusion, and a 5x performance boost from smart scheduling and optimized infrastructure.

🌐 Global Reach: Low-latency inference, with time-to-first-token (TTFT) as low as 10 ms for fast local deployment.
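To put the figures above in perspective, the arithmetic below converts the daily totals into average per-second rates and estimates the latency of a single response. It assumes traffic is spread evenly over a 86,400-second day (so the first two lines are lower-bound averages, since the quoted totals are "over" those amounts), and it assumes a hypothetical 1,000-token response decoded at a sustained 600 tokens/s after the best-case 10 ms TTFT.

```latex
\begin{aligned}
\text{average token throughput} &\geq \frac{20 \times 10^{9}\ \text{tokens}}{86{,}400\ \text{s}} \approx 2.3 \times 10^{5}\ \text{tokens/s} \\
\text{average image throughput} &\geq \frac{10^{6}\ \text{images}}{86{,}400\ \text{s}} \approx 11.6\ \text{images/s} \\
t_{1000} &\approx 0.01\ \text{s} + \frac{1000\ \text{tokens}}{600\ \text{tokens/s}} \approx 1.68\ \text{s}
\end{aligned}
```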

Use Cases

1️⃣ AI Model Development: Use Dev Pods and the Python SDK to build and experiment with AI models in a managed cloud environment.

2️⃣ Enterprise AI Deployment: Deploy dedicated endpoints with customizable configurations for enterprise-scale AI solutions.

3️⃣ Distributed Training: Run batch jobs for large-scale training with accelerated storage and high-performance interconnects.
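A common pattern in distributed batch jobs is for each worker to process a disjoint slice of the input, selected by its rank. The sketch below shows round-robin sharding. It assumes the launcher exports `RANK` and `WORLD_SIZE` environment variables (as PyTorch's `torchrun` does); whether Lepton batch jobs set these exact variables is an assumption, so check the platform documentation. The file names are hypothetical.

```python
import os
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def shard_for_worker(items: Sequence[T], rank: int, world_size: int) -> List[T]:
    """Return the round-robin slice of `items` assigned to worker `rank`."""
    if world_size <= 0:
        raise ValueError("world_size must be positive")
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    # Worker r takes items r, r + world_size, r + 2 * world_size, ...
    return list(items[rank::world_size])


if __name__ == "__main__":
    # Assumed variable names (torchrun convention); defaults allow a local run.
    rank = int(os.environ.get("RANK", "0"))
    world_size = int(os.environ.get("WORLD_SIZE", "1"))
    files = [f"shard-{i:03d}.parquet" for i in range(10)]  # hypothetical inputs
    print(rank, shard_for_worker(files, rank, world_size))
```

Round-robin slicing keeps shard sizes within one item of each other and needs no coordination between workers, since each one derives its share from its rank alone.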

Conclusion

Lepton AI is more than just a cloud platform: it’s a complete ecosystem for building, training, and deploying AI models at scale. With strong performance, reliability, and ease of use, Lepton suits individuals and enterprises alike.

FAQ

Q: Is Lepton suitable for small-scale AI projects?

A: Absolutely! Lepton’s serverless endpoints and flexible GPU options make it ideal for projects of all sizes.

Q: Can I bring my own hardware?

A: Yes, Lepton supports Bring Your Own Machines (BYOM) for self-managed node groups.

Q: What kind of customer support does Lepton offer?

A: Lepton provides enterprise SLAs, and you can file issues directly on GitHub for quick assistance.

Q: How fast is Lepton’s LLM engine?

A: Lepton’s optimized LLM engine, Tuna, delivers speeds of 600+ tokens per second.

Start building with Lepton AI today and experience the future of AI development! 🚀

Lepton AI Alternatives

Accelerate your AI development with Lambda AI Cloud. Get high-performance GPU compute, pre-configured environments, and transparent pricing.

Design, optimize, and deploy with confidence. Latent AI helps you build executable neural network runtimes that are

Beam is a serverless platform for generative AI. Deploy inference endpoints, train models, run task queues. Fast cold starts, pay-per-second. Ideal for AI/ML workloads.

Lemon AI: Your private, self-hosted AI agent. Run powerful, open-source AI on your hardware. Securely tackle complex tasks, save costs, & control your data.

Build gen AI models with Together AI. Benefit from the fastest and most cost-efficient tools and infra. Collaborate with our expert AI team that’s dedicated to your success.