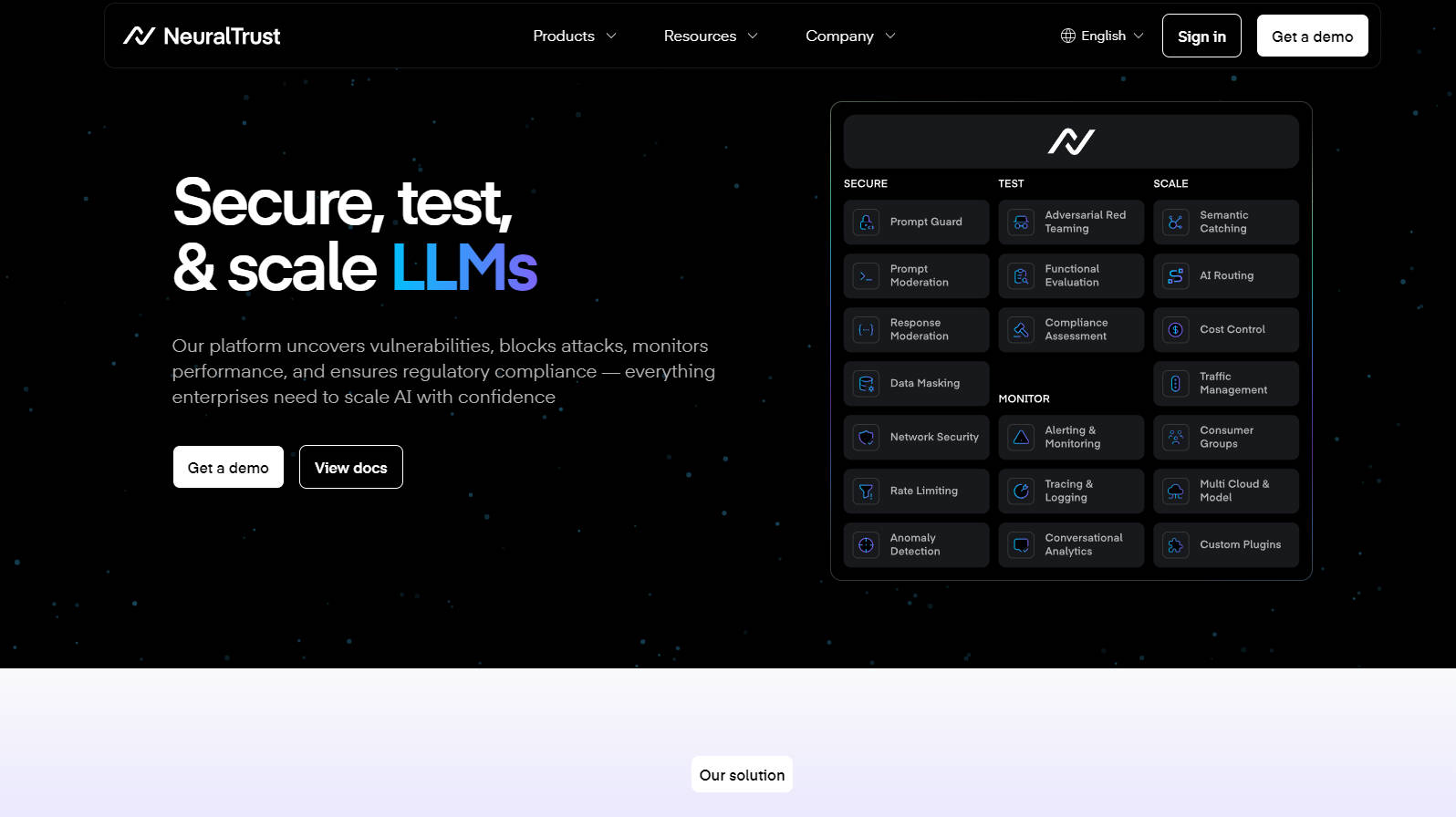

What is NeuralTrust?

NeuralTrust is an enterprise-grade security platform built to address the unique challenges of securing, testing, monitoring, and scaling Large Language Models (LLMs). It provides a unified command center for CISOs and AI teams, offering comprehensive protection, deep observability, and robust compliance capabilities.

Key Features:

🔒 TrustGate (AI Gateway): Implement a zero-trust, open-source AI Gateway to secure all LLM traffic. Enforce organization-wide policies, achieve vendor independence, and benefit from high throughput and low latency (a stated 25k requests/second at <1 ms response latency).

Technical Detail: Utilizes a multi-layered approach, combining network security, semantic defenses, and quota management.

🔬 TrustTest (Automated Red Teaming): Continuously assess your LLMs for vulnerabilities with automated penetration testing. Leverage a constantly updated threat database and domain-specific testing capabilities.

Technical Detail: Employs advanced algorithms for adversarial testing and offers customizable evaluation criteria (accuracy, tone, etc.).

👁️ TrustLens (Real-time Observability): Gain complete traceability and insights into AI behavior with advanced monitoring, alerting, and analytics. Ensure compliance with regulations like the EU AI Act and GDPR.

Technical Detail: Provides customizable conversational analytics, real-time anomaly detection, and detailed trace logging for debugging complex multi-agent systems.

🚀 Scale LLMs:

Technical Detail: Offers semantic caching to reduce costs and latency, granular role-based access control through consumer groups, and traffic management features such as load balancing and A/B testing.
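To make the idea of semantic caching concrete, here is a minimal Python sketch. It is not NeuralTrust's implementation: the names `SemanticCache`, `embed`, `cosine`, and `answer` are hypothetical, and the bag-of-words `embed` function is a toy stand-in for a real embedding model. The point is only to show how a sufficiently similar prompt can be served from the cache instead of triggering a new LLM call.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    """Hypothetical cache keyed by prompt similarity rather than exact text."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold  # minimum similarity that counts as a hit
        self.entries = []           # list of (embedding, response) pairs

    def get(self, prompt):
        """Return the response of the most similar cached prompt, or None."""
        query = embed(prompt)
        best_score, best_response = 0.0, None
        for vector, response in self.entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, prompt, response):
        """Store a prompt's embedding alongside its response."""
        self.entries.append((embed(prompt), response))


def answer(prompt, cache, call_llm):
    """Serve from the cache when possible; otherwise call the model and store the result."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached
    response = call_llm(prompt)
    cache.put(prompt, response)
    return response
```

In this sketch, `call_llm` is any function that takes a prompt and returns a response. A production system would typically replace the linear scan with a vector index and tune the threshold to balance cost savings against the risk of returning an answer to a subtly different question.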

Use Cases:

Mitigating Prompt Injection Attacks: NeuralTrust's TrustGate detects and blocks sophisticated prompt injection attempts, preventing malicious actors from manipulating LLM outputs.

Ensuring GDPR Compliance: TrustLens provides detailed audit trails and data lineage tracking, simplifying compliance with GDPR and other data privacy regulations.

Optimizing LLM Performance: TrustTest identifies performance bottlenecks and areas for improvement, enabling teams to optimize LLM deployments for speed and efficiency.

Conclusion:

NeuralTrust is the essential security platform for organizations deploying LLMs at scale. Its open-source core, high-performance architecture, and comprehensive feature set provide the tools needed to build secure, reliable, and compliant AI applications.

NeuralTrust Alternatives

Braintrust: The end-to-end platform to develop, test & monitor reliable AI applications. Get predictable, high-quality LLM results.

Neural Magic offers high-performance inference serving for open-source LLMs. Reduce costs, enhance security, and scale with ease. Deploy on CPUs/GPUs across various environments.

Companies of all sizes use Confident AI to justify why their LLM deserves to be in production.