What is Ollama Docker?

Ollama Docker is a containerized environment that streamlines Ollama deployments and adds GPU acceleration. With a simple setup, a flexible development environment, and an intuitive web UI, it speeds up processing tasks and integrates easily with your projects.

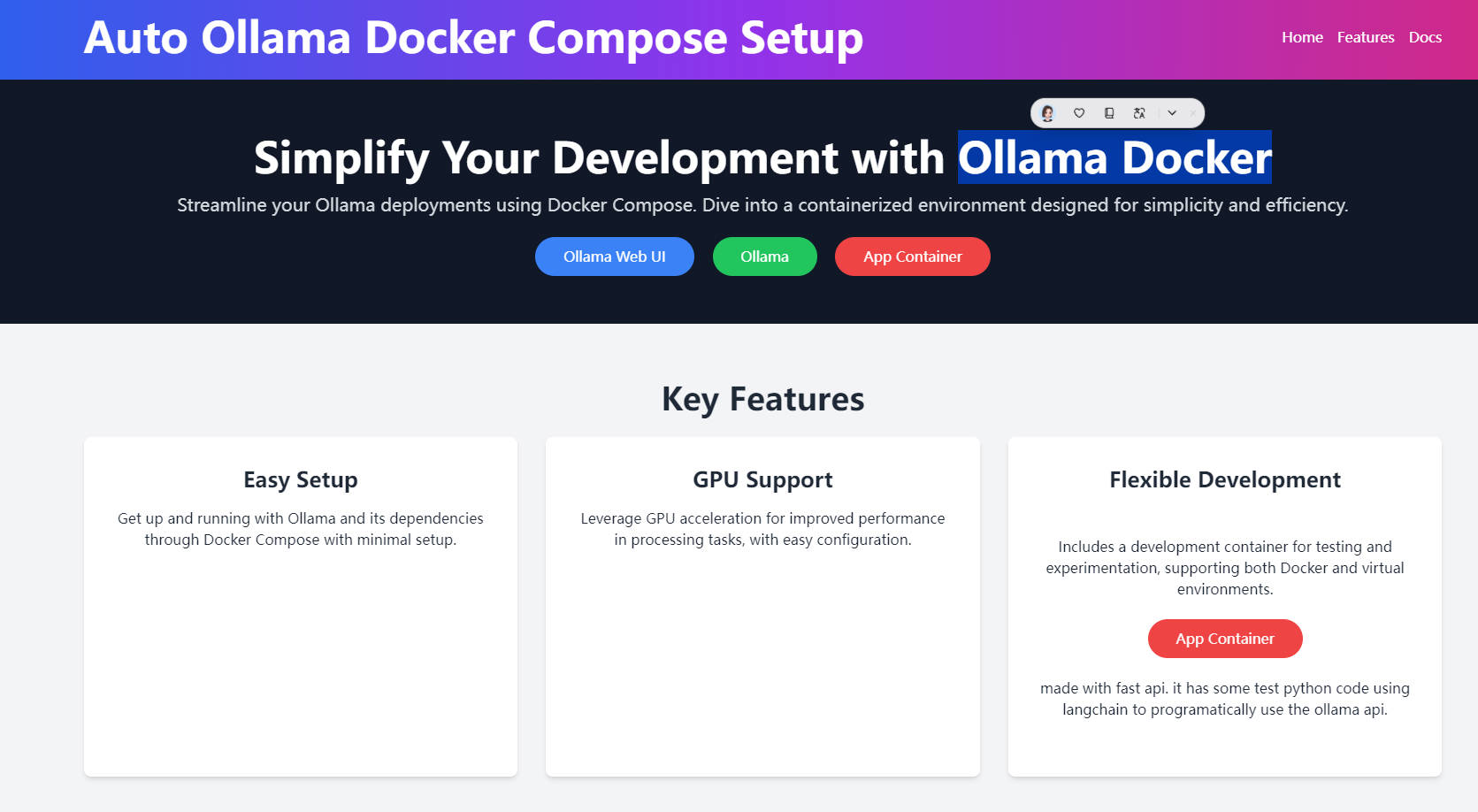

Key Features:

Easy Setup: Docker Compose deploys Ollama and its dependencies together, so your system is up and running with minimal effort.

GPU Support: GPU acceleration, configured through the NVIDIA Container Toolkit, boosts performance on processing tasks.

Flexible Development: A dedicated development container provides an environment for testing and experimentation, whether you prefer working in Docker or in a virtual environment.

App Container Optimized for FastAPI: This FastAPI-based container includes sample Python code that uses LangChain to call the Ollama API programmatically, making quick experimentation and development easier.
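The Easy Setup and GPU Support features come down to a Compose file. The sketch below is a minimal example under stated assumptions, not the project's own configuration: it builds a single-service Compose definition for the official `ollama/ollama` image in Python and writes it out as JSON, which Compose should accept because YAML is a superset of JSON. The helper names `ollama_compose` and `write_compose` and the output filename are hypothetical; the GPU block uses Compose's `deploy.resources.reservations.devices` syntax and assumes the NVIDIA Container Toolkit is installed on the host.

```python
import json
from pathlib import Path


def ollama_compose(gpu: bool = True, host_port: int = 11434) -> dict:
    """Return a minimal Compose definition with a single Ollama service.

    Hypothetical helper: a sketch, not the Ollama Docker project's own file.
    """
    service = {
        "image": "ollama/ollama",
        # Ollama's API listens on 11434 inside the container.
        "ports": [f"{host_port}:11434"],
        # Named volume so downloaded models survive container restarts.
        "volumes": ["ollama:/root/.ollama"],
        "restart": "unless-stopped",
    }
    if gpu:
        # Reserve every NVIDIA GPU on the host; needs the NVIDIA Container Toolkit.
        service["deploy"] = {
            "resources": {
                "reservations": {
                    "devices": [
                        {"driver": "nvidia", "count": "all", "capabilities": ["gpu"]}
                    ]
                }
            }
        }
    return {"services": {"ollama": service}, "volumes": {"ollama": {}}}


def write_compose(path: str = "docker-compose.json", gpu: bool = True) -> Path:
    """Serialize the definition as JSON (which is also valid YAML) and return the path."""
    out = Path(path)
    out.write_text(json.dumps(ollama_compose(gpu=gpu), indent=2) + "\n")
    return out
```

Calling `write_compose()` and then running `docker compose -f docker-compose.json up -d` should start the service; pass `gpu=False` on hosts without an NVIDIA GPU so the device reservation is left out.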

Use Cases:

Rapid AI Application Development: Developers can quickly prototype AI applications using Ollama's pre-trained models, accelerating the development cycle.

Educational Research in AI: Universities can leverage Ollama Docker to provide students with GPU-accelerated environments, enhancing AI learning and research capabilities.

Cloud-Based AI Testing: Companies can use Ollama Docker to deploy AI models in the cloud for testing and evaluation, without the need for local GPU infrastructure.
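Both rapid prototyping and cloud-based testing come down to sending prompts to Ollama's HTTP API; only the host changes. The project's app container does this through LangChain; the sketch below swaps that for a direct call to Ollama's `/api/generate` endpoint using only the Python standard library. It assumes the API is reachable on port 11434 and that a model has already been pulled; the function names and the `llama3` model name are illustrative.

```python
import json
import urllib.request

# Assumption: the Ollama container publishes its API on the default port 11434.
DEFAULT_BASE_URL = "http://localhost:11434"


def build_generate_request(
    model: str, prompt: str, base_url: str = DEFAULT_BASE_URL
) -> urllib.request.Request:
    """Build a POST to Ollama's /api/generate endpoint (hypothetical helper)."""
    # stream=False asks for one JSON object instead of a stream of NDJSON chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_generate_response(body: bytes) -> str:
    """Extract the generated text from a non-streaming /api/generate reply."""
    return json.loads(body)["response"]


def generate(
    model: str, prompt: str, base_url: str = DEFAULT_BASE_URL, timeout: float = 120.0
) -> str:
    """Send one prompt to an Ollama server and return the completion text."""
    req = build_generate_request(model, prompt, base_url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_generate_response(resp.read())
```

`generate("llama3", "Explain containers in one sentence.")` would query a local container, while passing a hypothetical `base_url="http://your-cloud-host:11434"` would target one deployed in the cloud. Keeping request building and response parsing separate from the network call makes both easy to test without a running server.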

Conclusion:

Ollama Docker provides a seamless, GPU-powered, development-friendly environment for efficient AI development. Whether you're a developer looking to speed up AI application development or an educator seeking to enrich an AI curriculum, Ollama Docker opens up new possibilities. Start exploring its capabilities today.

FAQs:

Q: What does Ollama Docker offer for developers interested in AI?

A: Ollama Docker provides a ready-to-use environment for AI development, featuring easy setup, GPU support, and a flexible development container for testing and experimentation, which speeds up the AI development process.

Q: Can I use Ollama Docker for educational purposes?

A: Absolutely. Ollama Docker is well suited to educational settings, offering GPU-accelerated environments that support AI learning and research for students and educators alike.

Q: How does Ollama Docker support cloud-based AI testing?

A: With Ollama Docker, you can deploy AI models in the cloud for testing without local GPU infrastructure, which makes it a good fit for companies that want to evaluate AI models in a scalable, resource-efficient way.

Ollama Docker Alternatives:

Oumi is a fully open-source platform that streamlines the entire lifecycle of foundation models, from data preparation and training to evaluation and deployment. Whether you're developing on a laptop, launching large-scale experiments on a cluster, or deploying models in production, Oumi provides the tools and workflows you need.

Kolosal AI is an open-source platform that enables users to run large language models (LLMs) locally on devices like laptops, desktops, and even Raspberry Pi, prioritizing speed, efficiency, privacy, and eco-friendliness.