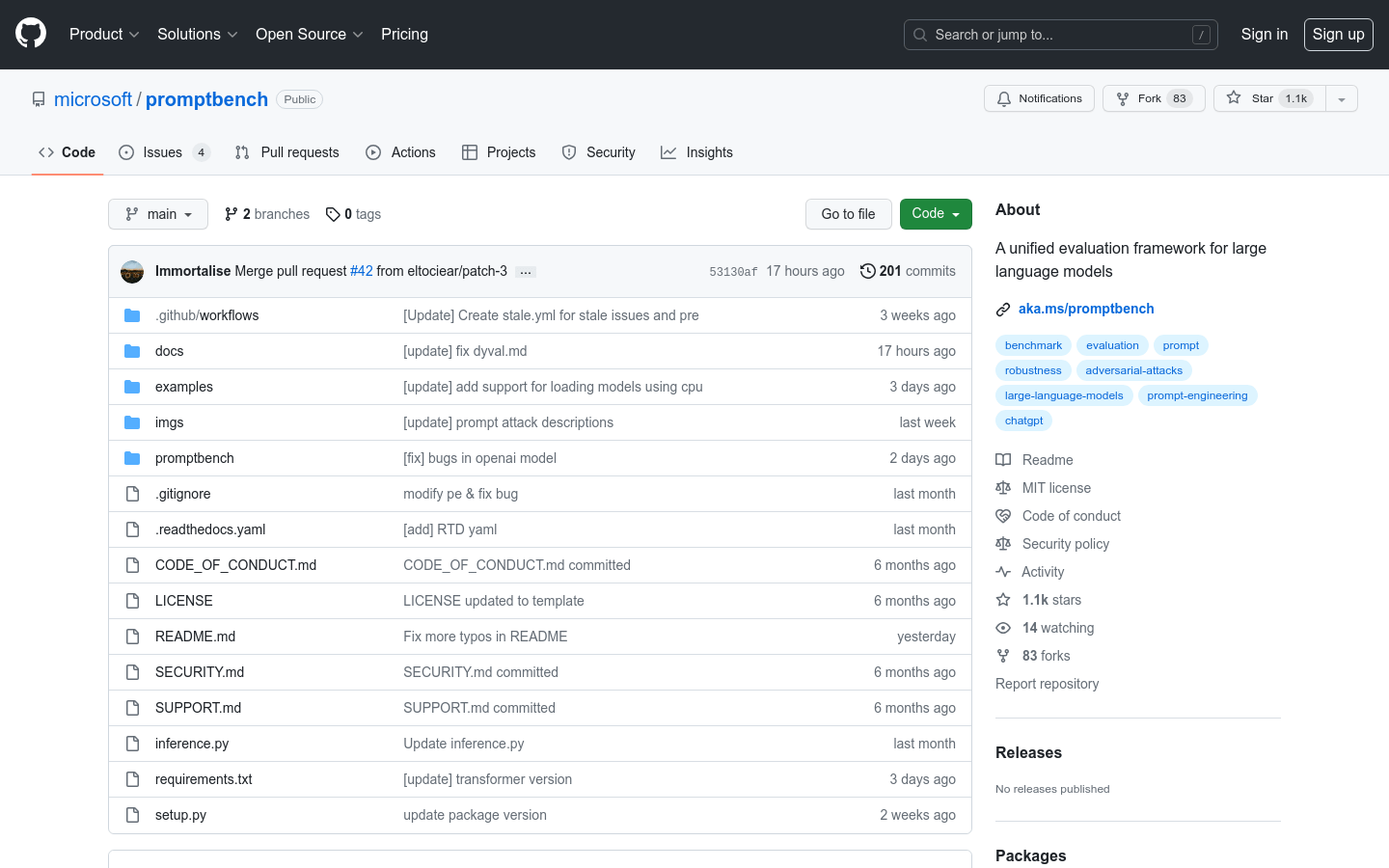

What is PromptBench?

PromptBench is a PyTorch-based Python package that lets researchers evaluate Large Language Models (LLMs) easily. It offers user-friendly APIs for model performance assessment, prompt engineering, adversarial prompt evaluation, and dynamic evaluation. With support for a variety of datasets, models, and prompt engineering methods, PromptBench is a versatile tool for evaluating and analyzing LLMs.
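To make the evaluation workflow concrete, here is a minimal, framework-free sketch of the loop that a tool like this automates: fill a prompt template with each input, query a model, normalize the output, and score it against the gold label. It does not use PromptBench's own API. The names `evaluate_accuracy` and `toy_sentiment_model` and the `{content}` placeholder are hypothetical, and the keyword-matching stub stands in for a real LLM.

```python
from typing import Callable, Sequence

# Any callable that maps a prompt string to a completion stands in for an LLM here.
Model = Callable[[str], str]


def evaluate_accuracy(
    model: Model, template: str, dataset: Sequence[tuple[str, str]]
) -> float:
    """Fill `template` with each input, query `model`, and return the fraction answered correctly."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    correct = 0
    for text, label in dataset:
        raw = model(template.format(content=text))
        # Normalize the raw completion before comparing it with the gold label.
        prediction = raw.strip().lower()
        correct += int(prediction == label)
    return correct / len(dataset)


def toy_sentiment_model(prompt: str) -> str:
    """Keyword-matching stub used in place of a real LLM (illustration only)."""
    return "positive" if "good" in prompt.lower() else "negative"


if __name__ == "__main__":
    data = [
        ("The movie was good.", "positive"),
        ("A dull, boring plot.", "negative"),
        ("Good acting, weak script.", "positive"),
        ("Not good at all.", "negative"),
    ]
    template = "Classify the sentiment as positive or negative: {content}\nAnswer:"
    print(evaluate_accuracy(toy_sentiment_model, template, data))
```

On these four toy examples the stub misreads "Not good at all." as positive, so the script prints 0.75. Swapping the stub for a real model client is the only change needed to score an actual LLM with the same loop.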

Key Features:

1. Quick Model Performance Assessment: PromptBench provides a user-friendly interface for building models, loading datasets, and evaluating model performance efficiently.

2. Prompt Engineering: PromptBench implements several prompt engineering methods, such as Few-shot Chain-of-Thought, EmotionPrompt, and Expert Prompting, enabling researchers to improve model performance.

3. Adversarial Prompt Attacks: PromptBench integrates prompt attacks that let researchers simulate black-box adversarial attacks on models and assess their robustness.
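The prompt engineering methods listed above can be approximated as plain string transformations applied before the model is queried. The sketch below is a simplified illustration, not PromptBench's implementation: the function names, the `Exemplar` type, and the default stimulus sentence are assumptions. In the published Expert Prompting method the expert description is generated automatically, whereas here it is passed in by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Exemplar:
    """One worked demonstration for few-shot chain-of-thought prompting."""

    question: str
    reasoning: str
    answer: str


def few_shot_cot(question: str, exemplars: list[Exemplar]) -> str:
    """Prepend demonstrations that spell out their intermediate reasoning steps."""
    shots = [
        f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}."
        for ex in exemplars
    ]
    # The target question is left open so the model continues in the same step-by-step style.
    return "\n\n".join(shots + [f"Q: {question}\nA:"])


def emotion_prompt(
    prompt: str, stimulus: str = "This is very important to my career."
) -> str:
    """Append an emotional stimulus sentence to the original prompt."""
    return f"{prompt} {stimulus}"


def expert_prompt(prompt: str, expert_identity: str) -> str:
    """Prefix the prompt with a description of an expert persona supplied by the caller."""
    return f"{expert_identity}\n\n{prompt}"


if __name__ == "__main__":
    demo = [
        Exemplar(
            "Tom has 3 apples and buys 2 more. How many apples does he have?",
            "Tom starts with 3 apples and adds 2, so 3 + 2 = 5.",
            "5",
        )
    ]
    print(few_shot_cot("A box holds 4 pens. How many pens are in 3 boxes?", demo))
    print(emotion_prompt("Is this review positive or negative?"))
    print(expert_prompt("Explain overfitting.", "You are an experienced machine learning researcher."))
```

Because each technique only rewrites the prompt, the transformed prompts can be scored by any evaluation loop without changing the model side, which keeps side-by-side comparisons of prompting methods simple.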

Use Cases:

1. Model Evaluation: Researchers can use PromptBench to evaluate LLMs on existing benchmarks like GLUE, SQuAD V2, and CSQA, enabling comprehensive analysis and comparison of model performance.

2. Prompt Engineering Research: PromptBench facilitates the exploration of different prompting techniques, including Chain-of-Thought and EmotionPrompt, helping researchers enhance model capabilities for specific tasks.

3. Robustness Testing: With the integrated prompt attacks, PromptBench enables researchers to assess the robustness of LLMs against adversarial prompts, supporting the development of more secure and reliable models.
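Robustness testing of this kind comes down to two steps: search for a perturbed prompt that lowers the model's score using only its outputs (the black-box setting), then compare the clean and attacked scores. The sketch below uses a simplified greedy typo attack of our own (swapping adjacent characters in the prompt) and reports the relative drop `1 - attacked / clean`. The attack, the `brittle_model` stub, and every function name are illustrative assumptions, not PromptBench's built-in attacks or metrics.

```python
from typing import Callable, Optional, Sequence

# Any callable from prompt text to completion text stands in for a black-box LLM.
Model = Callable[[str], str]
Dataset = Sequence[tuple[str, str]]


def accuracy(model: Model, prompt: str, dataset: Dataset) -> float:
    """Fraction of examples answered correctly when `prompt` precedes each input."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    hits = sum(
        model(f"{prompt} {text}").strip().lower() == label for text, label in dataset
    )
    return hits / len(dataset)


def swap_adjacent(word: str, i: int) -> str:
    """Introduce a typo by swapping the characters at positions i and i + 1."""
    return word[:i] + word[i + 1] + word[i] + word[i + 2:]


def greedy_typo_attack(
    model: Model, prompt: str, dataset: Dataset, max_edits: int = 2
) -> tuple[str, float]:
    """Apply up to `max_edits` typos, each round keeping the one that lowers accuracy most.

    Only model outputs are queried (black-box); gradients and weights are never used.
    """
    words = prompt.split()
    best_acc = accuracy(model, prompt, dataset)
    for _ in range(max_edits):
        best_candidate: Optional[list[str]] = None
        for w_idx, word in enumerate(words):
            for c_idx in range(len(word) - 1):
                candidate = words.copy()
                candidate[w_idx] = swap_adjacent(word, c_idx)
                acc = accuracy(model, " ".join(candidate), dataset)
                if acc < best_acc:  # strict '<' means ties keep the earlier candidate
                    best_acc, best_candidate = acc, candidate
        if best_candidate is None:
            break  # no single typo lowers accuracy any further
        words = best_candidate
    return " ".join(words), best_acc


def performance_drop_rate(clean_acc: float, attacked_acc: float) -> float:
    """Relative performance drop: 1 - attacked / clean."""
    if clean_acc == 0:
        raise ValueError("clean accuracy must be non-zero")
    return 1 - attacked_acc / clean_acc


def brittle_model(prompt: str) -> str:
    """Stub LLM that follows the instruction only if it sees the exact word 'sentiment'."""
    if "sentiment" not in prompt:
        return "negative"
    return "positive" if "good" in prompt.lower() else "negative"


if __name__ == "__main__":
    data = [("good film", "positive"), ("bad film", "negative"), ("really good", "positive")]
    prompt = "Classify the sentiment:"
    clean = accuracy(brittle_model, prompt, data)
    adversarial, attacked = greedy_typo_attack(brittle_model, prompt, data)
    print(adversarial, clean, attacked, performance_drop_rate(clean, attacked))
```

Here a single swapped letter ("esntiment") pushes the stub's accuracy from 1.0 to 1/3, a relative drop of about 0.67. Each greedy round queries the model once per candidate typo on every example, so the query cost grows quickly with prompt length and dataset size; a practical attack would likely need to restrict or rank candidate edits.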

Conclusion:

PromptBench offers a user-friendly and comprehensive solution for evaluating Large Language Models. With its easy-to-use interface, support for various datasets and models, and prompt engineering capabilities, researchers can assess model performance, explore different prompting techniques, and evaluate model robustness. By providing a versatile evaluation framework, PromptBench contributes to the advancement of LLM research and development.

PromptBench Alternatives:

Launch AI products faster with no-code LLM evaluations. Compare 180+ models, craft prompts, and test confidently.

Improve language models with Prompt Refine - a user-friendly tool for prompt experiments. Run, track, and compare experiments easily.

Streamline LLM prompt engineering. PromptLayer offers management, evaluation, & observability in one platform. Build better AI, faster.

PromptTools is an open-source platform that helps developers build, monitor, and improve LLM applications through experimentation, evaluation, and feedback.