What is Red Hat Enterprise Linux AI?

Red Hat Enterprise Linux AI is a platform for developing, testing, and deploying large language models (LLMs) from the Granite family for enterprise applications. It is built on Red Hat Enterprise Linux, a widely used enterprise Linux platform, and is aimed at businesses that want to put generative AI to work.

Key Features:

Open Source LLMs:

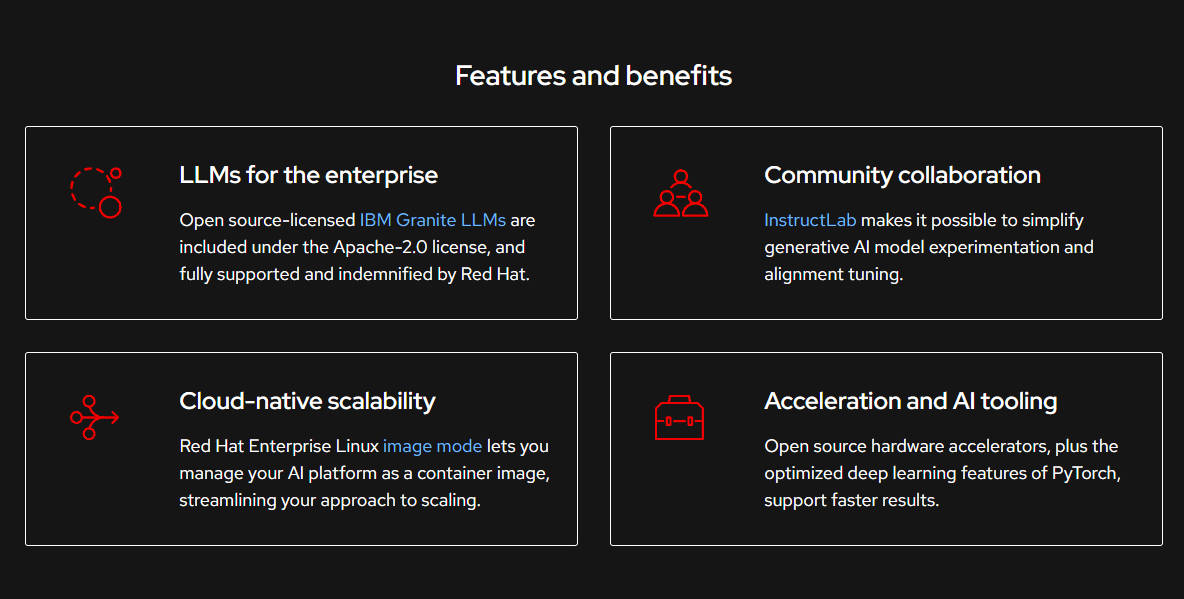

Granite Family: Red Hat Enterprise Linux AI includes the Granite family of open source LLMs, distributed under the Apache-2.0 license. The open licensing is paired with transparency about the training datasets, which fosters trust and innovation in AI development.

IBM Granite LLMs: These LLMs are fully supported and indemnified by Red Hat, providing enterprises with the confidence to explore and utilize AI technologies.

Community Collaboration with InstructLab:

Model Alignment Tools: InstructLab offers a community-driven approach to enhancing LLM capabilities that simplifies model experimentation and alignment tuning. This lets a wider range of users contribute to and benefit from AI model development.

Cloud-Native Scalability:

Containerized Approach: The platform allows AI workflows to be managed as container images, streamlining the scaling process and ensuring portability across hybrid cloud environments.
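To make the container-image idea concrete, here is a minimal sketch of how a workflow packaged as an image might be launched in the same way on any host with a container runtime. It is not taken from Red Hat documentation: the image reference, the port numbers, and the helper name `build_run_command` are all hypothetical, and `podman` is assumed to be the runtime.

```python
from __future__ import annotations

import shlex

# Hypothetical image reference, used for illustration only.
IMAGE = "registry.example.com/ai/granite-serving:latest"


def build_run_command(image: str, host_port: int = 8000, container_port: int = 8000) -> list[str]:
    """Build a `podman run` command that starts the image and publishes one port."""
    return [
        "podman", "run",
        "--rm",                                  # remove the container when it exits
        "-p", f"{host_port}:{container_port}",   # publish container port on the host
        image,
    ]


if __name__ == "__main__":
    # Print the command instead of running it, so the sketch has no side effects.
    print(shlex.join(build_run_command(IMAGE)))
```

Because the whole workflow travels as one image reference, the same command can be reused across on-premises and cloud hosts; only the image tag or port mapping would need to change.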

Acceleration and AI Tooling:

Hardware Optimization: Red Hat Enterprise Linux AI includes support for hardware accelerators and the optimized deep learning features of PyTorch, enabling faster and more efficient AI processing.
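As a rough illustration of what accelerator-aware PyTorch code looks like, the sketch below picks a compute device at runtime. It assumes nothing about how Red Hat Enterprise Linux AI configures PyTorch; the helper name `pick_device` is hypothetical, and the code falls back to the CPU when PyTorch is not installed or no accelerator is visible.

```python
def pick_device() -> str:
    """Return "cuda" when PyTorch reports a usable GPU, otherwise "cpu"."""
    try:
        import torch
    except ImportError:
        # PyTorch is not installed here, so only the CPU path is available.
        return "cpu"

    # torch.cuda.is_available() is True when PyTorch can use a GPU device.
    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print(f"Selected device: {pick_device()}")
```

A model or tensor would then be moved to the selected device with PyTorch's `.to(device)` call, so the same script runs on accelerated and non-accelerated hosts.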

Use Cases:

Enterprise AI Development: Businesses can use Red Hat Enterprise Linux AI to develop and deploy AI models, driving innovation and efficiency across their applications.

Hybrid Cloud Scalability: The platform’s compatibility with Red Hat OpenShift® AI and IBM watsonx.ai allows for seamless scaling and advanced capabilities in enterprise AI development, data management, and model governance.

Why Red Hat Enterprise Linux AI?

Trusted Foundation: Red Hat Enterprise Linux is a trusted and widely certified platform, ensuring compatibility with a vast array of clouds, hardware, and software vendors.

Open Hybrid Cloud Strategy: Red Hat’s commitment to open source and hybrid cloud solutions gives businesses the flexibility to run AI applications anywhere, breaking down cost and resource barriers.

Conclusion:

Red Hat Enterprise Linux AI aims to make a significant mark on the enterprise AI landscape. By combining open source LLMs, community collaboration, cloud-native scalability, and advanced AI tooling, the platform lets businesses innovate, experiment, and scale their AI initiatives with trust and transparency.