What is TokenDagger?

Tokenization is a fundamental step in modern natural language processing (NLP). As datasets and processing demands grow, standard tools like OpenAI's TikToken can become a significant performance bottleneck. TokenDagger is a high-performance, drop-in replacement for TikToken, engineered to remove that bottleneck and deliver higher speed and throughput for large-scale text processing.

Key Features

TokenDagger is built to accelerate your NLP workflows without forcing you to refactor your code.

🚀 Accelerated Throughput and Speed: Process text at scales that were previously impractical. TokenDagger delivers up to 2x the throughput of TikToken and, in benchmarks, runs 4.02x faster on code tokenization. For large jobs, this translates directly into saved time, lower compute costs, and faster project turnaround.

⚙️ Optimized Core Engine: At its heart, TokenDagger uses an optimized PCRE2 regex engine for efficient token pattern matching. It also implements a simplified Byte Pair Encoding (BPE) algorithm, which reduces the performance overhead often associated with large, complex vocabularies, especially those with many special tokens.
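To make the two stages concrete, here is a generic, tiktoken-style BPE encoder: a regex first splits text into chunks, then each chunk's UTF-8 bytes are merged pairwise, always applying the lowest-ranked merge available. This is a minimal sketch, not TokenDagger's implementation: it uses Python's built-in re module rather than PCRE2, and both the split pattern and the TOY_RANKS vocabulary are hypothetical stand-ins for a real encoding's pattern and merge table.

```python
import re

# Hypothetical, simplified split pattern. Real tiktoken encodings use richer
# patterns with Unicode properties such as \p{L}, which Python's built-in `re`
# module does not support.
PRETOKENIZE = re.compile(r" ?\w+| ?[^\w\s]+|\s+")

# Hypothetical toy vocabulary: every single byte maps to its own value (0-255),
# followed by three merged byte sequences with ranks 256, 257, and 258.
TOY_RANKS: dict[bytes, int] = {bytes([b]): b for b in range(256)}
for merged in (b"ab", b"abc", b" ab"):
    TOY_RANKS[merged] = len(TOY_RANKS)


def bpe_encode_piece(piece: bytes, ranks: dict[bytes, int]) -> list[int]:
    """Encode one chunk by repeatedly merging the lowest-ranked adjacent pair."""
    # Start from single bytes; assumes every single byte has a rank.
    parts = [piece[i:i + 1] for i in range(len(piece))]
    while len(parts) > 1:
        best_rank, best_idx = None, None
        for i in range(len(parts) - 1):
            rank = ranks.get(parts[i] + parts[i + 1])
            # Strict "<" keeps the leftmost pair when ranks tie.
            if rank is not None and (best_rank is None or rank < best_rank):
                best_rank, best_idx = rank, i
        if best_idx is None:
            break  # no adjacent pair is in the vocabulary
        parts[best_idx:best_idx + 2] = [parts[best_idx] + parts[best_idx + 1]]
    return [ranks[p] for p in parts]


def encode(text: str, ranks: dict[bytes, int]) -> list[int]:
    """Split text with the regex, then BPE-encode each chunk's UTF-8 bytes."""
    tokens: list[int] = []
    for match in PRETOKENIZE.finditer(text):
        tokens.extend(bpe_encode_piece(match.group().encode("utf-8"), ranks))
    return tokens
```

For example, encode("abc abc", TOY_RANKS) returns [257, 32, 257]: the first chunk merges fully into b"abc", while " abc" stops at [b" ", b"abc"] because b"abc" (rank 257) outranks b" ab" (rank 258) and b" abc" is not in the toy vocabulary.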

🔌 Seamless Drop-In Integration: Migrating takes one line. TokenDagger is designed to be fully API-compatible with TikToken, so you can switch by replacing "import tiktoken" with "import tokendagger as tiktoken"; your existing code then runs on TokenDagger's faster engine.
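If the same code must also run on machines where TokenDagger is not installed, one option is to choose the backend at import time and fall back to TikToken. This is a minimal sketch using only the standard library; load_first_available is a hypothetical helper, and the approach relies on the page's claim that TokenDagger exposes the same API as TikToken.

```python
import importlib
import importlib.util
from types import ModuleType
from typing import Optional, Sequence


def load_first_available(names: Sequence[str]) -> Optional[ModuleType]:
    """Import and return the first installed module in `names`, or None if none are."""
    for name in names:
        # find_spec returns None for a top-level module that is not installed.
        if importlib.util.find_spec(name) is not None:
            return importlib.import_module(name)
    return None


# Prefer TokenDagger and fall back to TikToken. The rest of the code keeps using
# the name `tiktoken`, assuming both modules expose the same functions.
# `tiktoken` is None here if neither package is installed.
tiktoken = load_first_available(["tokendagger", "tiktoken"])
```

A try/except ImportError around the plain import swap achieves the same effect; the helper form simply makes the preference order explicit.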

Unique Advantages

While TikToken provides a functional baseline, TokenDagger is built for users who demand superior performance and efficiency.

Unmatched Speed for Code: Beyond general text, TokenDagger is optimized for the complex patterns found in source code, where it achieved a benchmarked 4.02x speed improvement over TikToken.

Double the Processing Power: TokenDagger delivers up to 2x TikToken's overall throughput. At that rate, you can process the same volume of data in half the time, which makes it well suited to high-volume pipelines.
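The "half the time" claim is simple arithmetic: for a fixed workload, wall-clock time is inversely proportional to throughput. The sketch below makes that explicit and adds a small standard-library timing harness for checking the speedup on your own data, since results can vary with corpus and hardware. Both helpers, projected_runtime and measure_throughput, are hypothetical and not part of TokenDagger or TikToken.

```python
import time
from typing import Callable, Sequence


def projected_runtime(baseline: float, speedup: float) -> float:
    """For a fixed workload, runtime scales with 1 / throughput."""
    return baseline / speedup


def measure_throughput(
    encode: Callable[[str], Sequence[int]],
    texts: Sequence[str],
    repeats: int = 3,
) -> float:
    """Return the best observed throughput, in UTF-8 bytes per second."""
    total_bytes = sum(len(t.encode("utf-8")) for t in texts)
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        for text in texts:
            encode(text)
        # Keeping the fastest run reduces noise from other processes.
        best = min(best, time.perf_counter() - start)
    # Guard against a zero timing on trivially small inputs.
    return total_bytes / best if best > 0 else float("inf")
```

At 2x, a 10-hour preprocessing job drops to 5 hours; at 4.02x it drops to about 2.5 hours. To compare backends, call measure_throughput once with each library's encode method on the same texts (for example, the encode method of an Encoding returned by tiktoken.get_encoding("cl100k_base")) and divide the two rates.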

Frictionless, Zero-Refactor Upgrade: Instead of forcing you to rework your existing NLP pipelines, TokenDagger offers a true drop-in replacement. The transition is seamless, requiring zero changes to your tiktoken.Encoding calls or other logic.

Use Cases

Large-Scale Data Preprocessing: When preparing massive text corpora for model training, TokenDagger drastically cuts down your data prep time, letting you iterate on your models faster.
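Corpus preparation usually means encoding many documents concurrently. Here is a minimal standard-library sketch; iter_batches and encode_corpus are hypothetical helpers, and encode would be an Encoding's encode method. Streaming fixed-size batches keeps memory bounded on very large corpora. Threads only help if the encoder releases Python's global interpreter lock while it runs; TikToken's own encode_batch method relies on a thread pool in the same way, but whether TokenDagger's encoder releases the lock is an assumption here.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import islice
from typing import Callable, Iterable, Iterator, List, Sequence


def iter_batches(docs: Iterable[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive lists of up to `size` documents from any iterable."""
    it = iter(docs)
    while batch := list(islice(it, size)):
        yield batch


def encode_corpus(
    encode: Callable[[str], Sequence[int]],
    docs: Iterable[str],
    batch_size: int = 1000,
    num_threads: int = 8,
) -> Iterator[Sequence[int]]:
    """Encode documents in their original order, one bounded batch at a time."""
    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        for batch in iter_batches(docs, batch_size):
            # pool.map returns results in input order, not completion order.
            yield from pool.map(encode, batch)
```

For example, sum(len(ids) for ids in encode_corpus(enc.encode, docs)) counts every token in docs while holding only one batch of token lists in memory at a time.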

Developer Tooling & Code Analysis: If you're building a tool that parses and analyzes large code repositories, TokenDagger's speed ensures your application remains responsive and efficient, even with millions of lines of code.

High-Volume Information Retrieval: For search and retrieval systems that index vast amounts of text, TokenDagger accelerates the indexing process, ensuring your data is ingested and made searchable more quickly.

Conclusion

If your NLP workflows are hitting a performance wall with TikToken, TokenDagger is the clear, logical upgrade. It offers a significant boost in speed and throughput without demanding any changes to your established code. It’s the simplest way to unlock greater efficiency for your most demanding text processing tasks.

Install it today and experience the performance boost!

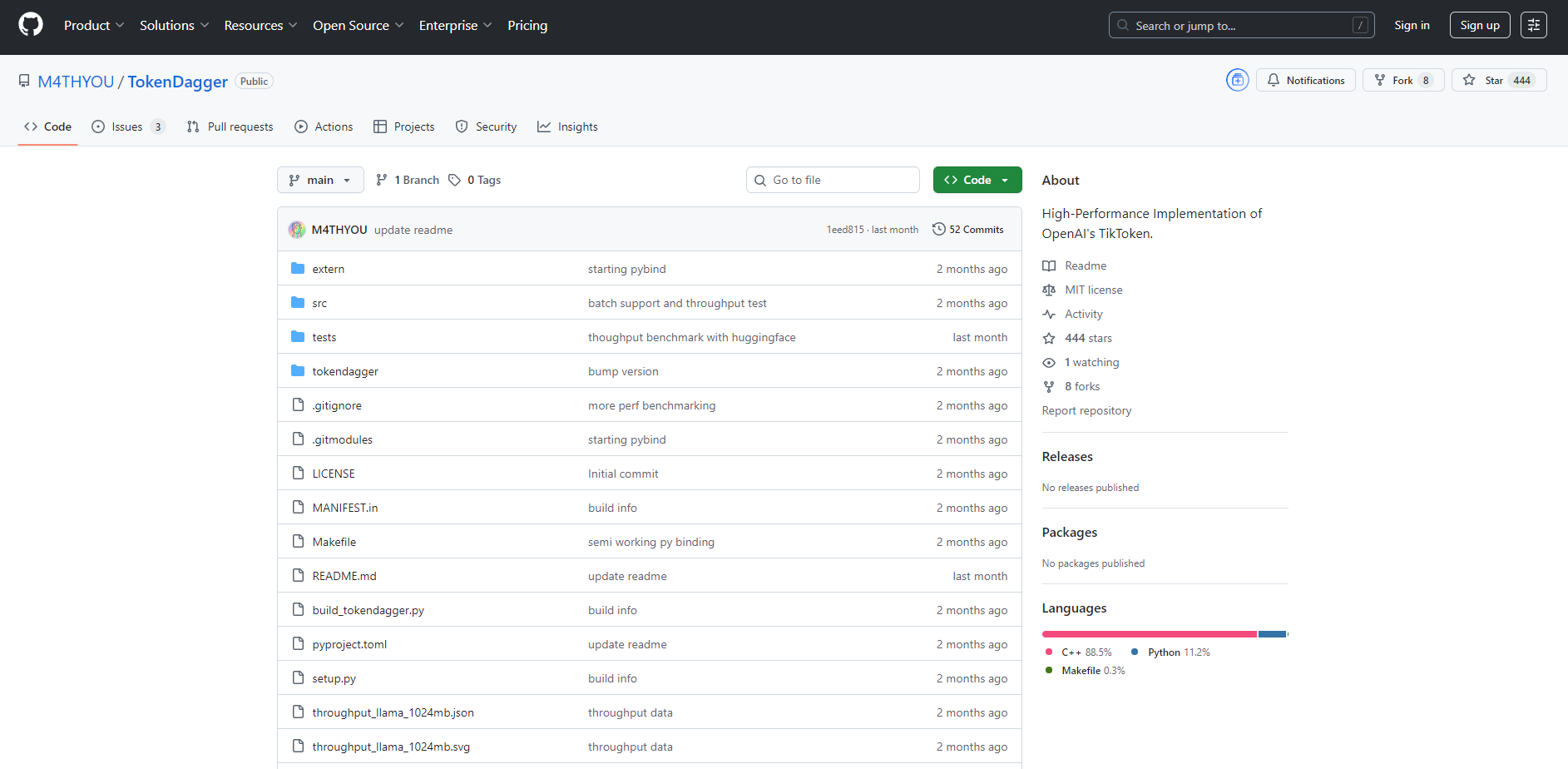

TokenDagger Alternatives

Tiktokenizer simplifies AI dev with real-time token tracking, in-app visualizer, seamless API integration & more. Optimize costs & performance.

Token Counter is an AI tool designed to count the number of tokens in a given text. Tokens are the units of text that language models process, such as words, parts of words, or punctuation marks.

Online tool to count tokens from OpenAI models and prompts. Make sure your prompt fits within the token limits of the model you are using.