What is Tokenomy?

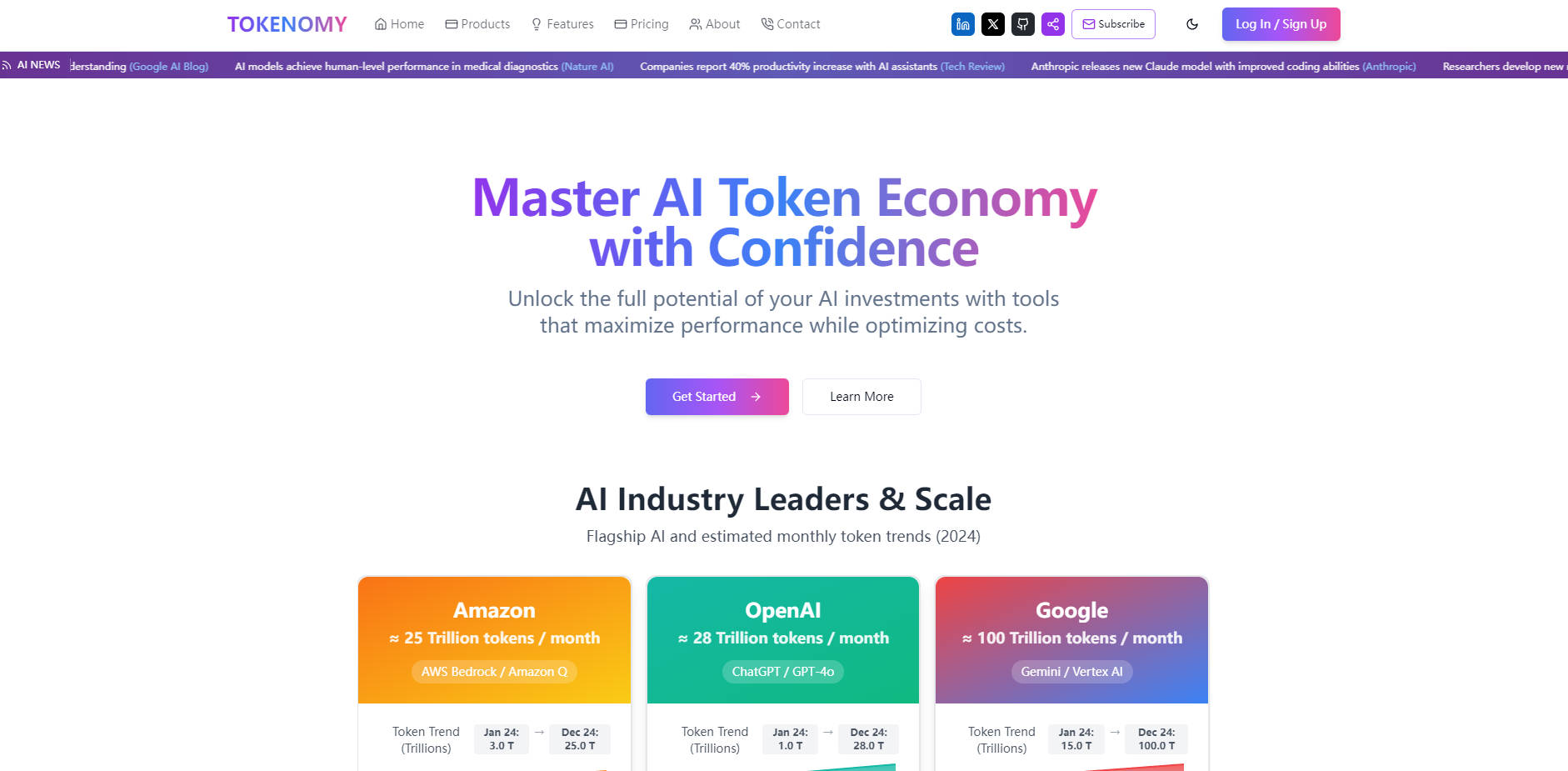

Large Language Models (LLMs) from OpenAI, Anthropic, Google, and other providers offer considerable potential, but managing their cost and performance can be complex. Understanding how your prompts translate into tokens, and how token counts affect both your budget and the model's response, is crucial for efficient AI implementation.

Tokenomy provides the tools you need to gain clarity and control over your AI token usage. It's designed to help you analyze, optimize, and manage your interactions with LLM APIs, ultimately leading to smarter decisions and potentially significant cost savings.

Key Features for Smart Token Management

Precise Token Calculation: 🧮 Get accurate token counts for your content across various major AI models. This fundamental step helps you understand the true "size" of your input and output, essential for optimizing prompt length and complexity.

Accurate Cost Estimation: 💰 Immediately see the estimated cost of your API calls based on current pricing from major providers. This allows you to predict expenses, compare model costs for the same task, and make informed budget decisions before running expensive jobs.

Model Comparison Tools: ⚖️ Easily compare different AI models side-by-side. Analyze how the same content tokenizes and performs across models like GPT-4o, Claude 3, Gemini, and others, helping you find the best balance between performance and cost for your specific needs.

Tokenization Analysis: 👀 Visualize how different models break down your text into tokens. Understanding these differences is key to crafting efficient prompts that minimize token count while maximizing the model's understanding and output quality.

Speed Simulation: ⏱️ Simulate model processing speeds for your content. Get insight into potential latency and optimize your requests for faster response times, crucial for real-time applications or user-facing features.

Interactive Visualizations: 📊 Explore your token usage patterns, costs, and analysis results through clear charts and graphs. Visual data makes it easier to identify trends, pinpoint areas for optimization, and communicate insights to your team.
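To make the arithmetic behind token counting, cost estimation, and speed simulation concrete, here is a minimal Python sketch. It is not Tokenomy's implementation. The model names, prices, and throughput figures are hypothetical placeholders, and the characters-per-token ratio is only a rough rule of thumb for English text, not a real tokenizer. For accurate counts, use the tokenizer published by each provider.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    """Hypothetical per-model figures; replace with real provider data."""
    name: str
    input_usd_per_million: float     # assumed price per 1M input tokens
    output_usd_per_million: float    # assumed price per 1M output tokens
    output_tokens_per_second: float  # assumed generation throughput
    chars_per_token: float = 4.0     # rough heuristic, not a tokenizer


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Approximate a token count from character length (rounded up)."""
    if not text:
        return 0
    return math.ceil(len(text) / chars_per_token)


def estimate_cost_usd(profile: ModelProfile, input_tokens: int,
                      output_tokens: int) -> float:
    """Cost = tokens * price per million tokens / 1,000,000."""
    total = (input_tokens * profile.input_usd_per_million
             + output_tokens * profile.output_usd_per_million)
    return total / 1_000_000


def estimate_generation_seconds(profile: ModelProfile,
                                output_tokens: int) -> float:
    """Time to generate the output, ignoring network and queueing delay."""
    return output_tokens / profile.output_tokens_per_second


# Placeholder models with made-up numbers, for illustration only.
MODELS = [
    ModelProfile("model-a", 5.00, 15.00, 80.0),
    ModelProfile("model-b", 0.50, 1.50, 150.0),
]


def compare(prompt: str, expected_output_tokens: int) -> list[dict]:
    """Return one row per model, cheapest first."""
    rows = []
    for profile in MODELS:
        input_tokens = estimate_tokens(prompt, profile.chars_per_token)
        rows.append({
            "model": profile.name,
            "input_tokens": input_tokens,
            "cost_usd": estimate_cost_usd(
                profile, input_tokens, expected_output_tokens),
            "seconds": estimate_generation_seconds(
                profile, expected_output_tokens),
        })
    return sorted(rows, key=lambda row: row["cost_usd"])


if __name__ == "__main__":
    for row in compare("Summarize the attached report. " * 20, 300):
        print(row)
```

Because different models tokenize the same text differently, a real comparison would swap `estimate_tokens` for each provider's own tokenizer and keep the rest of the calculation unchanged.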

Practical Use Cases

Developing a New AI Feature: As a developer, planning a new application that uses an LLM API requires understanding potential costs and performance. Use Tokenomy to estimate the cost per user interaction, compare different models for speed and efficiency, and ensure your prompt structure is token-optimized before deploying.

Optimizing Content Generation Workflows: A content team using LLMs for drafting or summarizing can use Tokenomy to analyze prompt effectiveness. By understanding how different phrasing or instructions impact token count and cost, they can refine their prompts to get better results more economically.

Analyzing and Reducing LLM Expenses: For businesses with significant LLM API usage, Tokenomy provides a way to analyze overall spend patterns. Identify which types of requests or which models are consuming the most tokens and budget, then use the optimization tools to implement cost-saving strategies across your operations.
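The spend analysis described in the last use case amounts to grouping usage records and totaling their cost. The Python sketch below illustrates the idea under stated assumptions: the `UsageRecord` fields, the model names, and the prices in `PRICES` are all hypothetical and do not reflect Tokenomy's data format or any provider's actual pricing.

```python
from collections import defaultdict
from typing import NamedTuple


class UsageRecord(NamedTuple):
    """One logged API call (hypothetical schema)."""
    model: str
    request_type: str
    input_tokens: int
    output_tokens: int


# Assumed (input, output) prices in USD per 1M tokens; placeholders only.
PRICES = {
    "model-a": (5.00, 15.00),
    "model-b": (0.50, 1.50),
}


def record_cost_usd(record: UsageRecord) -> float:
    """Cost of a single call at the assumed prices."""
    input_price, output_price = PRICES[record.model]
    total = (record.input_tokens * input_price
             + record.output_tokens * output_price)
    return total / 1_000_000


def spend_by(records: list[UsageRecord], field: str) -> list[tuple[str, float]]:
    """Total cost grouped by a record field, largest spender first."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        totals[getattr(record, field)] += record_cost_usd(record)
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    log = [
        UsageRecord("model-a", "summarize", 12_000, 800),
        UsageRecord("model-b", "chat", 3_000, 1_200),
        UsageRecord("model-a", "chat", 2_500, 900),
    ]
    print(spend_by(log, "model"))
    print(spend_by(log, "request_type"))
```

Grouping by `model` shows which model dominates the bill, while grouping by `request_type` points to the workflows worth optimizing first.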

Conclusion

Tokenomy provides essential visibility and control in the complex world of AI tokens. By offering precise calculation, detailed analysis, and clear visualization, it helps you move beyond guesswork to confidently manage your LLM costs, optimize performance, and build more efficient AI applications. Gain a deeper understanding of your AI usage and make data-driven decisions to maximize your investment.


Tokenomy Alternatives


Online tool to count tokens from OpenAI models and prompts. Make sure your prompt fits within the token limits of the model you are using.


Calculate and compare the cost of using OpenAI, Azure, Anthropic Claude, Llama 3, Google Gemini, Mistral, and Cohere LLM APIs for your AI project with our simple and powerful free calculator. Latest numbers as of May 2024.


Token Counter is an AI tool designed to count the number of tokens in a given text. Tokens are the units of text, such as words, word fragments, or punctuation marks, that language models process.


Tiktokenizer simplifies AI dev with real-time token tracking, in-app visualizer, seamless API integration & more. Optimize costs & performance.
