What is Yi-VL-34B?

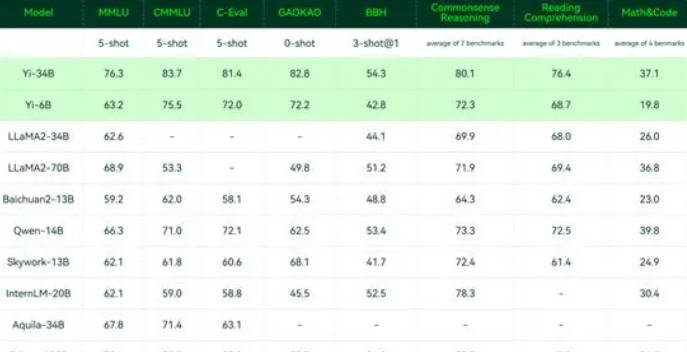

Yi-VL is an open-source multimodal language model from 01.AI (sometimes rendered literally as "Zero-One Things"). It builds on the Yi language model and comes in two versions, Yi-VL-34B and Yi-VL-6B, both of which perform strongly on the MMMU benchmark. Its architecture pairs a Vision Transformer (ViT) image encoder with a projection module that aligns image features with the text feature space, then passes the result to Yi's language model to generate a response.

Key Features:

🎨 Image Understanding: Yi-VL reads visual information through its ViT encoder, extracting both fine details and high-level concepts from an image.

🤝 Multimodal Fusion: The projection module aligns image features with text features so the language model can work with both together.

📚 Language Generation: Yi-VL uses Yi's language capabilities to produce coherent, informative text responses about what it sees.
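The three features above describe a pipeline: image encoder, then projection, then language model. The toy sketch below shows only the data flow, under stated assumptions: the dimensions are made up, random numbers stand in for real ViT outputs and text embeddings, and the projector is assumed to be a small two-layer MLP (the description above only says "projection module"). The function names are hypothetical and are not part of any Yi-VL API.

```python
import random


def init_matrix(rows, cols, rng):
    """Random matrix as a list of rows (stand-in for learned weights or features)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def linear(x, w, b):
    """Apply y = x @ w + b, where x is a list of row vectors and w is (in_dim, out_dim)."""
    return [
        [sum(xi * wi for xi, wi in zip(row, col)) + bj for col, bj in zip(zip(*w), b)]
        for row in x
    ]


def relu(x):
    """Element-wise ReLU (assumed nonlinearity, chosen for simplicity)."""
    return [[max(0.0, v) for v in row] for row in x]


def project_image_features(patch_feats, w1, b1, w2, b2):
    """Map ViT patch features into the language model's embedding size."""
    return linear(relu(linear(patch_feats, w1, b1)), w2, b2)


def build_llm_input(image_tokens, text_embeds):
    """Place projected image tokens in front of the text token embeddings."""
    return image_tokens + text_embeds


rng = random.Random(0)
VIT_DIM, HIDDEN_DIM, LLM_DIM = 8, 16, 12  # toy sizes, not the real model's
NUM_PATCHES, NUM_TEXT_TOKENS = 4, 3

patch_feats = init_matrix(NUM_PATCHES, VIT_DIM, rng)      # stand-in for ViT output
text_embeds = init_matrix(NUM_TEXT_TOKENS, LLM_DIM, rng)  # stand-in for text embeddings
w1, b1 = init_matrix(VIT_DIM, HIDDEN_DIM, rng), [0.0] * HIDDEN_DIM
w2, b2 = init_matrix(HIDDEN_DIM, LLM_DIM, rng), [0.0] * LLM_DIM

image_tokens = project_image_features(patch_feats, w1, b1, w2, b2)
llm_input = build_llm_input(image_tokens, text_embeds)
print(len(llm_input), len(llm_input[0]))  # prints: 7 12
```

After projection, every image patch has the same width as a text token embedding, so the language model can consume the combined sequence as if it were one longer prompt.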

Use Cases:

📖 Education: Yi-VL can interpret diagrams together with written instructions, which makes it useful for interactive learning.

🩺 Healthcare: Yi-VL can help healthcare professionals review medical images alongside patient records when making diagnosis and treatment decisions.

🎮 Entertainment: Yi-VL's image understanding and text generation capabilities open up possibilities for more immersive gaming experiences.

Conclusion:

Yi-VL is a capable multimodal language model for understanding images and generating text about them. It has potential applications across many domains, and because it is open source, others can build on it to move multimodal AI forward.

Yi-VL-34B Alternatives

Qwen2-VL is the multimodal large language model series developed by Qwen team, Alibaba Cloud.

C4AI Aya Vision 8B: Open-source multilingual vision AI for image understanding. OCR, captioning, reasoning in 23 languages.

GLM-4-9B is the open-source version of the latest generation of pre-trained models in the GLM-4 series launched by Zhipu AI.

Transform businesses with YiVal, an enterprise-grade generative AI platform. Develop high-performing apps with GPT-4 at a lower cost. Explore endless possibilities now!

GLM-4.5V: Empower your AI with advanced vision. Generate web code from screenshots, automate GUIs, & analyze documents & video with deep reasoning.