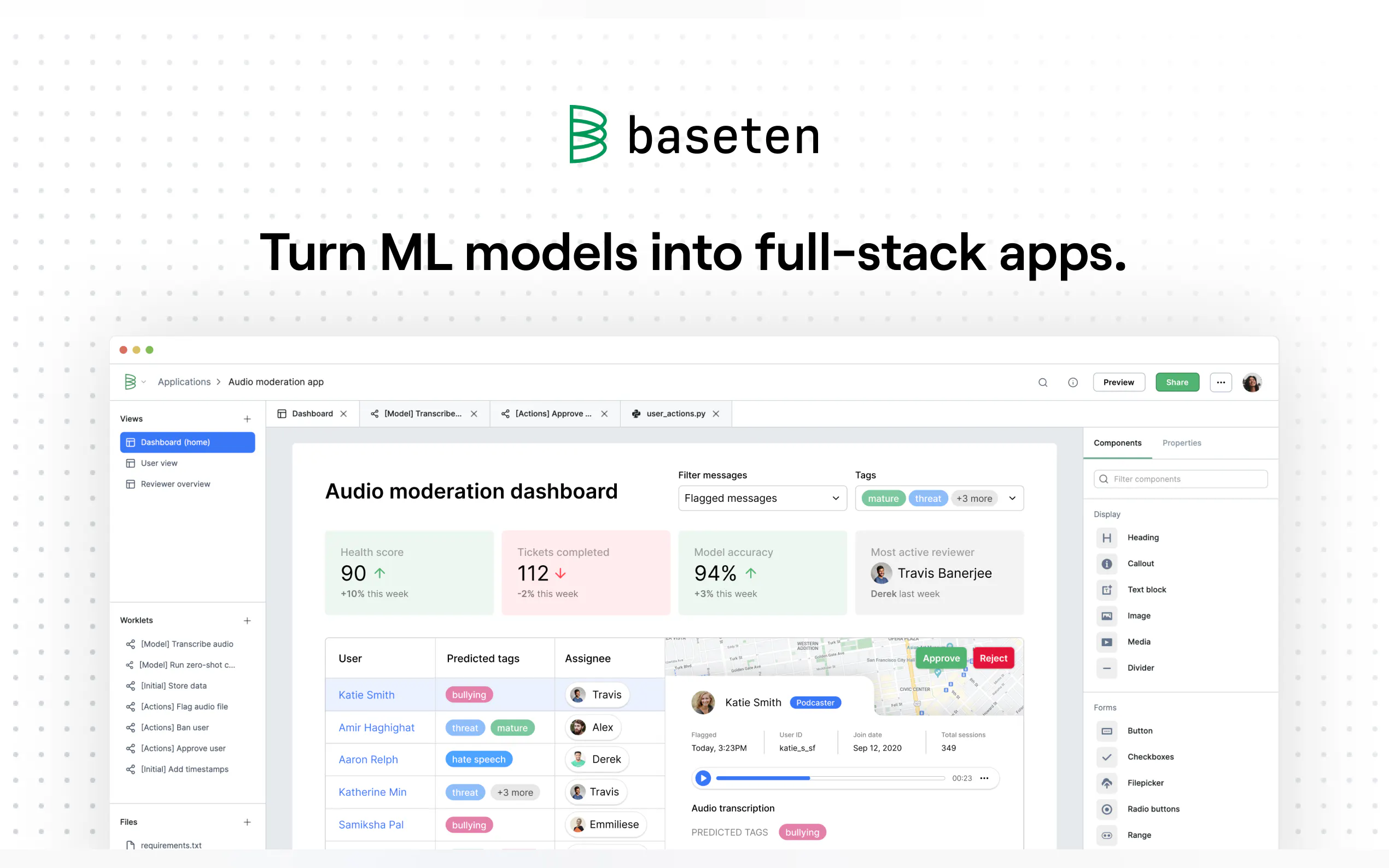

What is Baseten?

Deploying AI models in production just got easier. Baseten offers a fast, scalable, and reliable platform to serve both open-source and custom models, whether in your cloud or ours. Designed for developers and enterprises prioritizing performance, security, and a seamless workflow, Baseten helps you scale AI inference with confidence.

Key Features

🚀 High-Performance Inference

Achieve blazing-fast speeds with up to 1,500 tokens per second and cold starts optimized for mission-critical applications. Baseten’s infrastructure ensures low latency, making it ideal for real-time use cases like chatbots and virtual assistants.

🛠️ Developer-Friendly Workflow

With Truss, Baseten’s open-source model packaging tool, you can deploy models in just a few commands. Whether you’re working with PyTorch, TensorFlow, or Triton, Truss simplifies the transition from development to production.
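As a rough illustration of what gets packaged, a Truss project typically centers on a `model/model.py` file defining a `Model` class with `load` and `predict` methods. The sketch below follows that shape. The "model" itself is a placeholder function and the `text` input key is an assumption for illustration, not anything Baseten prescribes.

```python
class Model:
    """Minimal Truss-style model class with a placeholder model."""

    def __init__(self, **kwargs):
        # Truss passes configuration as keyword arguments; this sketch ignores them.
        self._model = None

    def load(self):
        # Runs once at startup. A real model would load its weights here;
        # this stand-in simply upper-cases its input.
        self._model = lambda text: text.upper()

    def predict(self, model_input):
        # Runs per request. The "text" key is a hypothetical input field.
        return {"output": self._model(model_input["text"])}
```

With the Truss CLI installed, a project of this shape is usually scaffolded with `truss init` and deployed with `truss push`. Check the Truss documentation for the current options.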

💼 Enterprise-Ready Security

Baseten meets the highest standards for enterprise needs, offering HIPAA compliance and SOC 2 Type II certification. Deploy securely in your cloud or as a self-hosted solution with single-tenancy isolation.

📈 Effortless Autoscaling

Automatically scale your models to handle traffic spikes without overpaying for compute. Baseten’s autoscaler ensures optimal resource allocation, so your models are always available and cost-efficient.
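To make the idea concrete, here is a minimal sketch of one common autoscaling rule: size the replica count to the number of in-flight requests, bounded by a minimum and a maximum. This is not Baseten's actual algorithm. The function name, parameters, and defaults are all hypothetical.

```python
import math


def desired_replicas(in_flight_requests, concurrency_target,
                     min_replicas=0, max_replicas=10):
    """Return a replica count for the current load (illustrative only)."""
    if concurrency_target <= 0:
        raise ValueError("concurrency_target must be positive")
    # Enough replicas that each handles at most `concurrency_target` requests.
    needed = math.ceil(in_flight_requests / concurrency_target)
    # Clamp to the configured bounds so costs stay capped and a floor is kept.
    return max(min_replicas, min(max_replicas, needed))
```

For example, 9 in-flight requests with a target of 4 per replica gives 3 replicas, while a spike to 100 requests is capped at the maximum of 10.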

🔍 Comprehensive Observability

Monitor your models in real-time with detailed logs, metrics, and cost-tracking tools. Quickly identify and resolve issues to maintain reliability and performance.

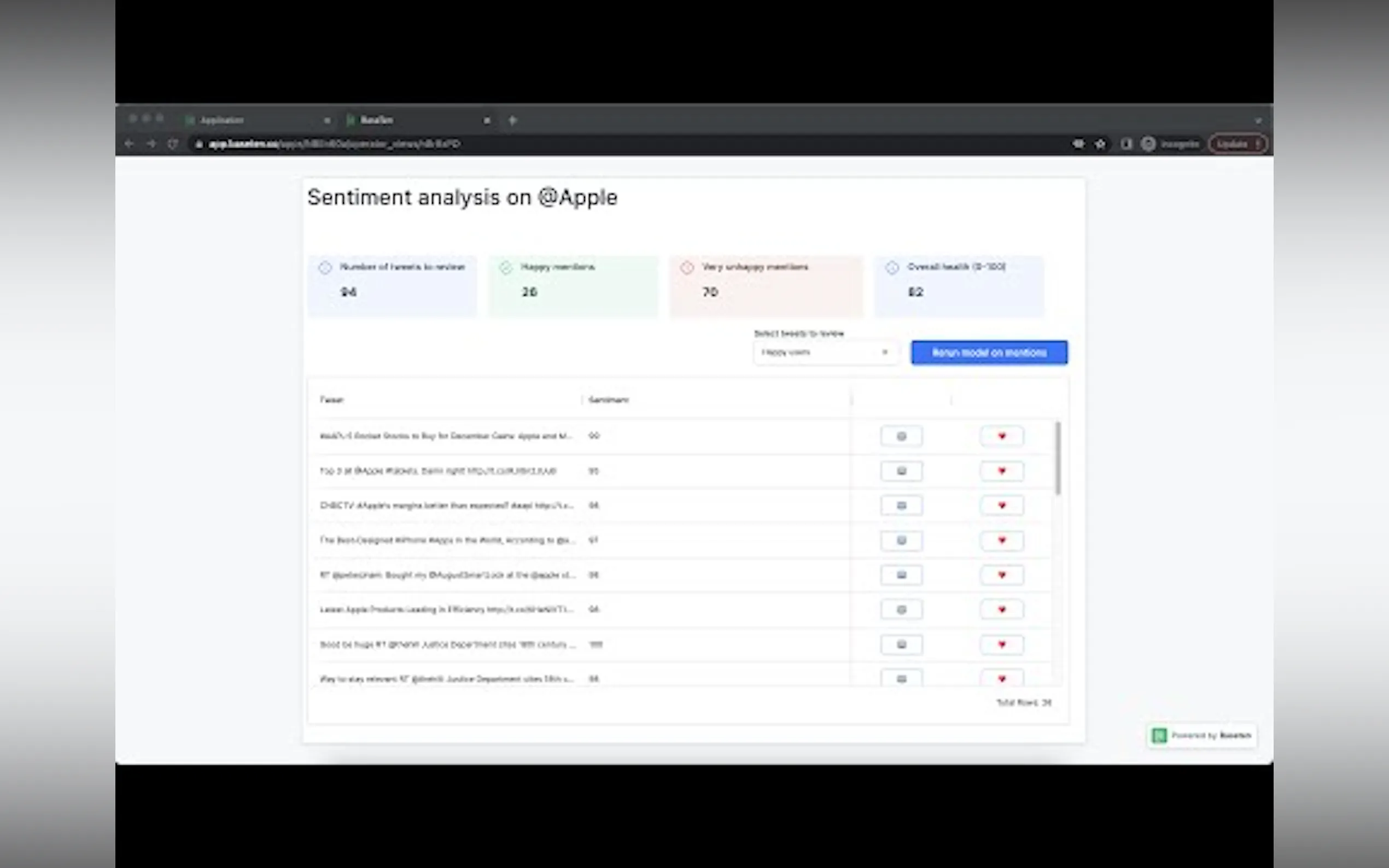

Use Cases

Interactive Applications

Power real-time experiences like chatbots, virtual assistants, or translation services with Baseten’s low-latency inference and autoscaling capabilities.

Enterprise AI Solutions

Deploy secure, high-performance models for critical business operations, ensuring compliance with industry standards like HIPAA and SOC 2.

Multi-Model Workflows

Build and orchestrate complex AI workflows by chaining multiple models together, all managed within Baseten’s intuitive platform.
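Chaining models generally means feeding one model's output into the next. The sketch below shows that pattern in plain Python. It does not use Baseten's own orchestration API, and `transcribe` and `summarize` are hypothetical stand-ins for real model calls.

```python
def chain(*steps):
    """Compose steps so each receives the previous step's output."""
    def run(value):
        for step in steps:
            value = step(value)
        return value
    return run


def transcribe(audio):
    # Stand-in for a speech-to-text model; reads a pre-filled field.
    return audio["transcript"]


def summarize(text):
    # Stand-in for a summarization model; keeps the first sentence.
    return text.split(".")[0] + "."


pipeline = chain(transcribe, summarize)
```

Calling `pipeline({"transcript": "Hello world. More text."})` runs both steps in order. In production, each step would instead call a deployed model endpoint.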

Why Choose Baseten?

Baseten combines cutting-edge performance, developer-friendly tools, and enterprise-grade security to make AI model deployment seamless. Whether you’re scaling inference in your cloud or ours, Baseten ensures your models are fast, reliable, and cost-effective.

Ready to accelerate your AI deployment? Get started today or talk to our sales team to learn more.

Baseten Alternatives

Run the top AI models using a simple API, pay per use. Low cost, scalable and production ready infrastructure.

-

Stop struggling with AI infra. Novita AI simplifies AI model deployment & scaling with 200+ models, custom options, & serverless GPU cloud. Save time & money.

-

TitanML Enterprise Inference Stack enables businesses to build secure AI apps. Flexible deployment, high performance, extensive ecosystem. Compatibility with OpenAI APIs. Save up to 80% on costs.

-

Modelbit lets you train custom ML models with on-demand GPUs and deploy them to production environments with REST APIs.

-

NetMind: Your unified AI platform. Build, deploy & scale with diverse models, powerful GPUs & cost-efficient tools.