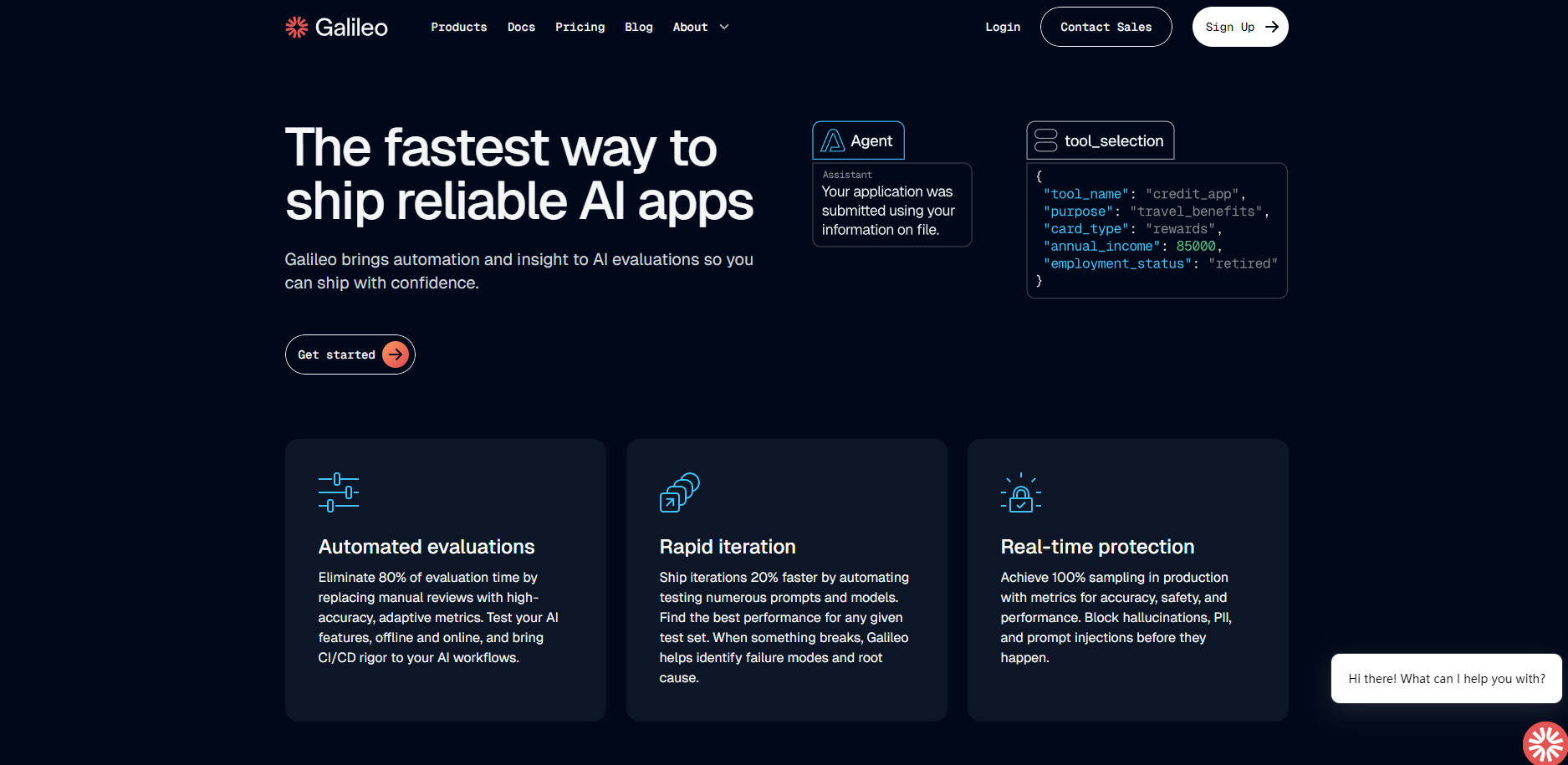

What is Galileo?

Building reliable generative AI applications at scale presents unique challenges. Unlike traditional software, AI outputs can vary, making consistent quality control and debugging difficult. As models and data evolve, ensuring your application behaves as expected requires continuous vigilance and sophisticated evaluation tools. This is where Galileo AI comes in. Designed specifically for AI teams, Galileo provides a comprehensive platform to evaluate, iterate on, monitor, and protect your generative AI applications, helping you ship with confidence and speed.

Key Capabilities

✨ Automate Evaluations: Replace time-consuming manual reviews with high-accuracy, adaptive metrics. Conduct rigorous testing for your AI features, both offline during development and online in production, integrating AI evaluation into your standard CI/CD workflows.

⚡ Accelerate Iteration: Speed up your development cycles by automating the testing of numerous prompts and models simultaneously. Galileo helps you quickly identify performance issues, pinpoint root causes, and understand failure modes to guide effective fixes.

🛡️ Ensure Real-time Protection: Achieve comprehensive monitoring in production with low-latency metrics for accuracy, safety, and performance. Proactively block prompt injections on the way in and undesirable outputs such as hallucinations and PII leakage before they reach users.

🔬 Leverage a Powerful Evaluation Engine: Access a flexible system powered by prebuilt, accurate evaluators and the ability to easily create custom metrics tailored to your specific application. Continuously improve your evaluation criteria with techniques like Continuous Learning with Human Feedback (CLHF).

📊 Gain End-to-End Visibility: Track your AI application's performance throughout its lifecycle, from initial prompt design through production monitoring. Visualize trends, set up alerts for potential issues, and debug efficiently with detailed traces.
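
To make the CI/CD idea above concrete, here is a minimal sketch of an evaluation gate that a pipeline step could run. It does not use Galileo's SDK: `EvalCase`, `keyword_score`, and `run_eval_gate` are hypothetical names, and the keyword check is a toy stand-in for a real evaluator.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple


@dataclass(frozen=True)
class EvalCase:
    """One test prompt plus a keyword the answer is expected to contain."""
    prompt: str
    expected_keyword: str


def keyword_score(output: str, case: EvalCase) -> float:
    """Toy metric (an assumption): 1.0 if the keyword appears, else 0.0."""
    return 1.0 if case.expected_keyword.lower() in output.lower() else 0.0


def run_eval_gate(
    generate: Callable[[str], str],
    cases: Sequence[EvalCase],
    threshold: float = 0.8,
) -> Tuple[float, bool]:
    """Score every case and report whether the mean clears the threshold."""
    if not cases:
        raise ValueError("cases must not be empty")
    scores = [keyword_score(generate(case.prompt), case) for case in cases]
    mean_score = sum(scores) / len(scores)
    return mean_score, mean_score >= threshold
```

A CI job could call `run_eval_gate` with the application's generation function and exit with a non-zero status when the second return value is `False`, so a quality regression fails the build like any other test.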

Practical Applications

Debugging Complex Issues: When your RAG application starts generating incorrect answers, use Galileo's token-level analysis and root cause identification features to pinpoint whether the issue stems from retrieval errors, hallucinated content, or incorrect tool usage. The analysis draws on millions of signals processed by the platform, and the system can even suggest potential fixes, such as adding specific few-shot examples.
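
As a rough illustration of the retrieval-versus-generation distinction, the sketch below triages a single failed RAG example with a simple substring check. This is a deliberately naive heuristic, not Galileo's token-level analysis, and `triage_rag_failure` is a hypothetical helper.

```python
from typing import Sequence


def triage_rag_failure(
    reference_answer: str,
    retrieved_chunks: Sequence[str],
    model_answer: str,
) -> str:
    """Classify one RAG example as ok, retrieval_error, or generation_error.

    Assumption: the reference answer is a short string that should appear
    verbatim (case-insensitively) in both the context and the answer.
    """
    reference = reference_answer.lower()
    if reference in model_answer.lower():
        return "ok"
    in_context = any(reference in chunk.lower() for chunk in retrieved_chunks)
    if not in_context:
        # The retriever never surfaced the fact, so the model had no chance.
        return "retrieval_error"
    # The fact was in the context but the model still answered wrongly.
    return "generation_error"
```

Counting the labels over a batch of failures gives a first hint about where to invest: improving the retriever, or adjusting the prompt and few-shot examples.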

Comparing Model Performance: Before deploying a new LLM or changing your prompting strategy, upload your test datasets to Galileo. Run automated evaluations side by side, comparing metrics across correctness, safety, and relevance, to make data-driven decisions about which approach yields the best results for your specific use case.
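
The side-by-side comparison described above can be sketched in a few lines of plain Python. The names `compare_variants` and `best_variant` are hypothetical, and the metric functions are placeholders you would replace with real evaluators; nothing here reflects Galileo's actual API.

```python
from typing import Callable, Dict, Mapping, Sequence

Generator = Callable[[str], str]      # prompt -> model output
Metric = Callable[[str, str], float]  # (prompt, output) -> score in [0, 1]


def compare_variants(
    variants: Mapping[str, Generator],
    prompts: Sequence[str],
    metrics: Mapping[str, Metric],
) -> Dict[str, Dict[str, float]]:
    """Run each variant on the same prompts and average each metric."""
    if not prompts:
        raise ValueError("prompts must not be empty")
    report: Dict[str, Dict[str, float]] = {}
    for variant_name, generate in variants.items():
        outputs = [generate(prompt) for prompt in prompts]
        report[variant_name] = {
            metric_name: sum(
                metric(prompt, output)
                for prompt, output in zip(prompts, outputs)
            ) / len(prompts)
            for metric_name, metric in metrics.items()
        }
    return report


def best_variant(report: Mapping[str, Mapping[str, float]], metric_name: str) -> str:
    """Return the variant with the highest mean score on one metric."""
    return max(report, key=lambda name: report[name][metric_name])
```

Running every variant on the same prompts keeps the comparison fair: any difference in the averaged scores comes from the model or prompt change rather than from different test data.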

Implementing Production Guardrails: Deploy Galileo's low-latency evaluators directly into your production environment. Set up policies to automatically detect and block harmful responses, PII, or hallucinations in real time, ensuring your application maintains quality and safety standards even as user inputs vary and models evolve.
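
A guardrail policy of this kind boils down to "check the response, then pass it through or replace it". The sketch below shows that shape with two regular expressions standing in for real PII evaluators. The patterns, `GuardrailResult`, and `apply_guardrail` are illustrative assumptions, far simpler than a production detector and unrelated to Galileo's SDK.

```python
import re
from dataclasses import dataclass
from typing import List

# Simplified patterns (assumptions): real PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


@dataclass
class GuardrailResult:
    allowed: bool          # False when the response was blocked
    violations: List[str]  # names of the checks that fired
    text: str              # text that is safe to return to the user


def apply_guardrail(
    response: str,
    fallback: str = "Sorry, I can't share that information.",
) -> GuardrailResult:
    """Block a response that appears to contain an email address or SSN."""
    violations: List[str] = []
    if EMAIL_RE.search(response):
        violations.append("email")
    if SSN_RE.search(response):
        violations.append("ssn")
    if violations:
        return GuardrailResult(allowed=False, violations=violations, text=fallback)
    return GuardrailResult(allowed=True, violations=[], text=response)
```

Returning the list of violations alongside the safe text lets the application log why a response was blocked while the user sees only the fallback message.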

Galileo AI provides the essential tools AI teams need to navigate the complexities of generative AI development. By offering automated, accurate, and low-latency evaluation, powerful debugging insights, and real-time production protection, Galileo empowers you to build, test, and deploy reliable AI applications faster and with greater confidence. It's an end-to-end platform designed to bring rigor and insight to your AI workflows.