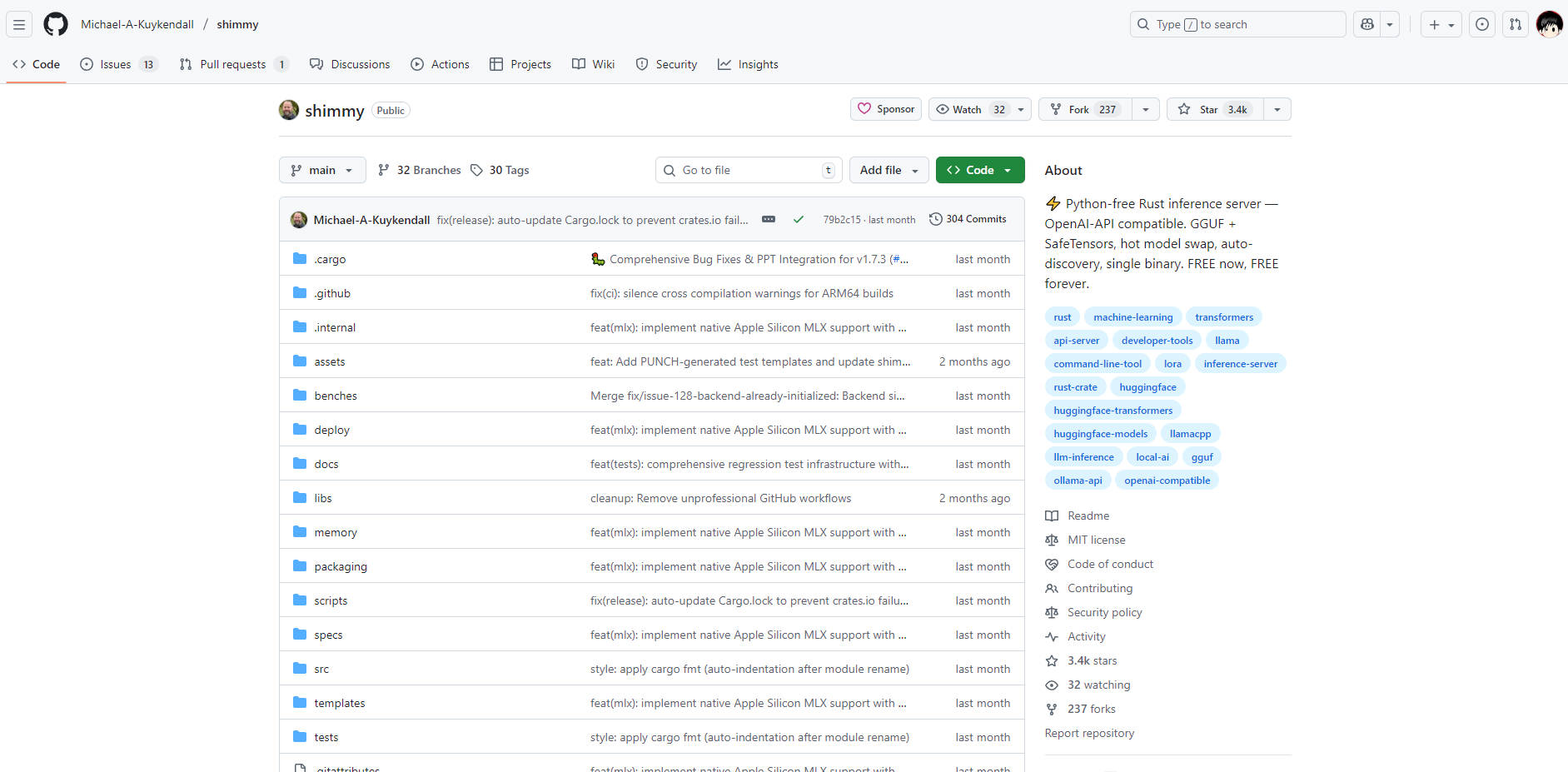

What is Shimmy?

Shimmy is a high-performance, lightweight inference server built entirely in Rust, designed to be a 100% compliant drop-in replacement for the OpenAI API. It solves the complexity, cost, and privacy challenges associated with local LLM development by providing a fast, single-binary solution for running GGUF and SafeTensors models. For developers, this means seamless integration of powerful, private language models into existing toolchains without any code changes or external dependencies.

Key Features

🔌 Seamless OpenAI API Drop-in Compatibility

Shimmy provides API endpoints that mirror the official OpenAI specification (/v1/chat/completions, /v1/models). This crucial compatibility allows you to point your existing tools—including OpenAI SDKs (Python, Node.js), VSCode extensions, Cursor IDE, and Continue.dev—to your local Shimmy server simply by changing the API baseURL, requiring zero code modifications.
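As a rough illustration of what "changing the base URL" looks like in practice, the sketch below builds an OpenAI-style chat completion request against a local server using only the Python standard library. The host, port, and model name are placeholders chosen for this example, not values confirmed by Shimmy; substitute whatever your server reports on startup.

```python
import json
import urllib.request

# Assumption: a Shimmy server is listening locally. The host and port are
# placeholders, since the server may auto-allocate its port.
BASE_URL = "http://127.0.0.1:11435/v1"


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    # "my-local-model" is a hypothetical model name for illustration.
    req = build_chat_request(BASE_URL, "my-local-model", "Say hello.")
    print(req.full_url)
    # Uncomment once a server is running at BASE_URL:
    # print(send(req)["choices"][0]["message"]["content"])
```

The only Shimmy-specific part is `BASE_URL`; the payload shape follows the OpenAI chat completions format, which is why existing SDKs can be redirected by overriding their base URL setting.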

📦 Rust-Native, Python-Free Deployment

Built using Rust and packaged as a compact 4.8MB single binary, Shimmy eliminates the common headaches associated with Python dependency management, virtual environments, and complex runtime libraries. This architecture ensures memory safety, minimal overhead, maximum portability across platforms (Windows, macOS, Linux), and significantly faster deployment times.

🧠 Advanced MOE Hybrid Acceleration

Leverage intelligent CPU/GPU hybrid processing to run massive Mixture of Experts (MOE) models, including those exceeding 70 billion parameters, effectively on consumer hardware. Shimmy automatically handles CPU MOE Offloading, strategically placing layers across system RAM and VRAM to maximize performance and memory efficiency, making large-scale LLMs accessible even with limited VRAM.

⚙️ Zero-Configuration Auto-Discovery

Get models running instantly without setup wizards or configuration files. Shimmy automatically detects and loads models from common locations, including the Hugging Face cache, Ollama directories, and local paths. It also auto-allocates ports to prevent conflicts and automatically detects LoRA adapters for specialized models, ensuring a true "just works" experience.
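To make the idea of auto-discovery and port auto-allocation concrete, here is a minimal sketch of both techniques in Python. It is not Shimmy's implementation: the search directories, the suffix-based matching, and the function names are assumptions made for this example, and a suffix match like this may not catch every model store layout.

```python
import socket
from pathlib import Path

# Assumption: illustrative search locations only; a real installation may
# keep models elsewhere.
DEFAULT_SEARCH_DIRS = [
    Path.home() / ".cache" / "huggingface" / "hub",
    Path.cwd() / "models",
]
MODEL_SUFFIXES = {".gguf", ".safetensors"}


def discover_models(search_dirs):
    """Return sorted paths of model files found under the given directories."""
    found = set()
    for root in search_dirs:
        root = Path(root)
        if not root.is_dir():
            continue  # Silently skip locations that do not exist.
        for path in root.rglob("*"):
            if path.is_file() and path.suffix.lower() in MODEL_SUFFIXES:
                found.add(path)
    return sorted(found)


def pick_free_port(host="127.0.0.1"):
    """Ask the OS for an unused TCP port by binding to port 0."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.bind((host, 0))
        return sock.getsockname()[1]


if __name__ == "__main__":
    for model_path in discover_models(DEFAULT_SEARCH_DIRS):
        print(model_path)
    print("free port:", pick_free_port())
```

Binding to port 0 lets the operating system choose an unused port, which avoids hard-coding a number that might already be taken. Note that the port is released when the socket closes, so a real server would keep the bound socket open rather than look the port up and rebind.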

Use Cases

Shimmy is engineered to enhance developer productivity, privacy, and cost efficiency across several critical scenarios:

- Ensuring Data Privacy and Security: For organizations or projects handling sensitive, proprietary, or regulated data, Shimmy enables you to run all code analysis, data querying, and model inference entirely on-premises. Your information remains local, so nothing is transmitted to an external API, no third-party access logs are created, and compliance reviews become simpler.

- Accelerating Local Development and Testing: Eliminate API costs, rate limits, and network round trips during rapid prototyping and testing cycles. Developers can run thousands of local model calls without per-call charges, using the same standard OpenAI SDKs and tooling, which speeds up iteration and reduces dependency on cloud infrastructure.

- Deploying Large Models on Consumer Hardware: Utilize the MOE CPU Offloading feature to deploy high-capability 70B+ parameter models on standard workstations or laptops. This allows small teams or individual developers to access state-of-the-art model performance without the prohibitive cost and complexity of dedicated enterprise-grade GPU clusters.

Why Choose Shimmy?

Shimmy stands apart by offering a unique combination of technical robustness, uncompromising performance, and a strong commitment to accessibility:

- Unwavering Commitment to Free Software: Shimmy is proudly and permanently free, released under the permissive MIT license. There are no hidden fees, paid tiers, or planned pivots to a subscription model, ensuring long-term stability and cost predictability for all users.

- Superior Technical Foundation: Built on Rust and utilizing the industry-standard llama.cpp backend for GGUF inference, Shimmy provides a memory-safe, asynchronous, and high-performance foundation. This architecture guarantees reliability and speed, especially when handling complex tasks like dynamic port management and smart model preloading.

- Performance Through Advanced Features: Features like Smart Model Preloading (background loading with usage tracking for instant model switching) and Response Caching (LRU + TTL cache delivering up to 40% performance gains on repeat queries) ensure that local inference doesn't just work, it works fast.
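For readers unfamiliar with the "LRU + TTL" combination mentioned for Response Caching, the following is a small generic sketch of that cache policy in Python. It illustrates the technique only and makes no claim about how Shimmy implements it; the class name, parameters, and defaults are hypothetical.

```python
import time
from collections import OrderedDict


class LruTtlCache:
    """Cache that evicts the least recently used entry and expires old ones."""

    def __init__(self, max_entries=128, ttl_seconds=60.0, clock=time.monotonic):
        self._max_entries = max_entries
        self._ttl = ttl_seconds
        self._clock = clock  # Injectable so expiry can be tested without sleeping.
        self._entries = OrderedDict()  # key -> (expires_at, value)

    def get(self, key):
        """Return the cached value, or None if it is missing or expired."""
        item = self._entries.get(key)
        if item is None:
            return None
        expires_at, value = item
        if self._clock() >= expires_at:
            del self._entries[key]  # TTL elapsed: drop the stale entry.
            return None
        self._entries.move_to_end(key)  # Mark as most recently used.
        return value

    def put(self, key, value):
        """Store a value and evict the oldest entries if over capacity."""
        self._entries[key] = (self._clock() + self._ttl, value)
        self._entries.move_to_end(key)
        while len(self._entries) > self._max_entries:
            self._entries.popitem(last=False)  # Evict least recently used.


if __name__ == "__main__":
    cache = LruTtlCache(max_entries=2, ttl_seconds=30.0)
    cache.put("prompt: hello", "cached response")
    print(cache.get("prompt: hello"))
```

In a response cache of this kind, the key would typically be derived from the full request (model plus messages plus sampling parameters), so that only genuinely identical queries hit the cache.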

Conclusion

Shimmy delivers the speed, security, and compatibility required for modern local LLM development. By combining the high performance of a Rust-native architecture with universal OpenAI API standards, it provides a stable, robust, and cost-free foundation for integrating advanced language models directly into your workflow.

Explore how Shimmy can enhance your development process today and bring powerful, private inference directly to your desktop.