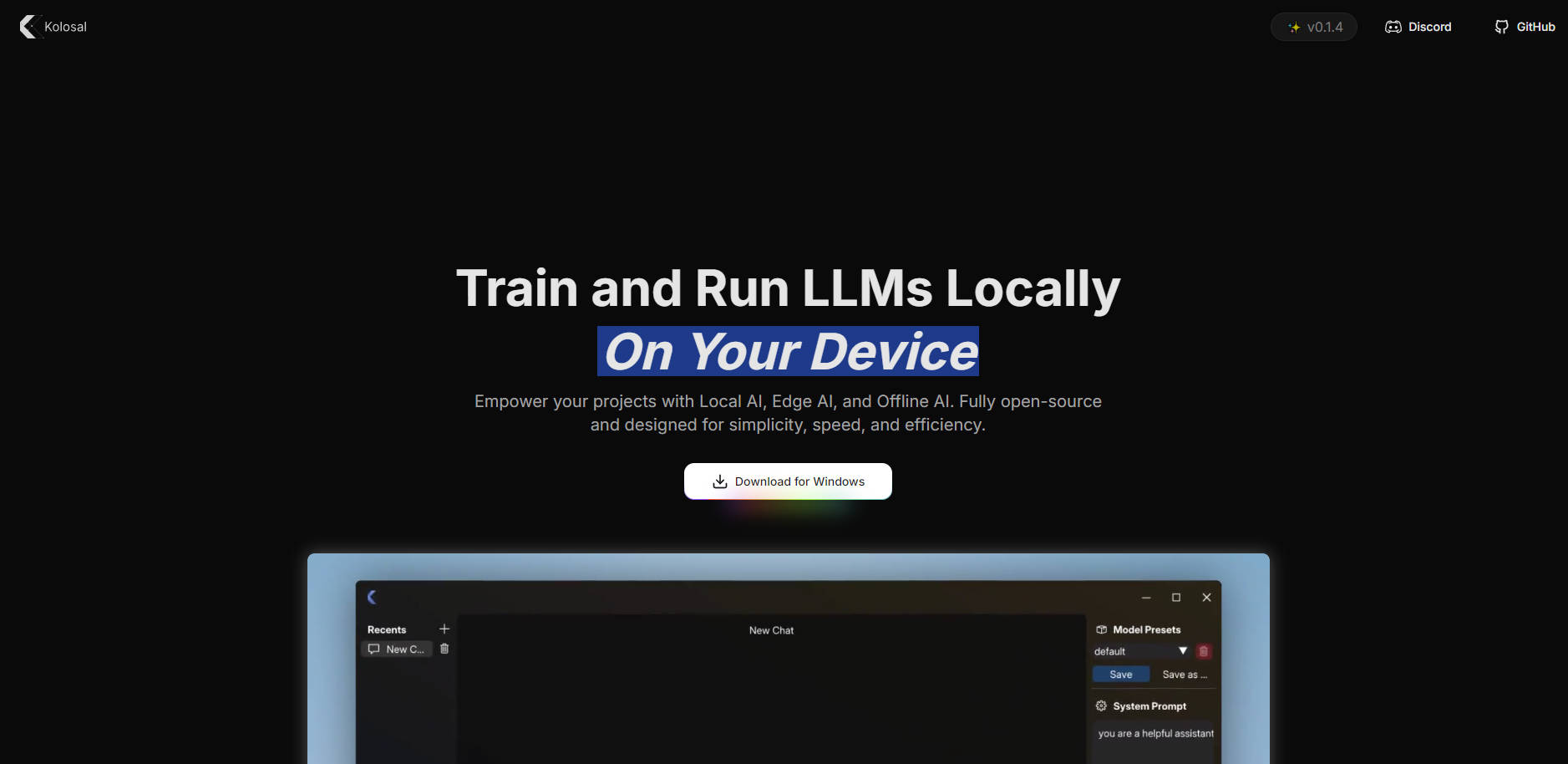

What is Kolosal AI?

You know those massive AI systems like ChatGPT and Gemini that run in remote data centers, consume vast amounts of energy, and require sending your data to someone else’s servers? What if you could run powerful language models right on your own device, whether it’s a laptop, a desktop, or even a Raspberry Pi, without sacrificing speed or efficiency? Meet Kolosal AI, an open-source platform that lets you train and deploy large language models (LLMs) locally, keeping your data private and your energy consumption low. And the best part? It’s only 20 MB in size, yet the project claims it runs LLMs just as fast as, if not faster than, its bulkier competitors.

Key Features:

🚀 Ultra-Lightweight

Kolosal AI is just 20 MB, which the project puts at 0.1–0.2% of the size of platforms like Ollama or LM Studio, yet it is built to deliver top-tier performance.

🔒 Privacy Protection

Run LLMs on your device, ensuring all your data stays local and secure. No need to send sensitive information to the cloud.

🌱 Eco-Friendly

Running AI models on your own device avoids round trips to cloud data centers, which can cut energy use compared to cloud-based AI systems and contribute to a greener planet.

⚙️ Broad Compatibility

Whether you're running on a CPU with AVX2 instructions or on an AMD or NVIDIA GPU, Kolosal AI has you covered. It even works on edge devices like the Raspberry Pi.
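To check whether an x86 machine meets the AVX2 requirement before installing, a minimal sketch like the following can help. It is not part of Kolosal AI. It assumes Linux, where the kernel lists CPU features on the `flags` lines of `/proc/cpuinfo`, and the function names `cpu_flags_have_avx2` and `host_has_avx2` are hypothetical. AVX2 is an x86 extension, so the check only applies to x86 processors.

```python
from pathlib import Path
from typing import Optional


def cpu_flags_have_avx2(cpuinfo_text: str) -> bool:
    """Return True if any 'flags' line in /proc/cpuinfo-style text lists avx2."""
    for line in cpuinfo_text.splitlines():
        # x86 Linux kernels list CPU features as "flags : fpu vme ... avx2 ..."
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags" and "avx2" in value.split():
            return True
    # ARM boards (e.g. Raspberry Pi) use a "Features" line and never report avx2
    return False


def host_has_avx2(cpuinfo_path: str = "/proc/cpuinfo") -> Optional[bool]:
    """Check the local machine; return None if the cpuinfo file is unavailable
    (for example on macOS or Windows, which do not provide /proc/cpuinfo)."""
    path = Path(cpuinfo_path)
    if not path.is_file():
        return None
    return cpu_flags_have_avx2(path.read_text(errors="replace"))


if __name__ == "__main__":
    result = host_has_avx2()
    if result is None:
        print("Could not read /proc/cpuinfo; check your CPU's specifications instead.")
    elif result:
        print("AVX2 supported")
    else:
        print("AVX2 not reported by this CPU")
```

Run as a script with `python3`, it prints one of the three messages. On macOS or Windows, the CPU vendor's specification sheet is the simpler place to confirm AVX2 support.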

🎛️ Customizable Training

Fine-tune your models with synthetic data generation and personalized guardrails, making it easier to build highly accurate and tailored AI applications.

Use Cases:

1. Local AI Inference for Individual Developers

Imagine you’re a developer who wants to run LLMs on your personal laptop without relying on cloud services. Kolosal AI lets you do just that, offering fast and efficient on-device inference. Whether you're tweaking models for a side project or testing new ideas, you can do it all offline, keeping your data secure.

2. Edge Deployment for IoT Devices

You're part of a team building a smart home device that needs to process language locally to minimize latency and protect user privacy. Kolosal AI enables you to deploy lightweight language models on edge devices, ensuring smooth performance even on low-power hardware like the Raspberry Pi.

3. Custom Model Training for Enterprises

Your business needs to train or fine-tune language models on proprietary data. Instead of relying on expensive cloud-based solutions, Kolosal AI allows you to do this locally, reducing costs and keeping your data in-house. Plus, with multi-GPU support, you can scale training to larger datasets.

Conclusion:

In a world where AI is dominated by cloud-based solutions that consume vast amounts of energy and compromise on privacy, Kolosal AI offers a refreshing alternative. It’s fast, lightweight, and designed to run on your own device—whether that's a high-end GPU workstation or a low-power Raspberry Pi. With its open-source flexibility, broad compatibility, and privacy-first approach, Kolosal AI empowers both individual developers and large enterprises to harness the power of AI without the usual trade-offs. Ready to take control of your AI projects? Download Kolosal AI today and start building the future, locally.

FAQ:

Q: Is Kolosal AI really free to use?

A: Yes! Kolosal AI is open-source and available under the Apache 2.0 license, meaning you can use, modify, and distribute it freely.

Q: Can I run Kolosal AI on my old laptop?

A: Most likely. Kolosal AI is designed to be lightweight and can run efficiently on a wide range of devices, including older laptops and low-power machines like the Raspberry Pi. On x86 laptops, CPU inference requires AVX2 support, which some very old processors lack.

Q: How does Kolosal AI protect my privacy?

A: Since all the data processing happens locally on your device, none of your data is sent to external servers. This ensures your privacy remains intact.

Kolosal AI Alternatives

LM Studio is an easy-to-use desktop app for experimenting with local and open-source Large Language Models (LLMs). The cross-platform desktop app allows you to download and run any ggml-compatible model from Hugging Face, and provides a simple yet powerful model configuration and inferencing UI. The app leverages your GPU when possible.

Explore Local AI Playground, a free app for offline AI experimentation. Features include CPU inferencing, model management, and more.

CogniSelect SDK: Build AI apps that run LLMs privately in the browser. Get zero-cost runtime, total data privacy & instant scalability.

Lemon AI: Your private, self-hosted AI agent. Run powerful, open-source AI on your hardware. Securely tackle complex tasks, save costs, & control your data.

Klee: Your private desktop AI. Run LLMs offline & securely chat with your local documents and notes. Your data never leaves your device.