What is Llama 4?

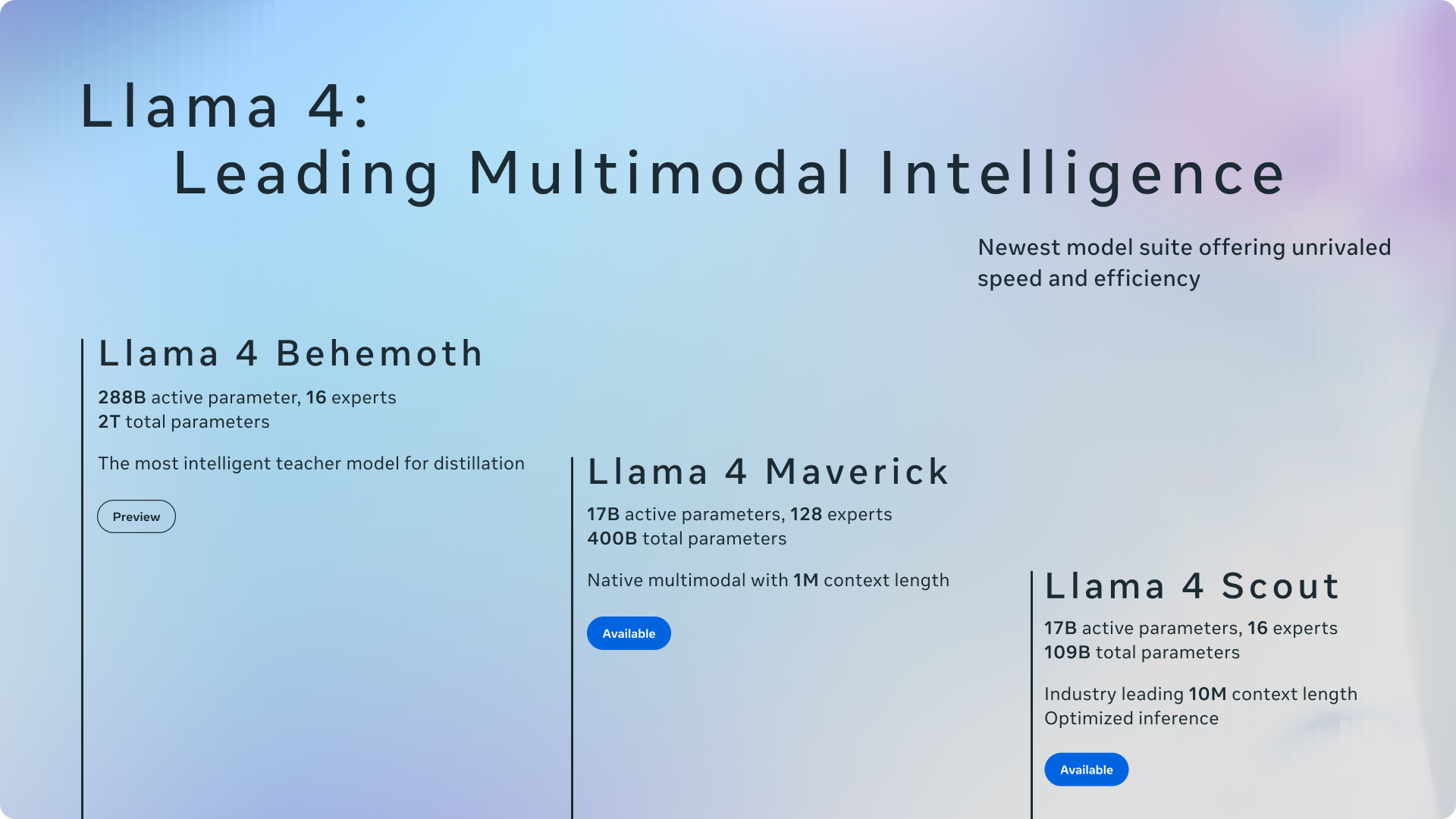

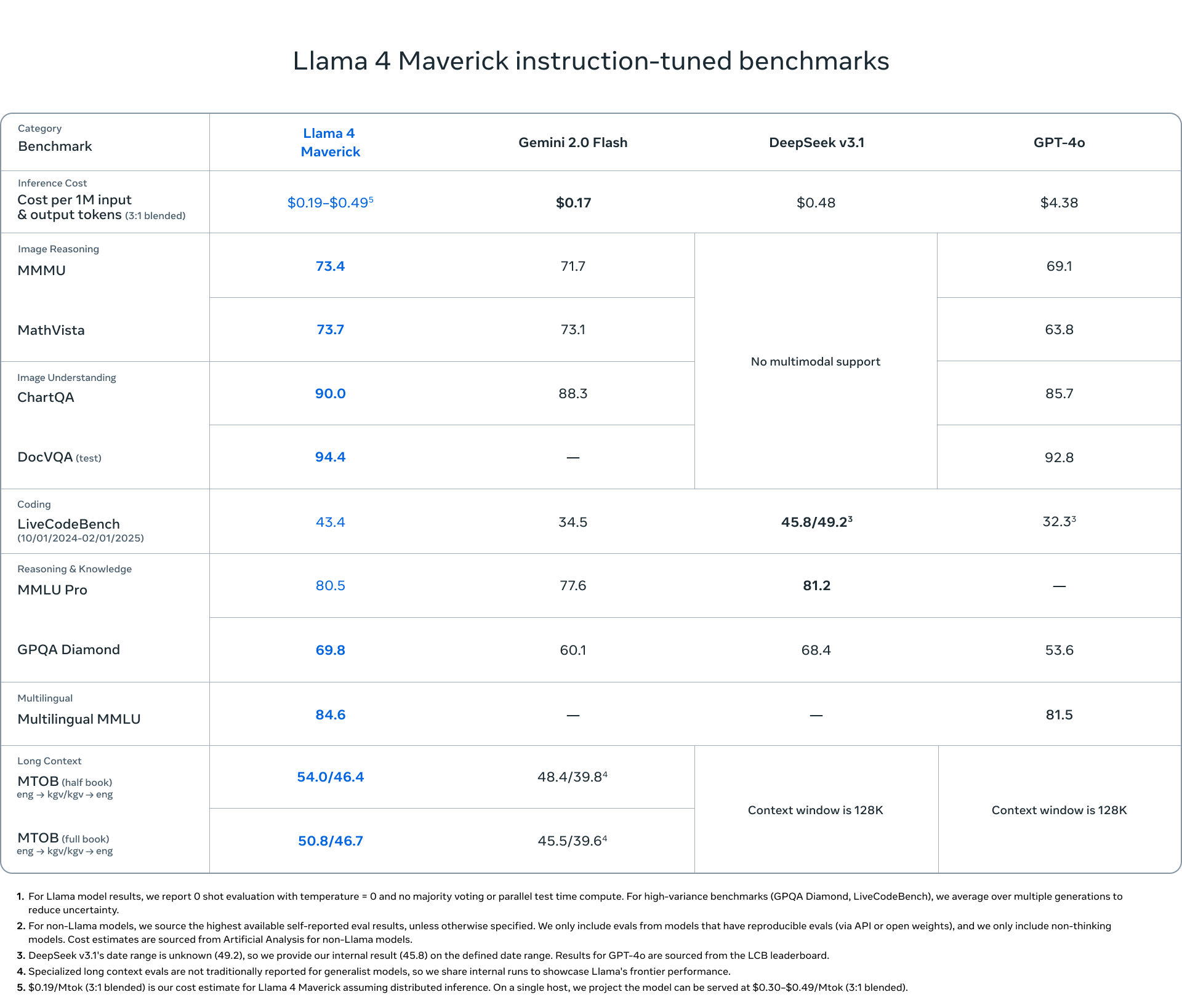

Welcome to Llama 4, the next step in Meta's open-source AI journey. This new series introduces powerful multimodal models designed for developers, researchers, and businesses seeking both high performance and computational efficiency. For the first time, Llama models leverage a Mixture of Experts (MoE) architecture, making them significantly faster and more cost-effective to run and train. Natively designed to understand and generate content across text, images, and video, Llama 4 offers specialized versions like Scout and Maverick, providing tailored solutions for diverse AI application needs.

Key Features

💬 Advanced Language Understanding & Generation: Accurately interprets complex text and generates coherent, context-aware content. Trained on vast text datasets (over 30 trillion tokens across modalities), Llama 4 excels in tasks ranging from creative writing and detailed article composition to sophisticated dialogue interactions, understanding user intent and providing relevant responses.

🖼️ Native Multimodal Processing: Seamlessly integrates and reasons across text, images, and video inputs thanks to its early fusion architecture. Llama 4 can identify objects, scenes, and colors within images, providing descriptions and analysis. The Scout version pushes boundaries with a 10 million token context window, capable of processing enormous documents (millions of words) or analyzing over 20 hours of video content in a single go. Its visual encoder, based on MetaCLIP, is specifically tuned for better alignment with the core language model.

⚡ Efficient MoE Architecture: Employs a Mixture of Experts (MoE) design, a first for the Llama series, enabling faster inference and reduced computational cost. Instead of activating the entire model for every task, MoE routes queries to specialized 'expert' sub-models. For instance, Llama 4 Maverick has 400 billion total parameters but only activates 17 billion for a given input, significantly lowering latency and operational expenses. This efficiency extends to training, where techniques like FP8 precision achieve high computational throughput (e.g., 390 TFLOPs/GPU for Behemoth pre-training) without sacrificing quality.

🌍 Extensive Multilingual Capabilities: Pre-trained on data spanning over 200 languages, Llama 4 offers robust cross-lingual understanding, translation, and content generation. This allows you to build applications that cater to a global audience, breaking down language barriers for communication and information processing tasks. It also supports open-source fine-tuning on specific language datasets.

Use Cases

How can you leverage Llama 4? Here are a few examples:

Building Advanced Research Assistants: Imagine feeding Llama 4 Scout hours of recorded lectures, lengthy research papers containing text and diagrams, or vast codebases. Its 10 million token context window allows it to synthesize information across these extensive inputs, providing comprehensive summaries, answering complex questions based on the material, or even identifying patterns within the code that a human might miss.

Developing Engaging Multimodal Chatbots: Use Llama 4 Maverick to power a customer service bot or a creative companion. Users could upload product images and ask detailed questions, which Maverick can understand visually and respond to contextually. Its strength in creative writing also enables it to generate engaging narratives, marketing copy, or personalized stories based on user prompts that might include both text and images.

Creating Cost-Effective Internal Tools: Deploy Llama 4 Scout (runnable on a single H100 GPU) to build an internal knowledge base search engine for your company. Its MoE architecture ensures efficient operation, allowing employees to quickly find information across company documents, reports, and potentially even internal video archives, without incurring massive computational costs.

Conclusion

Llama 4 marks a significant step forward for accessible, high-performance AI. By combining the efficiency of Mixture of Experts architecture with native multimodality and extensive multilingual support, Meta provides a powerful, flexible, and open-source foundation for your next AI project. Whether you need the immense context window of Scout, the balanced capabilities and image acuity of Maverick, or are anticipating the specialized power of Behemoth, Llama 4 offers compelling options for building sophisticated and efficient AI applications.

Frequently Asked Questions (FAQ)

What are the main differences between Llama 4 and previous Llama versions? Llama 4 introduces several key advancements: it's the first Llama series to use a Mixture of Experts (MoE) architecture for enhanced efficiency; it's natively multimodal, designed from the ground up to handle text, images, and video; it offers specialized versions (Scout, Maverick) tailored for different needs; Scout features an exceptionally large 10 million token context window; and it's trained on a significantly larger and more diverse dataset (>30T tokens across 200+ languages).

What is Mixture of Experts (MoE) and why is it significant in Llama 4? MoE is an architecture where a large model is composed of smaller, specialized sub-models called "experts." When processing an input, the model routes the data only to the most relevant experts, activating just a fraction of the total parameters. This makes inference (running the model) significantly faster and less computationally expensive compared to activating a dense model of equivalent total size. For Llama 4, this means lower latency, reduced serving costs, and more efficient training.

Which Llama 4 model should I choose: Scout or Maverick?

Choose Llama 4 Scout if your primary need is processing extremely long contexts (up to 10M tokens, like very long documents, codebases, or hours of video) or if you need a highly capable model that can run efficiently, potentially even on a single high-end GPU like the H100.

Choose Llama 4 Maverick for general-purpose assistant or chatbot applications where strong performance in creative writing and nuanced image understanding are important. It offers a balance of capabilities and ranks highly on general AI benchmarks like LMSYS.

Llama 4 Behemoth (currently in preview) is being trained with 2 trillion parameters and is showing exceptional promise in STEM benchmarks, targeting highly complex reasoning tasks.

How technically efficient is Llama 4 during training and inference? Llama 4 leverages several techniques for efficiency. The MoE architecture drastically reduces the active parameters during inference. For training, Meta employed FP8 precision, achieving high hardware utilization (e.g., 390 TFLOPs per GPU during Behemoth pre-training) while maintaining model quality. Meta also developed a new method called MetaP for optimizing crucial training hyperparameters, ensuring stability and performance across different scales.

Is Llama 4 truly open source? Yes, Meta is releasing the Llama 4 models under an open-source license, allowing researchers, developers, and businesses to access, modify, and build upon the models freely, fostering innovation within the AI community. The models also support open-source fine-tuning.

More information on Llama 4

Llama 4 Alternatives

Llama 4 Alternatives-

Discover the peak of AI with Meta Llama 3, featuring unmatched performance, scalability, and post-training enhancements. Ideal for translation, chatbots, and educational content. Elevate your AI journey with Llama 3.

-

LlamaIndex builds intelligent AI agents over your enterprise data. Power LLMs with advanced RAG, turning complex documents into reliable, actionable insights.

-

MonsterGPT: Fine-tune & deploy custom AI models via chat. Simplify complex LLM & AI tasks. Access 60+ open-source models easily.

-

The TinyLlama project is an open endeavor to pretrain a 1.1B Llama model on 3 trillion tokens.

-

WordLlama is a utility for natural language processing (NLP) that recycles components from large language models (LLMs) to create efficient and compact word representations, similar to GloVe, Word2Vec, or FastText.