What is LLMClient?

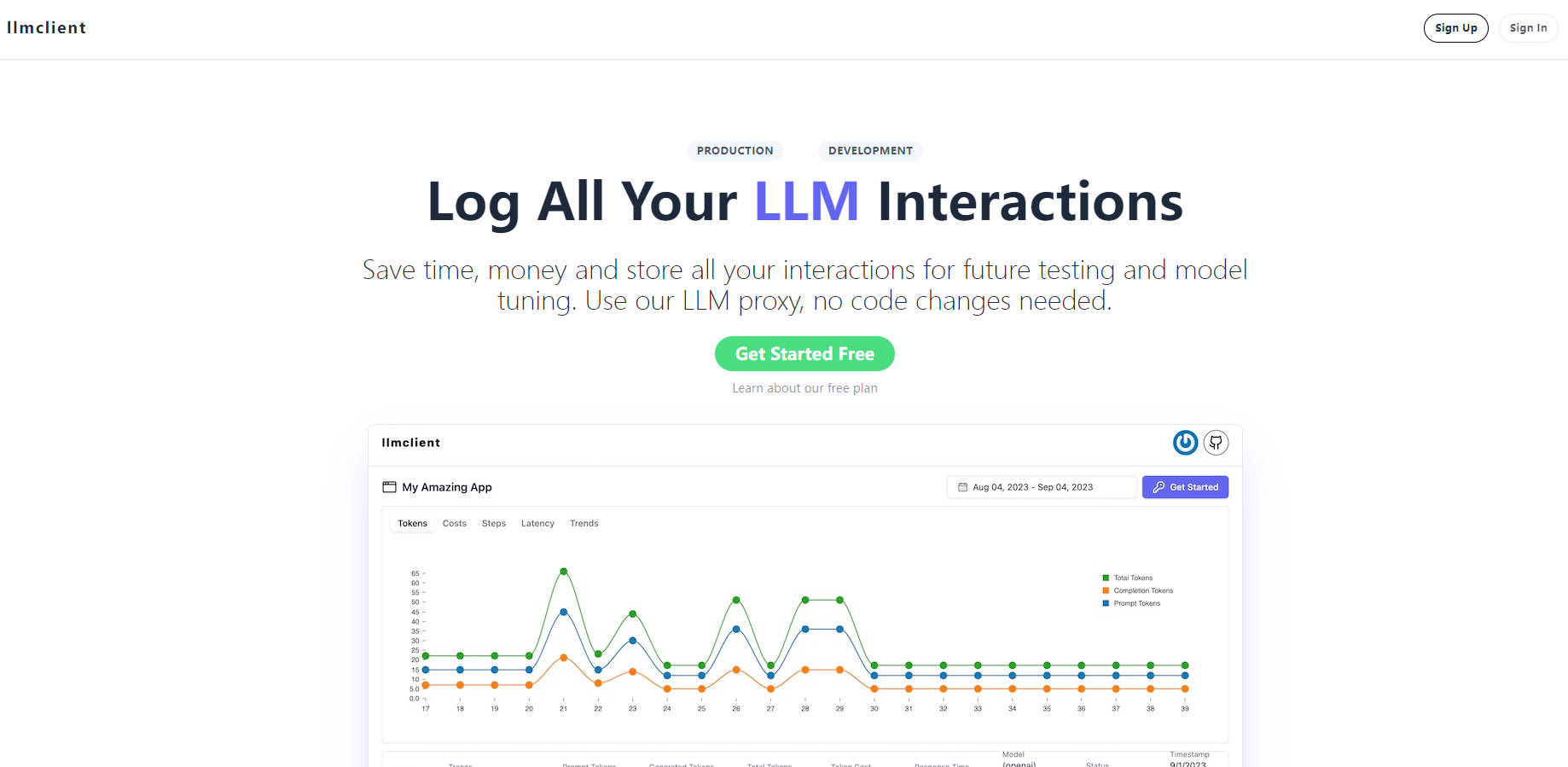

Log all your LLM interactions to save time and money, and keep them for future testing and model tuning.

Key Features:

Use the provided LLM proxy; no code changes are needed.

Keep track of prompts, models, responses, and share logs for debugging during development.

Monitor prompt performance, costs, user activity, and errors in production.

Use the highly scalable and fast hosted proxy or run your own open-source proxy.

Log every detail, including completion prompts, system prompts, chat prompts, function calls, model configuration, and API errors.
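To make the logging features above concrete, here is a minimal sketch of what capturing one interaction could look like. It is not LLMClient's actual API or log schema: the `InteractionLog` fields, the `logged_call` wrapper, and the `call_model` and `sink` callables are all hypothetical names chosen for illustration.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import Callable, Optional


@dataclass
class InteractionLog:
    """One logged LLM call. Field names are hypothetical, not LLMClient's schema."""
    model: str
    prompt: str
    system_prompt: Optional[str] = None
    config: dict = field(default_factory=dict)  # e.g. temperature, max tokens
    response: Optional[str] = None
    error: Optional[str] = None
    latency_ms: float = 0.0


def logged_call(call_model: Callable[..., str], sink: Callable[[str], None], *,
                model: str, prompt: str, system_prompt: Optional[str] = None,
                **config) -> str:
    """Call the model through `call_model` and write one JSON record to `sink`.

    `call_model` stands in for whatever function actually contacts the model.
    The record is written whether the call succeeds or raises.
    """
    record = InteractionLog(model=model, prompt=prompt,
                            system_prompt=system_prompt, config=config)
    start = time.perf_counter()
    try:
        record.response = call_model(model=model, prompt=prompt,
                                     system_prompt=system_prompt, **config)
        return record.response
    except Exception as exc:
        # Keep API errors in the log, then let the caller handle them.
        record.error = f"{type(exc).__name__}: {exc}"
        raise
    finally:
        record.latency_ms = (time.perf_counter() - start) * 1000
        sink(json.dumps(asdict(record)))
```

A proxy does the same bookkeeping on the network path instead of in application code, which is why it can log calls without code changes.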

LLMClient Alternatives

Optimize your AI app with LLMonitor, an observability and logging platform for LLM-based apps.

BenchLLM: Evaluate LLM responses, build test suites, automate evaluations. Enhance AI-driven systems with comprehensive performance assessments.

Revolutionize LLM development with LLM-X! Seamlessly integrate large language models into your workflow with a secure API. Boost productivity and unlock the power of language models for your projects.

Integrate large language models like ChatGPT with React apps using useLLM. Stream messages and engineer prompts for AI-powered features.

An end-to-end platform that gives teams insight into their LLM applications after they reach production.