What is Qwen2-VL?

Qwen2-VL is the latest generation of Qwen's vision-language models, designed to bring clarity and depth to your understanding of the visual world. Built on Qwen2, it offers significant advances in image and video comprehension, making it a versatile tool for a range of applications.

Key Features:

Advanced Image Interpretation: Qwen2-VL understands images of different resolutions and aspect ratios. It reports state-of-the-art results on visual understanding benchmarks including MathVista, DocVQA, RealWorldQA, and MTVQA.

Long-Video Comprehension: Qwen2-VL can understand videos longer than 20 minutes, which enables applications such as video-based question answering, dialogue, and content creation.

Visual Intelligent Agent: With its complex reasoning and decision-making abilities, Qwen2-VL can be integrated into smartphones and robots, allowing them to perform automated operations based on visual cues and textual instructions.

Multilingual Support: Qwen2-VL caters to a global audience by supporting the interpretation of multilingual text in images, including most European languages, Japanese, Korean, Arabic, Vietnamese, and more, in addition to English and Chinese.

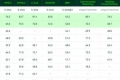

Model Performance: Qwen2-VL is available in 2B, 7B, and 72B parameter sizes and outperforms several leading models, especially in document understanding. The 72B version sets a new benchmark for open-source multimodal models.

Model Limitations: Qwen2-VL also has limitations. It cannot extract audio from videos, its knowledge cutoff is June 2023, and it struggles with complex instructions and scenes, counting, person recognition, and 3D spatial awareness.

Model Architecture: The architecture of Qwen2-VL includes innovations such as dynamic resolution support and Multimodal Rotary Position Embedding (M-ROPE), which improve its ability to process and understand multimodal data.
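To make the dynamic resolution idea concrete, here is a minimal sketch of how the number of visual tokens might scale with image size. It assumes a vision encoder with 14-pixel patches and a 2x2 merge of neighbouring patches into one token; both figures come from public descriptions of the model rather than from this page, and the function name `visual_token_count` is hypothetical. It ignores any minimum or maximum pixel limits a real preprocessing pipeline may apply.

```python
PATCH_SIZE = 14  # assumed edge length of one ViT patch, in pixels
MERGE_SIZE = 2   # assumed: each 2x2 group of patches becomes one token


def visual_token_count(width: int, height: int) -> int:
    """Estimate how many visual tokens an image of this size would produce.

    Illustrative only: each side is rounded to the nearest multiple of
    PATCH_SIZE * MERGE_SIZE, with a floor of one unit per side.
    """
    unit = PATCH_SIZE * MERGE_SIZE  # pixels covered by one merged token per side
    cols = max(1, round(width / unit))
    rows = max(1, round(height / unit))
    return cols * rows


if __name__ == "__main__":
    for size in [(224, 224), (448, 224), (1280, 720)]:
        print(size, visual_token_count(*size))
```

Under these assumptions, a larger or wider image simply yields more tokens instead of being squashed to one fixed input size, which is the point of dynamic resolution.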

Accessibility and Licensing: Qwen2-VL-2B and Qwen2-VL-7B are open-sourced under the Apache 2.0 License, and their integration into platforms like Hugging Face Transformers and vLLM makes them accessible for developers.
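As a rough illustration of the Hugging Face Transformers integration, the sketch below asks the model a question about a local image. It assumes a recent `transformers` release that includes `Qwen2VLForConditionalGeneration`, that `torch` and `Pillow` are installed, and that the checkpoint is published as `Qwen/Qwen2-VL-7B-Instruct`; the helper names `build_messages` and `describe_image` are hypothetical. Treat it as a starting point, not as the official usage.

```python
MODEL_ID = "Qwen/Qwen2-VL-7B-Instruct"  # assumed checkpoint name on the Hugging Face Hub


def build_messages(question: str) -> list:
    """Build a single-turn chat with one image placeholder and one text prompt."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image"},
                {"type": "text", "text": question},
            ],
        }
    ]


def describe_image(image_path: str, question: str = "Describe this image.") -> str:
    # Heavy imports are kept local so build_messages works without them installed.
    from PIL import Image
    from transformers import AutoProcessor, Qwen2VLForConditionalGeneration

    processor = AutoProcessor.from_pretrained(MODEL_ID)
    model = Qwen2VLForConditionalGeneration.from_pretrained(MODEL_ID, torch_dtype="auto")

    image = Image.open(image_path)
    # Render the chat into a prompt string containing the image placeholder tokens.
    prompt = processor.apply_chat_template(
        build_messages(question), tokenize=False, add_generation_prompt=True
    )
    inputs = processor(text=[prompt], images=[image], return_tensors="pt").to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=128)
    # Drop the prompt tokens so only the newly generated answer is decoded.
    answer_ids = output_ids[:, inputs["input_ids"].shape[1]:]
    return processor.batch_decode(answer_ids, skip_special_tokens=True)[0]
```

Calling `describe_image("photo.jpg")` downloads the weights on first use, so the smaller 2B checkpoint may be a more practical choice for a quick trial.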

In conclusion, Qwen2-VL is a powerful tool that enhances visual understanding and offers a wide range of applications. Its advanced features, exceptional performance, and open-source availability make it a valuable resource for developers and researchers alike.

Qwen2-VL Alternatives

- Qwen2.5 series language models offer enhanced capabilities with larger datasets, more knowledge, better coding and math skills, and closer alignment to human preferences. Open-source and available via API.

- Qwen2-Audio integrates two major functions, voice dialogue and audio analysis, in a single model, bringing a new interactive experience to users.

- DeepSeek-VL2, a vision-language model by DeepSeek-AI, processes high-resolution images, offers fast responses with Multi-head Latent Attention (MLA), and excels in diverse visual tasks such as VQA and OCR. Ideal for researchers, developers, and BI analysts.