Best Qwen2-VL Alternatives in 2026

-

Qwen2 is the large language model series developed by the Qwen team at Alibaba Cloud.

-

Qwen2.5 series language models offer enhanced capabilities with larger datasets, more knowledge, better coding and math skills, and closer alignment to human preferences. Open-source and available via API.

-

Qwen2-Audio integrates two major functions, voice dialogue and audio analysis, to give users a richer interactive experience.

-

Yi Visual Language (Yi-VL) model is the open-source, multimodal version of the Yi Large Language Model (LLM) series, enabling content comprehension, recognition, and multi-round conversations about images.

-

DeepSeek-VL2, a vision-language model by DeepSeek-AI, processes high-resolution images, offers fast responses with MLA (Multi-head Latent Attention), and excels in diverse visual tasks like VQA and OCR. Ideal for researchers, developers, and BI analysts.

-

Qwen2-Math is a series of language models built on the Qwen2 LLM specifically for solving mathematical problems.

-

GLM-4.5V: Empower your AI with advanced vision. Generate web code from screenshots, automate GUIs, & analyze documents & video with deep reasoning.

-

CogVLM and CogAgent are powerful open-source visual language models that excel in image understanding and multi-turn dialogue.

-

Unlock powerful multilingual text understanding with Qwen3 Embedding. #1 MTEB, 100+ languages, flexible models for search, retrieval & AI.

-

Qwen-MT delivers fast, customizable AI translation for 92 languages. Achieve precise, context-aware results with MoE architecture & API.

-

CodeQwen1.5, a code expert model from the Qwen1.5 open-source family. With 7B parameters and GQA architecture, it supports 92 programming languages and handles 64K context inputs.

-

Qwen2.5-Turbo by Alibaba Cloud. 1M token context window. Faster, cheaper than competitors. Ideal for research, dev & business. Summarize papers, analyze docs. Build advanced conversational AI.

-

Qwen Code: Your command-line AI agent, optimized for Qwen3-Coder. Automate dev tasks & master codebases with deep AI in your terminal.

-

Agent framework and applications built upon Qwen1.5, featuring Function Calling, Code Interpreter, RAG, and Chrome extension.

-

GLM-4-9B is the open-source version of the latest generation of pre-trained models in the GLM-4 series launched by Zhipu AI.

-

Boost search accuracy with Qwen3 Reranker. Precisely rank text & find relevant info faster across 100+ languages. Enhance Q&A & text analysis.

-

Cambrian-1 is a family of multimodal LLMs with a vision-centric design.

-

Janus: Decoupling Visual Encoding for Unified Multimodal Understanding and Generation

-

C4AI Aya Vision 8B: Open-source multilingual vision AI for image understanding. OCR, captioning, reasoning in 23 languages.

-

LongCat-Video: Unified AI for truly coherent, minute-long video generation. Create stable, seamless Text-to-Video, Image-to-Video & continuous content.

-

A novel Multimodal Large Language Model (MLLM) architecture, designed to structurally align visual and textual embeddings.

-

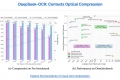

Boost LLM efficiency with DeepSeek-OCR. Compress visual documents 10x with 97% accuracy. Process vast data for AI training & enterprise digitization.

-

With a total of 8B parameters, the model surpasses proprietary models such as GPT-4V-1106, Gemini Pro, Qwen-VL-Max and Claude 3 in overall performance.

-

XVERSE-MoE-A36B: A multilingual large language model developed by XVERSE Technology Inc.

-

WizardLM-2 8x22B is Microsoft AI's most advanced Wizard model. It demonstrates highly competitive performance compared to leading proprietary models, and it consistently outperforms all existing state-of-the-art open-source models.

-

A high-throughput and memory-efficient inference and serving engine for LLMs

-

BAGEL: Open-source multimodal AI from ByteDance-Seed. Understands, generates, edits images & text. Powerful, flexible, comparable to GPT-4o. Build advanced AI apps.

-

OLMo 2 32B: Open-source LLM rivals GPT-3.5! Free code, data & weights. Research, customize, & build smarter AI.

-

RWKV is an RNN with transformer-level LLM performance. It can be trained directly like a GPT (parallelizable), so it combines the best of RNNs and transformers: great performance, fast inference, low VRAM use, fast training, "infinite" ctx_len, and free sentence embeddings.

-

Step-1V: A highly capable multimodal model developed by Jieyue Xingchen (StepFun), showcasing exceptional performance in image understanding, multi-turn instruction following, mathematical ability, logical reasoning, and text creation.