What is NeMo Guardrails?

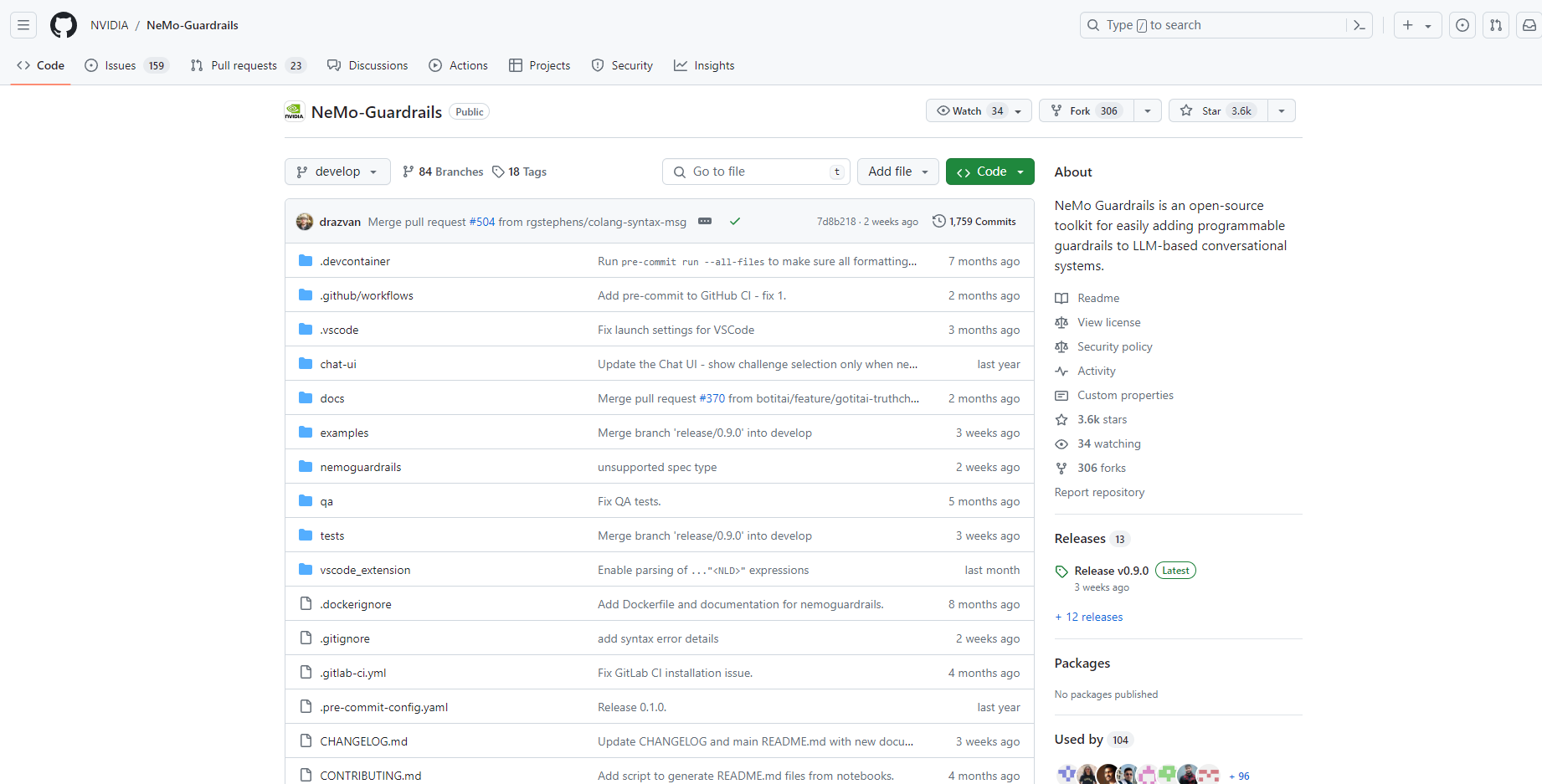

NeMo Guardrails is an innovative open-source toolkit designed to add programmable guardrails to Large Language Model (LLM)-based conversational applications. It offers developers a way to control and guide the output of LLMs, ensuring safer and more reliable interactions. With its support for multiple LLMs and a range of guardrail types, NeMo Guardrails empowers users to create applications that are both responsive and secure.

Key Features:

🛂 Customizable Guardrails: Define specific rules for your LLM’s behavior, such as avoiding certain topics or following predefined conversation paths.

🔄 Seamless Integration: Connect your LLM with other services and tools securely, enhancing the application’s capabilities.

🗣️ Controllable Dialogues: Steer conversations with pre-defined flows, ensuring adherence to conversation design best practices.

🛡️ Vulnerability Protection: Implement mechanisms to protect against common LLM vulnerabilities, like jailbreaks and prompt injections.

🌐 Language Support: Compatible with various LLMs, including OpenAI GPT-3.5, GPT-4, LLaMa-2, Falcon, Vicuna, and Mosaic.

Use Cases:

📚 Retrieval Augmented Generation: Enforce fact-checking and moderation in question-answering systems.

🤖 Domain-specific Assistants: Ensure chatbots stay on topic and follow designed conversational flows.

🛠️ LLM Endpoints: Add guardrails to custom LLMs for safer customer interactions.

🗄️ LangChain Chains: Integrate guardrails with LangChain for enhanced control and security.