What is nanochat?

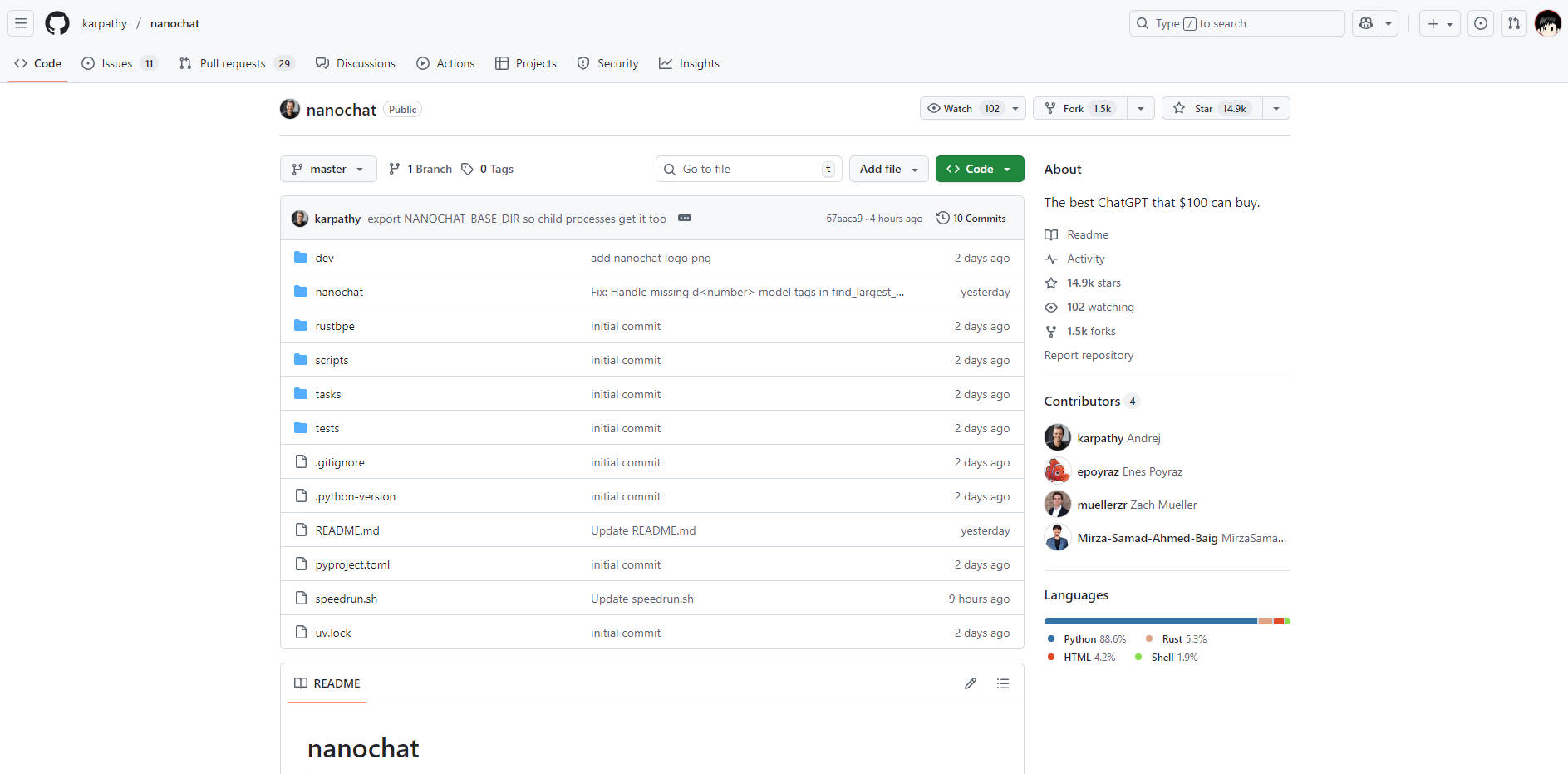

nanochat is a complete, minimal, and highly accessible implementation of a modern Large Language Model (LLM) designed for developers and researchers. It addresses the complexity and prohibitive cost typically associated with LLM development by packaging the entire pipeline—from tokenization to a functional web UI—into approximately 1000 lines of clean, hackable code. For anyone seeking to master the LLM stack by building and running their own model end-to-end, nanochat provides an unparalleled learning and prototyping environment.

Key Features

nanochat is engineered for comprehensive understanding and accessibility, enabling you to control every phase of the LLM lifecycle on a compact, dedicated system.

🛠️ End-to-End Pipeline Integration

Unlike frameworks that abstract away critical components, nanochat provides a full-stack implementation covering tokenization, pretraining, finetuning, evaluation, inference, and a simple web interface. This cohesive design ensures you can execute the entire process using a single automated script (speedrun.sh), providing immediate, verifiable results.

💡 Minimal, Hackable Codebase

The entire implementation is housed in a dependency-lite codebase of approximately 1000 lines (utilizing Python, Rust, HTML, and Shell). This minimal architecture drastically lowers the cognitive load, allowing developers to read, understand, and modify every component of the system without navigating massive configuration objects or complex factory patterns.

💰 Affordable Single-Node Deployment

Achieve full LLM training and deployment on a single 8XH100 node for under $1000. The $100 tier model, for example, can be trained and ready for inference in about four hours, making large-scale LLM experimentation accessible on a practical budget. This efficiency comes from careful resource management and streamlined code, avoiding the need for multi-million dollar capital expenditure.
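As a rough illustration of the budget arithmetic, the sketch below derives the hourly price implied by the two figures quoted above ($100 and about four hours on one 8-GPU node). The function names are hypothetical, and the resulting rates are back-of-the-envelope values, not published cloud prices.

```python
# Back-of-the-envelope cost arithmetic using only the figures quoted above.
# The derived hourly rates are implied values, not quoted cloud prices.

GPUS_PER_NODE = 8  # one 8XH100 node


def implied_node_rate(total_cost_usd: float, hours: float) -> float:
    """Hourly node price implied by a total budget and a wall-clock duration."""
    return total_cost_usd / hours


def hours_for_budget(budget_usd: float, node_rate_usd_per_hour: float) -> float:
    """Wall-clock hours a budget buys at a given hourly node price."""
    return budget_usd / node_rate_usd_per_hour


node_rate = implied_node_rate(total_cost_usd=100.0, hours=4.0)
gpu_rate = node_rate / GPUS_PER_NODE

print(f"implied node rate: ${node_rate:.2f}/hour")
print(f"implied GPU rate:  ${gpu_rate:.3f}/GPU-hour")
print(f"$1000 at that rate: {hours_for_budget(1000.0, node_rate):.0f} hours")
```

Because the four-hour figure is approximate, the implied rate is approximate too; actual pricing depends on the provider.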

📈 Scalable Performance Tiers

While the default speedrun offers a foundational model (~4e19 FLOPs) for proof-of-concept, nanochat is structured to scale. Developers can move to larger, more capable models (such as the ~$300 tier, which slightly outperforms GPT-2 on the CORE score) simply by adjusting a few parameters, such as model depth, and managing memory via the device batch size.
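One common way a smaller device batch size trades memory for time is gradient accumulation: each GPU processes fewer sequences per pass, and more passes are accumulated before every optimizer step so the effective batch stays the same. The sketch below shows only that arithmetic. The function name and every number in it are illustrative assumptions, not values read from the nanochat source.

```python
def grad_accum_steps(total_batch_tokens: int, device_batch_size: int,
                     seq_len: int, num_gpus: int) -> int:
    """Forward/backward passes to accumulate per optimizer step.

    Keeps the effective batch (in tokens) fixed while the per-GPU
    batch size shrinks to fit in memory.
    """
    tokens_per_pass = device_batch_size * seq_len * num_gpus
    if total_batch_tokens % tokens_per_pass != 0:
        raise ValueError("total batch must be a multiple of tokens per pass")
    return total_batch_tokens // tokens_per_pass


# Assumed, illustrative settings (hypothetical values).
TOTAL_BATCH_TOKENS = 524_288
SEQ_LEN = 2048
NUM_GPUS = 8

for device_batch_size in (32, 16, 8):
    steps = grad_accum_steps(TOTAL_BATCH_TOKENS, device_batch_size, SEQ_LEN, NUM_GPUS)
    print(f"device_batch_size={device_batch_size:>2} -> {steps} accumulation step(s)")
```

Halving the device batch size roughly halves activation memory per pass and doubles the number of passes per step, so a deeper model can fit at the cost of longer wall-clock time.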

Use Cases

nanochat is designed to turn theoretical knowledge into tangible, executable models, making it ideal for both education and rapid development.

1. Mastering the LLM Stack

If you are a developer looking to move beyond black-box API calls and genuinely understand the mechanics of large language models, nanochat is your laboratory. By running the full pipeline script, you witness the entire training process—from raw data to a conversational web UI—allowing for deep, hands-on mastery of the underlying principles and algorithms.

2. Rapid Prototyping and Iteration

Use the minimal codebase to quickly test modifications to model architecture, optimization techniques, or novel fine-tuning methods. Since the entire stack is contained and easily runnable on a single node, you can drastically reduce the typical development cycle time required for validating new ideas in the LLM space.

3. Building Highly Specialized Micro-Models

Leverage nanochat’s efficiency to create custom, smaller models tailored for specific domain tasks or internal applications. The ability to run the full training and fine-tuning pipeline cost-effectively enables organizations to develop proprietary micro-models without investing in extensive, dedicated machine learning infrastructure.

Unique Advantages

nanochat’s value proposition lies in its unique balance of completeness, accessibility, and cost efficiency, providing a clear alternative to overly complex LLM frameworks.

Unmatched Cognitive Accessibility: The intentional focus on a minimal codebase means there are no complex, labyrinthine configuration objects or "if-then-else monsters." The code is designed to be a "strong baseline" that is readable, cohesive, and maximally forkable, drastically reducing the time needed for a developer to achieve full system comprehension.

Verifiable Metrics and Evaluation: Every run produces a comprehensive report.md file containing detailed evaluation metrics (e.g., ARC-Challenge, MMLU, GSM8K scores). This commitment to measurable outcomes ensures you can scientifically track the performance and impact of your modifications and training runs.

Designed for Learning by Doing: As the capstone project for LLM101n, nanochat is fundamentally engineered as an educational tool. It takes the complexity of modern LLM development and distills it into an achievable, runnable project, ensuring that the learning curve is steep in knowledge gained, not in setup frustration.
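The run-to-run tracking that report.md makes possible can be sketched as a simple comparison of metric values. This is a minimal sketch: the helper name is hypothetical, the scores are made-up placeholders rather than real nanochat results, and the metrics are entered by hand because the layout of report.md is not assumed here.

```python
def metric_deltas(baseline: dict[str, float], candidate: dict[str, float]) -> dict[str, float]:
    """Per-metric change (candidate minus baseline) for metrics present in both runs."""
    shared = sorted(baseline.keys() & candidate.keys())
    return {name: round(candidate[name] - baseline[name], 4) for name in shared}


# Placeholder scores copied by hand from two hypothetical reports.
baseline = {"ARC-Challenge": 0.28, "MMLU": 0.31, "GSM8K": 0.03}
candidate = {"ARC-Challenge": 0.30, "MMLU": 0.31, "GSM8K": 0.05}

for name, delta in metric_deltas(baseline, candidate).items():
    print(f"{name:<14} {delta:+.4f}")
```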

Conclusion

nanochat offers a direct, powerful pathway for developers to achieve true understanding and implementation mastery of large language models. By running the entire stack on an accessible budget, you gain deep insight into every phase of LLM creation.

Explore the nanochat repository today and start building the best ChatGPT clone that your budget can buy.


nanochat Alternatives


LM Studio is an easy to use desktop app for experimenting with local and open-source Large Language Models (LLMs). The LM Studio cross platform desktop app allows you to download and run any ggml-compatible model from Hugging Face, and provides a simple yet powerful model configuration and inferencing UI. The app leverages your GPU when possible.
