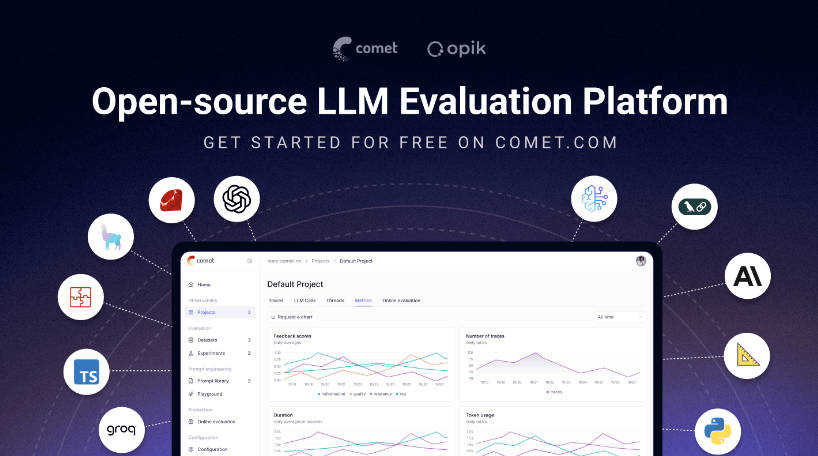

What is Opik?

Building reliable, production-ready LLM applications poses distinct challenges, from unpredictable model behavior to maintaining performance across the whole system. Opik is an open-source platform that gives you the clarity and control needed to build, evaluate, and monitor retrieval-augmented generation (RAG) systems, agentic workflows, and other LLM-powered applications. It provides the tools you need to move from prototype to production with confidence.

Key Features

Opik equips you with a powerful, integrated toolkit to manage the entire lifecycle of your LLM applications.

🔍 Comprehensive Tracing and Observability: Gain complete visibility into every step of your application's logic. Opik captures detailed traces of LLM calls, agent activity, and tool usage, providing the full context you need to debug issues quickly. With native integrations for a wide range of frameworks and providers, including LangChain, LlamaIndex, AutoGen, and OpenAI, you can add observability to your stack in minutes.
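
To make "trace" concrete, here is a minimal, self-contained sketch of what a tracing layer records: each decorated call becomes a span holding its inputs, output, duration, and parent, so the nested steps of a RAG pipeline form a tree. The `traced` decorator, the in-memory `SPANS` list, and the pipeline functions are hypothetical stand-ins, not Opik's API. In Opik itself, the Python SDK's `@track` decorator (`from opik import track`) plays this role and sends spans to the Opik backend instead of a local list.

```python
import functools
import itertools
import time
from contextvars import ContextVar

# Hypothetical in-memory span store; a real tracing backend would receive these instead.
SPANS = []
_ids = itertools.count()
# Id of the span currently executing, so nested calls can record their parent.
_current_span = ContextVar("_current_span", default=None)


def traced(fn):
    """Record each call as a span: name, inputs, output, parent id, and duration."""

    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        span = {
            "id": next(_ids),
            "name": fn.__name__,
            "parent": _current_span.get(),
            "inputs": {"args": args, "kwargs": kwargs},
        }
        SPANS.append(span)
        token = _current_span.set(span["id"])
        start = time.perf_counter()
        try:
            span["output"] = fn(*args, **kwargs)
            return span["output"]
        finally:
            # Runs even if fn raises, so failed steps still get a duration.
            span["duration_s"] = time.perf_counter() - start
            _current_span.reset(token)

    return wrapper


@traced
def retrieve(query):
    # Stand-in for a vector-store lookup.
    return ["Opik is an open-source platform for LLM applications."]


@traced
def generate(query, context):
    # Stand-in for an LLM call that uses the retrieved context.
    return f"Answer to {query!r} based on {len(context)} document(s)."


@traced
def rag_pipeline(query):
    docs = retrieve(query)
    return generate(query, docs)


rag_pipeline("What is Opik?")
for span in SPANS:
    print(span["id"], span["name"], "parent:", span["parent"])
```

Running it prints one line per span, with `retrieve` and `generate` both recording `rag_pipeline` (span 0) as their parent. Holding the active span in a `ContextVar` keeps each thread's or asyncio task's active span separate.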

🧑‍⚖️ Automated LLM-as-a-Judge Evaluation: Go beyond simple pass/fail tests. With LLM-as-a-judge metrics, a separate model grades your application's outputs, which lets Opik automate evaluation of complex qualities such as hallucination, answer relevance, and context precision. You can systematically verify that your application meets a high quality bar before it ever reaches users.
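
The core loop of an LLM-as-a-judge metric is short: build a grading prompt from the context and the answer, send it to a judge model, and parse a structured score. The sketch below shows that loop under stated assumptions: the prompt template, the JSON reply format, and `fake_judge` (a stub standing in for a real model call) are all hypothetical. Opik's built-in metrics, such as `Hallucination` in `opik.evaluation.metrics`, use their own prompts and scoring conventions.

```python
import json
from typing import Callable

# Hypothetical judge prompt; production metrics use carefully tuned templates.
JUDGE_TEMPLATE = """You are grading an answer for hallucination.
Context: {context}
Answer: {answer}
Reply with JSON: {{"score": <0 to 1, 1 = fully hallucinated>, "reason": "<short explanation>"}}"""


def hallucination_score(context: str, answer: str, judge: Callable[[str], str]) -> dict:
    """Ask a judge model to score an answer and parse its JSON verdict."""
    prompt = JUDGE_TEMPLATE.format(context=context, answer=answer)
    raw = judge(prompt)
    try:
        verdict = json.loads(raw)
        score = float(verdict["score"])
    except (json.JSONDecodeError, KeyError, TypeError, ValueError):
        # Treat unparseable judge output as a failed evaluation, never as a pass.
        return {"score": None, "reason": f"unparseable judge output: {raw!r}"}
    # Clamp in case the judge strays outside the requested range.
    return {"score": min(max(score, 0.0), 1.0), "reason": verdict.get("reason", "")}


def fake_judge(prompt: str) -> str:
    # Stub standing in for a real LLM call: flags any answer that mentions "Mars".
    flagged = "Mars" in prompt.split("Answer:", 1)[1]
    return json.dumps({
        "score": 1.0 if flagged else 0.0,
        "reason": "claim not supported by context" if flagged else "supported by context",
    })


ctx = "Opik is an open-source platform for evaluating LLM applications."
print(hallucination_score(ctx, "Opik is open source.", fake_judge))
print(hallucination_score(ctx, "Opik was built on Mars.", fake_judge))
```

Passing the judge in as a callable keeps the metric independent of any particular model client, which also makes it easy to test with a stub as done here.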

📊 Production-Ready Monitoring and Dashboards: Deploy and manage your applications at scale with confidence. Opik is built to handle high-volume production workloads (over 40 million traces per day), so you can log and analyze performance data in real time. Use the production dashboards to monitor feedback scores, token usage, and latency, and set up online evaluation rules to catch issues as they happen.
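
Dashboard views like these reduce to aggregates over logged traces. This hedged sketch computes nearest-rank latency percentiles, total token usage, and the mean feedback score for one window of traces, plus a simple threshold rule in the spirit of an online evaluation rule. The `TraceRecord` fields, the sample numbers, and the 0.7 threshold are hypothetical, and this is not how Opik computes its metrics internally.

```python
import math
from dataclasses import dataclass
from statistics import mean


@dataclass
class TraceRecord:
    # Hypothetical fields mirroring what a production dashboard plots.
    latency_ms: float
    prompt_tokens: int
    completion_tokens: int
    feedback_score: float  # e.g. an online LLM-as-a-judge score in [0, 1]


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(traces, min_feedback=0.7):
    latencies = [t.latency_ms for t in traces]
    summary = {
        "p50_latency_ms": percentile(latencies, 50),
        "p95_latency_ms": percentile(latencies, 95),
        "total_tokens": sum(t.prompt_tokens + t.completion_tokens for t in traces),
        "mean_feedback": mean(t.feedback_score for t in traces),
    }
    # A simple rule: flag the window if average quality drops below the threshold.
    summary["alert"] = summary["mean_feedback"] < min_feedback
    return summary


window = [
    TraceRecord(820, 450, 120, 0.9),
    TraceRecord(1430, 610, 200, 0.4),
    TraceRecord(640, 380, 90, 0.8),
    TraceRecord(2950, 1200, 410, 0.5),
]
print(summarize(window))
```

With only four traces, the nearest-rank p95 is simply the slowest trace; percentiles become meaningful over larger windows, which is why production dashboards aggregate continuously.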

⚙️ Integrated Optimization and Guardrails: Opik helps you not only identify issues but also actively improve your systems. Use the Opik Agent Optimizer to systematically enhance your prompts and agents for better performance and lower costs. Implement Opik Guardrails to enforce responsible AI practices and ensure your application operates safely and predictably.

How Opik Solves Your Problems

Pinpoint Failures in Your RAG System: When your RAG chatbot gives an irrelevant answer, you need to know why. Opik's detailed tracing lets you inspect the entire sequence, from the initial query and the retrieved documents to the final prompt and the LLM's generation. This lets you quickly determine whether the problem lies in retrieval, in how the context was assembled into the prompt, or in the model itself.

Automate Pre-Deployment Quality Checks: Before pushing a new version of your application, you need to be sure it hasn't regressed. Opik's PyTest integration lets you run its evaluation suite inside your CI/CD pipeline: a benchmark dataset is run against your application, and LLM-as-a-judge metrics score the outputs for critical issues such as hallucination or toxicity, stopping bad versions before they are deployed.
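
A pre-deployment gate of this kind can be sketched as an ordinary pytest test: run every benchmark item through the application, score each answer, and fail if any score crosses a threshold. Everything here is hypothetical: `my_app`, the two-item `BENCHMARK`, the 0.2 threshold, and `judge_hallucination`, a crude string-matching stand-in for a real LLM-as-a-judge call. Opik's PyTest integration is deliberately not used, so the sketch runs without an Opik installation.

```python
# test_quality_gate.py: run with `pytest` in CI. All names are hypothetical.

# A tiny benchmark dataset; in practice this would be versioned alongside the app.
BENCHMARK = [
    {"question": "Is Opik open source?", "context": "Opik is open source."},
    {"question": "Can Opik be self-hosted?",
     "context": "Opik can be self-hosted with Docker or Kubernetes."},
]

MAX_HALLUCINATION = 0.2  # hypothetical per-item threshold


def my_app(question: str, context: str) -> str:
    # Stand-in for the application under test; here it simply echoes its context.
    return context


def judge_hallucination(context: str, answer: str) -> float:
    # Stand-in for a judge model: fraction of answer sentences not found verbatim in the context.
    sentences = [s.strip() for s in answer.split(".") if s.strip()]
    unsupported = [s for s in sentences if s not in context]
    return len(unsupported) / len(sentences) if sentences else 0.0


def test_benchmark_has_no_hallucinations():
    failures = []
    for item in BENCHMARK:
        answer = my_app(item["question"], item["context"])
        score = judge_hallucination(item["context"], answer)
        if score > MAX_HALLUCINATION:
            failures.append((item["question"], score))
    # A non-empty list fails the test, which fails the CI job and blocks the deployment.
    assert not failures, f"hallucination threshold exceeded: {failures}"
```

Collecting all failures before asserting, instead of asserting per item, means a single CI run reports every regressed benchmark item at once.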

Optimize Cost and Performance in Production: You notice that your live agent's operational costs are climbing. With Opik's dashboards, you can track token consumption and latency over time, drill down into specific traces to find inefficient prompts, and use the Opik Agent Optimizer to refine them, directly improving performance and reducing your operational expenses.
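
Drilling into cost usually means converting token counts into spend and ranking where that spend goes. This sketch attributes cost to each prompt and sorts prompts by total spend, surfacing the strongest candidates for optimization. The per-1K-token prices, the `prompt_name` field, and the sample traces are hypothetical; substitute your provider's actual rates and your own trace data.

```python
from collections import defaultdict

# Hypothetical per-1K-token prices in USD; use your provider's real rates.
PRICE_PER_1K = {"prompt": 0.0005, "completion": 0.0015}

# Hypothetical trace records, each tagged with the prompt that produced it.
traces = [
    {"prompt_name": "summarize_v1", "prompt_tokens": 3200, "completion_tokens": 250},
    {"prompt_name": "classify_v2", "prompt_tokens": 400, "completion_tokens": 10},
    {"prompt_name": "summarize_v1", "prompt_tokens": 2900, "completion_tokens": 300},
]


def trace_cost(trace):
    """Cost of one trace: tokens times price, with prices quoted per 1,000 tokens."""
    return (trace["prompt_tokens"] * PRICE_PER_1K["prompt"]
            + trace["completion_tokens"] * PRICE_PER_1K["completion"]) / 1000


def cost_by_prompt(traces):
    totals = defaultdict(float)
    for t in traces:
        totals[t["prompt_name"]] += trace_cost(t)
    # Most expensive prompts first: these are the first candidates to optimize.
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


for name, cost in cost_by_prompt(traces):
    print(f"{name}: ${cost:.6f}")
```

In this sample, long prompt inputs dominate spend for `summarize_v1`, which suggests trimming its context before touching the cheaper classifier.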

Why Choose Opik?

Open-Source and Deployment Flexibility: Opik gives you total control over your data and infrastructure. You can self-host the platform on your own systems using Docker or Kubernetes for maximum privacy and customization, or use the managed Comet.com cloud service to get started instantly with zero setup.

A Unified, End-to-End Platform: Opik isn't just a single-purpose tool; it’s a cohesive platform that supports you through the entire development lifecycle. From initial debugging with tracing, to formal testing with evaluations, and finally to production monitoring and optimization, Opik provides a single, consistent workflow.

Conclusion

Opik provides the specialized tools you need to master the complexity of building with large language models. By delivering deep observability, robust evaluation, and powerful optimization features, it empowers you to create LLM applications that are more reliable, efficient, and secure.