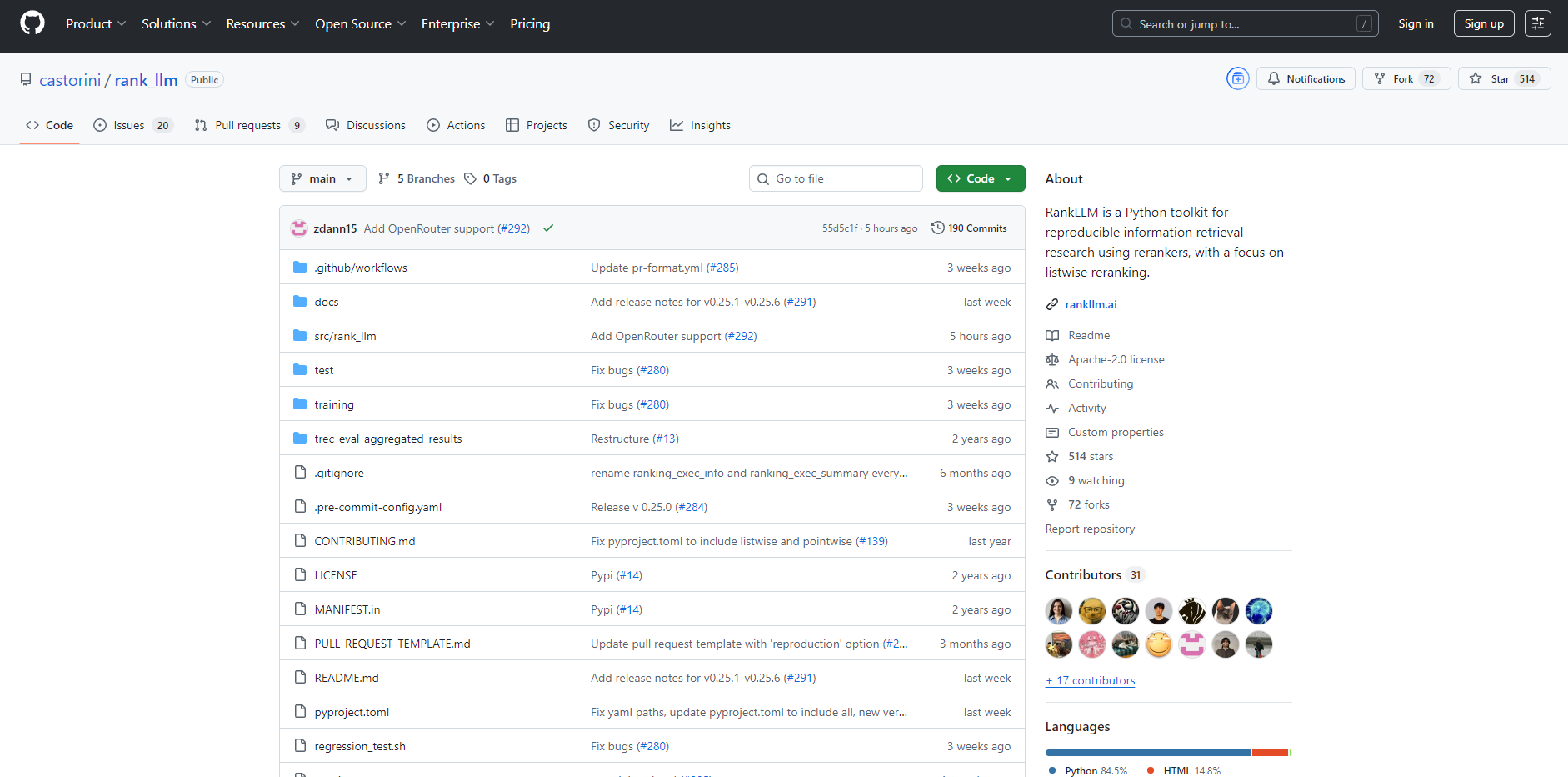

What is RankLLM?

RankLLM is a Python toolkit for information retrieval (IR) researchers and engineers who need a reproducible framework for reranking with large language models (LLMs). It provides a unified environment for experimenting with, evaluating, and deploying a range of reranking models. RankLLM covers the full research pipeline, from retrieval and reranking to evaluation, with a primary focus on efficient listwise rerankers.

Key Features

📚 Versatile Reranker Support: RankLLM provides a unified interface for multiple reranking paradigms: pointwise (MonoT5), pairwise (DuoT5), and listwise. You can experiment with open-source LLMs such as RankZephyr and RankVicuna, or integrate with proprietary APIs through RankGPT and RankGemini, all within the same toolkit.

⚡ High-Performance Inference: RankLLM directly supports the vLLM, SGLang, and TensorRT-LLM inference backends. Faster inference shortens reranking runs and makes large-scale experiments with larger models and datasets practical.

🔁 End-to-End Reproducibility: Wrapper scripts and "two-click reproduction" (2CR) commands let you replicate results from key research papers on standard datasets such as MS MARCO. This gives you a trusted baseline, so you can focus on new ideas rather than reimplementation.

🗂️ Extensive and Curated Model Zoo: Access a collection of pre-trained reranking models directly through the toolkit. The Model Zoo includes the full LiT5 suite for efficient single-pass reranking, various MonoT5 models for pointwise scoring, and listwise models such as RankZephyr. Starting from this curated selection saves setup time and means you are working with rerankers that have already been evaluated.
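
To make the three paradigms named under Versatile Reranker Support concrete, here is a minimal Python sketch. It does not use RankLLM's API: the `overlap` scorer and every function name below are hypothetical stand-ins for a real model, chosen only to show what each paradigm asks the model to look at per call.

```python
from typing import List


def overlap(query: str, doc: str) -> int:
    """Toy relevance signal (an assumption, not a real model):
    the number of distinct query terms that also appear in the document."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


def pointwise_rerank(query: str, docs: List[str]) -> List[str]:
    # Pointwise: each document is scored on its own, then sorted by score.
    return sorted(docs, key=lambda d: overlap(query, d), reverse=True)


def pairwise_rerank(query: str, docs: List[str]) -> List[str]:
    # Pairwise: every ordered pair is compared; a document earns one "win"
    # each time it is judged more relevant than another document.
    wins = [0] * len(docs)
    for i in range(len(docs)):
        for j in range(len(docs)):
            if i != j and overlap(query, docs[i]) > overlap(query, docs[j]):
                wins[i] += 1
    order = sorted(range(len(docs)), key=lambda i: wins[i], reverse=True)
    return [docs[i] for i in order]


def toy_listwise_model(query: str, docs: List[str]) -> List[int]:
    # Stand-in for an LLM call that reads the whole list and returns
    # a permutation of document positions, best first.
    return sorted(range(len(docs)), key=lambda i: overlap(query, docs[i]), reverse=True)


def listwise_rerank(query: str, docs: List[str]) -> List[str]:
    # Listwise: a single call sees all candidates and emits the full ordering.
    permutation = toy_listwise_model(query, docs)
    return [docs[i] for i in permutation]
```

With a real model the three approaches can disagree, and they differ sharply in cost: pointwise needs one call per document, pairwise needs one per ordered pair, and listwise needs one per list (or per window of the list).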

Use Cases

Comparing Reranking Architectures: As a researcher, you can use RankLLM to conduct a fair and controlled comparison between a pointwise model like MonoT5, a pairwise model like DuoT5, and a listwise model like RankZephyr on your specific dataset. The toolkit handles the boilerplate, so you can focus on analyzing the results and drawing clear conclusions.

Establishing a Strong Research Baseline: If you're a PhD student or a research scientist, you can use the provided 2CR commands to reproduce published results on benchmarks such as DL19 and DL20 (the TREC 2019 and 2020 Deep Learning Tracks). This gives you a verified, high-performing baseline against which to measure your own contributions.

Building an Efficient Reranking Service: For engineering teams, RankLLM's integration with SGLang or TensorRT-LLM provides a clear path to building a high-throughput reranking component for a production search system. You can leverage a model like RankZephyr to improve search relevance while maintaining low latency.
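
When comparing rerankers or checking a reproduced baseline, the comparison comes down to an effectiveness metric. The sketch below computes nDCG@10 with linear gains, a metric commonly reported on DL19 and DL20; the choice of metric and the names `dcg_at_k`, `ndcg_at_k`, and `qrels` are assumptions for illustration, not something this page or RankLLM's own evaluation code specifies.

```python
import math
from typing import Dict, List


def dcg_at_k(gains: List[float], k: int = 10) -> float:
    """Discounted cumulative gain over the top-k gains (linear gain, log2 discount)."""
    return sum(g / math.log2(rank + 2) for rank, g in enumerate(gains[:k]))


def ndcg_at_k(ranked_doc_ids: List[str], qrels: Dict[str, int], k: int = 10) -> float:
    """nDCG@k for one query.

    ranked_doc_ids: document ids in the order the reranker returned them.
    qrels: graded relevance judgments for this query (unjudged docs count as 0).
    """
    gains = [qrels.get(doc_id, 0) for doc_id in ranked_doc_ids]
    ideal_gains = sorted(qrels.values(), reverse=True)
    ideal_dcg = dcg_at_k(ideal_gains, k)
    if ideal_dcg == 0:
        return 0.0  # no relevant documents judged for this query
    return dcg_at_k(gains, k) / ideal_dcg
```

Averaging `ndcg_at_k` over all queries in a test collection gives the single number you would compare between, say, a first-stage ranking and its reranked version.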

Why Choose RankLLM?

This toolkit is designed to provide a distinct advantage over building custom scripts or using more generalized frameworks. Here’s how:

Focus on Reproducibility: Reproducing IR research is often a major hurdle. RankLLM is built around two-click reproduction (2CR) commands, so you can validate and build on prior work with confidence.

Specialization in Listwise Reranking: Unlike toolkits that stop at simpler pointwise methods, RankLLM provides first-class support for listwise reranking with open-source LLMs. A listwise model evaluates an entire list of candidate documents at once, so each document is ranked in the context of the others rather than scored in isolation.

Engineered for Performance: Instead of relying on standard inference pipelines, RankLLM integrates directly with high-performance backends like vLLM, SGLang, and TensorRT-LLM. This provides an out-of-the-box solution for accelerating inference that you would otherwise have to engineer yourself.

Broad Model Diversity: The toolkit offers a comprehensive, well-organized model zoo for reranking. It consolidates leading models from the Castorini group and beyond, giving you a single point of access to pointwise, pairwise, and listwise models for diverse domains, including biomedical search.
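
One practical detail of listwise reranking is turning the model's text output back into an ordering. The sketch below assumes the model answers with a string of bracketed, 1-based identifiers such as `[2] > [3] > [1]`, a format modeled on RankGPT-style prompts; the function names and the cleanup rules are illustrative assumptions, not RankLLM's actual implementation.

```python
import re
from typing import List


def parse_permutation(response: str, num_docs: int) -> List[int]:
    """Convert a listwise response like "[2] > [3] > [1]" into 0-based positions.

    Generated text can be noisy, so this sketch drops out-of-range and
    repeated identifiers, then appends any documents the model omitted
    in their original order.
    """
    order: List[int] = []
    for token in re.findall(r"\[(\d+)\]", response):
        idx = int(token) - 1  # identifiers in the response are 1-based
        if 0 <= idx < num_docs and idx not in order:
            order.append(idx)
    missing = [i for i in range(num_docs) if i not in order]
    return order + missing


def apply_permutation(docs: List[str], response: str) -> List[str]:
    """Reorder docs according to the parsed model response."""
    return [docs[i] for i in parse_permutation(response, len(docs))]
```

For example, `parse_permutation("[2] > [3] > [2] > [7]", 4)` returns `[1, 2, 0, 3]`: the duplicate `[2]` and the out-of-range `[7]` are ignored, and the two documents the model never mentioned keep their original relative order at the end.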

Conclusion

RankLLM is a toolkit for researchers and practitioners who want to apply LLMs to state-of-the-art information retrieval. By providing a reproducible, efficient, and versatile framework, it lowers the barriers to experimenting with, evaluating, and deploying rerankers that improve search relevance.

Explore the documentation and start building your next-generation ranking pipeline with confidence and clarity.
