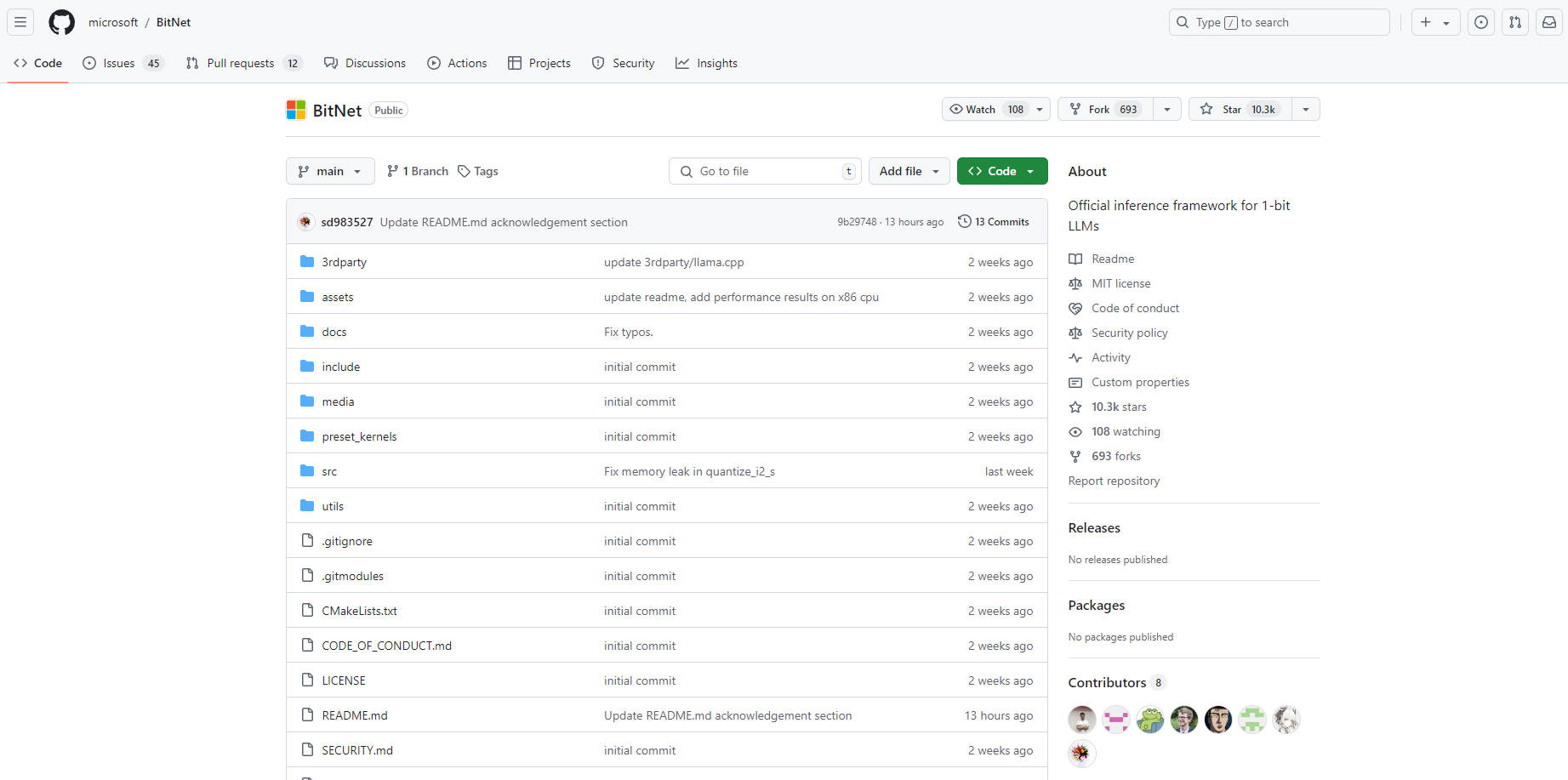

What is bitnet.cpp?

bitnet.cpp is an inference framework for 1-bit Large Language Models (LLMs) such as BitNet b1.58, whose weights are ternary: each is -1, 0, or +1, which works out to about 1.58 bits per weight. It delivers speed and efficiency gains on CPUs (NPU and GPU support is planned), so that even large LLMs can run locally on a single CPU at speeds comparable to human reading (about 5-7 tokens per second). By optimizing for 1-bit models, bitnet.cpp broadens access to capable models while reducing hardware requirements and energy consumption.
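To make the "1.58-bit" idea concrete, here is a minimal sketch of ternary weight quantization in the style of the absmean scheme described for BitNet b1.58. It is an illustration only and is not taken from the bitnet.cpp code base; the function name `absmean_ternary` and the sample weights are made up for this example.

```python
import math

def absmean_ternary(weights, eps=1e-8):
    """Quantize a list of floats to {-1, 0, +1} plus one shared scale.

    Hypothetical helper for illustration; not part of bitnet.cpp.
    """
    # The scale is the mean absolute value of the weights ("absmean").
    scale = sum(abs(w) for w in weights) / len(weights)
    quantized = []
    for w in weights:
        # Round the scaled weight to the nearest integer, then clip to [-1, 1].
        q = round(w / (scale + eps))
        quantized.append(max(-1, min(1, q)))
    return quantized, scale

weights = [0.9, -0.05, 0.4, -1.2, 0.02]  # made-up sample values
q, scale = absmean_ternary(weights)
print(q)  # → [1, 0, 1, -1, 0]

# Three possible values carry log2(3) bits of information per weight.
bits_per_weight = math.log2(3)
print(round(bits_per_weight, 2))  # → 1.58
```

Because every weight is -1, 0, or +1, a matrix multiplication reduces largely to additions and subtractions, which is the property that makes this kind of model a good fit for CPUs.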

Key Features:

CPU-Centric Inference: 💻 Enables fast and efficient execution of 1-bit LLMs directly on CPUs, eliminating the dependence on specialized hardware like GPUs.

Enhanced Speed: 🚀 Reported speedups range from 1.37x to 5.07x on ARM CPUs and from 2.37x to 6.17x on x86 CPUs compared to standard LLM inference methods, with larger models seeing the greater gains.

Energy Efficiency: 🌱 Reduces energy consumption by 55.4% to 70.0% on ARM CPUs and by 71.9% to 82.2% on x86 CPUs, which lowers the power cost of AI deployments.

Local Execution of Large Models: 🖥️ Lets users run large-scale 1-bit LLMs, up to the 100B-parameter scale, on a single standard CPU without powerful servers or cloud services.
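A rough back-of-the-envelope calculation shows why a 100B-parameter model becomes plausible on one machine. The sketch below counts weight storage only, assuming an idealized 1.58 bits per weight; it ignores activations, the KV cache, and any packing overhead, and the on-disk format bitnet.cpp actually uses may spend a different number of bits per weight. The helper `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

params = 100e9  # a 100B-parameter model

fp16_gb = weight_memory_gb(params, 16)       # conventional 16-bit weights
ternary_gb = weight_memory_gb(params, 1.58)  # idealized ternary weights

print(f"FP16: {fp16_gb:.1f} GB, 1.58-bit: {ternary_gb:.2f} GB")
print(f"Reduction: {fp16_gb / ternary_gb:.1f}x")
```

Under these assumptions the weights shrink from about 200 GB to about 20 GB, roughly a tenfold reduction.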

Use Cases:

Running personalized AI assistants on individual laptops or mobile devices without relying on cloud connectivity.

Deploying offline language translation tools in regions with limited internet access.

Empowering researchers and developers to experiment with large LLMs on readily available hardware.

Conclusion:

bitnet.cpp is a significant step toward making LLMs more accessible and sustainable. Efficient CPU-based inference makes it practical to deploy capable models on a wider range of devices and reduces reliance on expensive infrastructure. If 1-bit models continue to mature, bitnet.cpp could change how LLMs are deployed and enable a new wave of local AI applications.